What is mobile app A/B testing?

Did you know that over 200 billion apps were downloaded worldwide in 2019? However, the user retention rate for mobile apps stood at 32%. If these numbers are anything to go by, they clearly indicate that while it may be tough to get your app downloaded, it’s tougher to engage and retain users and make them return to your app.

Therefore, the deciding factor in this scenario could well be your app’s user experience.

However, consistently delivering in-app experiences that delight can be tricky. How do you ensure that each and every element, page, and feature of your mobile app adds up to an enticing experience and moves the user down the conversion funnel? One way to get there is by harnessing the power of experimentation, and this is where mobile app A/B testing steps in.

Mobile app A/B testing is a form of A/B testing wherein different user segments are presented with different variations of an in-app experience to determine which one prompts the desired action or has a better impact on key app metrics. By figuring out what works for your mobile app and what doesn’t, you can systematically optimize it for your desired metrics and uncover growth opportunities for your business that were hiding in plain sight.

What differentiates mobile app A/B testing from the standard A/B testing done on the web is that it is deployed on the server-side instead of the client-side. Client-side testing delivers variations that are applied in the user’s browser (the client). Server-side testing, by contrast, lets you run tests and make modifications directly on the app server, which then delivers the chosen variation to the user’s device. The two approaches differ in scope and serve different business needs.

Server-side testing is more robust and built for fairly complex tests. Implementation directly on the server allows you to experiment deep within your stack and run more sophisticated tests that go beyond cosmetic or UI-based changes. Mobile app A/B testing encompasses all capabilities of server-side testing and allows marketers and product managers to optimize their end-to-end user experiences.

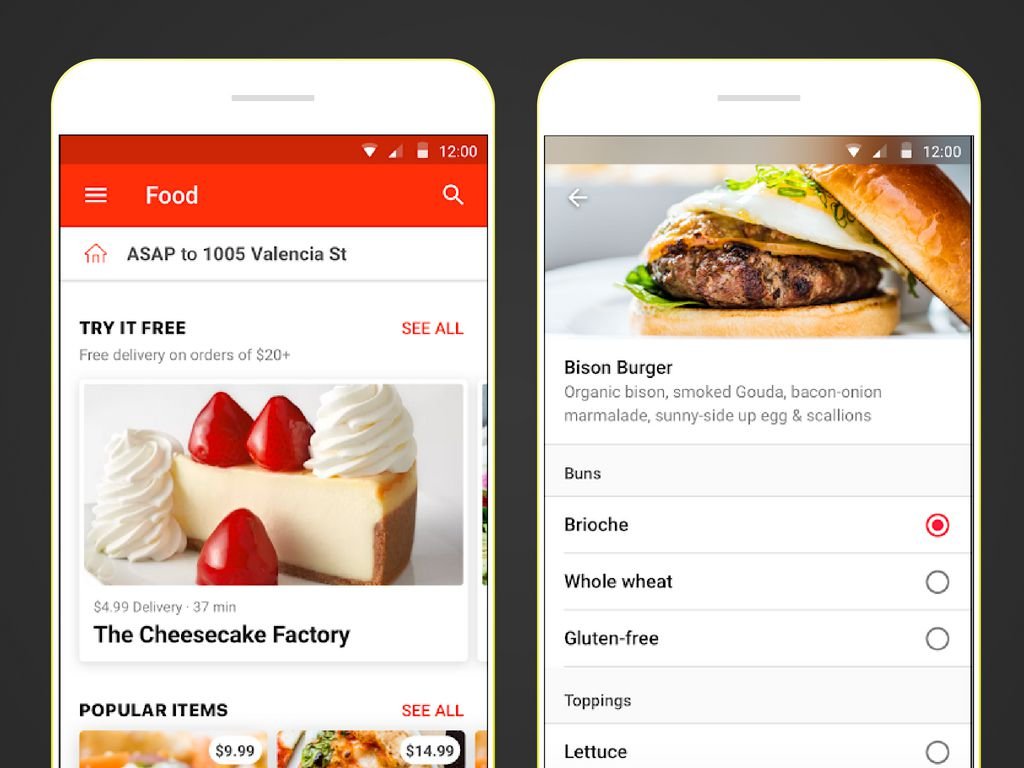

From experimenting with multiple combinations of in-app experiences to testing those high-impact app features pre and post-launch, mobile app A/B testing enables you to do it all comprehensively and optimize your app for enhanced user engagement, retention, and conversions. VWO offers a holistic experimentation suite for all your mobile app A/B testing needs.

Why should you use mobile app A/B testing?

At the surface level, mobile app A/B testing is essential to optimize mobile apps for the same reasons client-side A/B testing is essential for optimizing web and mobile sites. However, when it comes to mobile apps, A/B testing offers a multitude of additional benefits that can be leveraged to solve pain points in mobile users’ journeys and significantly improve app-specific metrics. You should consider investing in mobile app A/B testing to:

Optimize in-app experiences and boost core metrics

To ensure you consistently provide delightful user experiences, you need to thoroughly test deep within your app so you can measure which iteration leads to an improvement in your key metrics such as monthly active users, retention rate, drop-offs, and so on. This way, you’ll only rely on data-driven decisions while creating your mobile app development roadmap. So, whether it’s a simple front-end change such as the placement of your CTA button or a complete overhaul of your search algorithm, testing will ensure you have answers on how to make your in-app experience more comfortable. Here’s how you can leverage mobile app A/B testing to consistently optimize and outdo your app experiences:

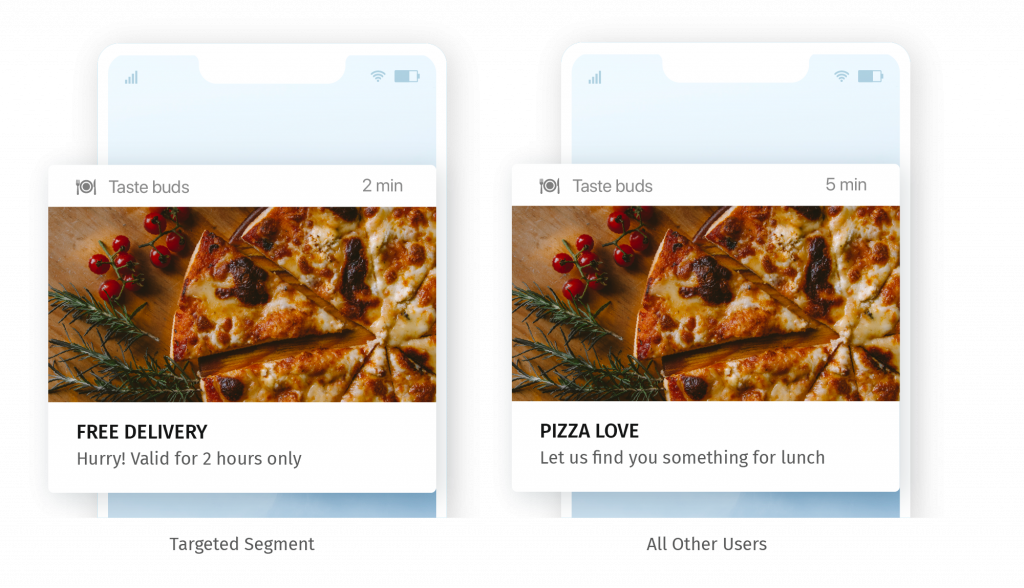

• Segment your user base and deliver targeted experiences

With mobile app A/B testing, you can deploy deep segmentation to bucket and categorize your users and target each group differently. The segmentation can be based on geographic, behavioral, demographic, or technographic factors, to name a few. You can then target each user segment separately (based on its characteristics) with a specific variation of your app experience and even control the percentage of a user segment that will be presented with a particular variation of an A/B test. This will help you understand your audience closely, experiment at a granular level, and deliver better, more relevant experiences. The results will also help you figure out your ideal group of power users, which can further shape your future optimization and marketing efforts.

For example, a food delivery app finds out that, on average, a significant percentage of their daily orders are received from 20-35 year-olds during lunch. To target this segment, they start offering deals such as free delivery to this particular customer segment, specifically during lunch hours, to see if this causes an uplift in daily sales.
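One way to picture the segment targeting and traffic control described above is a deterministic bucketing function. The sketch below is a minimal illustration, not the method of any particular testing tool: the `bucket` and `assign_variation` helpers, the campaign dictionary layout, and the `lunch_deal` campaign are all hypothetical names chosen for this example.

```python
import hashlib


def bucket(user_id: str, campaign_key: str) -> float:
    """Map a user to a stable point in [0, 100) for one campaign."""
    digest = hashlib.sha256(f"{campaign_key}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 0x100000000 * 100


def assign_variation(user: dict, campaign: dict):
    """Return a variation name, or None if the user is not part of the test."""
    # 1. Segment check: only users matching the targeting rule are eligible.
    if not campaign["segment"](user):
        return None
    # 2. Traffic control: only a percentage of the segment enters the test.
    point = bucket(user["id"], campaign["key"])
    if point >= campaign["traffic_percent"]:
        return None
    # 3. Split the included users across variations by weight (weights sum to 100).
    scaled = point / campaign["traffic_percent"] * 100
    cumulative = 0.0
    for name, weight in campaign["variations"]:
        cumulative += weight
        if scaled < cumulative:
            return name
    return campaign["variations"][-1][0]


# Hypothetical campaign: half of the 20-35 age segment enters the test.
lunch_deal = {
    "key": "lunch_deal",
    "segment": lambda user: 20 <= user["age"] <= 35,
    "traffic_percent": 50,
    "variations": [("control", 50), ("free_delivery", 50)],
}

print(assign_variation({"id": "user-1", "age": 27}, lunch_deal))
```

Because the bucket is derived from a hash of the user ID rather than a random draw, the same user sees the same variation on every launch, which keeps the experience consistent for the duration of the test.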

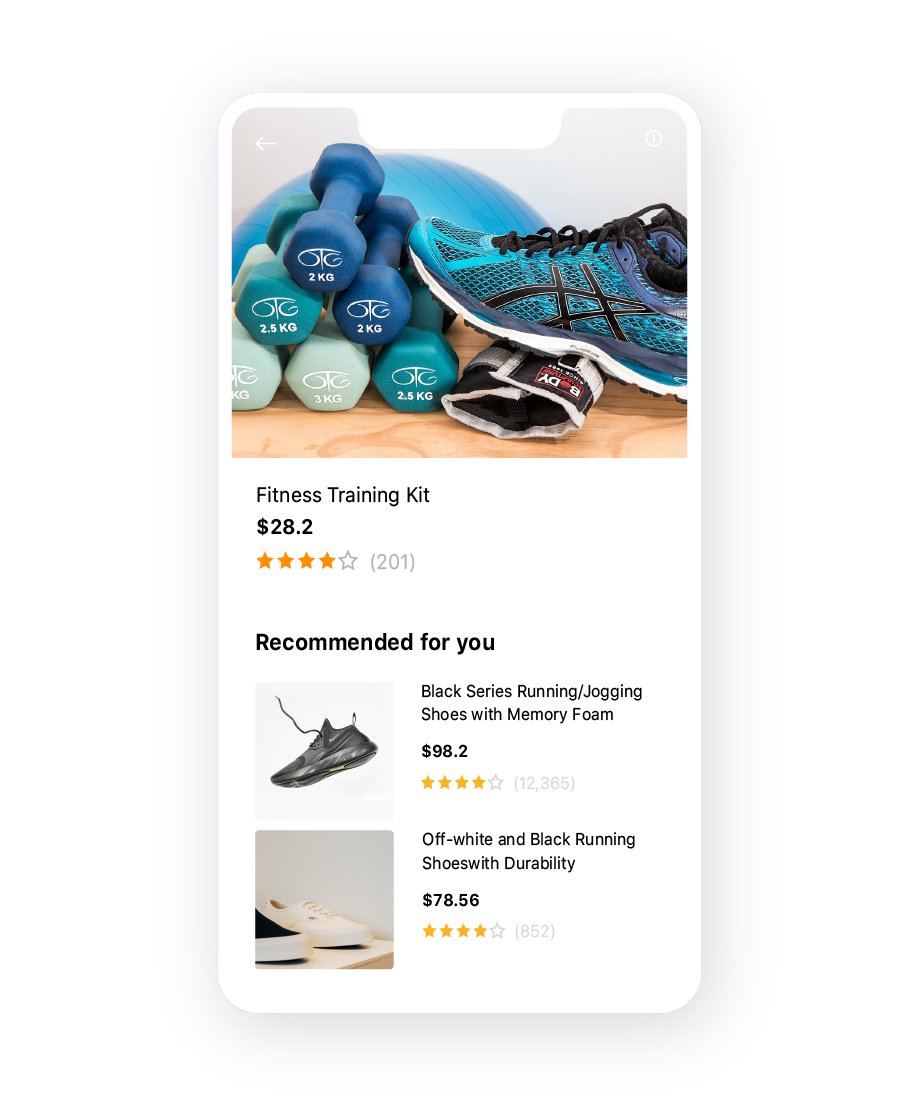

• Deliver personalized in-app experiences

In today’s age of always-connected mobile users, your app optimization journey isn’t complete until your app experiences are personalized. With mobile app A/B testing, you can go beyond serving different variations of your app pages to different users and tailor each variation based on the interests of your users and/or their past interactions with your brand.

For example, an eCommerce app repeatedly showcases a variety of products from the activewear catalog to a user who recently bought a pair of running shoes.

Experiment with features in production

Apart from running standard UI-based tests, mobile app A/B testing allows you to go a step ahead to even test your app features, and thereby validate your product ideas. Needless to say, you might want to test out multiple variations of a crucial feature or even multiple features before finally launching one to ensure it positively impacts your app’s performance. You might even want to test a single feature with different user segments to understand its stickiness with the right audience. Mobile app A/B testing empowers app developers and product managers to do it all by allowing them to test and optimize their features (and align them for various user segments) while in production to ensure that the final feature launch is a successful one.

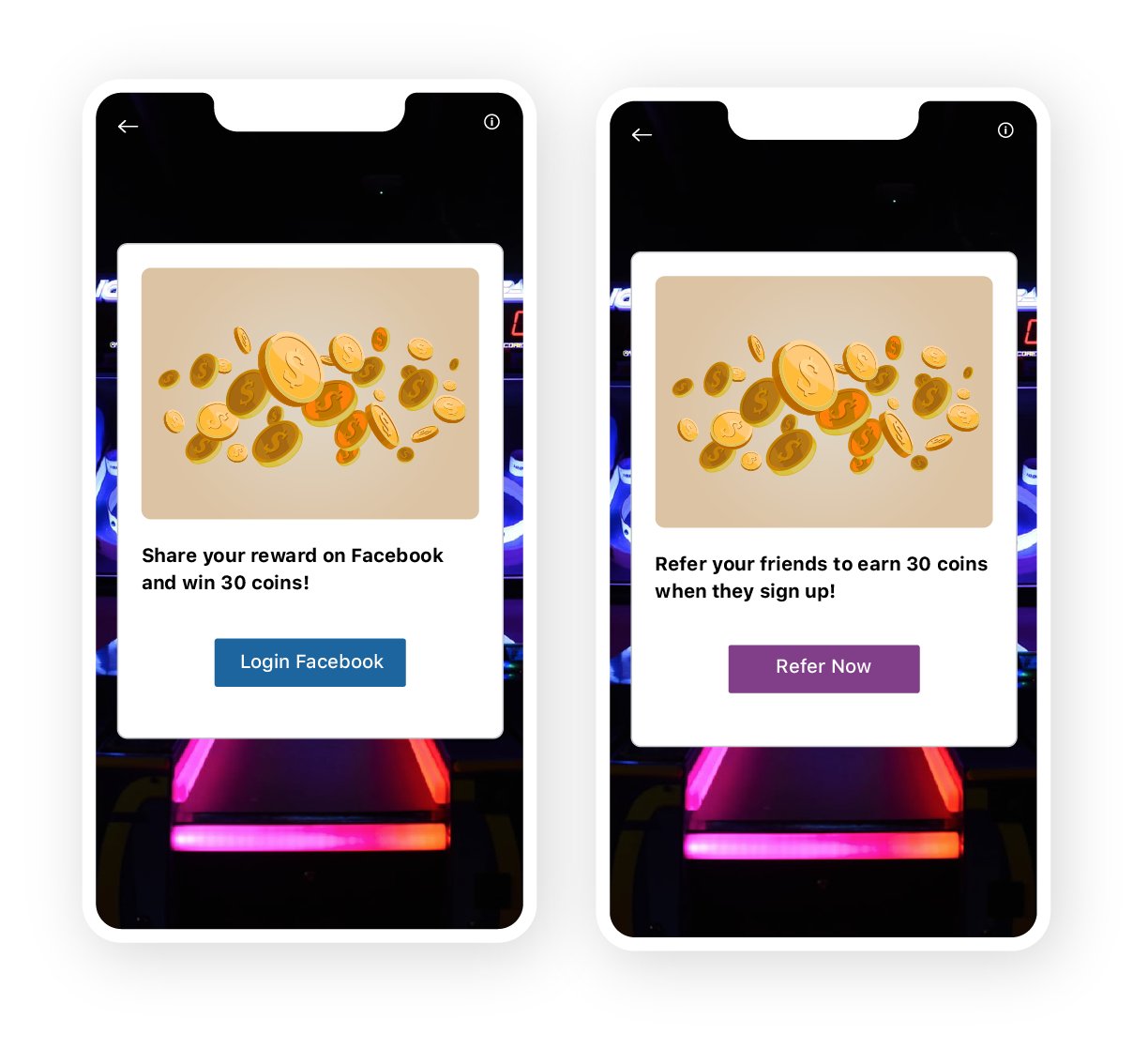

For example, a gaming app could test whether offering a social media sharing option or referral rewards program leads to an increase in sign-ups.

Minimize risk and roll out features confidently in stages

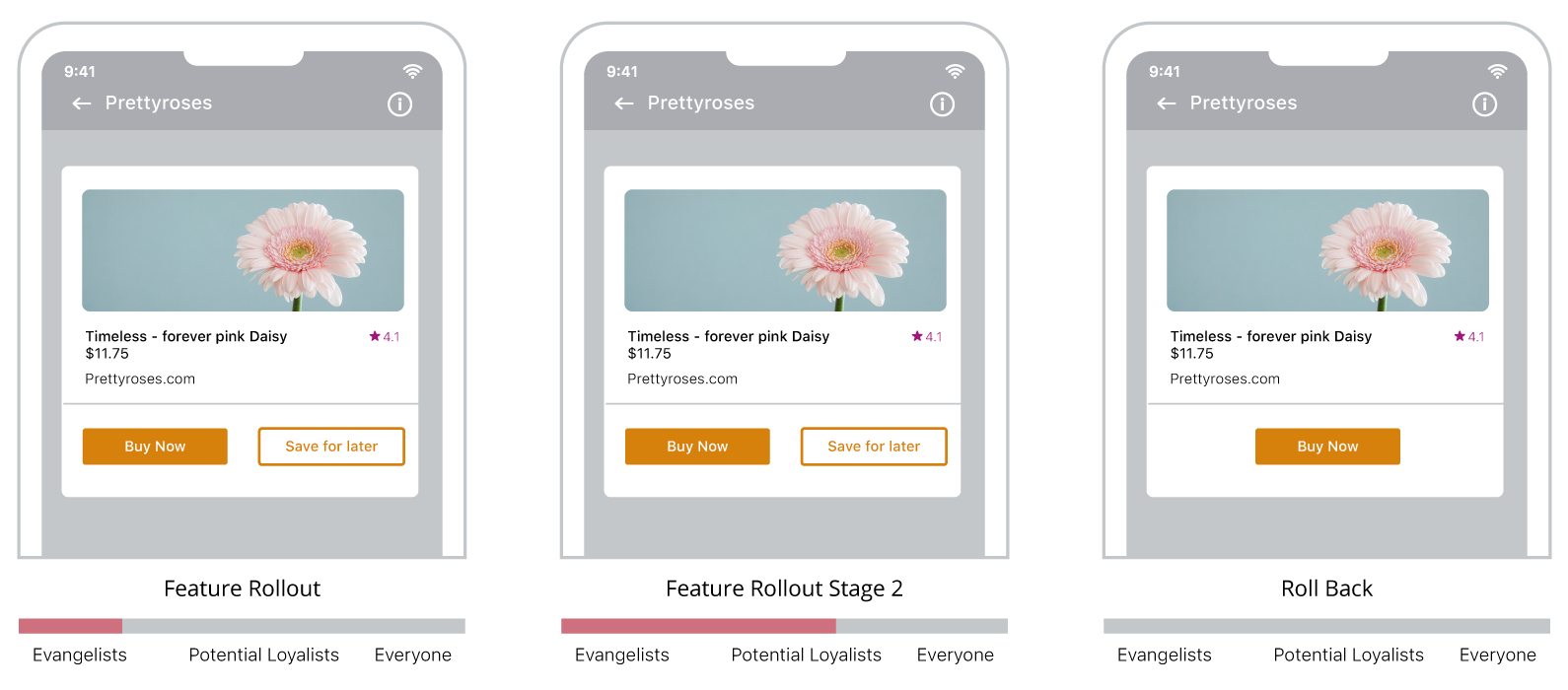

Experimenting with features before rolling them out is not all; you can further minimize the risk associated with launching a critical feature by releasing it in stages to your audience rather than making it live universally in one go. This is possible using a technique called feature flagging, wherein you can alter system behavior by controlling a feature (switching it ON/OFF, hiding it for a particular user segment, disabling it for another, etc.) without having to change and redeploy the code.

You can segment your user base, create your own groups of evangelists, potential loyalists, new customers, and so on, and systematically deliver features in progressive stages as and when they are ready to go live. Furthermore, you can roll back a feature almost instantly in case you notice a bug or scope for improvement and then relaunch the corrected or improved version on the go.

For example, if an eCommerce app decides to launch a feature that allows users to save products for later, they can test it by first rolling it out only to their loyal customers, analyze its performance, and then release it for the next segment, and so on. And if the results are negative or unsatisfactory, they can always roll it back and relaunch the improved version without any hassles.
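The staged rollout in this example can be sketched with a simple flag object. This is an illustrative, in-memory sketch under stated assumptions: `FeatureFlag`, its methods, and the segment names are hypothetical, and a production system would typically load flag state from a remote configuration source so it can change without an app release.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class FeatureFlag:
    """Hypothetical in-memory flag used only to illustrate staged rollouts."""
    key: str
    enabled: bool = False
    segments: Set[str] = field(default_factory=set)

    def roll_out_to(self, segment: str) -> None:
        # Add one more segment to the audience and make sure the flag is on.
        self.enabled = True
        self.segments.add(segment)

    def roll_back(self) -> None:
        # Switch the feature off for everyone; the feature's own code is untouched.
        self.enabled = False

    def is_enabled_for(self, segment: str) -> bool:
        return self.enabled and segment in self.segments


# Stage 1: only loyal customers see the "save for later" feature.
flag = FeatureFlag("save_for_later")
flag.roll_out_to("loyal_customers")

if flag.is_enabled_for("loyal_customers"):
    print("show the save-for-later button")
```

Later stages call `roll_out_to` again with the next segment, and `roll_back` acts as the kill switch if results are unsatisfactory.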

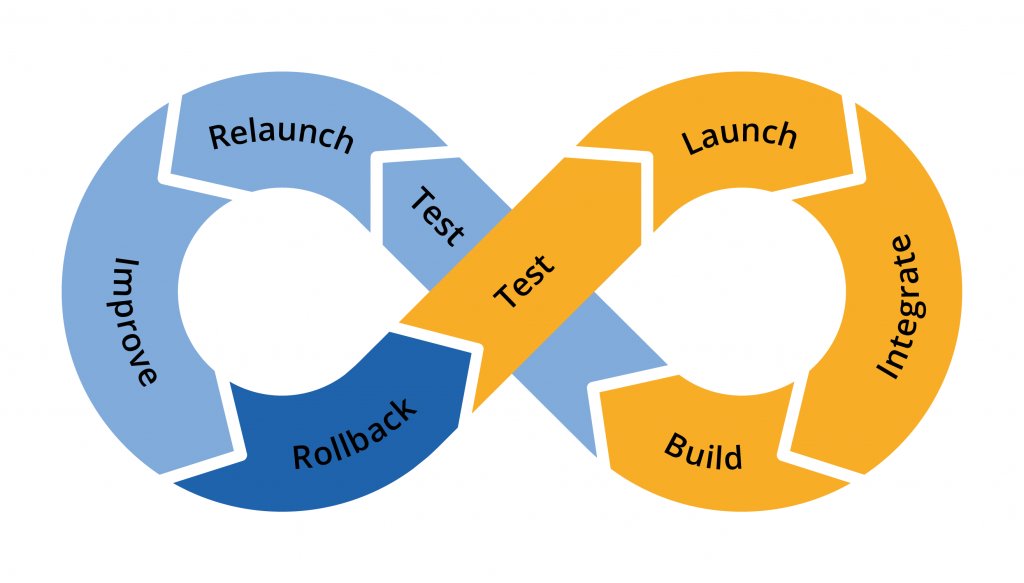

Implement and manage continuous integration & continuous delivery (CI/CD)

Continuous Integration & Continuous Delivery is the practice of software development and delivery aimed at maximizing the output of software teams. Under this practice, features are released by periodically integrating code changes into the main software branch, testing them as early and as frequently as possible, incorporating feedback and fixing bugs, and relaunching them. This continuous cycle of automating the product delivery pipeline ensures that features are made available to users quickly, efficiently, and sustainably. CI/CD is an indispensable practice in the Agile software development and project management lifecycle.

Mobile app A/B testing has a huge part to play in facilitating CI/CD and empowering developers to deliver better features, faster. By methodically and regularly testing your mobile app features, you can ensure developers receive feedback systematically that they can quickly incorporate to launch impactful features efficiently. This can help you shorten your release cycles and smoothly build and confidently launch user-centric, market-ready products.

Deliver optimal user experiences mapped with user journeys

Understanding the nuances of an ideal app user experience is crucial for marketers, product managers, and UX designers alike. There is no ‘one-size-fits-all’ solution. This is fundamentally because no two user journeys are alike, and users have varying expectations depending on their stage in those journeys. You just need to figure out how to meet those expectations.

Mobile app A/B testing can help you streamline this process and deliver not just enjoyable, but also relevant experiences for each and every user journey. By testing exhaustively within your stack at each user touchpoint, you can validate what works for which user journey and optimize for them accordingly. This will help you build a well-defined product roadmap that aligns with your user journeys and deliver experiences that engage, delight, as well as convert.

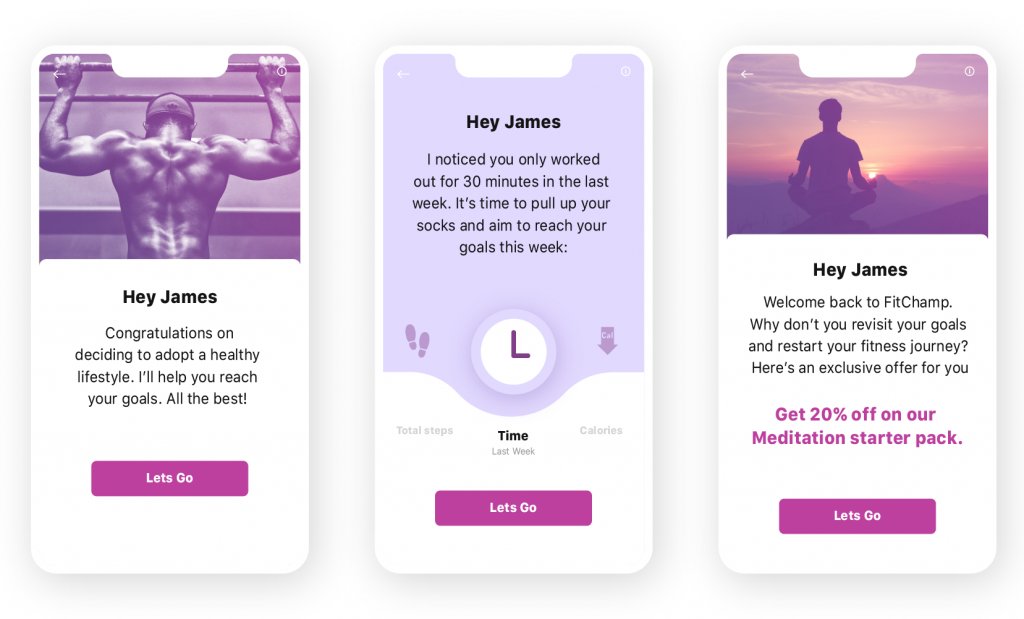

For example, for a health and fitness app, consider three types of users: someone who’s just signed up, a loyal user, and one who has been inactive for a while. The app experience for each of these users would need to be drastically different and aligned with their stage in your pre-defined user journeys. The home screen should ideally greet each of them with a different message, as depicted in the accompanying illustration.

What can you A/B test in your mobile app?

In-app experiences

• Messaging

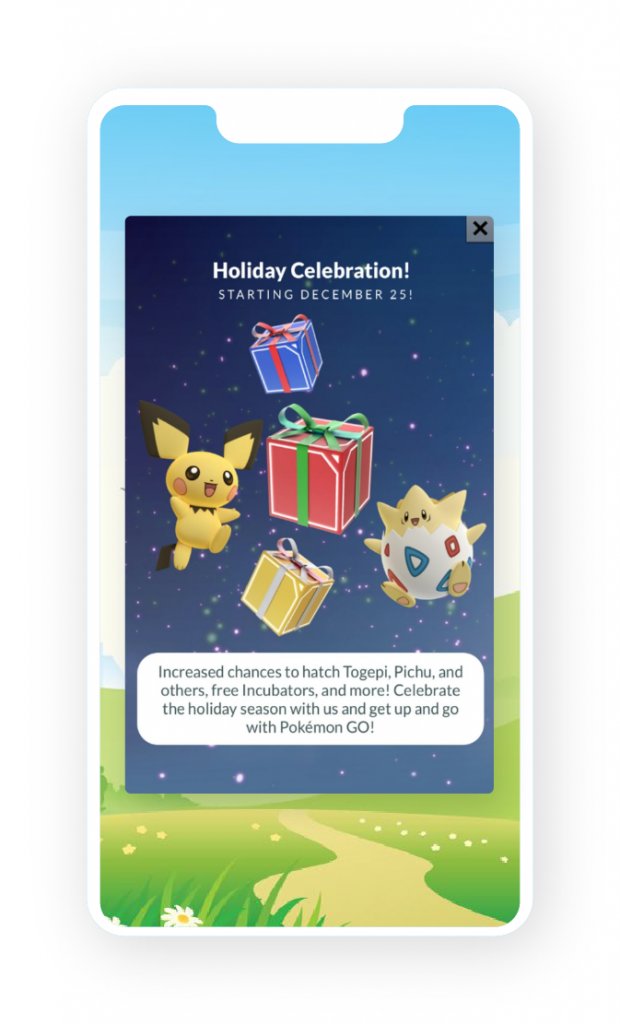

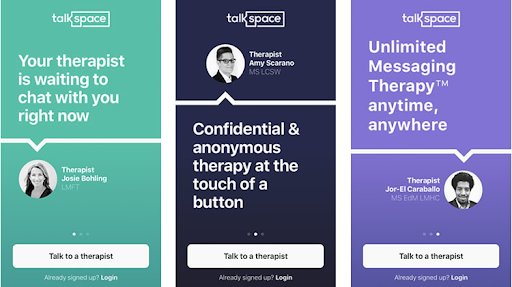

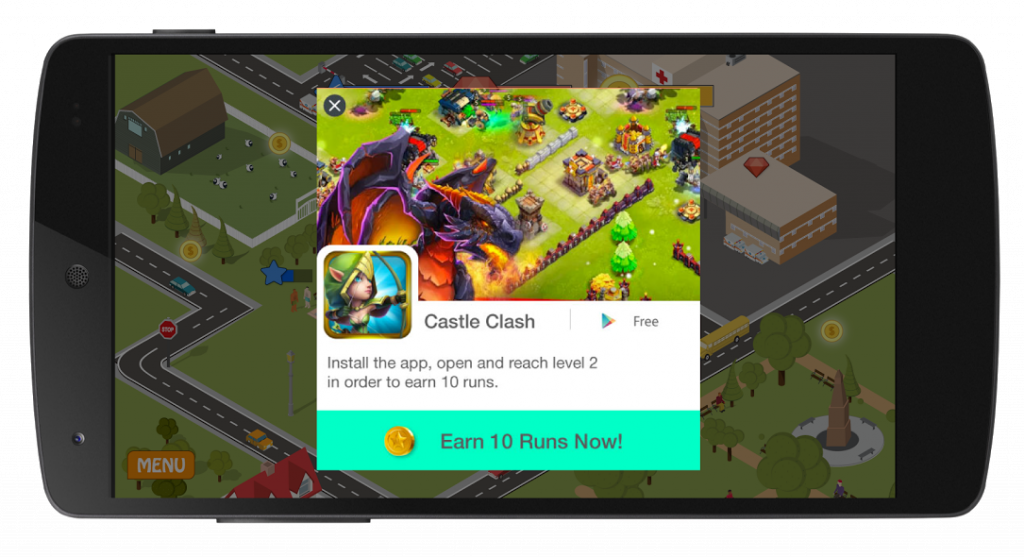

Undoubtedly one of the most critical aspects of a mobile app that can make or break user experience is the in-app messaging. There are countless tests you can run with your in-app prompts or pop-ups to ensure the right message is conveyed in the right way at the right time, while persuading the user to take the desired action.

For example, a gaming app could test rewards pop-ups for reaching specific milestones to figure out the ideal timing for such pop-ups that has a positive impact on engagement metrics. Similarly, a publishing app could test the ideal copy for a subscription prompt that leads to an increase in subscriptions.

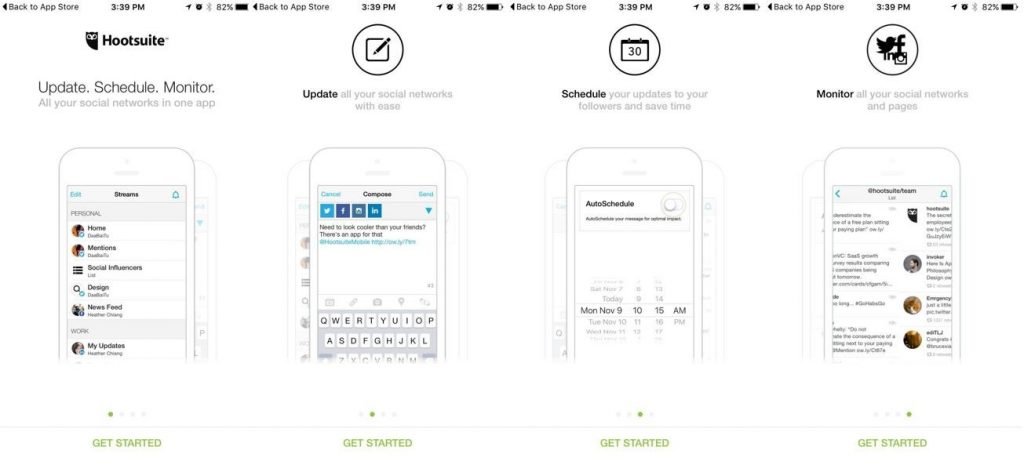

• Layout

The layout is the first impression your app leaves on the user, and you want it to be as engrossing as possible. While there are multiple aspects and steps to perfecting your app layout, you’d definitely want it to be distinguishable, aesthetically pleasing, and extremely functional; one that promises a smooth and seamless user experience.

Rigorously testing your app layout can be immensely rewarding as it will help you understand how your users prefer to be interacted with, the ideal app structure that allows them to comfortably get to what they were looking for, and the right experience that delights them into becoming loyalists.

• UI copy

Be it the CTA button text, captions, product descriptions, or headlines, your app copy plays a pivotal role in the overall user experience. Nailing the copy can get your users hooked, while failing to convey the right message in the right tone and the right number of words can negatively impact your core metrics. To find the ideal mix that resonates with your audience and encourages them to continue actively using your app, you need to consistently test your app copy.

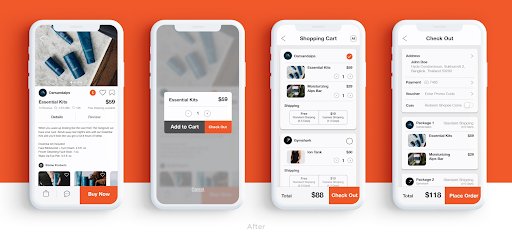

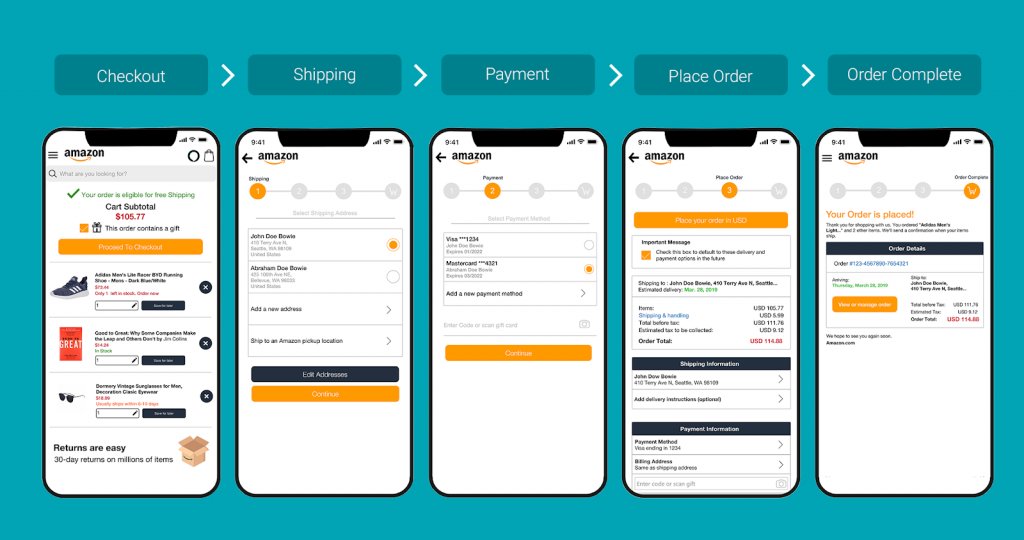

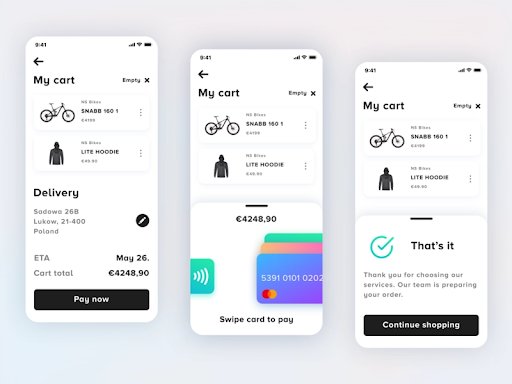

User flow

User flow refers to the sequence of actions a typical user is likely to perform to complete the desired task on your app. For instance, the checkout flow for an eCommerce app would look something like this:

Whether it is creating a playlist on a music streaming app or applying for a loan through a mobile banking app, perfecting the flow of a mobile app is extremely critical to reduce drop-offs. Experimenting with your user flow can help you figure out how to reduce friction points to ensure that users are able to seamlessly perform the desired actions and navigate smoothly through the app.

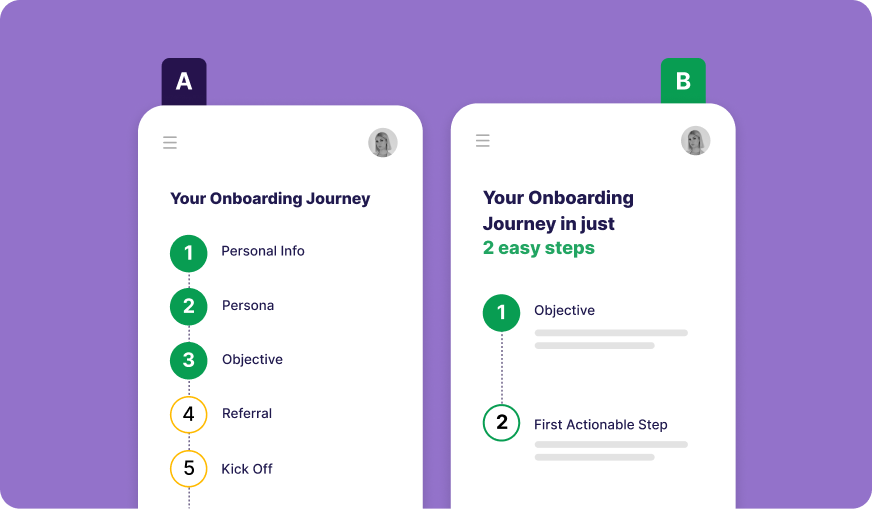

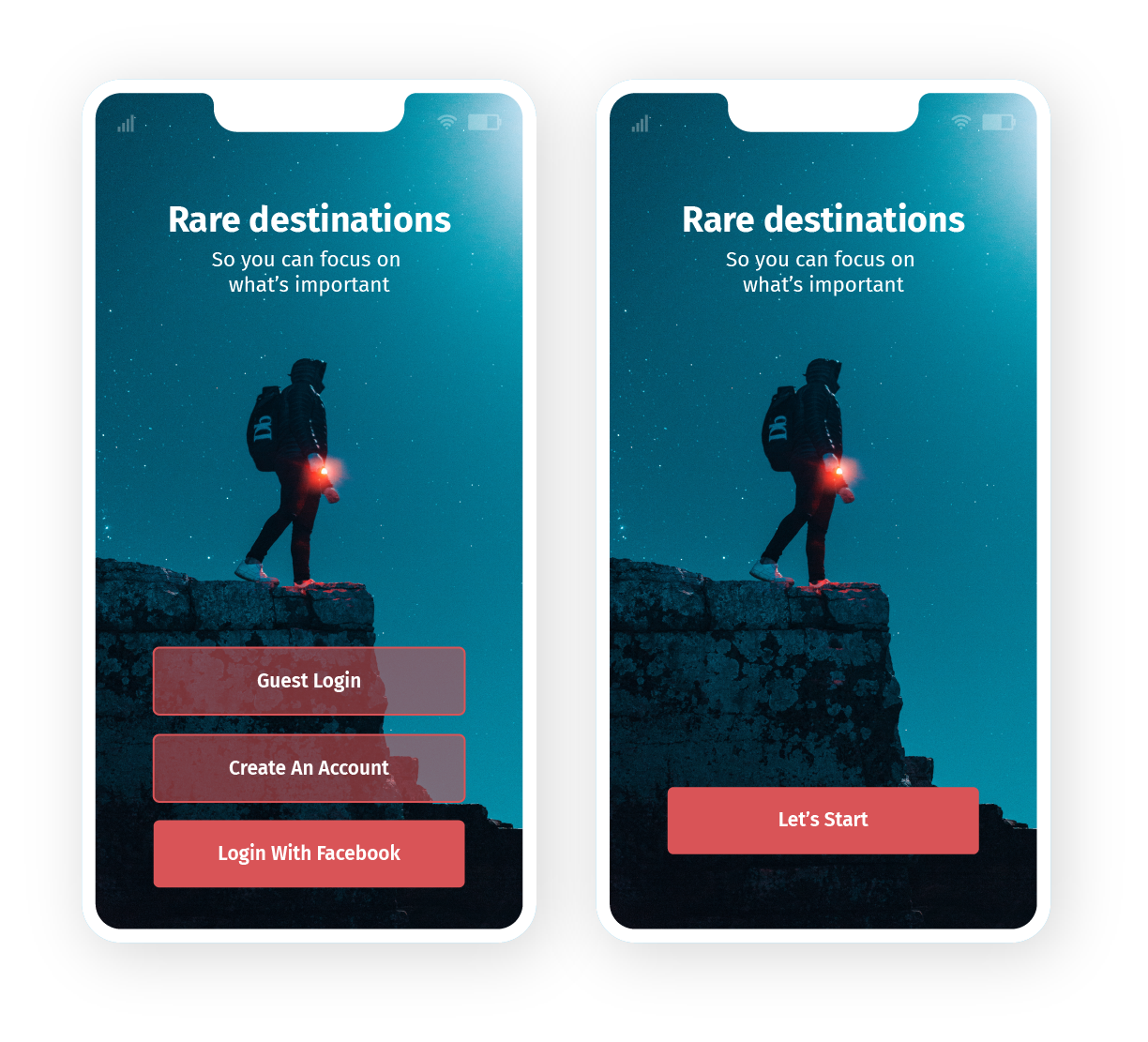

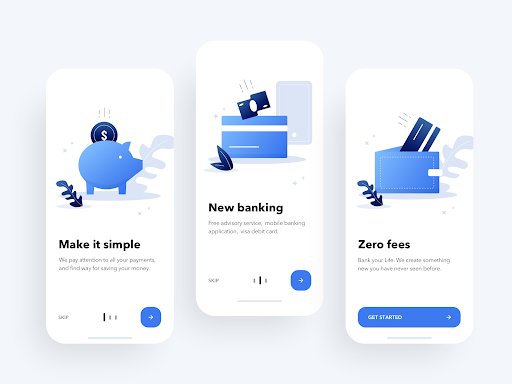

• Onboarding flow

The very first touchpoint for any user on a mobile app is the onboarding process. To ensure the user sticks around to experience what lies ahead, you need to make the onboarding process as comfortable for them as possible. The process could have multiple steps, including the sign-up, app tutorial, and app permissions. You can test the number of sign-up options to provide, whether to offer guest login or not, the ideal time to ask for app permissions, whether or not the tutorial must be mandatory, etc. to determine just the right number, combination, and flow of steps that provide a smooth onboarding experience and ultimately lead to the highest onboarding completion rate.

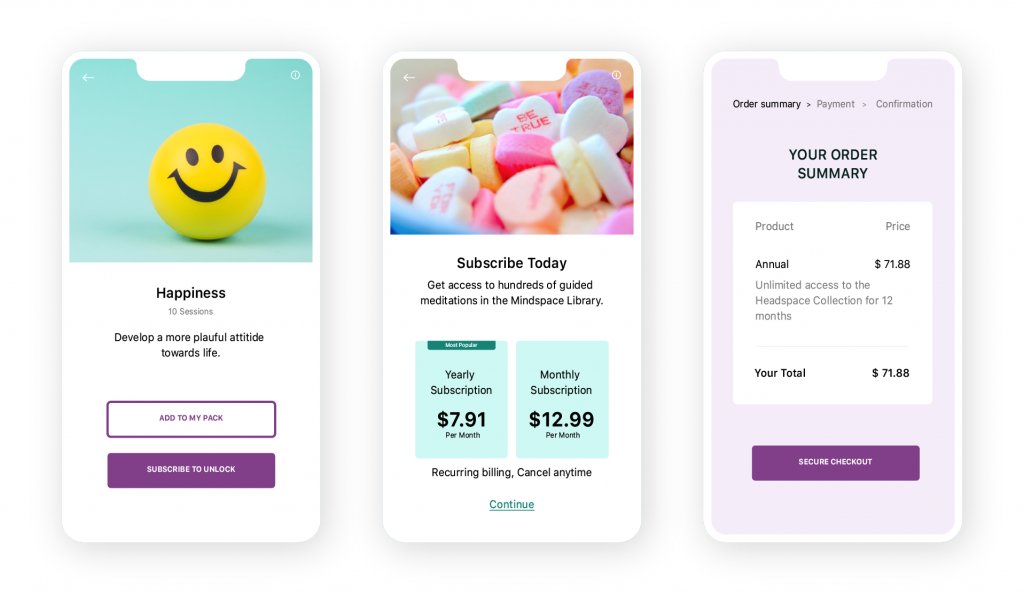

• Subscription flow

If your app has a subscription-based model, carefully constructing the subscription flow can positively impact your revenue. A smooth flow will ensure your users are able to quickly opt for a plan of their preference and make the payment without any friction. With mobile app A/B testing, you can experiment with the number of plans/pricing of various plans, the timing of the subscription pop-up, number of steps in the subscription flow, layout of the billing page, etc. – all to ensure the subscription process is as organic as it can be and positively impacts the users’ purchase decision.

• Checkout flow

Did you know that of all channels, mobile devices have the highest cart abandonment rate, at 85.65%? To ensure your users don’t abandon their carts during checkout, you need to continually optimize the app checkout process to do away with all probable friction points in the entire flow. And for that, you will have to test frequently to figure out whether you need to go for a single-page checkout or a multi-step one, the ideal placement and prominence of coupons on the page, whether registration should be mandatory, etc.

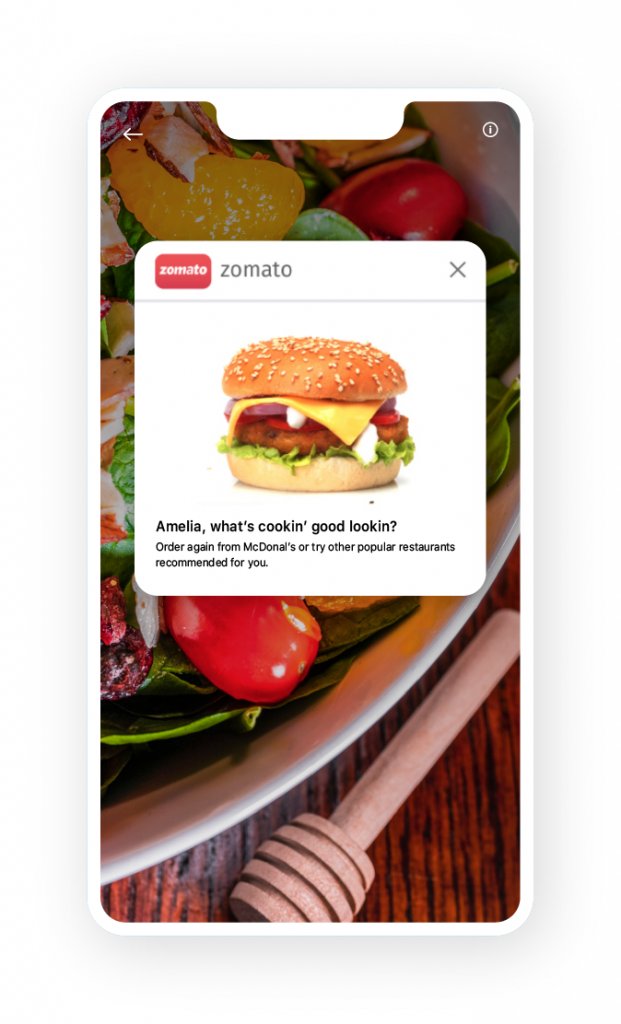

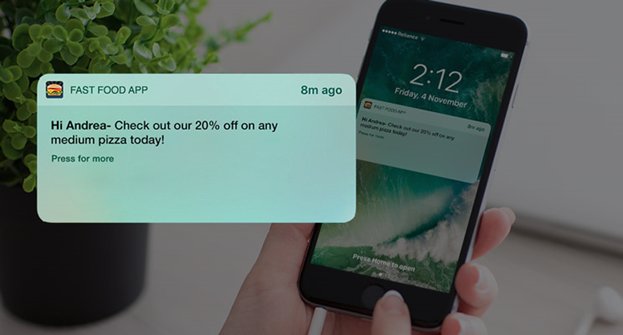

Push notifications

Re-engaging with dormant users using push notifications is a strategy most marketers swear by. However, getting the desired engagement via this channel can be tricky as there are a number of factors that determine the success or failure of your push notification campaigns. Some of the indispensable ones include the copy, timing, and frequency of your push notifications. Test your push notifications to figure out the ideal time to send them for the best response, the number of notifications you should send in a day to boost engagement without annoying the user, the right copy that increases the CTR, and so on. You could even go a step further and test whether personalizing push messages or combining text with images or emojis has any impact on your core metrics.

For example, a food delivery app could randomly split its dormant user base into three segments and send a different push notification to each to see which one leads to a higher CTR.
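The random split in this example could be done along the following lines. This is a minimal sketch: `split_into_groups` and the user IDs are hypothetical, and the optional `seed` is only there to make the split reproducible.

```python
import random


def split_into_groups(user_ids, group_count, seed=None):
    """Shuffle the users, then deal them round-robin into equally sized groups."""
    shuffled = list(user_ids)
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i::group_count] for i in range(group_count)]


# Hypothetical dormant users, split into three groups (one per notification).
dormant_users = [f"user-{i}" for i in range(10)]
groups = split_into_groups(dormant_users, 3, seed=7)

for number, group in enumerate(groups, start=1):
    print(f"notification {number}: {len(group)} users")
```

Shuffling before dealing the users out means group membership does not depend on sign-up order or any other property of the original list, and the group sizes differ by at most one user.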

Features & functionalities

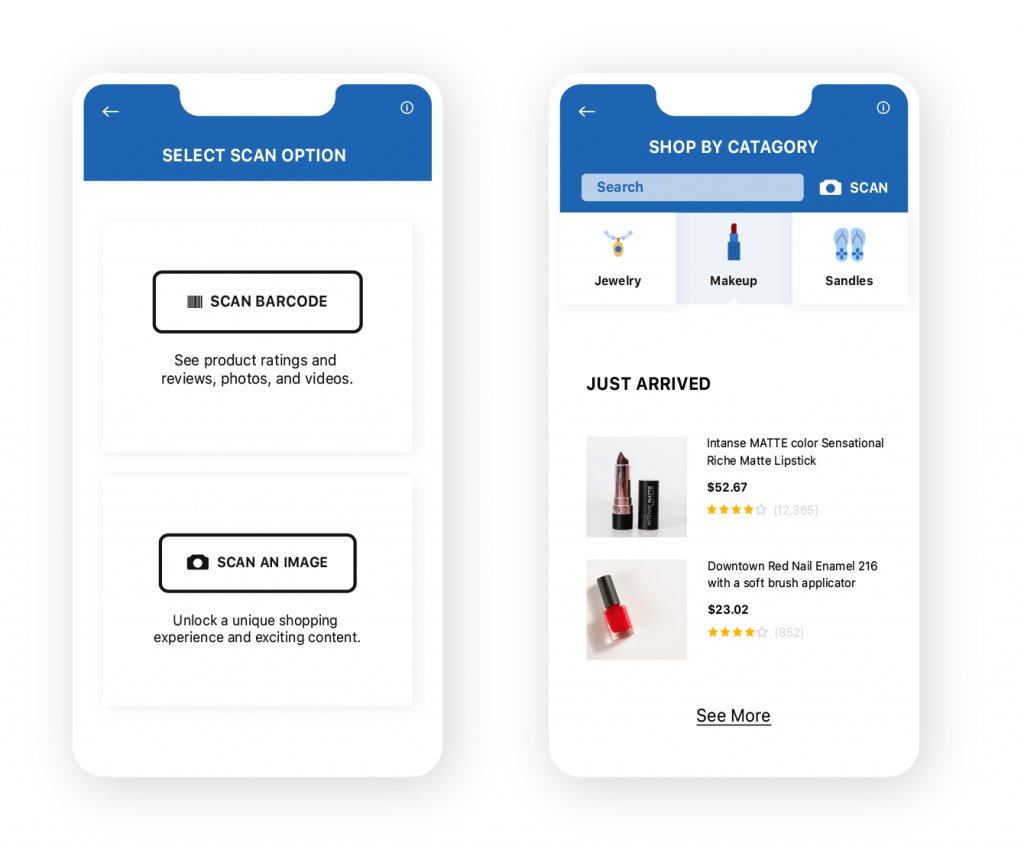

Apart from testing UI-based changes, mobile app A/B testing also allows you to experiment deep within your stack to measure the performance of certain features of your app during production. This can help you avoid taking bug-infected features live universally, segment your user base, roll out features progressively, and validate your product development ideas. Furthermore, you can also experiment with high-impact functionalities such as search and product recommendation algorithms, payment gateways, architectural changes, paywall accesses, and all other critical aspects of your mobile app that can only be tested on the server-side.

So, mobile app A/B testing not only helps marketers streamline their user funnel to optimize for conversions, but also helps product managers, UX designers, and app developers build highly competitive apps that deliver delightful experiences.

For example, a personal care and beauty app could test whether offering app-exclusive features such as scanning barcodes for product information and how-to videos positively impacts their customers’ in-app or in-store purchase decisions.

How does mobile app A/B testing work?

Since testing on mobile apps is deployed at the server’s end, it works much like server-side testing. Each variation of a test needs to be coded, and there is no drag-and-drop visual editor of the kind available in client-side testing. While this can make tests slower to implement, experimenting on the server side considerably widens the scope of testing: you can test product features, algorithms, in-app flows, and push messaging channels to thoroughly optimize your user experience and customer journeys.

Another important reason for deploying mobile app A/B testing on the server-side is that client-side implementation can cause side effects, including performance issues (such as the flicker effect) and UI bugs.

Here’s the step-by-step working of mobile app A/B testing:

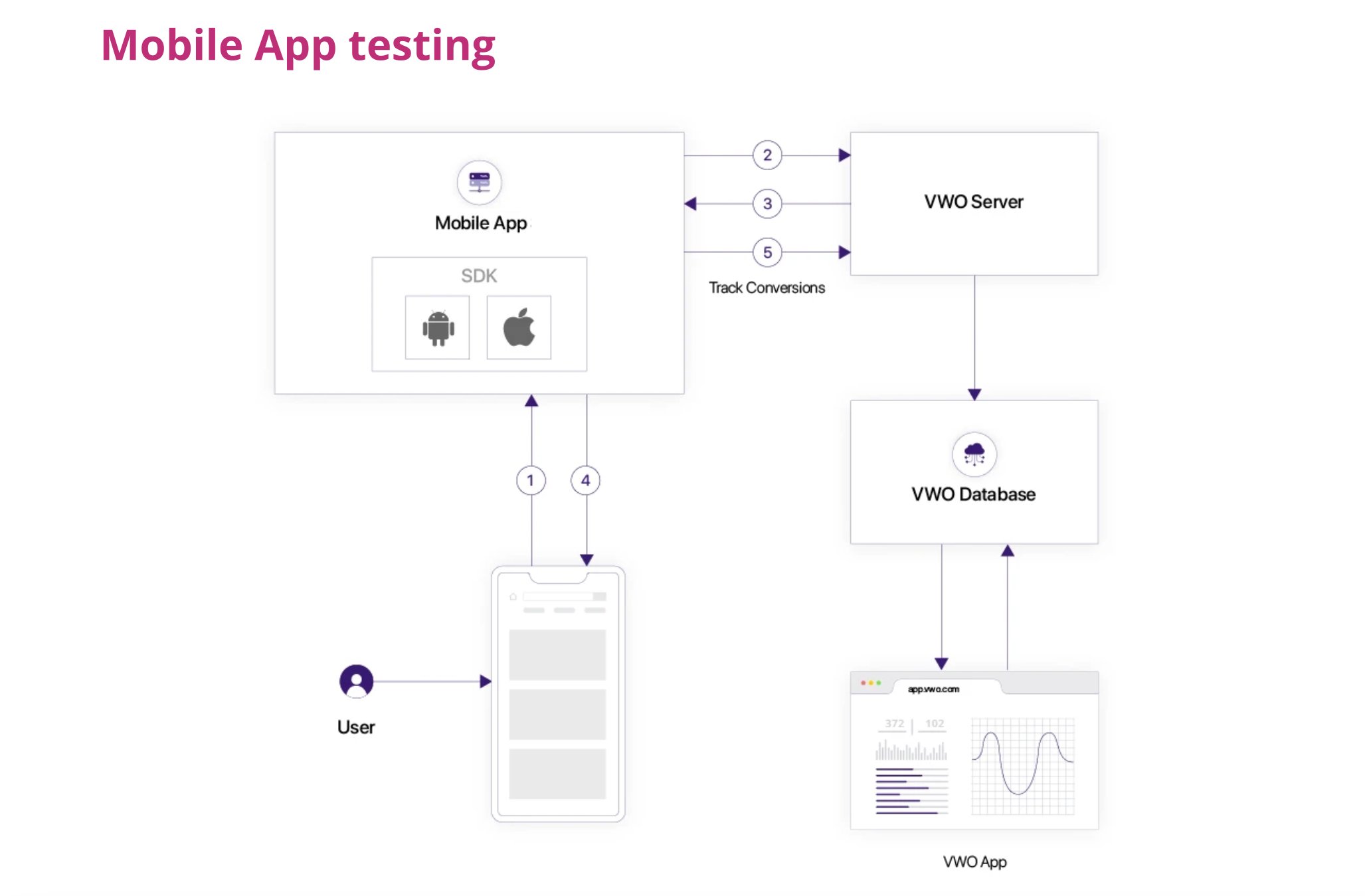

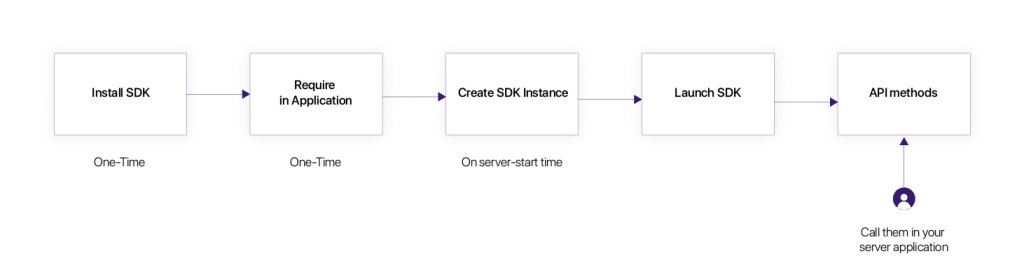

First, mobile app A/B testing requires a one-time SDK installation. The SDK is available for all platforms including iOS, Android, and all backend languages. Once installed, the SDK can be integrated into your mobile app server application by simply requiring it.

1. Whenever a user launches the mobile app, the SDK is initialized and sends a call to the VWO server.

2. The SDK acts as an interface between the mobile app and the VWO servers, which contain all the campaign-related information such as the number of variations, the number of goals, overall traffic distribution, variation-level traffic allocation, etc.

3. VWO servers are responsible for the calculations determining which variation is to be shown to which user and these settings are finally sent to the SDK running on the mobile app.

4. These settings are then used to create an instance of the SDK, which offers various capabilities including A/B testing by providing a variation to a particular user, enabling a feature for a visitor, tracking goals, etc.

5. Once the variation is assigned, and the goal is tracked by the mobile app’s server, the data is sent to the tool for tracking purposes. This data is fetched while viewing the campaign’s report or application dashboard.
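The steps above can be pictured with a small, self-contained simulation. This is not the VWO SDK API: `fetch_settings`, `ExperimentClient`, its methods, and the `checkout_test` campaign are hypothetical stand-ins, the network call is replaced by a local stub, and the variation is derived locally from the settings purely to keep the sketch runnable.

```python
import hashlib


def fetch_settings() -> dict:
    """Stand-in for the call made on app launch; returns campaign settings."""
    return {
        "campaigns": {
            "checkout_test": {
                "variations": ["control", "single_page"],
                "goals": ["purchase"],
            }
        }
    }


class ExperimentClient:
    """Hypothetical client instance created from the fetched settings."""

    def __init__(self, settings: dict):
        self.settings = settings
        self.events = []  # stand-in for data sent back to the testing tool

    def get_variation(self, campaign_key: str, user_id: str):
        campaign = self.settings["campaigns"].get(campaign_key)
        if campaign is None:
            return None
        # Hash the user ID so the same user always gets the same variation.
        digest = hashlib.sha256(f"{campaign_key}:{user_id}".encode("utf-8")).hexdigest()
        variations = campaign["variations"]
        return variations[int(digest, 16) % len(variations)]

    def track(self, campaign_key: str, user_id: str, goal: str) -> bool:
        campaign = self.settings["campaigns"].get(campaign_key)
        if campaign is None or goal not in campaign["goals"]:
            return False
        self.events.append({
            "campaign": campaign_key,
            "user": user_id,
            "variation": self.get_variation(campaign_key, user_id),
            "goal": goal,
        })
        return True


client = ExperimentClient(fetch_settings())
variation = client.get_variation("checkout_test", "user-42")
client.track("checkout_test", "user-42", "purchase")
print(variation, client.events)
```

The recorded events play the role of the data that the reporting dashboard later reads in step 5.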

How to successfully conduct mobile app A/B testing

Revisit and benchmark your existing core metrics

Depending on the type of your app, your core metrics could range from daily/monthly active users, customer lifetime value, and cost per acquisition to conversion, churn, and retention rate. Start by revisiting your app’s core metrics and then use analytics to determine where you currently stand on those. Next, revisit the corresponding KPIs that you use to measure those metrics. Now you know what is performing well and what isn’t for your mobile app. Being aware of your app’s current performance will help you draft a definitive mobile app A/B testing and optimization roadmap.

Identify key areas of improvement and set clear goals

Once you have brushed up on your app’s current performance data, you will be able to identify the majority of the key areas of improvement for optimization. For the rest, you should carry out extensive market research to figure out what you’re missing out on and then come up with other improvement areas in your app that need attention. Based on these, you can set a priority order by calculating which of these optimization areas will have the highest positive impact on your KPIs. This priority list now dictates your mobile app optimization goals.

Take the segment-first approach while constructing hypotheses

Once you have defined the key areas of improvement, analyzed the numbers, and figured out the reason behind them, you are only one step away from constructing a solid hypothesis: conducting extensive quantitative and qualitative research on different user segments. When hypothesizing for a test, one of the mistakes you want to avoid is going by averages instead of segment-specific data.

For instance, if a push notification has a poor CTR or your cart abandonment rate was higher this quarter, you can leverage segmentation to drill down further to find out which segment is responsible for those numbers. This will give you useful data and actionable insights to create granular hypotheses and run targeted tests.
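To illustrate why averages can mislead, the sketch below computes the CTR per segment next to the overall CTR. The `ctr_by_segment` helper, the segment names, and the numbers are made-up illustration data, not real results.

```python
from collections import defaultdict


def ctr_by_segment(records):
    """Aggregate sent and clicked counts per segment and return each segment's CTR."""
    sent = defaultdict(int)
    clicked = defaultdict(int)
    for record in records:
        sent[record["segment"]] += record["sent"]
        clicked[record["segment"]] += record["clicked"]
    return {segment: clicked[segment] / sent[segment] for segment in sent if sent[segment] > 0}


# Made-up counts for one push notification, broken down by user segment.
records = [
    {"segment": "new_users", "sent": 1000, "clicked": 80},
    {"segment": "loyal_users", "sent": 1000, "clicked": 90},
    {"segment": "dormant_users", "sent": 2000, "clicked": 20},
]

overall = sum(r["clicked"] for r in records) / sum(r["sent"] for r in records)
print(f"overall CTR: {overall:.2%}")
for segment, ctr in ctr_by_segment(records).items():
    print(f"{segment}: {ctr:.2%}")
```

In this toy data the overall CTR looks poor, but the breakdown shows that one segment is responsible for most of the shortfall, which is the kind of insight a segment-specific hypothesis can build on.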

You can now start formulating your hypotheses based on insights and observations drawn from the analysis of your user behavior. All you need to keep in mind is that these should propose a feasible solution to a critical business problem.

Put simply, your hypotheses should:

- Be based on data collected after analysis of the behavior of different user segments on your app

- Propose a practical solution; outline the change that will address a business problem

- Highlight the anticipated results of implementing the solution and provide insights on the problem being addressed

Here’s what well-constructed hypotheses should look like:

eCommerce app: Based on the analysis of the behavior of users browsing product pages, we expect that adding better quality images and including product videos will address the problem of users not adding items to their cart.

Gaming app: Based on the analysis of the behavior of users at level 7 of the game, we expect that providing a hint pop-up would address the problem of users dropping off.

Hyperlocal food delivery app: Based on the analysis of user behavior and queries received by support, adding a feature to customize dishes would help increase the number of orders as well as the average order value.

Test frequently and iteratively

Spending significant time and effort in the research and hypothesis creation phase is futile if you just run the test once and expect actionable results. There are countless different ways of addressing a user pain point or optimizing an experience on your app. So, by concluding tests prematurely or not running them iteratively, you are missing out on a whole lot of better optimization possibilities or, worse, going by inaccurate results. Instead of deciding winners and losers ahead of time, you must derive insights from each test, build the next test on top of the previous one, and test each element, feature, or experience repeatedly to arrive at statistically significant results.

Keep a log of your learnings and inculcate them

Documenting your learnings from a test and applying them in all future mobile app optimization decisions is just as important as running the test itself. To ensure each of your tests streamlines your optimization efforts and contributes to business growth at large, you need to systematically archive your test results and meticulously use them in all decision-making going forward. And you never know, a failed test might just help you solve the most complex dilemma at some point in your experience optimization journey.

Realign your product roadmap

Once you have successfully executed a set of A/B tests and reached a significant milestone in your testing roadmap, it’s time to think about the larger role mobile app A/B testing can play in your product and experience optimization journey. This involves revisiting your entire roadmap to work out how you can use your test outcomes and learnings to thoroughly optimize your in-app experiences and build a better product. Tying different test learnings together will help you determine how different aspects of your app work in conjunction to provide an experience as a whole. This will not only give you testing ideas to further streamline your experiences but also allow you to examine the combined performance of your implemented tests holistically.

Pitfalls to avoid with mobile app A/B testing

Not creating a mobile app-exclusive optimization strategy & roadmap

One of the most common mistakes marketers and product managers make when getting started with mobile app A/B testing is overlooking how different a channel the mobile app is from the web. As a result, they end up applying the same principles of experimentation to mobile app experience optimization that they have been following for the web. This approach doesn’t work because, whether it is the user base, user behavior, customer journey, in-app experiences, or user flow, mobile apps have highly distinctive characteristics and are vastly dissimilar from the web. To extensively optimize mobile experiences, you need to create a separate strategy altogether that tackles mobile users’ pain points and focuses on overcoming mobile app-specific challenges.

Not testing methodically

Running ad-hoc tests without paying close attention to some critical factors can leave you with inaccurate results and ineffectual insights. Not running a test methodically can also be one of the reasons your test fails. To ensure your mobile app A/B testing efforts are going in the right direction and are fruitful for business growth, be sure to test systematically and strategically, and avoid the following:

• Concluding A/B tests before reaching statistical significance

Successfully conducting any kind of test requires patience, and mobile app A/B testing is no different. You need to give your tests enough time before you begin analyzing results and deciding the way forward. The primary pitfall to avoid here is concluding tests prematurely, before reaching statistical significance. Before commencing any test, calculate the required sample size and the expected duration, and make sure the test runs on the entire sample so that you have enough data to draw conclusions safely. Ending a test before the pre-decided duration can defeat the purpose of running it. Also, while it’s alright to peek at the results while the test is running, never stop the test as soon as you detect the first statistically significant result, or a failing one. Run the test for the pre-decided duration (until the set number of users has been tested).
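As a rough illustration of pre-calculating sample size and duration, the sketch below uses the standard normal-approximation formula for comparing two conversion rates. It is one common approach rather than the calculation any specific tool performs; the function names, the 10% baseline, the 2-point minimum detectable lift, and the 500 daily users are assumptions made for the example.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(baseline: float, lift: float,
                              alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed per variation to detect an absolute lift over the baseline rate."""
    p1, p2 = baseline, baseline + lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(per_variation: int, variation_count: int, daily_users: int) -> int:
    """Days required to expose the full sample, given daily users entering the test."""
    return ceil(per_variation * variation_count / daily_users)


n = sample_size_per_variation(baseline=0.10, lift=0.02)
print(n, "users per variation")
print(days_needed(n, variation_count=2, daily_users=500), "days")
```

Halving the lift you want to detect roughly quadruples the required sample, which is why small expected improvements call for much longer tests.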

• Testing without enough traffic

If your app doesn’t have enough users, it makes little sense to run a test, as it might take you months to reach statistical significance. And wasting that amount of time and money can’t be good for your business. Therefore, mobile app A/B testing is considered a viable option only when you have a large enough sample size that can drive substantial results and help you make better decisions in a reasonable amount of time.

• Modifying key parameters mid-test

Whether it is the testing environment, the control and variation design, traffic allocation, sample size, or the experiment goals, the key parameters of your test need to be locked before you get started. By altering any parameter mid-test, such as changing the traffic split between the variations, you could skew your test results and in worst cases, invalidate them – all without having achieved the intended purpose of making the modification.

• Running concurrent tests with overlapping traffic

If you’re planning to run multiple tests that can interact with one another, you will have to be mindful of a few critical factors to ensure your test results aren’t skewed. For example, if you run tests on every step of the onboarding flow at the same time, you can’t attribute a change in the completion rate to any one of them, so you can’t be sure of the accuracy of any test’s results. In such a case, it is essential to run controlled tests one at a time rather than simultaneously. And if the traffic for multiple tests does overlap, you need to ensure that the users from each version of one test are split equally between the versions of the next, and so on.
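One way to get the equal split described above is to bucket users independently for each test, for example by including the test key in the hash. The sketch below is an illustration under that assumption; `variation_for`, `cross_tab`, the test keys, and the synthetic user IDs are hypothetical.

```python
import hashlib
from collections import Counter


def variation_for(user_id: str, test_key: str, variations=("A", "B")) -> str:
    """Assign a variation from a hash that is salted with the test key."""
    digest = hashlib.sha256(f"{test_key}:{user_id}".encode("utf-8")).digest()
    return variations[int.from_bytes(digest[:8], "big") % len(variations)]


def cross_tab(user_ids, first_test: str, second_test: str) -> Counter:
    """Count users in every combination of variations across two tests."""
    return Counter(
        (variation_for(u, first_test), variation_for(u, second_test))
        for u in user_ids
    )


# Synthetic users taking part in two overlapping onboarding tests.
users = [f"user-{i}" for i in range(10_000)]
table = cross_tab(users, "signup_options", "tutorial_skip")

for combination, count in sorted(table.items()):
    print(combination, count)
```

If the assignment is independent across tests, each of the four combinations should hold roughly a quarter of the users, so the effect of one test is spread evenly over the variations of the other.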

Going with hunches vs. data-backed hypotheses

Is it possible to run an A/B test on your mobile app without a hypothesis? Yes. But, could skipping the hypothesis render your test results useless? Also, yes.

A hypothesis is a statement that offers a definitive starting point to run a test and can be substantiated based on research and analysis. It clearly states the problem being tackled, the element being tested, the reason behind testing it, and the expected result. While it could later be validated or disproved, the hypothesis defines the rationale behind running the experiment. If you get started without a strong, data-backed hypothesis by testing random ideas, your experiment won’t yield any useful or actionable insights, even if your hunch is ultimately validated. Also, you’ll end up with a huge, unnecessary expense without reaching any logical conclusion.

Running tests with too many variations

You might argue that testing with fewer variations limits the scope of your test and keeps you from discovering solutions you never thought existed. Experts also emphasize that limiting the number of variations limits teams’ ability to explore and validate diverse testing ideas and concepts. And these are valid points. However, one can’t ignore the fact that testing with too many variations can lead to some issues with regard to the reliability of your test results.

First, you are likely to end up with far more false positives, because the probability that at least one variation appears to win purely by chance rises with the number of variations being compared against the control. Technically referred to as the multiple comparison problem, this could drastically impact the accuracy of your test results.

Next, needless to say, too many test variations will slow down your test as you’ll need significantly longer to deliver these variations to your entire sample. The other issue it causes is commonly known as sample pollution. Since you will be running your test for longer than usual, there is a higher probability of users returning to your app after deleting their app cache and becoming a part of the test again, which would compromise the integrity of your sample. The conversion rates for the variations could turn out to be skewed because of the polluted samples, and you will notice little difference in the numbers for different variations.

All of these critical factors add up to one conclusion: increasing variations just for the sake of it, or doing so and expecting better test results, is not going to work in your favor. You should only consider increasing the number of variations if your experimentation and resource bandwidth permit, you have the required number of app users and sample size to run long tests on, and it is absolutely necessary for a particular test.
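The multiple comparison problem mentioned above can be quantified with a short calculation. The sketch assumes each variation is compared with the control independently at the same significance threshold; the Bonferroni correction shown is one common, conservative remedy and is not something the text above prescribes.

```python
def family_wise_error_rate(alpha: float, comparisons: int) -> float:
    """Probability of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** comparisons


def bonferroni_alpha(alpha: float, comparisons: int) -> float:
    """Stricter per-comparison threshold that keeps the overall rate near alpha."""
    return alpha / comparisons


for variations in (1, 3, 5):
    rate = family_wise_error_rate(0.05, variations)
    print(f"{variations} variation(s) vs. control: {rate:.1%} chance of a false positive")
# With a 5% threshold, 3 comparisons give about 14.3% and 5 give about 22.6%.
```

In other words, a test with five variations is several times more likely to crown a spurious winner than a simple two-version test, unless the threshold per comparison is tightened accordingly.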

Mobile app A/B testing challenges

Dependency on engineering teams for end-to-end implementation

Mobile app A/B testing empowers you to experiment with ideas beyond the scope of client-side testing and optimize your entire stack by testing on the server-side, in addition to helping you test and make quick, UI-based fixes to your app. Due to this enhanced scope, an SDK installation and the involvement of developers become imperative to successfully run and conclude tests on mobile apps. This fundamentally boils down to reduced autonomy for marketers, product managers, and app UX designers, as they can’t independently deploy and conclude tests on mobile apps. It might also increase the effective time required for end-to-end implementation of tests on mobile apps due to dependencies on multiple teams.

While this can be challenging for teams new to A/B testing, it is possible to turn the situation to your advantage. All you need to do is meticulously plan and devise a framework for leveraging every test to drive business growth, so that having to dedicate additional resources to A/B testing doesn’t hurt your pocket or slow you down, and is worth going the extra mile.

Increased complexity of tests owing to nonlinearity of mobile UX

Unlike web or mobile sites, the user experience, app flow, and conversion funnel in mobile apps are not linear. Users seldom navigate unidirectionally through the app and almost never follow the classic conversion funnel model. Whether it is an eCommerce app or a gaming one, users’ interaction across multiple app touchpoints is more nuanced and complex as compared to that on a website.

In a gaming app, for instance, gamers are more often than not found switching levels, engaging in chat rooms, and making in-app purchases, all at once. Given this dynamic flow of users’ interaction with your app, clearly defining KPIs becomes complicated as user journeys tend to intertwine with one another. Hence, testing on mobile apps demands a much more sophisticated and comprehensive approach. Instead of merely testing standalone elements, it would be more fruitful to first understand how your app user journeys intersect with one another and then define your testing roadmap accordingly to optimize the user flows, in-app experiences, and features that holistically uplift your app performance.

Probable increase in time-to-market of an app update

When it comes to mobile apps, a common argument made is that A/B testing can cause a delay in making an app update live. This is because, unlike in the case of websites, you can’t simply set up a test using a visual editor, take the winning variation live universally, and ship the new version of your app instantly. For every app update, you need to wait for app store approvals and for users to update their apps to be able to finally view the winning variation. Therefore, building and maintaining a culture of continuous experimentation becomes tough in the case of mobile apps, as probable delays in universally deploying winning variations after tests and in launching app updates after approvals could negatively impact the time-to-market of app updates, feature release cycles, and the testing schedule.

While this can be quite a challenge for marketers and product managers, it’s not something that should stop you from adopting mobile app A/B testing. You can work your way around this by initiating the testing process better prepared. You can use test calculators to estimate test durations beforehand and then schedule your app updates, feature releases, and experimentation roadmap accordingly, so that continually running tests and updating your app does not slow down your app or business.
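As a rough illustration of what such a test calculator does, the sketch below estimates the sample size per variation using the standard normal-approximation formula for comparing two conversion rates, and then converts it into a duration. The baseline rate, expected lift, and daily traffic are made-up inputs, and real calculators (including Bayesian ones) may use different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate: float, relative_lift: float,
                              alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per variation for a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def estimated_duration_days(users_per_variation: int, num_variations: int,
                            daily_users_in_test: int) -> int:
    """Days needed to fill every variation (control included) at a given traffic."""
    return math.ceil(users_per_variation * num_variations / daily_users_in_test)


# Hypothetical inputs: 10% baseline conversion, hoping to detect a 20% relative lift,
# with 1,000 users entering the test per day across control and one variation.
needed = sample_size_per_variation(baseline_rate=0.10, relative_lift=0.20)
print(needed, "users per variation")
print(estimated_duration_days(needed, num_variations=2, daily_users_in_test=1000), "days")
```

Because the required sample grows sharply as the expected lift shrinks, running the estimate before committing to a release date helps you see whether a test can realistically conclude within one app update cycle.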

Mobile app A/B testing use cases

eCommerce

• Eliminating friction in the checkout flow

Notice how eCommerce giants such as Amazon or eBay make buying from their mobile apps such a breeze that there are times you almost inadvertently make a purchase while on the go and don’t even regret it? And then there are apps that you open determined to make a purchase, add your favorite items to the cart, but somehow end up losing interest and dropping off.

This contrast in users’ purchasing behavior can be largely attributed to the quite obvious difference in the checkout flows of various eCommerce apps. Friction in checkout flows can lead to frustrated users and a significant increase in the cart abandonment rate. More so because mobile users have even shorter attention spans, and hence demand a checkout flow that is absolutely seamless. All you need to do is create one that automatically entices them to hit ‘Place Order’ even when they’re in the middle of a meeting on a Tuesday morning.

A/B testing various ideas on how to build that perfect flow can be a good place to start. You can begin by analyzing your app’s performance to identify users’ pain points in your checkout flow and then work towards creating hypotheses tailored to tackle them. For instance, if you’ve noticed that people drop off to fetch a coupon code, you could test offering an option to generate in-app coupon codes and see if it positively impacts your conversion rate. Other commonly tested mobile commerce ideas include reducing the number of steps and app pages in the checkout process, reducing unnecessary fields in your forms (you can use form analytics to analyze your forms), providing options for guest checkout and social media logins, offering mobile wallet payment options to encourage users who are too hesitant or busy to add their credit card details, and providing an option to save items for later so that users who don’t buy right away know where to look for them.

With mobile app A/B testing, you can even create different versions of checkout flows to target various user segments and gain granular insights on what’s working for which particular segment of your audience.

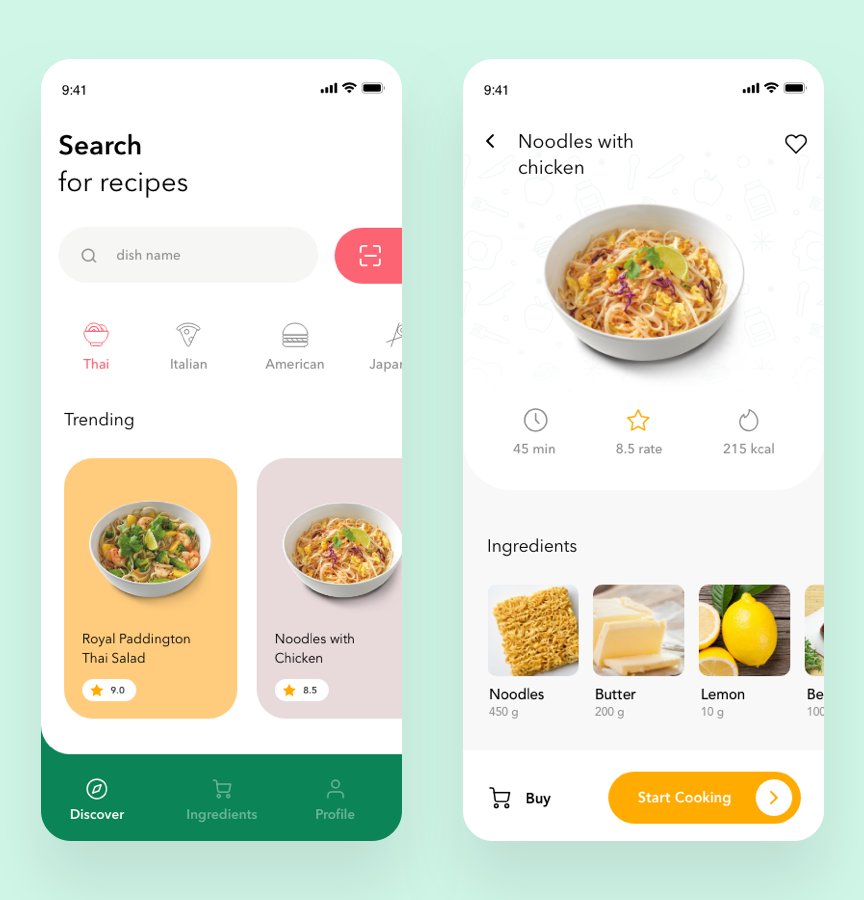

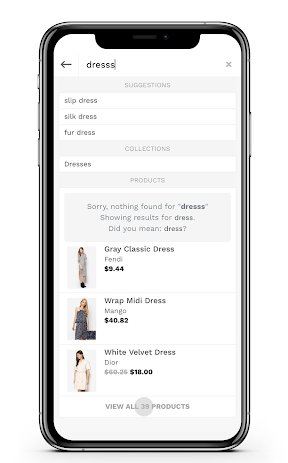

• Improving the efficacy of the search algorithm

Due to the obvious space constraint on mobile screens, it becomes all the more critical to ensure that your search algorithm works effectively to fetch and display the most relevant items at the top and get users hooked from the moment they hit the search button. To find out how well your search algorithm does this, you can A/B test it against alternatives. You can experiment with multiple search algorithms that use different ways to categorize products and different criteria to decide their relevance to a search query, and then narrow down to the one that proves to be the most effective. You could test an algorithm that uses products’ popularity as a metric to rank them, one that ranks them based on pricing (or promotions), or one that bases its results on users’ behavior, past searches, or location, to name a few.
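A simple way to picture such a test is to treat each ranking rule as one variation and route a search request to whichever rule the user has been assigned. The sketch below is purely illustrative: the `Product` fields, the two ranking rules, and the variation names are assumptions, and a production search algorithm would weigh relevance far more carefully.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Product:
    name: str
    units_sold: int
    price: float


def rank_by_popularity(products: List[Product]) -> List[Product]:
    """Best-selling items first."""
    return sorted(products, key=lambda p: p.units_sold, reverse=True)


def rank_by_price(products: List[Product]) -> List[Product]:
    """Cheapest items first."""
    return sorted(products, key=lambda p: p.price)


# Each variation of the experiment maps to one ranking rule.
RANKERS: Dict[str, Callable[[List[Product]], List[Product]]] = {
    "popularity": rank_by_popularity,
    "price": rank_by_price,
}


def search_results(matches: List[Product], variation: str) -> List[Product]:
    """Order the matching products using the rule for the user's variation."""
    return RANKERS[variation](matches)


# Made-up catalog entries that all match the same query.
catalog = [Product("Trail shoe", 120, 89.0), Product("Road shoe", 300, 110.0),
           Product("Sandal", 80, 35.0)]
print([p.name for p in search_results(catalog, "popularity")])
print([p.name for p in search_results(catalog, "price")])
```

Keeping the rules behind one lookup table means adding another algorithm to the experiment only requires registering one more entry.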

Whatever the results of experimentation, there are a few things you can incorporate to ensure your users always find something that interests them or at least never leave disappointed. One such tactic is always showcasing a few relevant or related products (even if they don’t exactly match the search query) as opposed to just throwing the message – “We’re sorry, we couldn’t find any product that matched your search term” on a blank screen. Another one could be to provide autocomplete for quicker searches. It is, in fact, even advisable to ensure that misspellings also have results. All these impactful steps will work together with an effective algorithm to create a delightfully seamless shopping experience for your users.

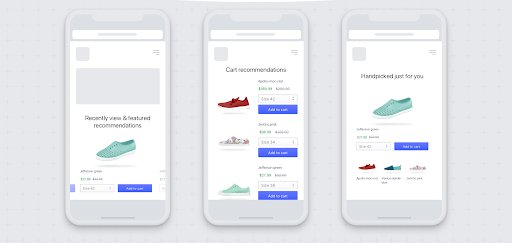

• Optimizing the dynamic product recommendation algorithm

According to research by Salesforce, mobile shoppers who click recommendations complete orders at a 25% higher rate than those who don’t. Come to think of it, this number isn’t even surprising. Your customers are on-the-go shoppers who thrive in a mobile-first world, where personalization is king (and thankfully, even more effective on mobile). Therefore, offering personalized, relevant, and intelligent product recommendations is as much a norm as it is a necessity if you want to unlock a whole new set of conversion opportunities. However, how successfully you are able to do so depends on the efficacy of your product recommendation algorithm, and mobile app A/B testing allows you to put that to the test. You can experiment with multiple algorithms that use different criteria to select the products to be recommended. The most common criteria you can test include bestsellers from the category, trending products, new arrivals, and top-rated products.

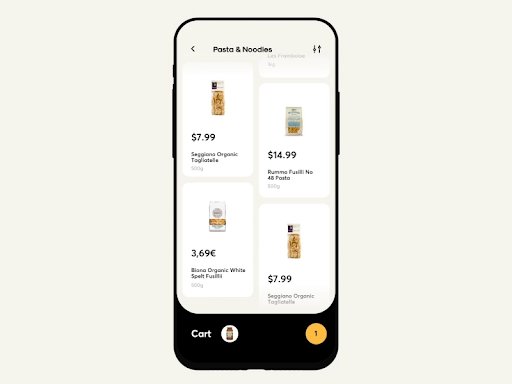

• Customizing the search results page layout

The search results page is where users are first introduced to your products. Once you have optimized for quality results by experimenting with your search algorithm, the next step would be to test and optimize your search results page’s design and layout to ensure navigating through the page to get to the desired product is absolutely seamless. You can start by running tests around the structure of the page and the arrangement of products, the CTAs to provide with each product (Add To Cart, Save For Later etc.), the amount of product description to feature, the tags to be assigned to products (Bestseller, New Arrival, etc.), the discount amount to be featured with each item, etc. This will ensure your customers are given all the relevant information on the search results page that makes them take the desired action.

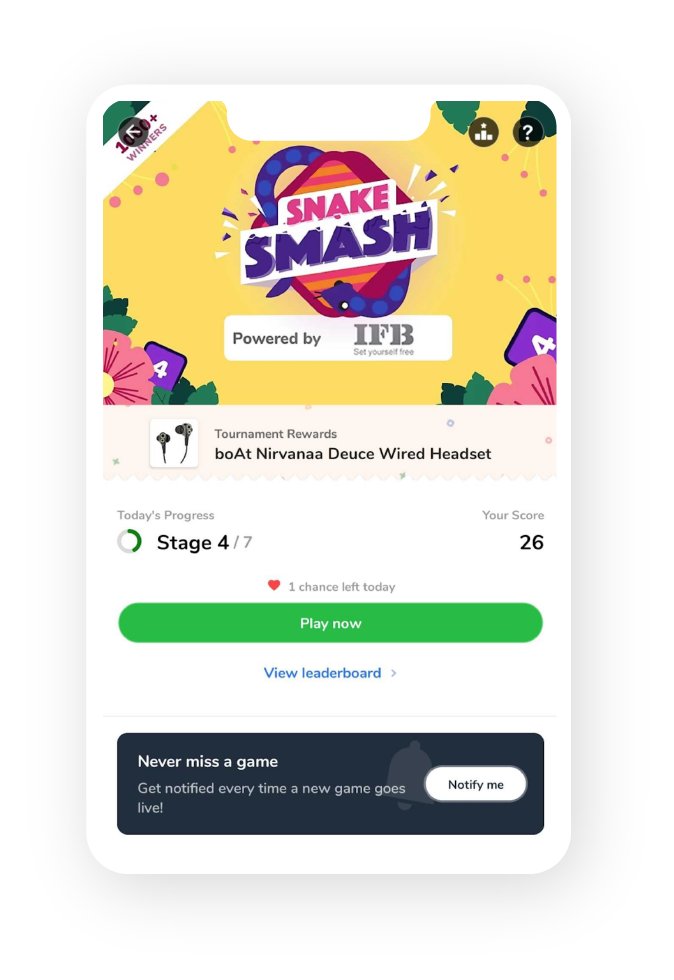

Gaming

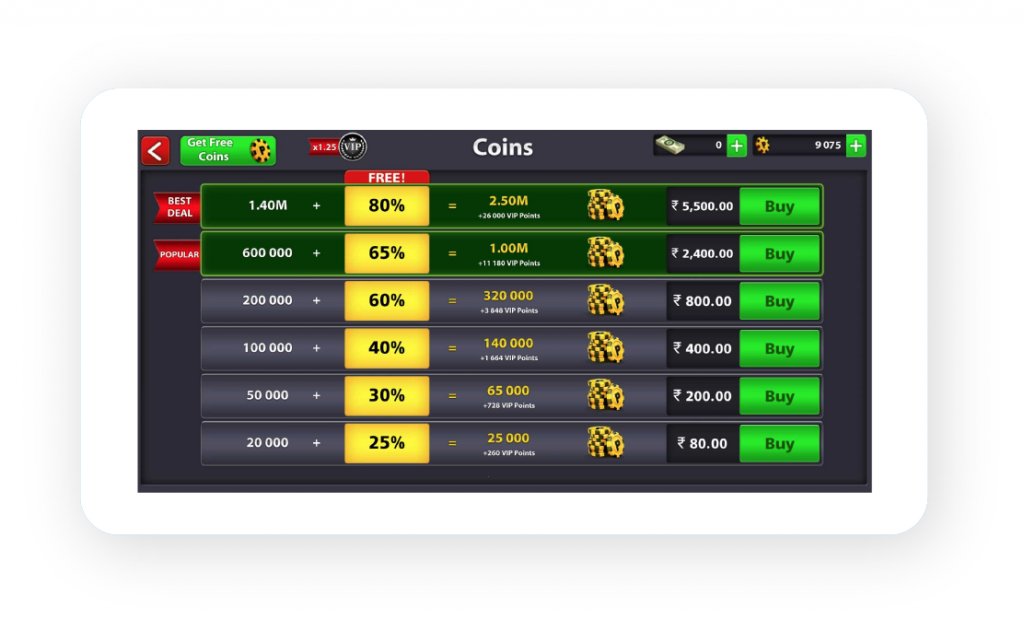

• Optimizing for the ideal amount of free credit/unlocked levels

How do you decide on the right amount of free credits (in-game currency) to offer that ensures the ideal balance between happy (and hopefully, returning) users and increased profitability? You simply A/B test different values and again, use segmentation to see which of your hypotheses works for which user segment and then select the amount of credits to offer upon various milestones such as sign-up, referral, social sharing, level completion, etc. Similarly, you can test to find out the ideal number of unlocked levels you should offer to ensure minimal drop-offs, maximum retention, and optimal monetization.
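The segmentation step described above can be sketched as a small aggregation: group users by segment and by the credit amount they received, then compare a metric such as retention within each group. The segment names, variation labels, and records below are invented purely for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, str, bool]  # (segment, variation, retained)


def retention_by_segment(records: Iterable[Record]) -> Dict[Tuple[str, str], float]:
    """Return the retention rate for every (segment, variation) pair."""
    totals = defaultdict(lambda: [0, 0])  # key -> [retained users, all users]
    for segment, variation, retained in records:
        totals[(segment, variation)][0] += int(retained)
        totals[(segment, variation)][1] += 1
    return {key: kept / users for key, (kept, users) in totals.items()}


# Hypothetical data: two sign-up credit amounts tested across two segments.
sample = [
    ("new_player", "100_credits", True), ("new_player", "100_credits", False),
    ("new_player", "500_credits", True), ("new_player", "500_credits", True),
    ("returning", "100_credits", True), ("returning", "500_credits", False),
]
for (segment, variation), rate in sorted(retention_by_segment(sample).items()):
    print(f"{segment:<12} {variation:<12} retention = {rate:.0%}")
```

A real analysis would need far more users per group than this toy sample, since splitting by segment reduces the sample behind each comparison.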

• Streamlining in-app advertisement strategy

An effective and almost indispensable strategy for monetization, in-game ads need to be seamlessly integrated within the app environment so they enhance the gaming experience rather than causing an unwelcome and annoying distraction. To ensure that ads amplify your monetization efforts without hurting your customer retention rate, experiment with and optimize your ads with regard to positioning, timing, and targeting. You can run A/B tests to figure out the safest levels at which to run ads to reduce drop-offs, the ideal user segment to target your ads to, the number of ads to run, and so on.

• Staging tailored gaming experiences

Fun fact: In the year 2018 alone, the mobile gaming industry generated $70.3B in revenue, which is almost $30B more than the worldwide box office revenue of $41.7B.

At this unbelievable pace of growth, relentless innovation, and cut-throat competition in the mobile gaming industry, gaming app developers are now forced to constantly outdo their gaming experience to make the cut. And so it’s no longer enough to create an immersive and enriching mobile game; you need to go the extra mile to deliver targeted experiences that engage better. Since mobile app A/B testing allows you to experiment with even the dynamic elements of your app, you can alter different features for various user segments to tailor experiences for all gamers based on their level in the game, behavior, and demographic factors.

For instance, you could test whether dynamically changing gaming levels for certain dormant users can get them to return, level-up easily, and re-engage. This will not only deliver better, more delightful gaming experiences, but also help you figure out the critical pain points you can optimize to get users hooked.

• Improving targeted pricing strategies

Pricing is another element that can be dynamically altered to strategically target various user segments to boost profitability while keeping users engaged. You can run A/B tests around tweaking pricing for profitable user segments, increasing prices before holidays and weekends, and offering discounts to unengaged, dormant ones to see if this has any impact on your core metrics.

FinTech

• Perfecting the onboarding flow

Seamlessly onboarding a user on a mobile banking app can be challenging due to the obvious hesitation people have when it comes to sharing sensitive information online. Therefore, the onboarding process should be designed to instill in users the confidence to shift their financial lives to their mobile phones. Continually testing your onboarding flow will empower you to optimize it for the most seamless, user-friendly experience to ensure your customers are not intimidated or hesitant to sign up and use your app for all their banking needs.

However, a common concern when optimizing mobile apps is data security, as businesses are hesitant to trust third-party, client-side A/B testing tools with storing and manipulating sensitive user information. Mobile app A/B testing comes to the rescue here. By deploying tests on the server-side, it keeps testing attributes within the app server, so users’ confidential information is not exposed to a client-side tool. This makes mobile app A/B testing the obvious choice for optimizing mobile apps where data security is paramount.

If you are just getting started with mobile app A/B testing, you can take up simple tests to optimize your FinTech app. For example, you could test a progress bar that indicates the user’s progress through the sign-up process. By highlighting how far users have come and clearly showcasing how close they are to the finish line, you can eliminate roadblocks related to time constraints and confusion about the way ahead. Another hypothesis you can test is postponing inessential bits of the onboarding process until after the user has successfully registered. For instance, instead of providing the onboarding tutorial upfront during the registration process itself, go for in-app contextual and interactive tours layered on top of UI elements, wherein hints are triggered and tips are provided whenever users reach the relevant sections of the app or perform the relevant action for the first time. Also, when it comes to asking for permissions and personal information, you can test whether giving more context as to why you need it helps you get the information or permission more quickly.
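To judge whether a change such as the progress bar actually moved sign-up completion, you can compare the two completion rates with a standard two-proportion z-test. The sketch below is a generic frequentist check with made-up counts; it is not tied to any particular tool, and tools that use a Bayesian engine will report results differently.

```python
import math
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference in conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate under the null hypothesis
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: sign-ups completed without (A) and with (B) a progress bar.
z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be due to chance alone, provided the sample size was fixed in advance rather than checked repeatedly while the test was running.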

Hyperlocal delivery

• Segmenting and targeting users

For a food delivery business, the target audience, in all likelihood, ranges from white-collar folks looking to order organic or gourmet food to university-goers looking for the cheapest late-night pizza place in town. By not using segmentation to target these two segments (and all the others that lie in between) separately, you are probably missing out on significant business opportunities. By using analytics to better understand your users based on their demographic, behavioral, or geographic attributes, you can target each segment separately. Some A/B tests you can run around this include offering special deals to bargain hunters, displaying restaurants within a 5 km radius upfront to those who look for quick delivery, and showcasing gourmet dishes in the first fold for the health-conscious.

• Personalizing in-app experiences

With the advent and huge success of the on-demand economy, effortless and promising experiences can be instrumental in driving users to your app. However, given the fierce competition in the category, it’s nearly impossible to grab and retain attention or loyalty without going a step further to create an experience that connects with users. And this is primarily why in-app personalization has taken center stage in the hyperlocal delivery landscape.

By leveraging historical data to better understand your users, you can test whether tailoring various elements of your app to showcase what’s relevant and useful to them, instead of offering the same generic experience to every user, positively impacts your engagement and retention metrics. For instance, a food delivery app could use a user’s historical data to display the restaurants they order from most frequently right at the top. Similarly, even push notifications can be personalized by including the user’s first name or their favorite dish in the copy.

Mobile app A/B testing tools

We have been through the nitty-gritty of mobile app A/B testing, but your optimization efforts are incomplete without the right tool. Here is an overview of five mobile app A/B testing tools that are prominent players in the market. Information about these tools will help you zero in on the one that will serve your business purpose the best.

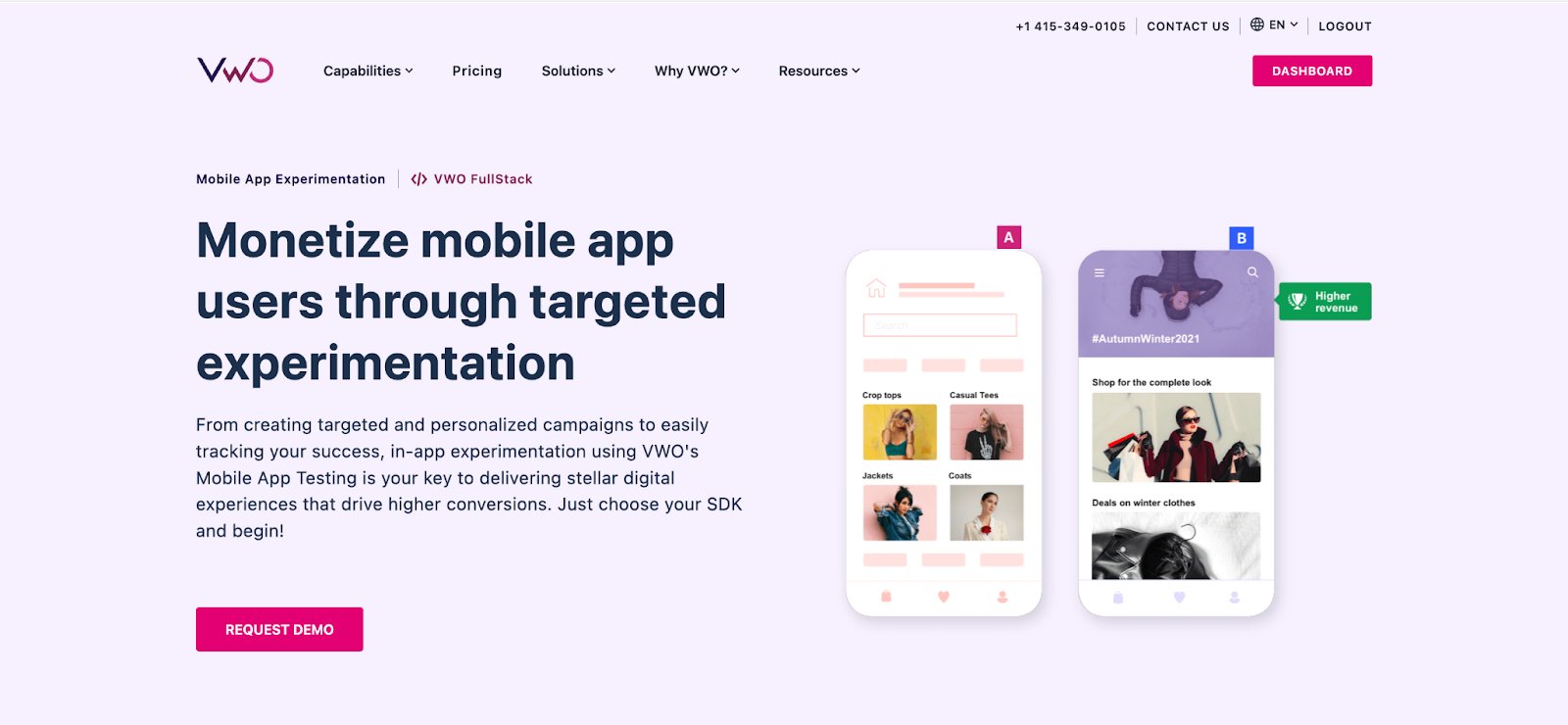

VWO Mobile App Testing

VWO Mobile App Testing lets you test apps and their features to create a stellar digital experience for the end user. The tool is known for its straightforward experiment execution with less dependence on the development team. Here are some of its noteworthy features.

- Bayesian-powered statistical engine for smart A/B testing results.

- Ability to A/B test Android and iOS apps, as well as those developed with frameworks like React Native, Flutter, and Cordova.

- Provision to send campaign information from VWO to third-party analytics tools like Google Analytics.

- Intuitive reports to track KPIs, slice and dice data, and understand elements that trigger conversion.
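To give a feel for what a Bayesian statistical engine reports, here is a generic textbook sketch that estimates the probability that a variation beats the control. It assumes uniform Beta(1, 1) priors and uses Monte Carlo sampling with made-up counts; it is not VWO’s actual engine, whose internals are not described in this guide.

```python
import random


def probability_to_beat_control(conv_a: int, n_a: int, conv_b: int, n_b: int,
                                draws: int = 20000, seed: int = 0) -> float:
    """Estimate P(rate_b > rate_a) from Beta posteriors with uniform priors."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    wins = 0
    for _ in range(draws):
        # Posterior for each arm: Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Hypothetical counts: control converts 100 of 1,000 users, variation 150 of 1,000.
print(f"{probability_to_beat_control(100, 1000, 150, 1000):.3f}")
```

Unlike a p-value, this number answers the question teams usually ask directly: how likely is it that the variation is genuinely better than the control?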

The tool is a one-stop solution for all mobile app testing needs. Request a free demo to access all capabilities.

Firebase A/B Testing

Google offers Firebase A/B testing for experimentation and feature management capabilities. One of its USPs is seamless integration with other Google tools, which eases data sourcing and analysis. The offered features are as follows:

- Bayesian analysis powered by Google Optimize to A/B test product features and choose the winning variation.

- A/B testing with Remote Config, a Firebase cloud service that lets you change the app’s behavior and appearance without requiring users to download an update.

- Experimentation with Firebase in-app and cloud messaging.

Like other Google tools, this mobile app A/B testing tool is offered for free.

Adobe Target

Adobe Target is an omnichannel experience optimization tool that allows integration with Adobe Audience Manager and Adobe Analytics. It is primarily utilized for user experience personalization on digital properties. Here are some of its offered features:

- A/B testing, multivariate testing, multi-armed bandit testing across the web, mobile app, and IoT.

- Testing and optimizing single-page apps and dynamic websites.

- Server-side testing for complex experience optimization campaigns and optimizing apps that don’t support JavaScript.

Based on offered features, Adobe Target is available as a standard or a premium version. However, it does not support feature management capabilities.

Leanplum

Leanplum is a CleverTap company that offers web and mobile app A/B testing with multi-channel lifecycle marketing for end-to-end personalized mobile journeys. It serves 200+ global brands in optimizing their user experience. The features of Leanplum are as follows:

- A/B testing mobile app UI, store promotions, message content and timing, and promotion channels from a single interface.

- Post-experimentation report with funnel and cohort analysis along with retention and revenue tracking.

- Drag and drop editor and in-app templates to create personalized messages for the end-user.

Leanplum is available for demo on request and offers custom pricing.

Apptimize

Apptimize is a cross-channel A/B testing tool that allows experimentation on any platform like the app, mobile web, web, and OTT. The offered features are as follows:

- Ability to create personalized user experiences across channels.

- Cross-channel feature release management.

- Single dashboard to manage and test experience for all channels.

Apptimize offers a demo on request with a free trial for feature management capabilities.

Conclusion

In today’s always-on, mobile-first era, where users have an ever-diminishing attention span, offering an exceptional and distinctive in-app experience is as indispensable as developing the app itself. To rise above the noise and clutter, and create an app that delights, you need to let data and insights guide your product and marketing decisions.

By adopting mobile app A/B testing as a part of your app strategy, you can rely on data-backed, actionable insights to constantly outdo your app experience, and consequently, boost your app core metrics, including user engagement, retention, and monetization. Using your test learnings to optimize each and every element, flow, and feature of your app can help you maximize conversions at every user touchpoint, and ultimately, streamline your entire user journey.

So, test regularly and keep building on top of your learnings, so that your optimization efforts are compounded with time and you can persistently deliver enjoyable end-to-end app experiences and enhance your overall app performance.

With this mobile app A/B testing guide, you’re all set to design your mobile app optimization roadmap. So, set the ball rolling, build an engaging app, and deliver delightful experiences, with mobile app A/B testing!

Frequently asked questions on mobile app A/B testing

We are referring to Mobile App A/B testing in software engineering.

Mobile app A/B testing is a form of A/B testing wherein different user segments are presented with different variations of the in-app experience to determine which one has a better impact on key app metrics.

Mobile app A/B testing requires all the variations to be coded, and all changes to be implemented directly at the server’s end as opposed to relying on a visual editor. It is very similar to server-side testing.

There are various benefits to Mobile App A/B Testing. Some of them include optimizing in-app experiences, boosting core metrics, and experimenting with the existing mobile app features in production.

Through Mobile App A/B testing, you can test different in-app experiences such as messaging & layout as well as different user flows such as onboarding and checkout.