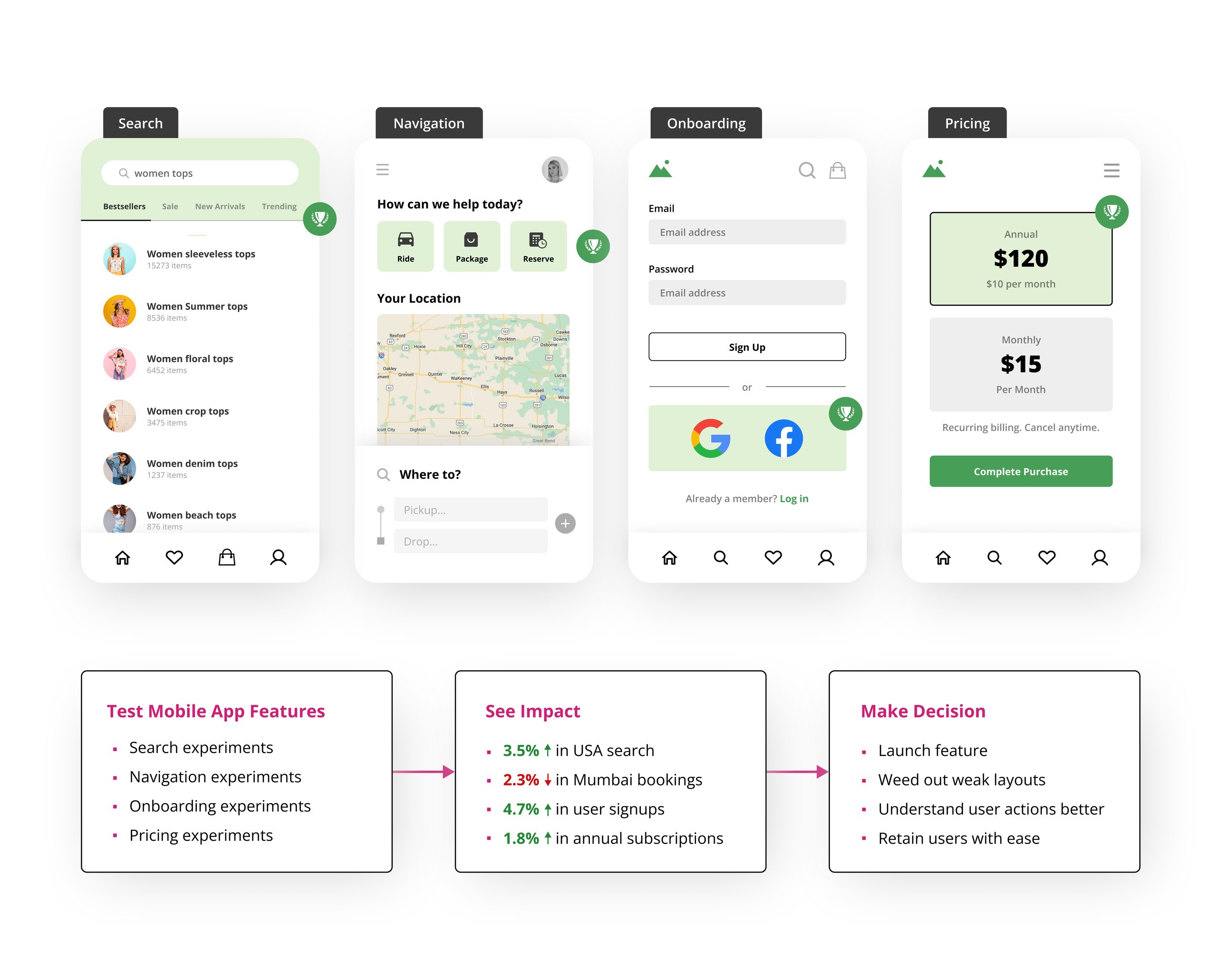

If you want to start experimenting with your mobile apps, this is the article you need. By the end of it, you should be comfortable creating hypotheses, testing variations, and measuring and implementing changes. That is why the ideas and examples discussed here are deliberately simple and easy to implement.

Types of mobile app experiments you can try

Let’s look at the top four types of experiments that significantly affect app user experience and, using real-world examples, see how you can try them out in VWO.

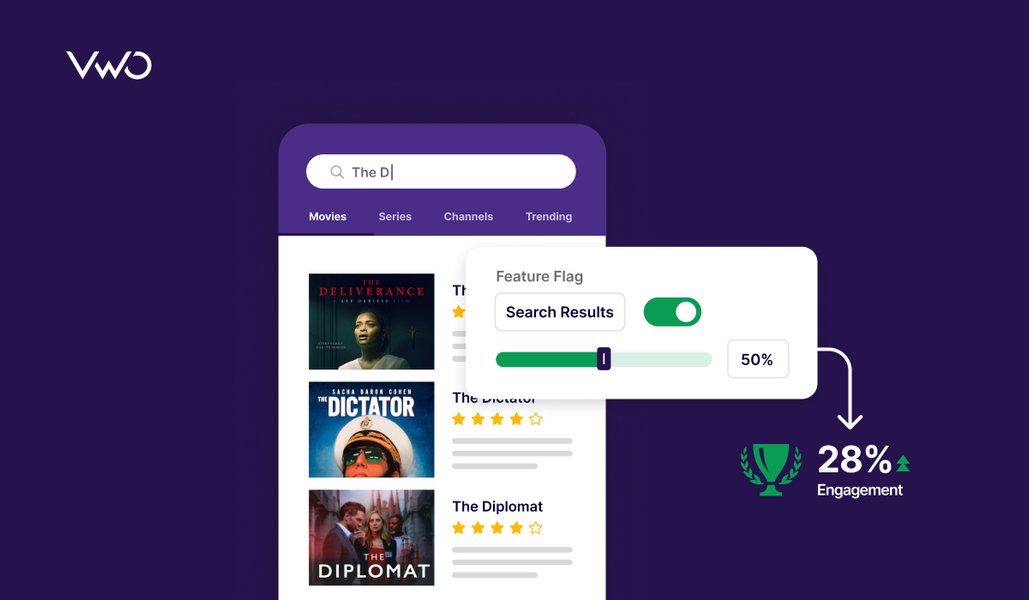

1. Search experiments

Users with short attention spans are easily distracted and may not take action if they hit too many obstacles. Given the limited screen space on mobile devices, it is not practical to include an extensive menu or a wide range of filters to aid navigation within the app. That makes a well-built, well-optimized search function essential for improving the visibility of products and features in a mobile app.

Metrics to track

Assuming you’re already considering optimizing your app search, here are some metrics to keep a close eye on:

- Search exit rate

- Number of users who select an item shown in the search results

- Number of searches that return no results

These metrics can be defined easily in VWO. Insights drawn from this data will give you a clear understanding of where your app’s search performance currently stands and where it is lagging.
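To see how these three metrics map onto raw data, here is a minimal Python sketch that computes them from a list of logged search events. The `SearchEvent` record and its fields are hypothetical; VWO or your own analytics pipeline will define its own event schema, and the sample events are made up.

```python
from dataclasses import dataclass


@dataclass
class SearchEvent:
    # Hypothetical event schema; adapt it to what your analytics pipeline logs.
    user_id: str
    results_count: int    # number of results returned for the query
    clicked_result: bool  # True if the user selected an item from the results
    exited: bool          # True if the user left the app from the results screen


def search_metrics(events: list[SearchEvent]) -> dict:
    """Compute search exit rate, users selecting a result, and zero-result searches."""
    total = len(events)
    exits = sum(1 for e in events if e.exited)
    return {
        # Share of searches after which the user left the app.
        "search_exit_rate": exits / total if total else 0.0,
        # Distinct users who selected at least one item from search results.
        "users_selecting_result": len({e.user_id for e in events if e.clicked_result}),
        # Searches that returned nothing at all.
        "zero_result_searches": sum(1 for e in events if e.results_count == 0),
    }


events = [
    SearchEvent("u1", 12, True, False),
    SearchEvent("u2", 0, False, True),
    SearchEvent("u1", 5, True, False),
    SearchEvent("u3", 0, False, False),
]
print(search_metrics(events))
```

With these sample events, the sketch reports an exit rate of 0.25, one user selecting a result, and two zero-result searches.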

Deep-dive into user insights with qualitative behavior research

To add depth to your observations, supplement quantitative data with qualitative research. Heatmaps are widely used (and loved) for this, and for good reason. Say you see a dip in the number of clicks on search results. There could be a few reasons why:

- The search results are not relevant

- The results order is not personalized

- The number of items displayed after the search is too low

VWO’s upcoming Mobile Insights product lets you use session recordings, heatmaps, and scroll maps to dig deeper into user behavior and identify what needs to be optimized. With VWO Mobile Insights, you can find answers to questions like:

- How are visitors using the search function? (e.g., to browse categories or to find specific products)

- Is auto-complete impacting conversion?

- Is surfacing their search history effective in improving sales for previously purchased items?

- What is causing friction in their search experience?

Examples that you can try

You can formulate and validate hypotheses through A/B tests based on your observations.

If you are wondering where to start, here are a few real-world examples you can refer to for inspiration.

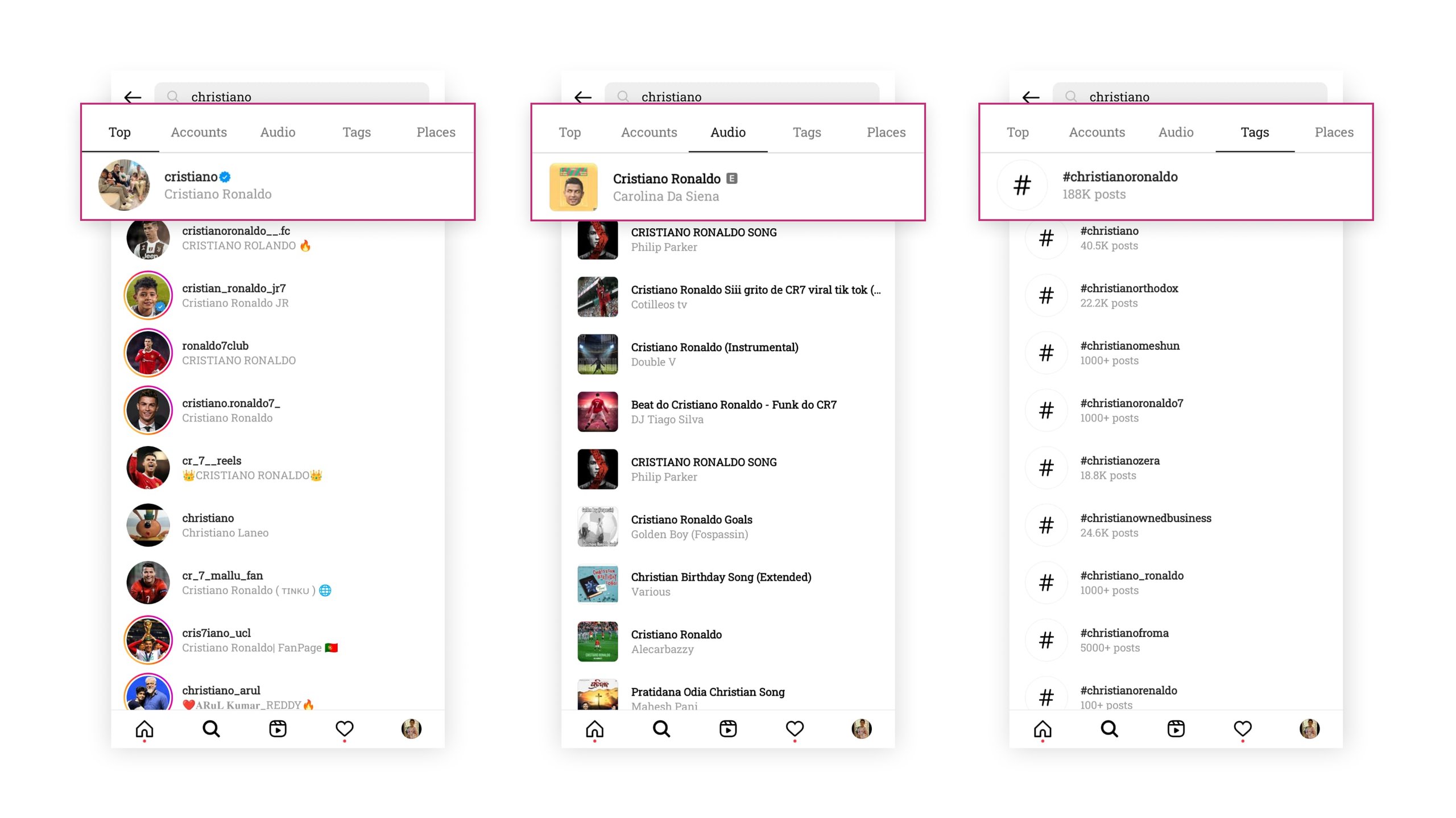

Example 1 – Search results by category

Some of the best implementations of app search are in social media apps like Instagram and YouTube. When you start typing in the Instagram search bar, you see results organized under categories like accounts, audio, tags, and places. Both Instagram and YouTube also show search history, giving users one-tap access to repeat previous searches.

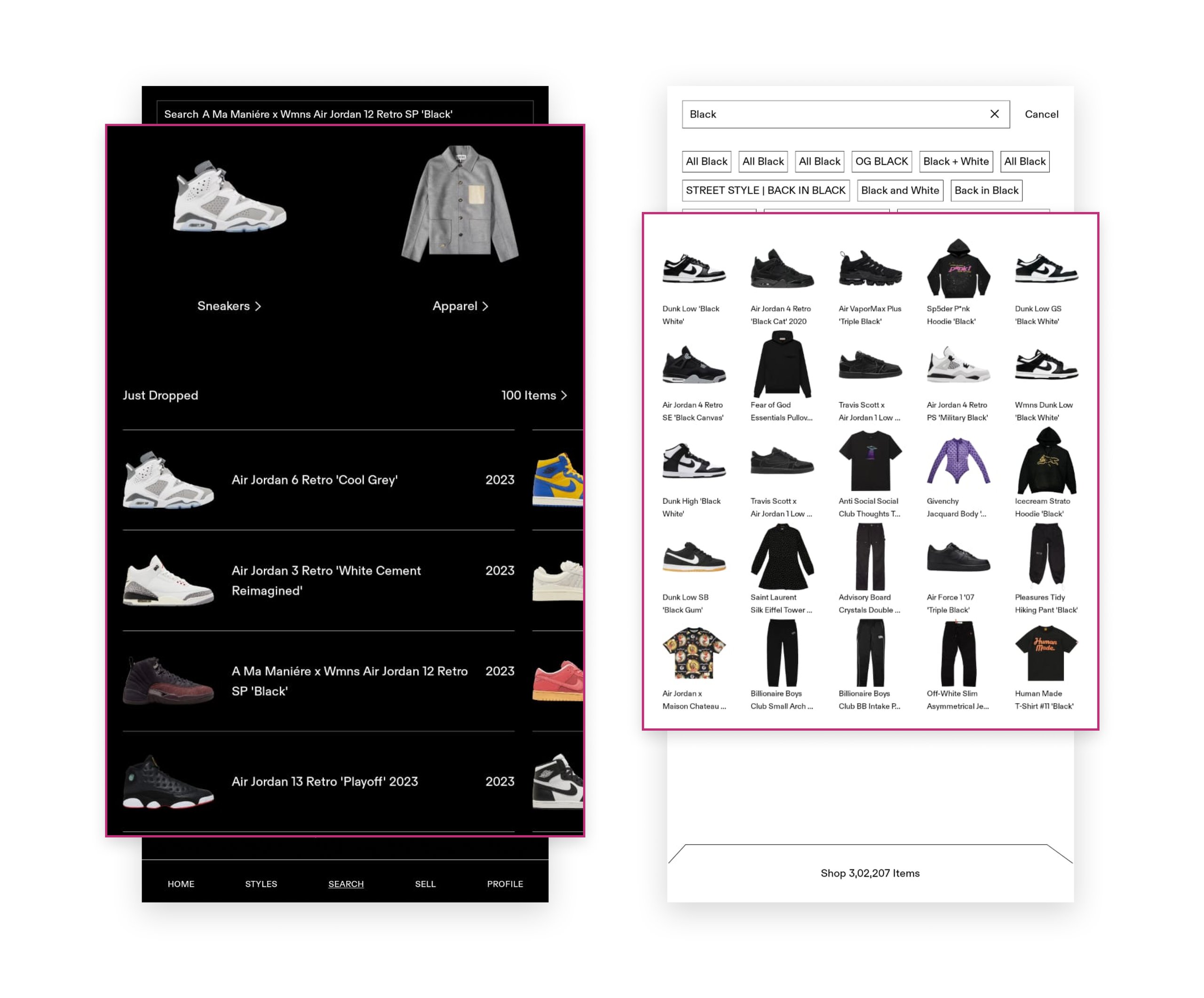

Example 2 – Search results with product suggestions

GOAT, an American eCommerce platform, has implemented an impressive search feature that helps users find what they need quickly. When you tap the search icon in the bottom navigation bar, it shows a few product categories to browse and fills the page with items from the chosen category. When you tap the search bar and start typing, you see product suggestions with corresponding text and images.

Tests that can be devised

So, let’s say you want to improve the click-through rate of your search results. Here are two hypotheses, based on the examples above, that you can test to meet that goal.

Test 1 Idea

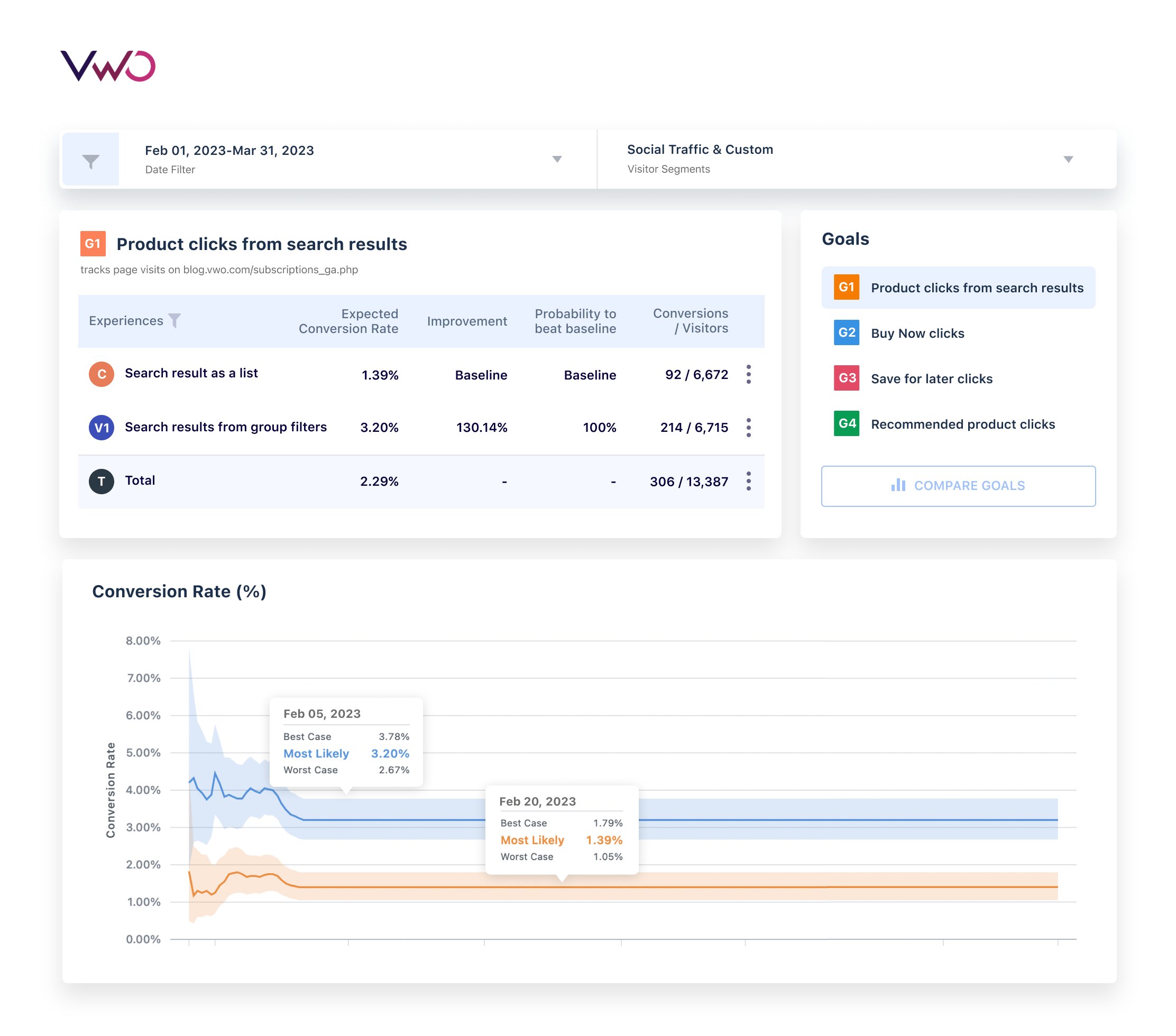

Hypothesis 1: Grouping search results under different categories like people, places, and groups will improve user engagement.

Control: Search results are displayed as a product list.

Variant: Search results are displayed along with ‘group by’ filters.

Test 2 Idea

Hypothesis 2: Showing images along with search results will improve the click-through rate for product suggestions.

Control: Results showing product suggestions that have only text.

Variant: Results showing product suggestions that have both text and images.

You can implement such tests on VWO’s Mobile A/B testing platform in just a few steps. Below is a video demonstrating, as an example, how to create a test based on hypothesis 1 for an Android app built with Java. VWO also supports frameworks such as Flutter, Cordova, and React Native.

Behind the scenes in VWO

VWO provides lightweight SDKs for iOS, Android, and all popular backend languages. Adding the mobile app SDK is a one-time integration process, after which VWO generates API keys that you can use for app initialization for both iOS and Android apps. You can refer to this comprehensive guide if you need a detailed explanation of the steps.

So you’ve created variations, added conversion goals, and launched a mobile app test. The next crucial step is to analyze the results and extract insights from them. VWO’s SmartStats engine, which is based on Bayesian statistics, does the heavy lifting: it computes precise test results and presents them as easy-to-consume reports from which you can draw actionable insights. The reports are comprehensive and let you filter results by date and visitor segment, and even compare the performance of multiple goals in a test.
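This article doesn't describe how SmartStats works internally, so the sketch below is not its implementation. It only illustrates the general Bayesian idea behind such engines, under a common textbook assumption: each variation's conversion rate gets a uniform Beta(1, 1) prior, observed conversions update it to a Beta posterior, and Monte Carlo sampling estimates the probability that the variant beats control. The function name and the visitor and conversion counts are invented for illustration.

```python
import random


def prob_variant_beats_control(
    control_conv: int, control_n: int,
    variant_conv: int, variant_n: int,
    draws: int = 100_000, seed: int = 42,
) -> float:
    """Estimate P(variant rate > control rate) with Beta-Binomial posteriors.

    Assumes a uniform Beta(1, 1) prior on each conversion rate, so each
    posterior is Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Draw one plausible conversion rate per variation from its posterior.
        c = rng.betavariate(1 + control_conv, 1 + control_n - control_conv)
        v = rng.betavariate(1 + variant_conv, 1 + variant_n - variant_conv)
        if v > c:
            wins += 1
    return wins / draws


# Illustrative numbers only: 120/2000 clicks on control vs 156/2000 on the variant.
p = prob_variant_beats_control(120, 2000, 156, 2000)
print(f"Probability variant beats control: {p:.1%}")
```

A value close to 1 suggests the variant is very likely better; a value near 0.5 means the data cannot yet tell the two apart.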

2. Navigation experiments

Navigation is among the trickiest things to build because it involves several complexities, such as grouping, design, and ease of feature discovery. Experts recommend tree testing to set a baseline for how “findable” things are in your app. A tree test is a closed test in which users try to find specific items in the app, so you can see how quickly they succeed. This article is a great piece to get you started on tree tests, and running one is a significant first step toward designing experiments to improve navigation.

Metrics to track

Just as with search experiments, here are a few metrics to keep tabs on:

- Item findability rate

- Time taken to find a feature or product

- Number of times users pick the right path on their first click

- Variability in time taken to find an item

By combining the performance of these metrics with qualitative research insights, you can determine an effective strategy for enhancing your app’s navigation.
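If you run a tree test, these four metrics can be computed from per-task results. Below is a minimal Python sketch; the `TreeTestTask` record is a hypothetical format, time is counted only for successful attempts, and variability is expressed as the sample standard deviation (one reasonable choice among several).

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class TreeTestTask:
    # Hypothetical record: one participant attempting one "find X" task.
    found: bool                # did the participant reach the correct item?
    first_click_correct: bool  # was their first click on the right path?
    seconds: float             # time spent on the task


def navigation_metrics(tasks: list[TreeTestTask]) -> dict:
    if not tasks:
        raise ValueError("need at least one task result")
    times = [t.seconds for t in tasks if t.found]  # time only for successful finds
    return {
        "findability_rate": len(times) / len(tasks),
        "mean_time_to_find_s": mean(times) if times else None,
        "first_click_successes": sum(t.first_click_correct for t in tasks),
        # Sample standard deviation of finding time; needs at least two successes.
        "time_variability_s": stdev(times) if len(times) > 1 else None,
    }


tasks = [
    TreeTestTask(True, True, 8.0),
    TreeTestTask(True, False, 14.0),
    TreeTestTask(False, False, 30.0),
    TreeTestTask(True, True, 11.0),
]
print(navigation_metrics(tasks))
```

For these sample results, the sketch reports a findability rate of 0.75, a mean time of 11 seconds, two first-click successes, and a variability of 3 seconds.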

Examples that you can try

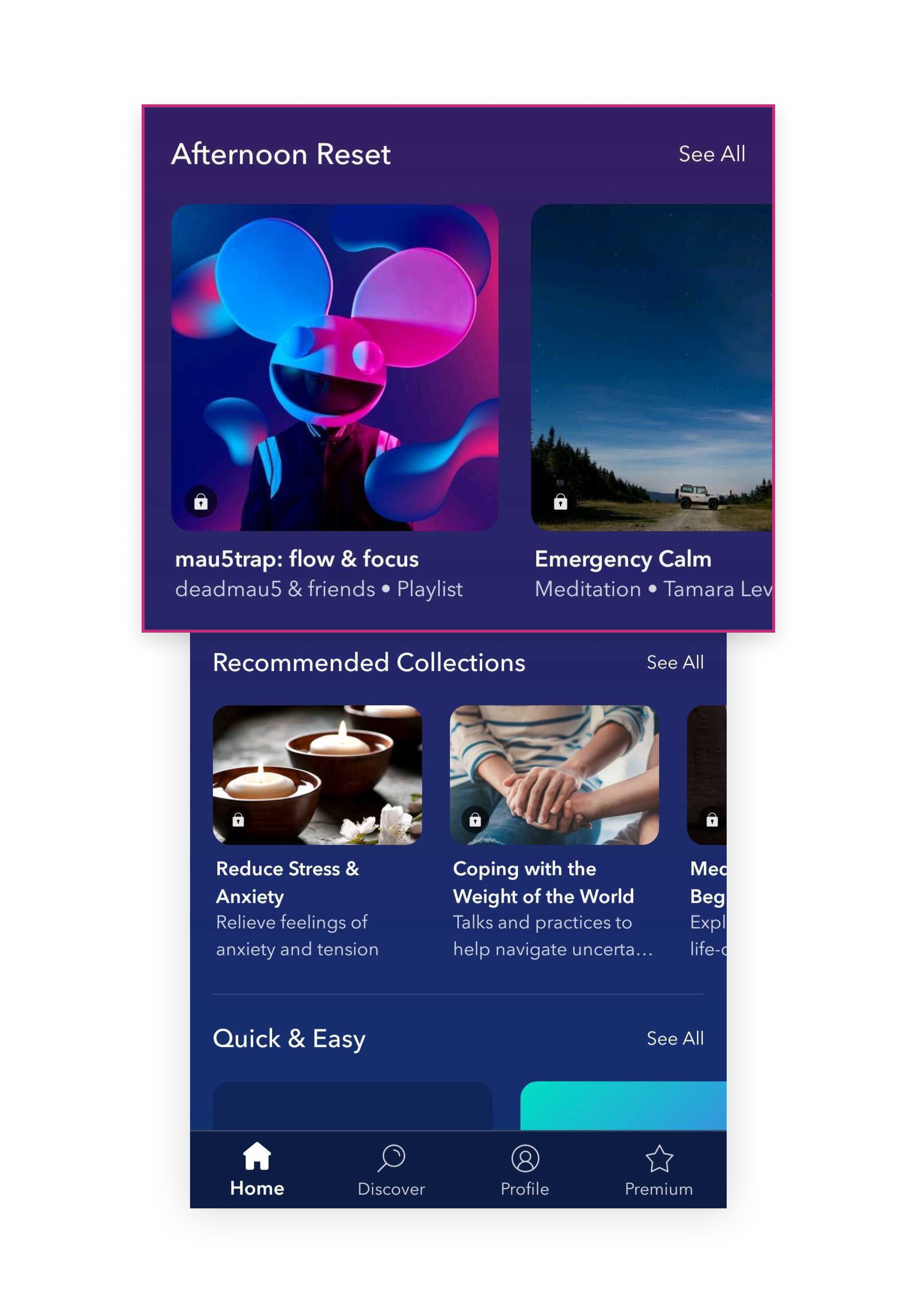

Example 1 – Card navigation

If you’re looking for navigation inspiration, one of my favorites is Calm, a meditation app. Its layout resembles a series of doors, each serving as an entry point. The cards are further grouped into categories like ‘Mental Fitness,’ ‘Recommended Collections,’ ‘Quick & Easy,’ and so on. The hamburger menu is the common alternative to this style, but its use has declined because items hidden behind it are hard to discover and get fewer clicks. Card-style navigation, by contrast, is gaining traction for its user-friendly design.

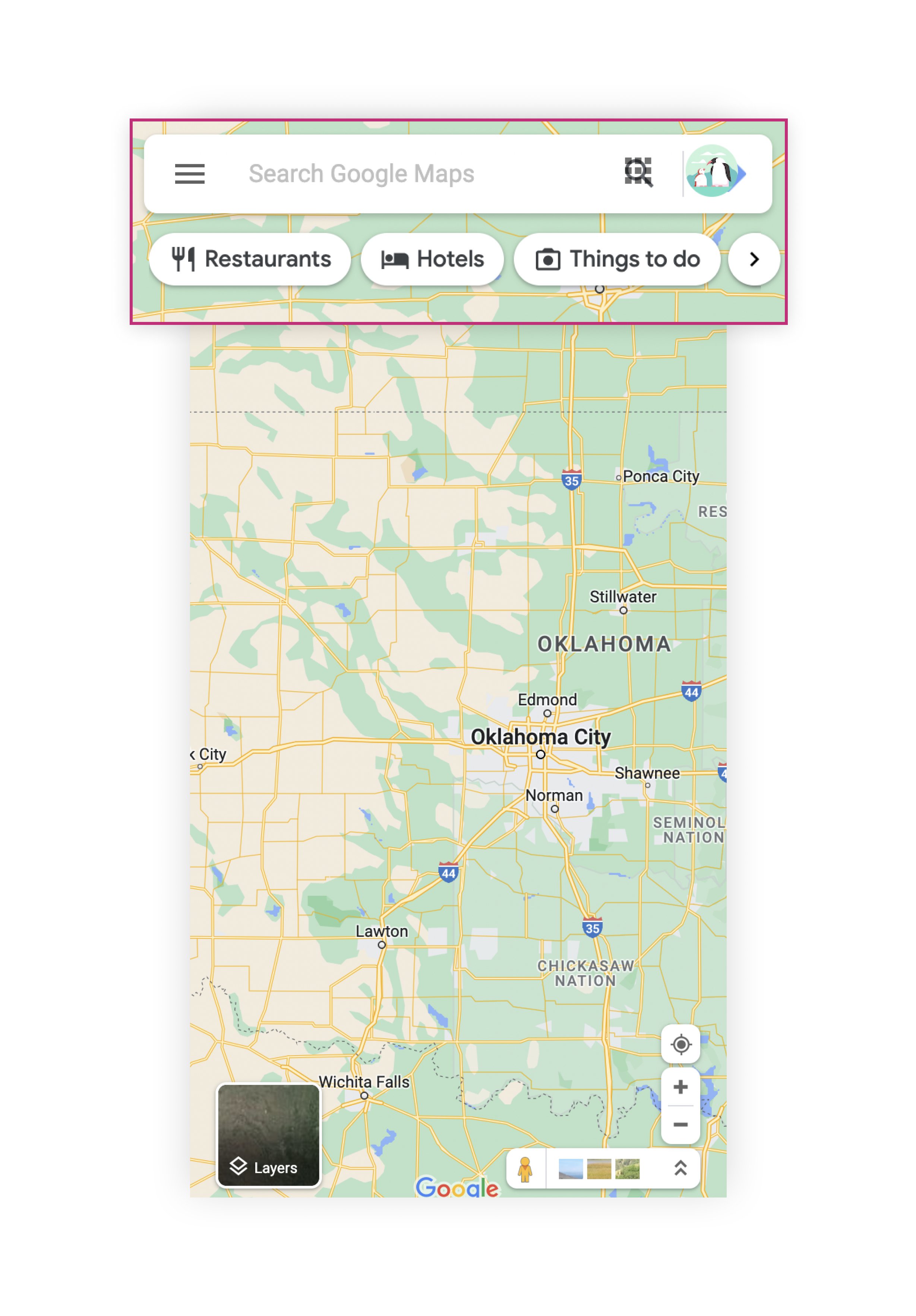

Example 2 – Simple tag navigation

Google Maps is another app with user-friendly navigation. Once you enter a location, you see options like directions, share, and save in the form of easily noticeable filter buttons. You also find filter buttons for commonly used facilities, which help you explore restaurants, shopping malls, ATMs, and parks near the entered location. This simple navigation helps people get the most out of the app.

Tests that can be devised

Improving the click-through rate of products from navigation is usually the main goal of eCommerce owners working on their app navigation experience. If that’s your goal too, here are two hypotheses to test:

Test 1 Idea

Hypothesis 1: Replacing the hamburger menu with card-based navigation tiles will increase conversion rates.

Control: The hamburger menu displays different product categories for users to explore.

Variant: Product categories shown in a card layout format.

Test 2 Idea

Hypothesis 2: Showing filter buttons for everyday use cases will help users find relevant information faster and use those features more often.

Control: The search bar sits at the top of the app, with no filter buttons.

Variant: The search bar sits at the top, with filter buttons for everyday use cases at the top and bottom of the screen.

If you want to run both tests in parallel, you can do so in VWO without worrying about skewed data, because VWO can run mutually exclusive tests on mobile apps. Add the campaigns to a mutually exclusive group, and a user who participates in one campaign in the group will not participate in any other, so there is no visitor overlap. This keeps the app experience consistent for each user, prevents one campaign’s results from being influenced by another, and attributes conversions to the right campaign.
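VWO handles this exclusivity for you. Purely to illustrate one common way such a guarantee can be achieved (not VWO's actual mechanism), the Python sketch below hashes each user ID with a group-specific salt into a stable bucket and maps each bucket range to exactly one campaign. The helper `assign_campaign`, the salt, and the campaign names are hypothetical.

```python
import hashlib


def assign_campaign(user_id: str, group_salt: str, campaigns: list[str]) -> str:
    """Hypothetical helper: put a user into exactly one campaign of an exclusive group.

    Hashing (salt + user_id) yields a stable bucket in [0, 10_000). The bucket range
    is split evenly across campaigns, so a user never lands in two campaigns of the
    group and gets the same campaign on every app launch.
    """
    digest = hashlib.sha256(f"{group_salt}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 10_000
    return campaigns[bucket * len(campaigns) // 10_000]


group = ["hamburger_vs_cards", "filter_buttons_test"]
print(assign_campaign("user-123", "nav-group-1", group))
```

Because assignment depends only on the user ID and salt, it needs no shared state between devices; changing the salt reshuffles users for a new group.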

3. Onboarding experiments

The onboarding experience is one of the parts of an app most subject to change, as it evolves with your products, categories, audience, goals, and more. While onboarding varies widely depending on what your product does, all good onboarding experiences have a few things in common. They:

- Reinforce the value proposition of the app

- Familiarize users with the features and benefits

- Encourage successful adoption of the app

- Minimize the time taken by customers to derive the value they want from using your app

So, if you want to improve your app onboarding experience, it’s a good idea to first find answers to a few pertinent questions:

- Is our app onboarding process too lengthy?

- When do people realize value during the onboarding process?

- Which steps in the onboarding process should be optional?

- Do users look for onboarding help and support?

Metrics to track

To support your goals and discussions effectively, it is essential to rely on data and let it steer your testing roadmap. Tracking basic metrics like the ones listed below can help:

- Onboarding completion rate

- Time to value (time between onboarding and getting the first value from your app)

- Activation rate (the share of new users who complete a key action), which reflects how new users perceive your app

- Number of onboarding support tickets raised in a given period
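Here is a minimal Python sketch of the first three metrics, computed from per-user timestamps. The `NewUser` record is hypothetical, "first value" stands for whatever key action you define (a finished lesson, a first order), and time to value is measured here from onboarding completion, which is an assumption; support tickets would come from your help-desk tool and are not modeled.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class NewUser:
    # Hypothetical per-user record; field names are illustrative.
    onboarding_completed: Optional[datetime]  # None if the user dropped off
    first_value_at: Optional[datetime]        # None if the key action never happened


def onboarding_metrics(users: list[NewUser]) -> dict:
    """Completion rate, activation rate, and median time to value (hours)."""
    if not users:
        raise ValueError("need at least one user")
    completed = [u for u in users if u.onboarding_completed is not None]
    activated = [u for u in users if u.first_value_at is not None]
    # Time to value: from onboarding completion to the first key action.
    ttv_hours = [
        (u.first_value_at - u.onboarding_completed).total_seconds() / 3600
        for u in completed
        if u.first_value_at is not None
    ]
    return {
        "completion_rate": len(completed) / len(users),
        "activation_rate": len(activated) / len(users),
        "median_time_to_value_h": median(ttv_hours) if ttv_hours else None,
    }


start = datetime(2024, 1, 1, 9, 0)
users = [
    NewUser(start + timedelta(minutes=5), start + timedelta(hours=2, minutes=5)),
    NewUser(start + timedelta(minutes=8), None),
    NewUser(None, None),
    NewUser(start + timedelta(minutes=3), start + timedelta(hours=6, minutes=3)),
]
print(onboarding_metrics(users))
```

For these sample users, completion is 0.75, activation is 0.5, and the median time to value is 4 hours.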

Examples that you can try

Let us discuss some examples that can inspire you to test and improve your app onboarding process.

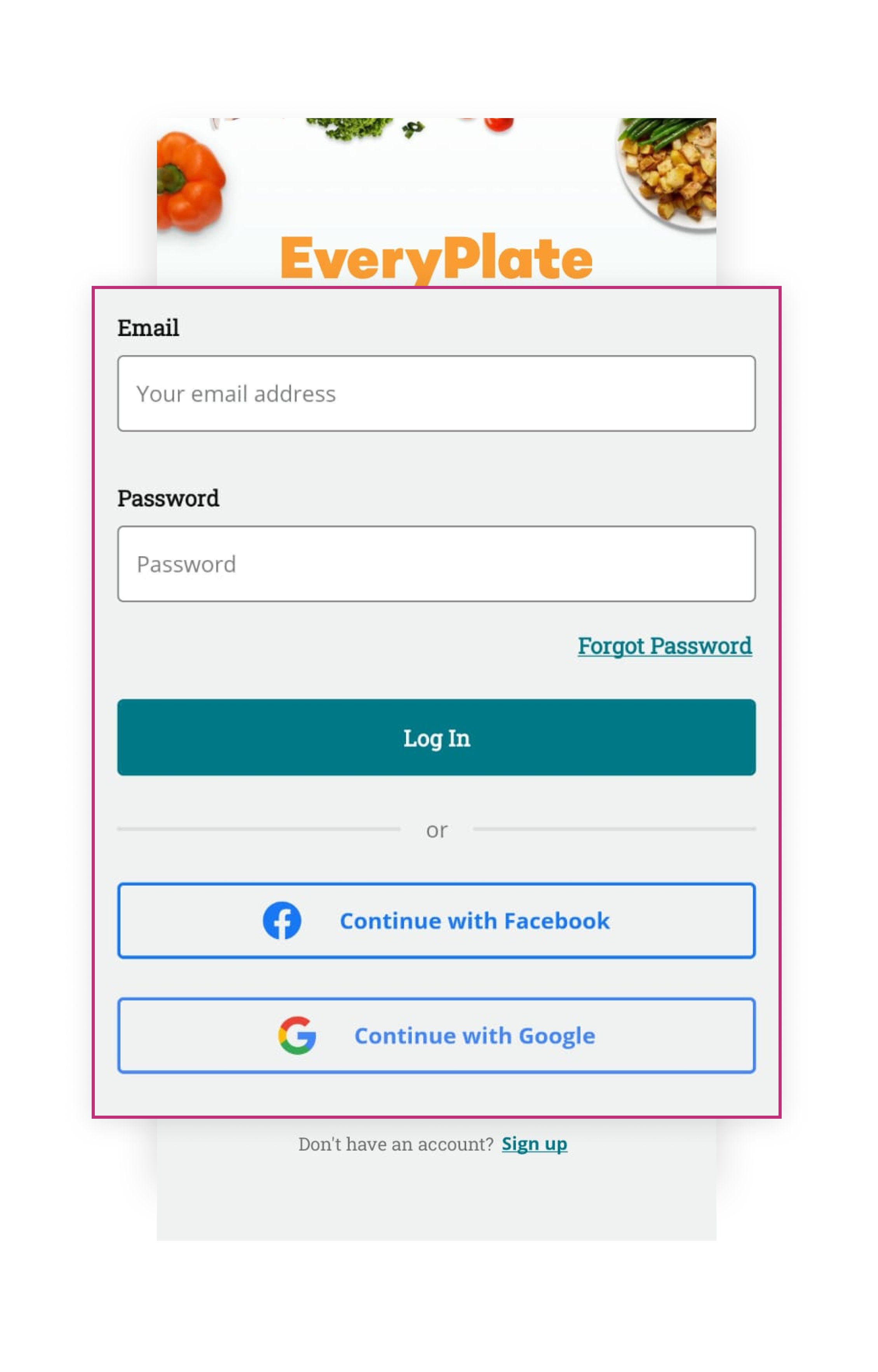

Example 1 – Multiple log-in options in the onboarding flow

Do you wonder whether you should offer email, social login, or both during user onboarding? Every Plate, a US-based meal delivery platform, lets users log in with either option.

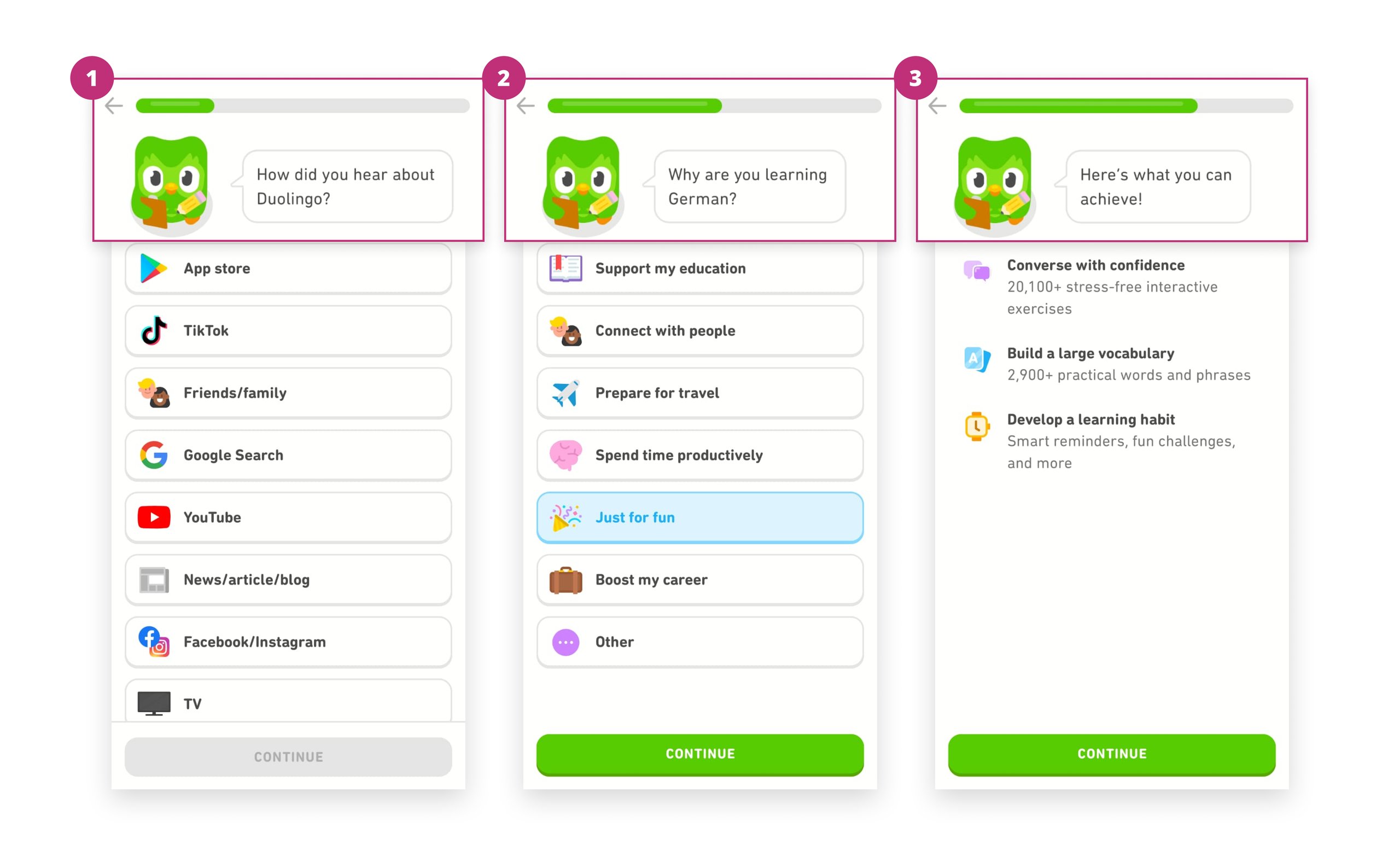

Example 2 – Multi-step onboarding flow

How many steps should you include in your app onboarding process? See how Duolingo has aced it, proving that a well-crafted multi-step onboarding process can succeed without losing the user’s interest. The language learning app displays a series of screens that ask users several questions, all of them relevant, during onboarding to improve their learning experience.

Tests that can be devised

Would you like to know how many people have completed the onboarding process or how many support tickets have been raised? You can keep track of these goals by trying out the following testing ideas.

Test 1 Idea

Hypothesis 1: Offering social logins alongside email can result in better conversion at step 1.

Control: Just email/phone login

Variant: Email login + Google + Facebook

Test 2 Idea

Hypothesis 2: Showing a progress bar during onboarding will nudge users to complete the onboarding process.

Control: A multi-step onboarding process presented without a progress bar.

Variant: A multi-step onboarding process with a progress bar at the top of every onboarding screen.

With a tool like VWO, you can customize the target audience for your tests based on various parameters such as technology, visitor type, time, etc. You can select a targeting segment from the Segment Gallery or create a custom one.

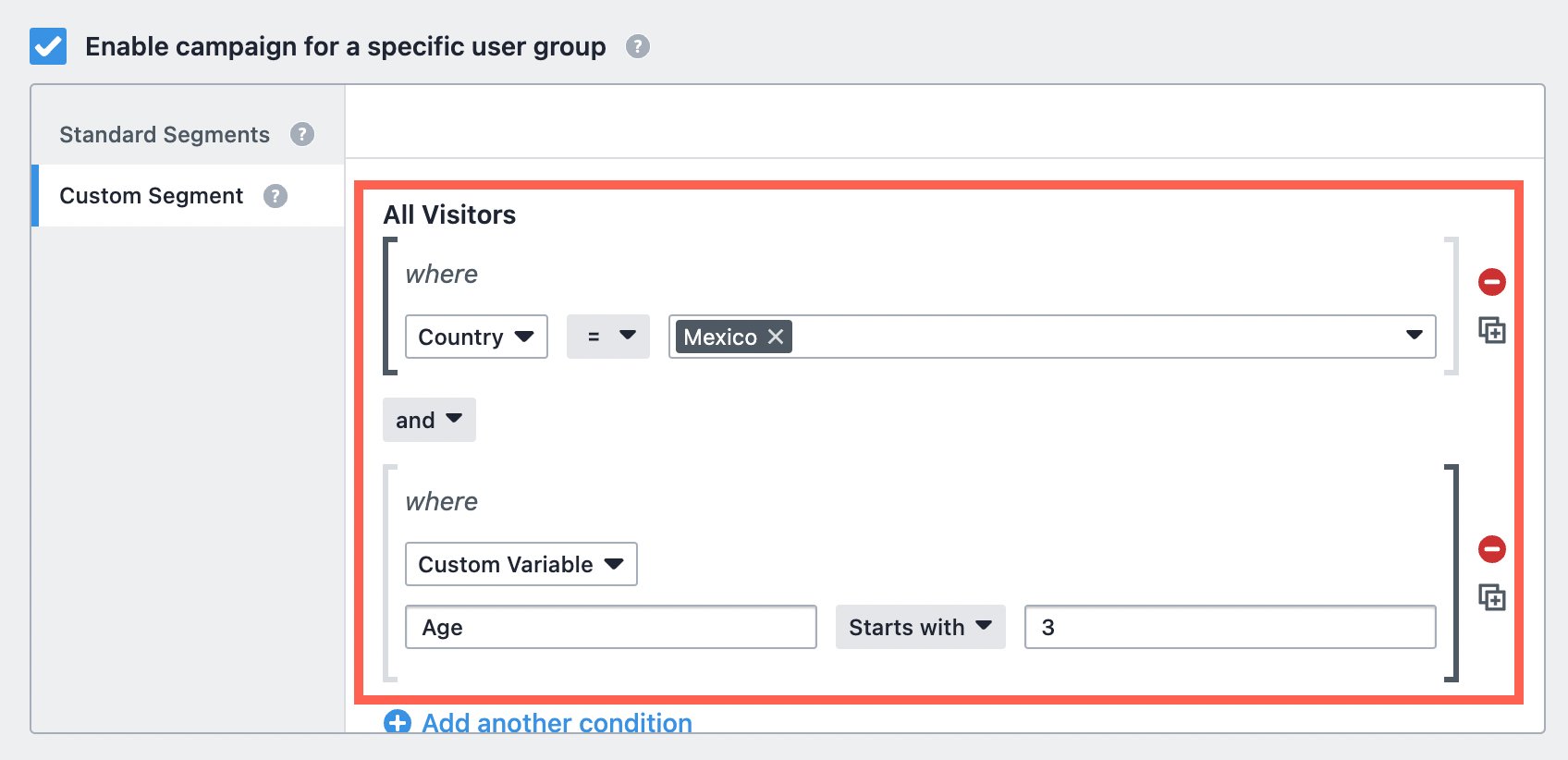

In the context of the previous test example, suppose user research indicates that users are reluctant to enter an email and password and prefer more flexible login options. Based on this research, you could first target users in a slower market, say Mexico, to see whether offering social login options gets a positive response and increases the number of users completing the first onboarding step. To do this, go to custom segments, add a condition, and select the corresponding attribute (Country) and value (Mexico).

You can also use ‘AND/OR’ operators for more precise audience selection. For example, suppose your learning app primarily targets mid-career professionals in Mexico. In that case, choose the custom variable option, enter ‘Age’ in the name field and an age group (such as 35-45) in the value field, then select the brackets on both sides and choose the ‘AND’ operator. Alternatively, if you want to include users who match either condition, use the ‘OR’ operator.
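In VWO you build these conditions in the segment UI, not in code. To make the AND/OR logic concrete, here is a hypothetical Python sketch of how such a rule evaluates against a user's attributes; the attribute names `country` and `age` mirror the example above, but the helper functions and data structure are illustrative only.

```python
from typing import Callable

User = dict  # e.g. {"country": "Mexico", "age": 38}
Condition = Callable[[User], bool]


def country_is(value: str) -> Condition:
    return lambda u: u.get("country") == value


def age_between(low: int, high: int) -> Condition:
    # Inclusive range, matching an age group such as 35-45.
    return lambda u: "age" in u and low <= u["age"] <= high


def all_of(*conds: Condition) -> Condition:  # the 'AND' operator
    return lambda u: all(c(u) for c in conds)


def any_of(*conds: Condition) -> Condition:  # the 'OR' operator
    return lambda u: any(c(u) for c in conds)


mid_career_mexico = all_of(country_is("Mexico"), age_between(35, 45))
mexico_or_mid_career = any_of(country_is("Mexico"), age_between(35, 45))

users = [
    {"country": "Mexico", "age": 38},
    {"country": "Mexico", "age": 24},
    {"country": "Chile", "age": 40},
]
print([mid_career_mexico(u) for u in users])     # [True, False, False]
print([mexico_or_mid_career(u) for u in users])  # [True, True, True]
```

AND narrows the audience to users who satisfy every condition, while OR widens it to anyone who satisfies at least one.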

Here’s a short article on custom segments if you want to learn more.

4. Pricing experiments

Offering discounts or coupons is often necessary to boost sales and attract customers to your app. But how can you be sure your pricing strategy is helping your business grow?

Setting prices too high may drive customers away, while setting them too low could mean missing out on revenue.

Metrics to track

To determine if your pricing is effective, analyze the following revenue-related metrics for your app:

- Lifetime value – Revenue generated per user from the time they installed your app

- Purchase frequency – The average number of purchases your users make in a given period

- Cost per install – The amount paid to acquire each new user through paid ads

These metrics can be configured in VWO. If the numbers fall short of your expectations, it may be time to consider A/B testing your pricing plans. Doing so can help you maximize revenue while limiting the risk of losses.
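The arithmetic behind these metrics is simple; the Python sketch below shows one way to compute them from aggregate numbers. All inputs are made-up figures, the function name is hypothetical, "LTV" here is the simple historical average (revenue so far divided by users) rather than a predictive model, and the LTV-to-CPI ratio is a common rule-of-thumb check added for illustration.

```python
def pricing_metrics(
    total_revenue: float, total_users: int, purchases: int,
    period_days: int, ad_spend: float, paid_installs: int,
) -> dict:
    """Hypothetical helper computing simple revenue metrics from aggregates."""
    ltv = total_revenue / total_users     # average revenue per user since install
    cpi = ad_spend / paid_installs        # cost of each user acquired via paid ads
    return {
        "ltv": ltv,
        # Average purchases per user, normalized to a 30-day window.
        "purchase_frequency_30d": purchases / total_users / period_days * 30,
        "cost_per_install": cpi,
        # Above 1 means an average user earns back what it cost to acquire them.
        "ltv_to_cpi": ltv / cpi,
    }


m = pricing_metrics(total_revenue=48_000, total_users=4_000, purchases=6_000,
                    period_days=90, ad_spend=9_000, paid_installs=3_000)
print(m)
```

With these figures, LTV is 12, each paid install costs 3, and users make about 0.5 purchases per 30 days.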

Examples that you can try

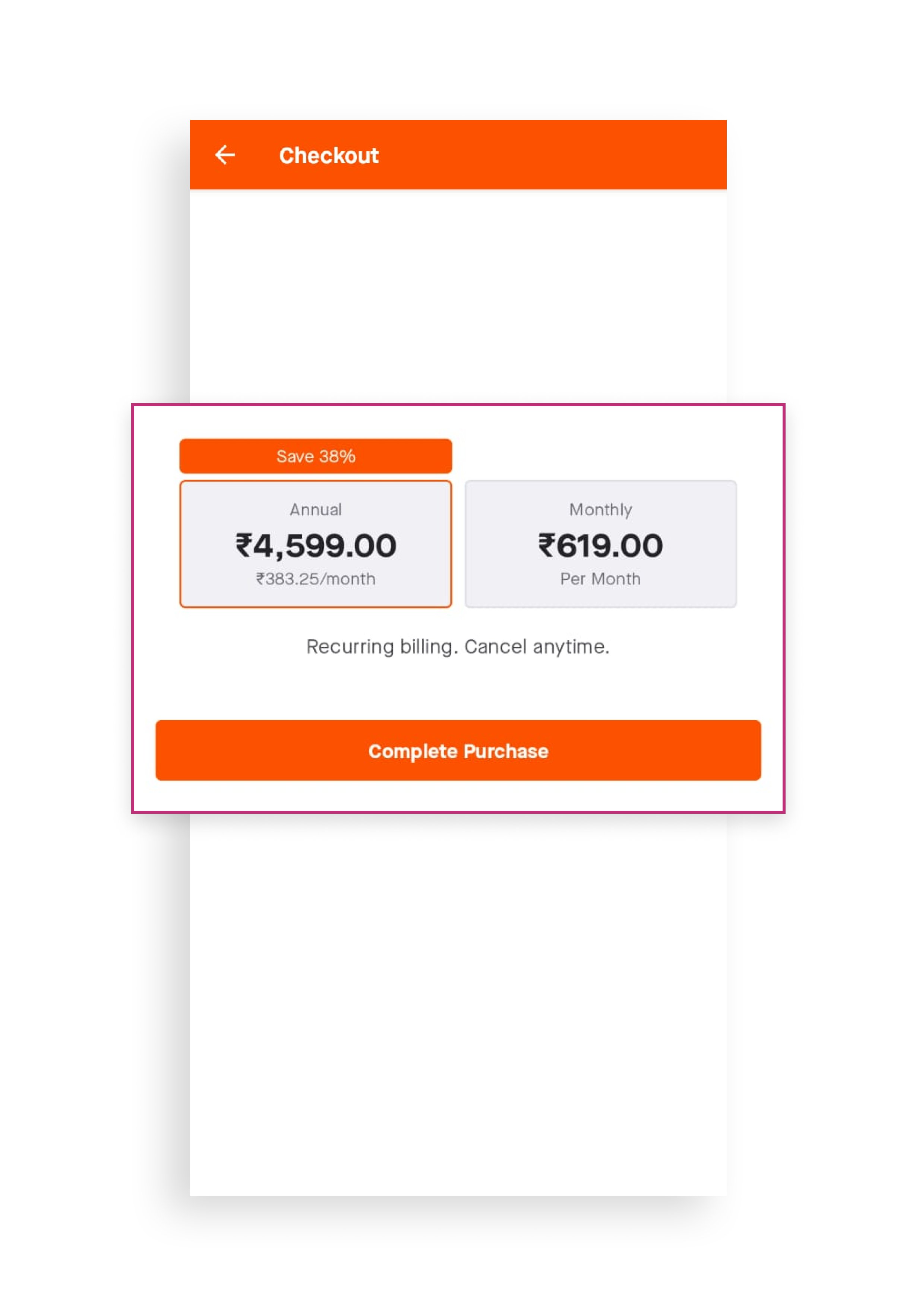

Example 1 – Giving a discounted annual pricing to subscribers

Strava, a popular fitness app, requires users to pay a monthly or annual subscription fee to access its advanced features. Customers can choose between monthly and yearly billing, with the potential savings shown for the latter. This discount may nudge users toward the annual plan.

Example 2 – Displaying dynamic pricing with a time limit

Heads Up! is a popular game where players hold a mobile device up to their forehead and try to guess the word or phrase that appears on the screen based on their friends’ clues. Notice how the original price is crossed out, and a time limit is displayed to create a sense of urgency and encourage users to act quickly.

You can create effective app tests based on these pricing display methods.

Tests that can be devised

Let’s say you want to increase the number of transactions/paid subscriptions on your app. The following are the test ideas you can experiment with.

Test 1 Idea

Hypothesis 1: Showing potential savings for a subscription plan will encourage users to opt for the longer one.

Control: Monthly and annual subscription plans.

Variation: Monthly and annual subscription plans, each with its potential savings mentioned.
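The "potential savings" shown in the variation is simple arithmetic. Here is a minimal Python sketch; the helper name and the prices are hypothetical, not taken from any real app.

```python
def annual_savings(monthly_price: float, annual_price: float) -> tuple[float, float]:
    """Hypothetical helper: (amount saved per year, percent saved) by paying annually."""
    full_year_on_monthly = monthly_price * 12
    saved = full_year_on_monthly - annual_price
    return saved, 100 * saved / full_year_on_monthly


# Hypothetical prices, not taken from any real app.
saved, pct = annual_savings(monthly_price=10.0, annual_price=90.0)
print(f"Save ${saved:.2f} ({pct:.0f}%) with the annual plan")
```

Whether the label shows the absolute amount or the percentage is itself a reasonable thing to test.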

Test 2 Idea

Hypothesis 2: Psychological tactics like urgency and striking out the original price can increase the number of users opting for this discount offer.

Control: A simple discount banner with a new price written out.

Variation: A vibrant discount banner with the original price struck out, the discounted price displayed, and a timer indicating the availability of the offer.

Let’s explore a more advanced approach, the multi-armed bandit (MAB), using one of the experiments discussed earlier: the discount test inspired by the Heads Up example. The multi-armed bandit approach is a bit more complex than the A/B tests discussed so far and uses a different methodology.

Suppose you have a time-sensitive discount offer with multiple variations to test, and you need to identify the best-performing variation as quickly as possible to minimize opportunity loss. A/B tests focus on determining the best variation; MAB focuses on reaching a better variation faster by progressively sending more traffic to the variations that perform well while the test is still running. When the optimization window is small, MAB is better suited to minimizing opportunity loss in pricing, discounts, subscriptions, and so on. In such cases, visitors who are served the second-best variation and don’t convert represent lost revenue, since they might have converted had they seen the winning variation. You can learn more about MAB on VWO here.
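The exact bandit algorithm VWO uses isn't specified here. Thompson sampling is one widely used MAB strategy, and the Python sketch below simulates it: for each visitor, it draws a plausible conversion rate for every variation from its Beta posterior and serves the variation with the highest draw, so traffic drifts toward the better discount banner as evidence accumulates. The "true" conversion rates exist only to simulate visitor behavior and are assumptions.

```python
import random


def thompson_sampling(true_rates: list[float], visitors: int, seed: int = 7) -> list[int]:
    """Simulate Thompson sampling; return the number of visitors served per variation.

    true_rates are unknown in a real test and are used here only to simulate
    whether each visitor converts.
    """
    rng = random.Random(seed)
    k = len(true_rates)
    successes = [0] * k
    failures = [0] * k
    served = [0] * k
    for _ in range(visitors):
        # Sample a conversion rate for each variation from its Beta(1+s, 1+f) posterior.
        samples = [rng.betavariate(1 + successes[i], 1 + failures[i]) for i in range(k)]
        arm = max(range(k), key=lambda i: samples[i])
        served[arm] += 1
        # Simulate whether this visitor converts on the chosen variation.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return served


# Hypothetical banners: plain discount, struck-out price, struck-out price + timer.
print(thompson_sampling([0.03, 0.05, 0.09], visitors=10_000))
```

Compared with a fixed 33/33/33 split, most visitors end up on the strongest banner, which is exactly how a bandit reduces the opportunity loss described above.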

Accelerate your app success with awesome testing ideas!

We hope you found the A/B testing ideas in this article helpful. However, great ideas are only beneficial when implemented correctly. So, if you’re looking for a platform that offers lightweight Android/iOS SDKs, 24/7 tech support for multiple languages, the ability to select custom audiences for tests, and reliable, real-time reports, VWO Mobile App Testing should be your top choice. And with the full release of Mobile Insights approaching, you can gain a deeper understanding of user behavior, improve conversion metrics, and, most importantly, enhance the overall user experience of your app. Request a free demo to see how VWO improves your mobile app users’ journey.
