What is Sample Ratio Mismatch?

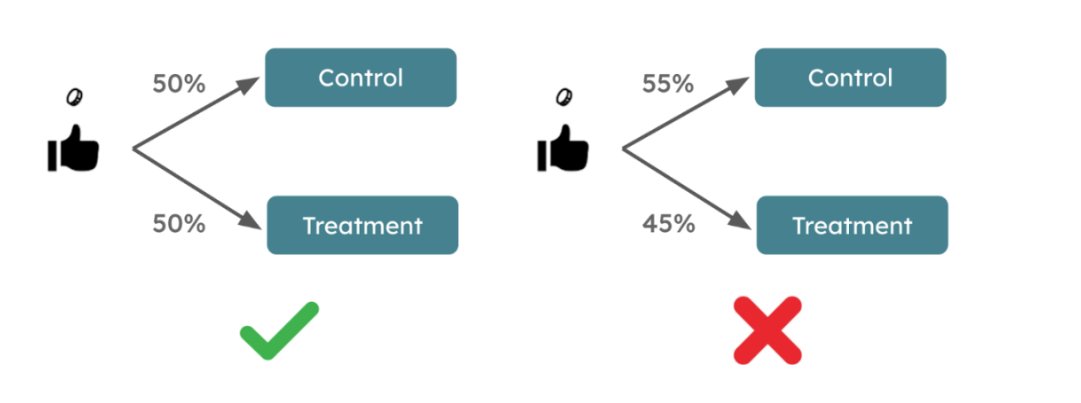

Sample Ratio Mismatch (SRM) in the context of an A/B test refers to a mismatch between the planned and the observed distribution of users across the control and variation groups. It happens when the intended randomization fails, so the groups end up with sample sizes that differ from the configured split.

For example, you assign 50% of users to the control group and 50% to the variation group for an A/B test. However, due to some issue, the actual distribution places 45% of users in the control group and 55% in the variation group. This is a case of SRM, and it affects the accuracy and reliability of your test results.

Another scenario is when the configured allocation is, say, 60:40 in the A/B test, but the observed allocation turns out to be 70:30. Any deviation from the planned distribution that is too large to be explained by chance is considered an SRM issue.

What causes SRM issues?

There may be several reasons why SRM creeps into your A/B test. Let’s look at some of the classic ones:

User behavior

If users delete or block cookies, it can disrupt the tracking and randomization process, leading to a sample ratio mismatch. Users who regularly clear their cookies may be counted as new users each time they return, which can lead to their overrepresentation in one group.

Technical bugs

Technical issues can also cause an SRM. Consider a test in which JavaScript code makes one variation crash. Some visitors sent to the crashing variation may not be recorded properly, causing SRM.

Geographic or time differences

Geographic or time differences can influence user behaviors, affecting the distribution of users across groups in the A/B test. So, for example, consider an online retail website with a global user base. If your test does not account for time zone differences, it may unwittingly include a significant number of users from a specific region in one group during certain hours. This could result in an SRM in the segment of users coming from that particular location.

Browser or device biases

When specific browsers or devices are overrepresented due to biases in the randomization process, the integrity of the test can be compromised. For example, suppose you run an A/B test on your SaaS website for mobile, but the variation loads slowly on mobile devices, so fewer mobile users are recorded in it. Without careful randomization, one group ends up with a higher proportion of users due to device or browser issues, skewing the test results.

Dogfooding

Employees, being internal users, are exposed to the latest features or tests by default. As they interact with the product more frequently than external users, their inclusion in the treatment group significantly skews the metrics. This inadvertent inclusion of one’s own company’s employees in a test, also known as dogfooding, can distort test results and lead to an overestimation of the impact of a test.

When is SRM a problem and when is it not?

Put simply, SRM arises when one version of a test receives a significantly different number of visitors than originally expected. A classic A/B test has a 50/50 traffic split between two variations.

Suppose that toward the end of the test, the control has 5,000 visitors and the variation has 4,982 visitors. Would you call this a massive problem? Not really.

In the final stage of an A/B test, a slight deviation in traffic allocation can happen due to the inherent randomness in allocation. So, if the observed split is close to the planned one and a statistical check (for example, at a 95%-99% confidence level) does not flag the difference, you need not worry about a slight difference in sample ratios.

But SRM becomes a notable issue when the difference in traffic is substantial, such as 5,000 visitors directed to one version and 2,100 to the other.

That’s why staying alert and keeping an eye on visitor counts is so important if you want to obtain accurate test results.
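To get a feel for how much drift the inherent randomness can explain, here is a minimal Python sketch. It assumes each visitor is assigned independently with the planned probability, so the control count follows a binomial distribution. The function name `split_z_score` is ours, chosen for illustration, and is not part of any testing tool.

```python
import math

def split_z_score(control: int, variation: int, control_share: float = 0.5) -> float:
    """Return how many standard deviations the control count sits
    from the count expected under the planned split."""
    total = control + variation
    expected_control = total * control_share
    # Standard deviation of a binomial count with n = total, p = control_share.
    std_dev = math.sqrt(total * control_share * (1 - control_share))
    return (control - expected_control) / std_dev

# The two scenarios described above, both planned as 50/50 splits.
small_gap = split_z_score(5000, 4982)
large_gap = split_z_score(5000, 2100)

print(f"5,000 vs 4,982: {small_gap:.2f} standard deviations")
print(f"5,000 vs 2,100: {large_gap:.2f} standard deviations")
```

Under these assumptions, the first split sits well within one standard deviation of the plan, which is ordinary noise. The second is more than 30 standard deviations away, which chance alone cannot plausibly explain.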


How to check for SRM?

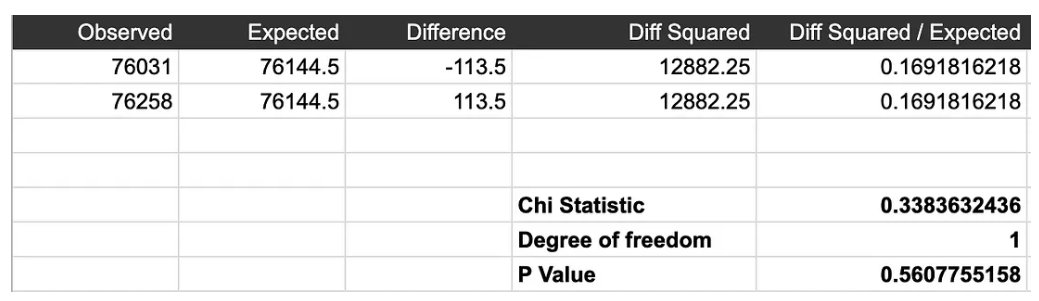

SRM is like a symptom that reveals an underlying issue in an A/B test. Just as a doctor recommends tests for a patient, a chi-square test serves as a diagnostic tool for confirming SRM: it compares the observed visitor counts with the counts expected under the planned split. A p-value below 0.05 indicates that the observed split is unlikely to be due to chance alone, which points to SRM in the test. In some cases, the differences in ratios are so pronounced that no mathematical formula is needed to identify the problem.
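The chi-square check can be sketched in a few lines of Python. This is a simplified illustration for a test with exactly two groups, not the implementation used by any particular testing tool. The function name `srm_p_value` and the 7,000 vs 3,000 counts are hypothetical. The 0.05 threshold is the one mentioned above.

```python
import math

def srm_p_value(control: int, variation: int, control_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for a two-group split.

    control_share is the planned share of traffic for the control group.
    """
    total = control + variation
    expected_control = total * control_share
    expected_variation = total - expected_control
    chi_square = (
        (control - expected_control) ** 2 / expected_control
        + (variation - expected_variation) ** 2 / expected_variation
    )
    # Two groups give 1 degree of freedom, where the chi-square tail
    # probability has a closed form via the complementary error function.
    return math.erfc(math.sqrt(chi_square / 2))

ALPHA = 0.05  # threshold taken from the text above

checks = {
    "50/50 plan, 5,000 vs 4,982": srm_p_value(5000, 4982),
    "50/50 plan, 5,000 vs 2,100": srm_p_value(5000, 2100),
    # Hypothetical counts for a 60:40 plan that came out as 70:30.
    "60/40 plan, 7,000 vs 3,000": srm_p_value(7000, 3000, control_share=0.6),
}

for label, p_value in checks.items():
    verdict = "possible SRM" if p_value < ALPHA else "no SRM detected"
    print(f"{label}: p = {p_value:.3g} -> {verdict}")
```

For tests with more than two groups, the same statistic applies with more degrees of freedom. In that case, a statistics library is a safer choice than the closed form used here.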

Where to check for SRM?

Once you’re sure there’s an SRM in your test (which happens in about 6% of A/B tests), you need to know where to look for its cause. Microsoft’s report highlights the stages where SRM can occur:

Experiment Assignment

Issues could occur if users are placed in the wrong groups, the randomization function malfunctions, or user IDs are corrupted.

Experiment Execution

Variations might start at different times, or there may be delays in determining which groups are part of the experiment, either of which can cause discrepancies.

Experiment Log Processing

Challenges may arise from automated bot filtering that mistakenly removes real users, or from delays in the arrival of log information.

Experiment Analysis

Errors may arise when variations are triggered or started incorrectly.

Experiment Interference

The experiment might face security threats or interference from other ongoing experiments.

What is the role of segment analysis?

Sometimes you can find the SRM hidden in one of your visitor segments in the A/B test. Let’s understand with an example.

Let’s say you’re testing two different discount banners on your grocery website. The link to one variation has been circulated through newsletters, leading to more traffic for that variation and less for the control and the other variation. When you delve into segments, you notice SRM in the user segment from the email source.

You can exclude this segment and analyze the results from the remaining, correctly allocated users. Or, if you think the segment is too important to let go of, consider starting the test anew. We advise you to discuss this with your stakeholders before making a decision.

Therefore, segment analysis helps you make important optimization decisions, a task not possible with just a chi-square test. While the chi-square test identifies SRM, it doesn’t really tell you why it happened.
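A segment-level check can be sketched by running the same chi-square calculation on each traffic source separately. The example below is simplified to one control and one variation, and the segment names and visitor counts are made up for illustration. Keep in mind that checking many segments raises the chance of a false alarm, so treat a flagged segment as a lead to investigate rather than a verdict.

```python
import math

def srm_p_value(control: int, variation: int, control_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for a two-group split (1 degree of freedom)."""
    total = control + variation
    expected_control = total * control_share
    expected_variation = total - expected_control
    chi_square = (
        (control - expected_control) ** 2 / expected_control
        + (variation - expected_variation) ** 2 / expected_variation
    )
    return math.erfc(math.sqrt(chi_square / 2))

def flag_segments(segments: dict, control_share: float = 0.5, alpha: float = 0.05) -> list:
    """Return the names of segments whose split deviates significantly from the plan."""
    flagged = []
    for name, (control, variation) in segments.items():
        if srm_p_value(control, variation, control_share) < alpha:
            flagged.append(name)
    return flagged

# Hypothetical visitor counts per traffic source: (control, variation).
visitors_by_source = {
    "organic": (2510, 2490),
    "paid": (1495, 1505),
    "email": (400, 900),  # the newsletter link pointed at the variation
}

print(flag_segments(visitors_by_source))  # ['email']
```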

Can SRM affect both Frequentist and Bayesian statistical engines in A/B testing?

Yes. Regardless of the statistical approach used, SRM can jeopardize the validity of any A/B test. Addressing and correcting for SRM is crucial to ensure the reliability of the test results, whether you are using a Frequentist or a Bayesian statistical engine.