A false positive occurs when a test or experiment wrongly reports a variant as a winner or a loser even though it has no real impact on the target metric. It is like a smoke alarm going off when there is no fire: the signal says something happened, but nothing did. In testing and experimentation, false positives can lead to mistaken conclusions and poor decisions.

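Please note: In statistical terms, a false positive is a Type I error, which means rejecting the null hypothesis of "no difference" when it is actually true. In A/B testing, this is how false positives show up.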

What is a false positive rate?

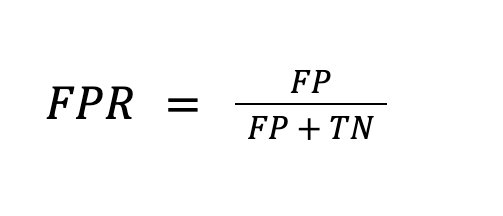

The false positive rate (FPR) is a critical metric that reveals how frequently a phenomenon is mistakenly identified as statistically significant when it’s not. This measure is vital as it indicates the reliability of a test or outcome. A lower false positive rate signifies higher accuracy and trustworthiness of the test.

Where:

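- FP represents the number of false positives: tests in which the variant had no real impact but was nonetheless reported as a winner (or loser).

- TN represents the number of true negatives: tests in which the variant had no real impact and was correctly reported as showing no significant difference.

Together, FP + TN is the total number of tests in which the variant had no real impact.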
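As a minimal sketch of the formula, the Python functions below count FP and TN from paired outcomes and compute the rate. The function names and the encoding (one boolean for "the variant really had an effect", one for "the test flagged it as a winner") are illustrative assumptions, not part of any particular testing tool.

```python
def count_fp_tn(actual: list[bool], predicted: list[bool]) -> tuple[int, int]:
    """Count false positives and true negatives from paired outcomes.

    actual[i]: True if case i really has an effect (hypothetical ground truth).
    predicted[i]: True if the test flagged case i as positive / a winner.
    Cases with a real effect (true positives, false negatives) are ignored,
    because the FPR only looks at cases with no real effect.
    """
    fp = sum(1 for a, p in zip(actual, predicted) if not a and p)
    tn = sum(1 for a, p in zip(actual, predicted) if not a and not p)
    return fp, tn


def false_positive_rate(fp: int, tn: int) -> float:
    """FPR = FP / (FP + TN): share of no-effect cases wrongly flagged."""
    negatives = fp + tn
    if negatives == 0:
        raise ValueError("FPR is undefined when there are no actual negatives")
    return fp / negatives


# Hypothetical example: 10 tests where the variant had no real effect,
# 1 of which was wrongly declared a winner.
actual = [False] * 10
predicted = [True] + [False] * 9
fp, tn = count_fp_tn(actual, predicted)
print(fp, tn, false_positive_rate(fp, tn))  # 1 9 0.1
```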

Example of false positive rates

Imagine a newly developed diagnostic test for a rare genetic disorder. To gauge its accuracy, 1,000 individuals from diverse demographics and geographical areas who are known not to have the disorder undergo the test. The test incorrectly identifies 20 of them as having the disorder, which gives a false positive rate of 2% (20 out of 1,000). Although these individuals are healthy, the test wrongly flags them. Evaluations like this give healthcare professionals valuable insight into how reliable and effective a test is likely to be in real-world use.
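Written out with the FPR formula, treating the 20 wrongly flagged people as false positives and the remaining 980 healthy people as true negatives:

$$\text{FPR} = \frac{FP}{FP + TN} = \frac{20}{20 + 980} = \frac{20}{1000} = 0.02 = 2\%$$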

Why is evaluating the false positive rate important?

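How useful a statistical model or test is depends heavily on its false positive rate. That rate has to be balanced against the false negative rate, because making a test stricter to cut false positives usually means it misses more real effects.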

In medical diagnostics, a high false positive rate can erroneously categorize healthy individuals as having a disease.

Within finance, false positives manifest in fraud detection systems and credit scoring models. Elevated false positive rates can result in legitimate transactions being flagged as fraudulent.

Cybersecurity tools are susceptible to false positives, which can inundate security analysts with alerts, leading to alert fatigue. Excessive false alerts may cause analysts to overlook genuine threats.

False positives within quality control processes may lead to the rejection of acceptable products, escalating manufacturing costs and diminishing efficiency.

The consequences of false positives differ across these domains, depending on the context and on how costly an incorrect result is. Broadly, a high false positive rate can waste resources, reduce efficiency, undermine trust in systems or models, and cause real harm to individuals or organizations.

False positive rate in A/B testing

The false positive rate poses a significant risk in A/B testing, where businesses compare different versions of a website or app to determine which performs better. When the false positive rate is high, tests are more likely to declare a winner that has no real effect, and teams may roll out changes that do not actually improve their metrics.

To improve the reliability of A/B testing while minimizing false positives, it's prudent to lower the false positive rate threshold (the significance level). It is typically set at 5% in A/B testing; reducing it to 1% cuts the expected share of false winners among tests with no real effect from about 1 in 20 to about 1 in 100. The trade-off is that a stricter threshold needs more data, so tests take longer to reach statistical significance. Platforms like VWO use the Probability to Beat the Baseline (PTBB) to control the false positive rate: if the PTBB threshold is 99%, the FPR is 1%.
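To see what the threshold means in practice, here is a minimal simulation sketch. It assumes a classic frequentist two-proportion z-test (not VWO's Bayesian PTBB method) and hypothetical traffic numbers: 500 visitors per arm and a 10% conversion rate. Every simulated test is an A/A test, so both arms share the same true rate and any "significant" result is, by definition, a false positive.

```python
import math
import random


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all, so nothing to detect
    z = (conv_b / n_b - conv_a / n_a) / se
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_p_values(num_tests: int, visitors: int, rate: float, seed: int = 42) -> list[float]:
    """Run A/A tests in which both arms have the same true conversion rate."""
    rng = random.Random(seed)
    p_values = []
    for _ in range(num_tests):
        conv_a = sum(rng.random() < rate for _ in range(visitors))
        conv_b = sum(rng.random() < rate for _ in range(visitors))
        p_values.append(two_proportion_p_value(conv_a, visitors, conv_b, visitors))
    return p_values


def observed_fpr(p_values: list[float], alpha: float) -> float:
    """Share of no-effect tests wrongly declared significant at level alpha."""
    return sum(p < alpha for p in p_values) / len(p_values)


# Hypothetical settings: 1,000 A/A tests, 500 visitors per arm, 10% conversion.
p_values = simulate_aa_p_values(num_tests=1000, visitors=500, rate=0.10)
fpr_05 = observed_fpr(p_values, alpha=0.05)
fpr_01 = observed_fpr(p_values, alpha=0.01)
print(f"alpha=0.05 -> observed FPR {fpr_05:.3f}")
print(f"alpha=0.01 -> observed FPR {fpr_01:.3f}")
```

With these settings the observed FPR should land near 5% at alpha = 0.05 and near 1% at alpha = 0.01; the exact figures depend on the random seed. Because both thresholds are applied to the same p-values, the stricter one can never produce more false positives than the looser one.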

Conclusion

In conclusion, the false positive rate is a critical metric that impacts various domains, including medical diagnostics, finance, cybersecurity, and quality control processes. High false positive rates can lead to erroneous decisions, squander resources, and undermine trust in systems or models.

Platforms like VWO use PTBB to keep false positive rates in check. If you want to know more about it, grab a 30-day free trial of the VWO platform to explore all its capabilities.