Vendio Tested A Counterintuitive Idea To Increase Sign-ups Using VWO

About Vendio

Vendio, an Alibaba.com company, provides free e-commerce stores to merchants. It runs dedicated landing pages for free store sign-ups and used VWO to test them.

Goals

The main objective was to increase sign-ups on one of the special landing pages.

Tests run

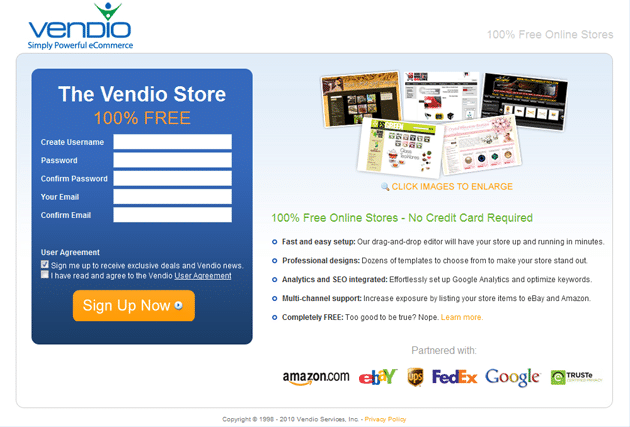

Their original landing page (control) combined marketing content and registration fields in an attempt to reduce the number of clicks for a successful registration.

This is how it looked:

Original Landing Page (with the embedded sign-up form)

Note that it uses the so-called “best practice” of embedding the sign-up form in the landing page itself. Vendio had used this layout for a long time, on the assumption that reducing the number of clicks needed to register would increase the conversion rate. Although the page was performing relatively well, they wanted to make sure the embedded registration fields weren’t too aggressive or limiting in any way.

So, the variation they tested had similar imagery and content and slightly different styling, but it didn’t include any registration fields. Clicking the Signup Now button took the visitor to a separate page with the sign-up form.

In other words, Vendio added an extra step in their conversion funnel. Not a smart move, huh?

This is how the variation looked:

Variation – 60% Increase in Sign-Ups

Conclusion

Guess what? The page without the registration fields performed better – much better – to the tune of a 60% increase in conversions!
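For readers wondering how a figure like "60% increase" is usually derived, here is a minimal sketch of the arithmetic. The visitor and sign-up counts below are hypothetical, chosen only so the result matches the reported lift; Vendio's actual traffic numbers are not given in this case study, and the function names are illustrative rather than part of any VWO API.

```python
def conversion_rate(signups: int, visitors: int) -> float:
    """Share of visitors who completed a sign-up."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return signups / visitors


def relative_lift(control_rate: float, variation_rate: float) -> float:
    """Relative change of the variation's rate over the control's rate."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variation_rate - control_rate) / control_rate


# Hypothetical counts for illustration only (not Vendio's real data).
control = conversion_rate(signups=50, visitors=1000)    # 5% of visitors sign up
variation = conversion_rate(signups=80, visitors=1000)  # 8% of visitors sign up

lift = relative_lift(control, variation)
print(f"Relative lift: {lift:.0%}")
```

Note that the lift is relative to the control's rate: in this made-up example, going from 5% to 8% is a 3 percentage point gain but a 60% relative increase.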

Here is what Vendio had to say about the results:

Best practices are NOT always true! It’s still hard to believe, but the numbers don’t lie.

If you make changes to your website or landing pages without A/B testing them, you are flying blind. Another lesson here is that it is worth testing radically different ideas, even ones that at first glance may not seem so smart (like removing the sign-up form from the landing page).

For their part, Vendio gave VWO a positive testimonial:

VWO was extraordinarily valuable. It was far easier to use than other solutions (some of which we couldn’t even get to work properly after implementation). Without VWO we would still be missing out on all those registrations, and we are continuing to see great and other surprising results with VWO.

A/B testing can have a real impact on lead generation. Take a free trial with VWO and check this out for yourself!

Location

New York, NY (US)

Industry

Software

Impact

60% increase in sign-ups