How VWO Helped VisitNorway.com Improve Click-Through Rates on Its Website

About Visit Norway

VisitNorway.com, one of our customers, described its experience with VWO's A/B testing tools, and the outcomes, in a blog post originally written in Norwegian. This success story draws on that post.

VisitNorway uses VWO tools to run A/B tests to quickly and effectively measure the expected impact of changes to text, images, design, and functionality on its website.

Goals: Improve Website Design and Functionality to Make These Better for Users

As part of its efforts to improve visitnorway.com, Visit Norway’s team uses analytics, usability tests, and surveys to assess the needs of its visitors and to adapt the site's content, look and feel, functionality, and other features accordingly.

The team started using VWO, an easy-to-use A/B testing tool that quickly and effectively helped measure the impact of various changes the team wanted to make on the site based on input from users, experts, and other optimization hypotheses.

When the team decided to run a test, it built the necessary variation and set up the test in VWO. The variation's performance was then compared against the original on defined goals (such as click-throughs, CTA clicks, and conversions).

The test results and the associated confidence levels helped the team decide whether or not to implement the variation permanently on the visitnorway.com website.
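To make the idea of "results plus confidence level" concrete, here is a minimal sketch of how a variation can be compared with the original. It computes the relative uplift in click-through rate and a two-sided p-value from a standard two-proportion z-test. This is a generic textbook approach, not a description of how VWO computes its statistics, and the function names and visitor and click counts are hypothetical values chosen for illustration, not figures from Visit Norway's tests.

```python
import math


def relative_uplift(control_rate, variation_rate):
    """Relative change of the variation's rate versus the control's rate."""
    return (variation_rate - control_rate) / control_rate


def two_proportion_z_test(control_clicks, control_visitors,
                          variation_clicks, variation_visitors):
    """Return (z, two-sided p-value) for the difference in click-through rates."""
    p_control = control_clicks / control_visitors
    p_variation = variation_clicks / variation_visitors
    # Pooled rate under the null hypothesis that both versions perform the same.
    pooled = (control_clicks + variation_clicks) / (control_visitors + variation_visitors)
    std_err = math.sqrt(pooled * (1 - pooled)
                        * (1 / control_visitors + 1 / variation_visitors))
    z = (p_variation - p_control) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical example data (not from the case study).
control_clicks, control_visitors = 100, 5000
variation_clicks, variation_visitors = 172, 5000

uplift = relative_uplift(control_clicks / control_visitors,
                         variation_clicks / variation_visitors)
z, p_value = two_proportion_z_test(control_clicks, control_visitors,
                                   variation_clicks, variation_visitors)
print(f"Relative uplift: {uplift:.0%}")
print(f"z = {z:.2f}, p-value = {p_value:.6f}")
```

A small p-value suggests the observed difference is unlikely to be due to chance alone, which is the kind of evidence a team would want before rolling out a variation permanently.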

Tests run: A/B Testing Ensures Objective Resource Utilization by Providing Objective Data for Proper Decision-Making

Here are the results from some of the tests VisitNorway conducted:

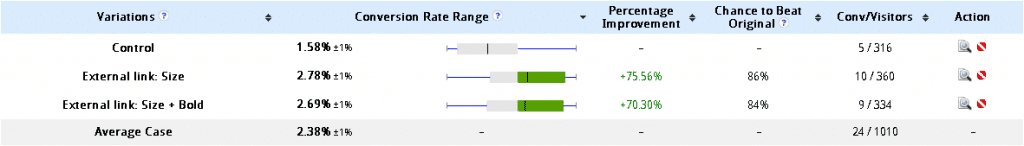

1) Click to destination company websites from landing pages

We wanted to find out whether a simple design change on landing pages could increase the number of clicks to the destinations’ websites. As a first step, we only increased the size of the link text and made it bold.

The test was run on the VisitOSLO pages of visitnorway.com. Increasing the size of the link text and making it bold raised the CTR by 70–75%, as may be seen from the screenshot below.

We, therefore, proceeded with this change on the landing page and increased visits to the destination company website.

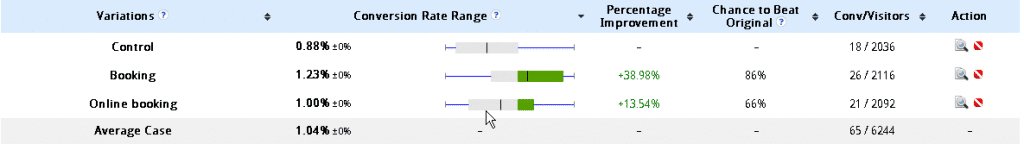

2) What should “Booking” be called in the top menu?

We tested different names for the menu item “Booking” in English, Norwegian, and Spanish, hoping to lead even more of those who were interested in booking a holiday to the booking section.

For English, Booking won with an improvement of 39% over the original, Book Online.

Online booking showed a 14% improvement over the original.

For Norwegian, the word for Order won with an improvement of 114% (!) over the original, Book travel.

See the screenshot below for an overview of the other variants.

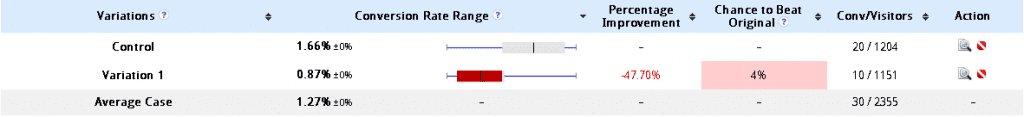

In the Spanish menu, we wanted to see whether it was better to write Booking in Spanish or in English. Since the Book Norway site did not have a Spanish version, we were curious about the bounce rate.

In terms of how many people went on to the booking section, the English Booking (Variation 1) saw a decrease of 48% relative to Reservas (Control).

See the screenshot below.

The bounce rate was similar for both, so we eventually chose Reservas for the Spanish version.

Based on the above results, we changed the text in the menu to the “winners” of the three tests.

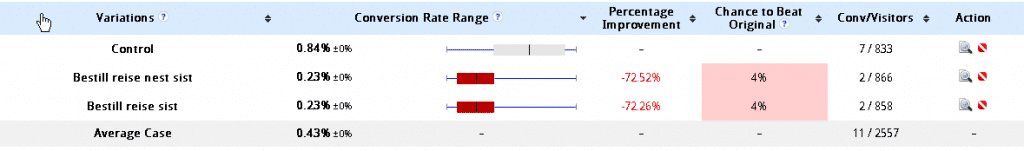

3) Moving the Order link in the main menu for the Norwegian edition

We wanted to see how much the number of clicks on the Book Norway CTA would change if we moved the Order link from the second position to the second-to-last and last positions in the main menu.

We noted a decline of 72–73% in the number of clicks, as may be seen from the screenshot below.

Based on the data, we did not move the Order CTA link.

Conclusion: A/B testing provides objective data to back up decisions around what website elements to change to achieve sustainably optimal results

To quote VisitNorway:

“A/B testing is an important method for us to determine whether it is wise to move forward with new concepts or changes, before we spend a lot of time and money on design and development. We will use it actively to get real decision-making data. Our findings show that one must be careful about what one calls “booking” in various languages: words are tremendously powerful.”

“We chose VWO because it was easy to use and had enough functionality to allow us to carry out the tests that we wanted”.

Location

Oslo, Norway

Industry

Travel

Experiment goals

Increase in CTR on the website

Impact

114% increase in Click-through rate