To improve conversions and keep up with the ever-changing customer behavior, enterprises are increasingly embracing the culture of experimentation to take the guesswork out of their decision-making as much as possible.

However, once you’ve run tests with winning results, it’s a tad difficult to wrap your head around the failed ones.


But is experimentation all about winning?

If you’re expecting each of your tests to lead to a revenue uplift, you’re wrong, my friend.

The reality is a far cry from it, and you can’t simply expect experimentation to be a magic wand that fixes all your business metrics overnight.

Instead, experimentation is a process that helps you find answers to your questions and validate your hypotheses so that you can achieve strategic business goals. It is an exercise of trial and error that takes time and consistency.

If you understand this, that is half the battle won right there.

So, how do you win even when your test results are grim? You win when you change your approach to experimentation and your expectations from it. Below we discuss a methodology that can get you thinking along these lines and focusing on the results that matter.

How to learn and improve from A/B test results

Conversion uplifts can’t be guaranteed every time you run a test. So, why would you bother running tests? Why do you think global giants like Amazon, Netflix, and Facebook run tests? Not every test is a winner for them either. The ultimate objective behind testing is to see which ideas help improve user experience and which don’t. Testing is an aid in your journey to user experience innovation.

When your focus is on long-term wins, you’ll walk away with great learning nuggets even from A/B tests that don’t look so successful on paper. Below we tell you some steps to adopt a fresh and refined approach to experimentation as a whole.

Brainstorm ideas

Motivate everyone in your organization to contribute testing ideas without staying limited to certain roles and designations. You can get the next best testing idea from someone you never expected to contribute.

Have some winning tests in your kitty already? Share the results with your stakeholders. Show them, for example, how optimizing a web form increased freemium subscriptions by 20% or 30%. Or, if testing iteratively led you to a big winner, share how some tests can try your patience yet be totally worth the end result.

That way, you will also be able to motivate your team members to take an interest in experimentation and make it an integral part of their work. This mass-level participation is necessary to make a shift towards an experimentation culture.

VWO can assist you in this ideation journey. How? VWO Plan is a useful capability that makes team collaboration a cakewalk. It offers a centralized repository where you can add all notes, observations, insights, and ideas pertaining to a particular test. This facilitates a structured way of building data-backed hypotheses where every team member remains aware of finer details related to all tests.

Plus, you can refer to our checklist anytime to see all the things you need to get right to uphold the quality of your experiments. The more finesse you show in pulling off the tests, the better position you’ll be in to tell your people to follow suit.

Strengthen your hypotheses

Experimentation either validates or refutes your hypothesis. What if your hypothesis is wrong and your status quo is still a strong performer? Is it not a win in a way? You are an A/B test away from validating the hypothesis behind your control and averting the shipping of a bad idea.

Suppose you remove discount code and promo code fields from the checkout page to reduce distractions for users, in the hope of making checkout seamless for them.

But contrary to your expectations, the data shows that removing these fields increased drop-off from the checkout page. This suggests that users wanted to avail of discounts and therefore expected to see these elements throughout their journey on the website.

Use all the knowledge that you’ve acquired about customer behavior on your website through A/B tests to inform your hypothesis creation process. Going back to our example, having seen your users drop off in the previous test to search for discount codes or compare products on third-party websites, you can build a test idea around making such information available on the website to improve checkout and purchase completions.

The key lies in seeing experimentation as an integrated process and not treating every A/B test as a silo.

VWO Plan comes in handy as it allows you to jot observations from user behavioral analysis, create hypotheses based on those observations, and manage and prioritize testing ideas. With all necessary details in one place, you can keep track of all hypotheses through a progress view on the hypotheses dashboard.

Choose the right sample size

To get statistically significant results out of your A/B tests, you must run these tests on pages that get enough traffic. On a low-traffic page, a test needs much longer to collect the sample it requires to reach statistical significance.
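To see why traffic matters so much, here is a rough Python sketch of the textbook sample-size approximation for comparing two conversion rates. The 5% significance level, 80% power, function names, and inputs are assumptions chosen for illustration; this is not a description of how the VWO calculator works internally.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH variation to detect a relative
    lift over the baseline rate with a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2
    return math.ceil(n)


def days_to_run(n_per_variation, daily_visitors, variations=2):
    """Days needed if daily traffic is split evenly across all variations."""
    return math.ceil(n_per_variation * variations / daily_visitors)
```

For instance, `visitors_per_variation(0.10, 0.20)` estimates how many visitors each variation needs to detect a 20% relative lift on a 10% baseline, and `days_to_run` turns that figure into a run time for a given level of daily traffic. Halving the lift you want to detect roughly quadruples the required sample, which is why small pages take so long to test.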

We suggest you sort this out before starting your testing campaign. How to do it? Try the VWO A/B testing calculator. It’s free!

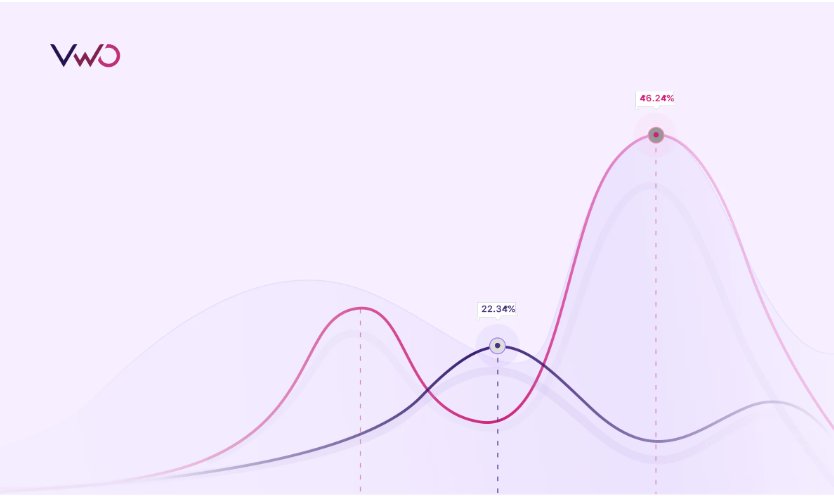

Besides this, VWO SmartStats, which is founded on Bayesian inference, largely saves you from the hassle of fixing a minimum sample size up front. Simply enter the number of visitors and conversions for the control and the variation to calculate significance.
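To give a feel for what Bayesian inference means in this context, below is a minimal Python sketch. It is a generic Beta-Binomial comparison and not VWO SmartStats’ actual algorithm; the function name, the uniform prior, and the Monte Carlo approach are all assumptions made for illustration.

```python
import random


def prob_variation_beats_control(control_visitors, control_conversions,
                                 variation_visitors, variation_conversions,
                                 draws=20000, seed=0):
    """Estimate the probability that the variation's true conversion rate
    is higher than the control's, assuming a uniform Beta(1, 1) prior."""
    rng = random.Random(seed)  # fixed seed keeps the estimate reproducible
    wins = 0
    for _ in range(draws):
        # Posterior for each arm: Beta(1 + conversions, 1 + non-conversions)
        control_rate = rng.betavariate(
            1 + control_conversions,
            1 + control_visitors - control_conversions)
        variation_rate = rng.betavariate(
            1 + variation_conversions,
            1 + variation_visitors - variation_conversions)
        if variation_rate > control_rate:
            wins += 1
    return wins / draws
```

The inputs are the same four numbers mentioned above: visitors and conversions for the control and for the variation. Instead of a yes-or-no significance verdict, the output is a probability that can be read at any point in the test, although estimates based on very little data remain uncertain.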

Get the most out of platform features and capabilities

It’s often seen that marketers don’t utilize all the features on their CRO platforms to their fullest potential.

For example, in VWO, you can use custom dimensions for report filtering post-segmentation. This gives you deeper insights into how different user segments behave in a testing campaign. But if you didn’t know its use case and purpose, you would not be able to unlock the magic it can cast on your CRO KPIs.

If you’ve chosen VWO, you should also know that visitor behavior analysis is one of its capabilities. Don’t restrict yourself to just testing; explore the other VWO capabilities to take your entire optimization program a notch higher.

Evaluate reports

Free your mind of ‘winning’ when you evaluate your A/B testing results. You must see experimentation as a journey to discover and learn how to optimize the performance of your future campaigns. And deriving great learnings only becomes possible when you read or evaluate experiment results in the right way.

Here are a few questions you can ask yourself after running a test:

- How did different user segments react to the variation?

- Which variations worked the best for existing users or new ones?

- How is this hypothesis different from the previous one?

- Have we uncovered any other testing opportunities for the future?

Answers to these questions should guide your next steps – whether you want to run an iterative test to get more granular-level data or you need to make room for new ideas in your larger marketing strategy.

And if you’re using VWO for your A/B tests, it’s easy to drill down to the details. Not only can you see the performance of all variations by studying specific metrics for each of them, but you can also compare their conversion rates using graphs. Even if your A/B test has failed, you can use the feature of custom dimension to see if this result is consistent across all user segments or if any particular segment has responded favorably to the test. This can form the base of your next personalization campaign too – where you target this specific segment with the changes validated by this test. Since VWO is an integrated platform, all these findings feed into each other and everything is managed via a single dashboard. Take an all-inclusive free trial to see this in action.

Let us explain how custom dimension works with an example.

Imagine you want to highlight the top brands that have listed and started selling their unisex sneakers on your eCommerce store.

You believe that adding a carousel showcasing unisex sneakers by top brands on your website homepage will pique visitors’ interest in this collection and even nudge them to purchase.

As a result, you want to run a test and track clicks on the ‘Shop Now’ CTA button in the carousel.

However, you see that the test has not performed well, maybe because the carousel images are not attractive, the radio buttons at the bottom are not prominent, or the CTA copy is not persuasive; the reason could be anything.

But then you decide to slice and dice the report to study visitors’ interaction with the element at a granular level. It is then that you see the variation performed better for women between the ages of 22 and 28.

Based on this observation, you can decide to send personalized email newsletters offering discounts or promo codes to female visitors from the said age group that seemed interested in the sneakers. Or you can personalize the landing page with the carousel only for this particular customer segment.

The saying – When one door closes, another opens – comes alive in such situations with the help of VWO custom dimensions.
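The kind of slicing described in this example can be sketched in a few lines of Python. This is a generic illustration, not how VWO custom dimensions are implemented; the record format (segment, variation, converted) and the function name are hypothetical.

```python
from collections import defaultdict


def conversion_rate_by_segment(visits):
    """Return the conversion rate for every (segment, variation) pair.

    `visits` is an iterable of (segment, variation, converted) tuples,
    a hypothetical export format used only for this sketch.
    """
    totals = defaultdict(lambda: [0, 0])  # key -> [visitors, conversions]
    for segment, variation, converted in visits:
        bucket = totals[(segment, variation)]
        bucket[0] += 1
        bucket[1] += int(converted)
    return {key: conversions / visitors
            for key, (visitors, conversions) in totals.items()}
```

Comparing the control and variation rates within each segment shows whether an overall flat or losing result hides a segment that responded well. Keep in mind that the smaller the segment, the noisier its conversion rate, so a promising segment is best treated as a hypothesis for the next test.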

Companies that adopted the experimentation mindset and succeeded

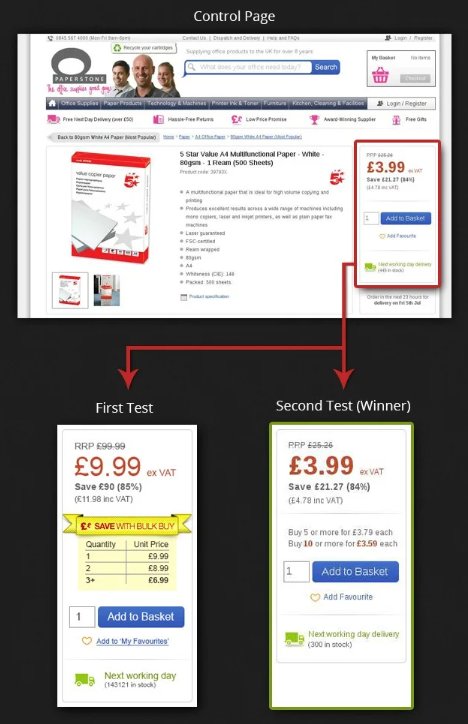

Learn how Paperstone’s ‘don’t-give-up’ approach to testing increased their revenue

This UK-based office supplies company competes against many dominant and small companies selling similar products. Paperstone used VWO to optimize its website and deliver top-notch user experiences to stay ahead of the competition.

Through a user survey, the company found that their customers and prospects expected bulk discounts. But considering the competitive nature of the business, profit margins were already very slim. Bulk discounts would work only if the average order value increased.

So, they came up with a hypothesis that offering discounts on certain products could improve the profit margin from their website.

While the control showed no bulk discounts, the variation displayed discounts on bulk purchases of popular products.

Although it was expected that the bright-yellow bulk-buy ribbon would garner users’ gaze, they completely ignored it. This initial failure made Paperstone rethink its entire bulk discount testing strategy. But they ultimately moved ahead with another iteration where they decided to run the test for a longer time, testing more visitors, for statistically significant results.

Using the same hypothesis, this time the company used VWO to track revenue. Interestingly, the variation outperformed the control, increasing the AOV by 18.94%, the AOV for bulk discount offers by 5%, and revenue by 16.85%.


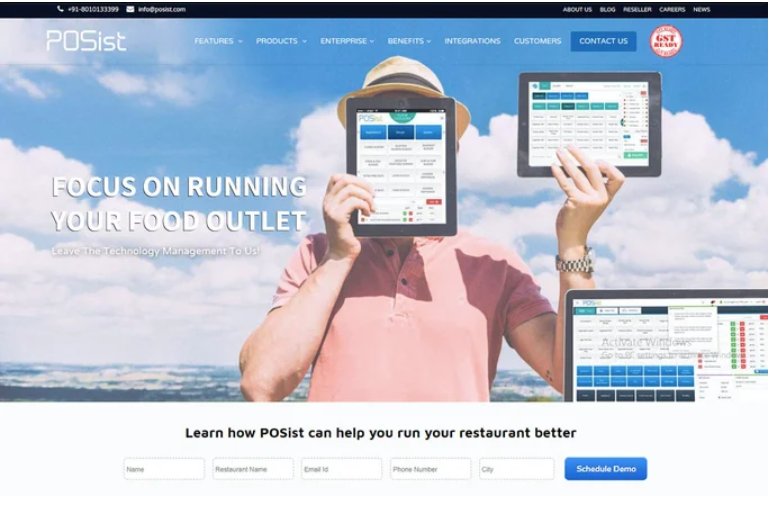

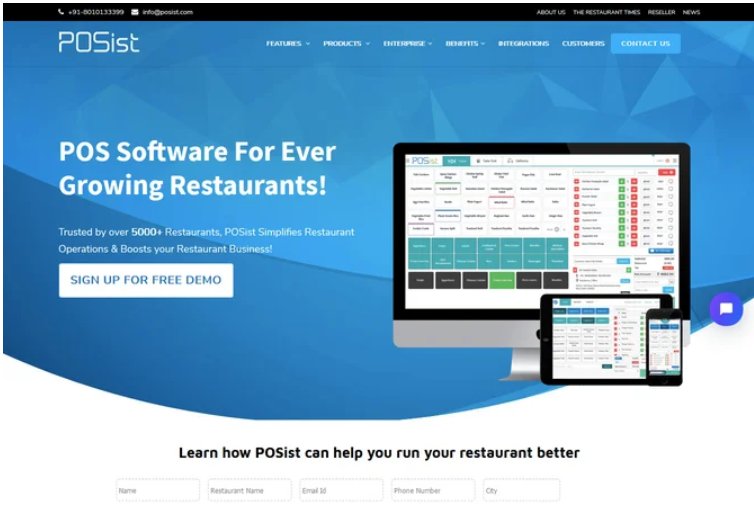

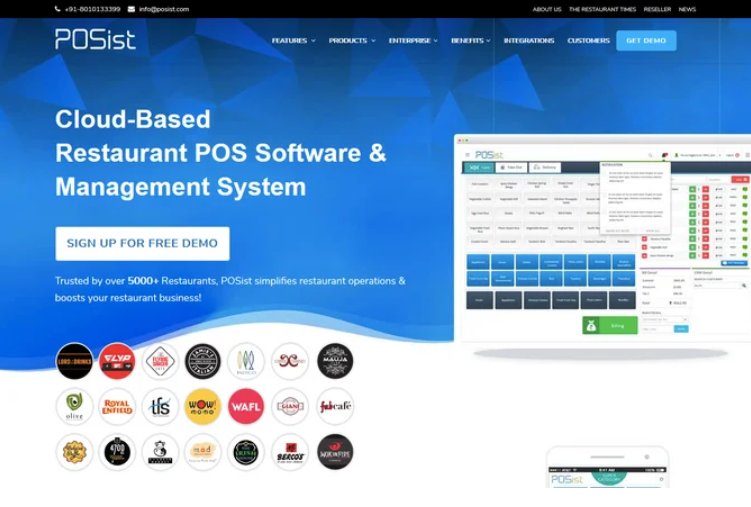

POSist’s iterative testing strategy led them to discover the best of the best winning variation

As a SaaS-based restaurant management platform, POSist’s objective was to increase the number of sign-ups for free demos. The team wanted to control drop-offs from the website’s home page and contact page because these two pages played key roles in their website conversion funnel. Given these reasons, POSist turned to VWO and kick-started its optimization journey.

POSist ran 2 home page tests using VWO. In the first test, the team revamped the above-the-fold content on the homepage by including catchy headlines and reducing the scroll length. The variation increased visits to the contact page with a conversion rate of 15.45%, an uplift of 16% from the control’s 13.25%.

For the second test, they added custom logos, testimonials, and the ‘Why POSist’ section. Plus, they bifurcated their offer – upgrade and make the transition – for prospects switching to a point-of-sale (POS) software application and first-time buyers.

Taking an iterative approach to testing, POSist tested this variation against the winning variation from the previous test. The new variation increased visits to the contact page by 5% in just one week! Consequently, this home page variation was made live on their website.

After sorting their home page, the team studied quantitative data from VWO Form Analytics and inferred that reducing the width of the contact form could improve sign-up conversion. The variation yielded a conversion rate of 11.26%, an uplift of 20% over the control’s 9.37%.

But as they did with the home page, POSist created a more conversion-focused contact page and tested it against Variation 1. This test led to a conversion uplift of 7%, while the two contact page tests together improved the conversion rate by 12%.

This way, the company got 52% more leads in a month! This shows that iterative testing helps you optimize every small gap that contributes to improving user experiences. And it is this commitment to iterative testing that bakes experimentation into the culture of an organization.
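The uplift figures in this case study are relative changes in conversion rate, not percentage-point differences. A small Python sketch of that arithmetic, using the conversion rates quoted above (the function name is an assumption for illustration):

```python
def relative_uplift(control_rate, variation_rate):
    """Relative uplift of the variation over the control, in percent."""
    return (variation_rate - control_rate) / control_rate * 100


# Conversion rates (in %) quoted for the first home page and contact page tests
home_page_uplift = relative_uplift(13.25, 15.45)
contact_page_uplift = relative_uplift(9.37, 11.26)

print(f"Home page uplift: {home_page_uplift:.1f}%")
print(f"Contact page uplift: {contact_page_uplift:.1f}%")
```

The results come out at about 16.6% and 20.2%, in line with the roughly 16% and 20% uplifts reported for those two tests, even though the absolute gains are only around two percentage points each.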

Don’t stop but pivot your testing plan

If you wish to focus on big business wins in the long run, put all the ‘never give up’ quotes you’ve grown up reading into practice in experimentation as much as you do in real life. Deriving meaningful insights should therefore be the primary objective behind every test you run, irrespective of the outcome it produces.

But to tread that route with confidence, make sure you have a reliable CRO tool at your disposal. VWO can help embed experimentation in your organization’s value system by combining powerful capabilities with ease of use. So, next time you test an idea, look forward to learning and making smarter business decisions. Happy testing!