CRO Perspectives by VWO is a series built around community voices—real practitioners sharing the methods, setbacks, and insights that shape experimentation programs. Our goal is to move beyond surface results and highlight the thinking that drives them.

For the 19th post, we sat down with Jono Matla, Founder of Impact Conversion, to talk about what makes experimentation work in practice.

Leader: Jono Matla

Role: Founder & Conversion Rate Optimization Specialist at Impact Conversion

Location: New Zealand

Why should you read this interview?

As Founder of Impact Conversion, Jono Matla helps online education companies improve conversion rates and lower CAC by designing websites that match how people actually make decisions.

His methods focus on uncovering where desire turns into doubt, simplifying the path to purchase, and running systematic tests that build on each other over time. Instead of treating conversion as a traffic or pricing issue, Jono looks at the underlying behaviors and frictions that cause qualified visitors to walk away.

In this interview, Jono talks about how he balances qualitative and quantitative research, and why hypothesis and QA are the cornerstones of effective testing. He also reflects on tests that didn’t hold up after launch, what it takes to compound wins through velocity, and where AI can play a supporting role in CRO.

For anyone running an online education business, or simply looking to make experimentation more effective, Jono’s perspective offers practical, psychology-driven insights grounded in real-world results.

CRO in education vs. destination marketing

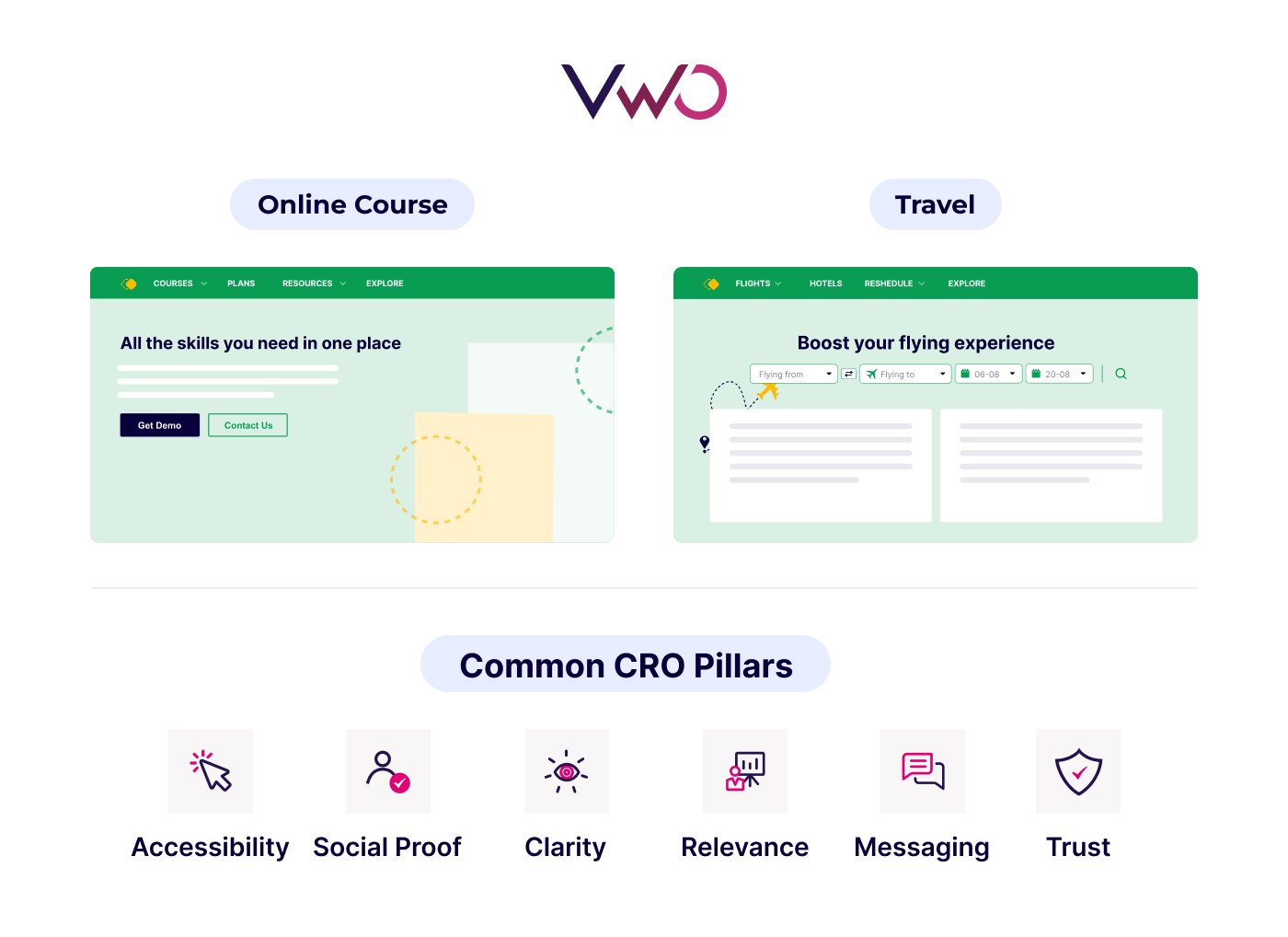

Measured against the past 100 years, online courses are still a fairly new concept compared to destination travel, more so in some niches than others. So prospects usually sit further up the funnel, at an earlier stage of awareness (I like using Eugene Schwartz’s five stages of awareness for this).

The fundamental drivers and motivations are different. Courses can often be pitched around a very specific pain point, angle, or problem, while travel and destinations are about transformation, experience, and visuals. So while CRO pillars like trust and social proof still apply to both, the messaging differs considerably between the two.

One of the bigger experiment wins we had for an education business was a 30% uplift in conversion by iterating on homepage messaging to match the user’s level of awareness. We ran four different tests and ten variations in total, refining until the final messaging clearly explained what the company does and who it serves.

Another test we ran for an education company delivered a 15% uplift by simplifying checkout—removing the ability to navigate back and keeping everything above the fold. This test, along with subsequent checkout wins, suggested to me that any friction in the process for an education company gives people a reason to back out.

In the travel industry, however, I’ve seen far more inconclusive results when trying to reduce friction. Intent seems much higher by the time people reach checkout. We even tested a completely new checkout with significant friction reduction, and the outcome was inconclusive.

Combining qualitative and quantitative research

My standard research methods include email surveys and on-site surveys to analyze objections, motivations, and fears or anxieties. Across my tools, I let qualitative insights raise the questions and quantitative data size the impact. I watch sessions to spot unexpected behaviors, then check analytics to see how common they are, who does them, and whether they affect conversion.

For example, in session recordings I noticed people arriving from paid traffic, seeing the offer, and then using the navigation to explore more about the business and its other offerings. The problem, qualitatively, was that many of them never returned to the original offer page.

So I jumped into GA4 and built custom reports to see whether the visitors who navigated away converted more or less. Those who explored other offerings converted almost 50% less, while those who navigated to reviews actually converted about 30% more. This directly shaped our test pipeline: the next steps were to remove the navigation and to bring more reviews higher up on the landing page.
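The comparison above boils down to conversion rate per behavioral segment, relative to a baseline. A minimal Python sketch, assuming session and conversion counts have already been exported from custom reports; the segment names, the choice of "all paid sessions" as the baseline, and all numbers are hypothetical, and the code does not call any GA4 API:

```python
from dataclasses import dataclass


@dataclass
class Segment:
    name: str
    sessions: int
    conversions: int

    @property
    def rate(self) -> float:
        # Conversion rate; guard against empty segments.
        return self.conversions / self.sessions if self.sessions else 0.0


def relative_to_baseline(segments: list[Segment], baseline: Segment) -> dict[str, float]:
    """Relative difference in conversion rate vs. the baseline (0.3 means +30%)."""
    base = baseline.rate
    return {s.name: s.rate / base - 1 for s in segments}


# Hypothetical counts for illustration only.
all_paid = Segment("all paid landing-page sessions", 10_000, 300)
segments = [
    Segment("explored other offerings", 2_000, 32),
    Segment("navigated to reviews", 1_000, 39),
]

for name, diff in relative_to_baseline(segments, all_paid).items():
    print(f"{name}: {diff:+.0%}")
```

A gap like this is correlational: visitors who seek out reviews may already be more motivated, which is why a finding of this kind feeds the test pipeline rather than being treated as proof on its own.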

Pre-test checklist

My pre-test checklist looks like this:

- Hypothesis tied to a real observation

- Metrics: primary KPI, guardrails, success/failure thresholds

- Stats: MDE/power, sample size, runtime

- QA: tracking, cross-device/browser, overlapping tests

- Test plan: dates, decision rules
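The stats line of the checklist (MDE, power, sample size, runtime) can be sanity-checked with the standard normal-approximation formula for comparing two conversion rates. The sketch below is a generic two-sided, two-proportion calculation, not necessarily how Jono or VWO compute it; the baseline rate, relative MDE, and daily traffic are hypothetical:

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed per variant to detect a relative lift of `relative_mde`."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def runtime_days(n_per_variant: int, variants: int, daily_visitors: int) -> int:
    """Days needed if all eligible daily traffic is split across the variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical inputs: 5% baseline conversion, 20% relative MDE, 1,000 visitors/day.
n = sample_size_per_variant(0.05, 0.20)
print(n, "visitors per variant,", runtime_days(n, variants=2, daily_visitors=1_000), "days")
```

With these inputs the result is roughly 8,160 visitors per variant. Halving the MDE nearly quadruples the required sample, which is why the MDE is worth agreeing on before launch rather than after.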

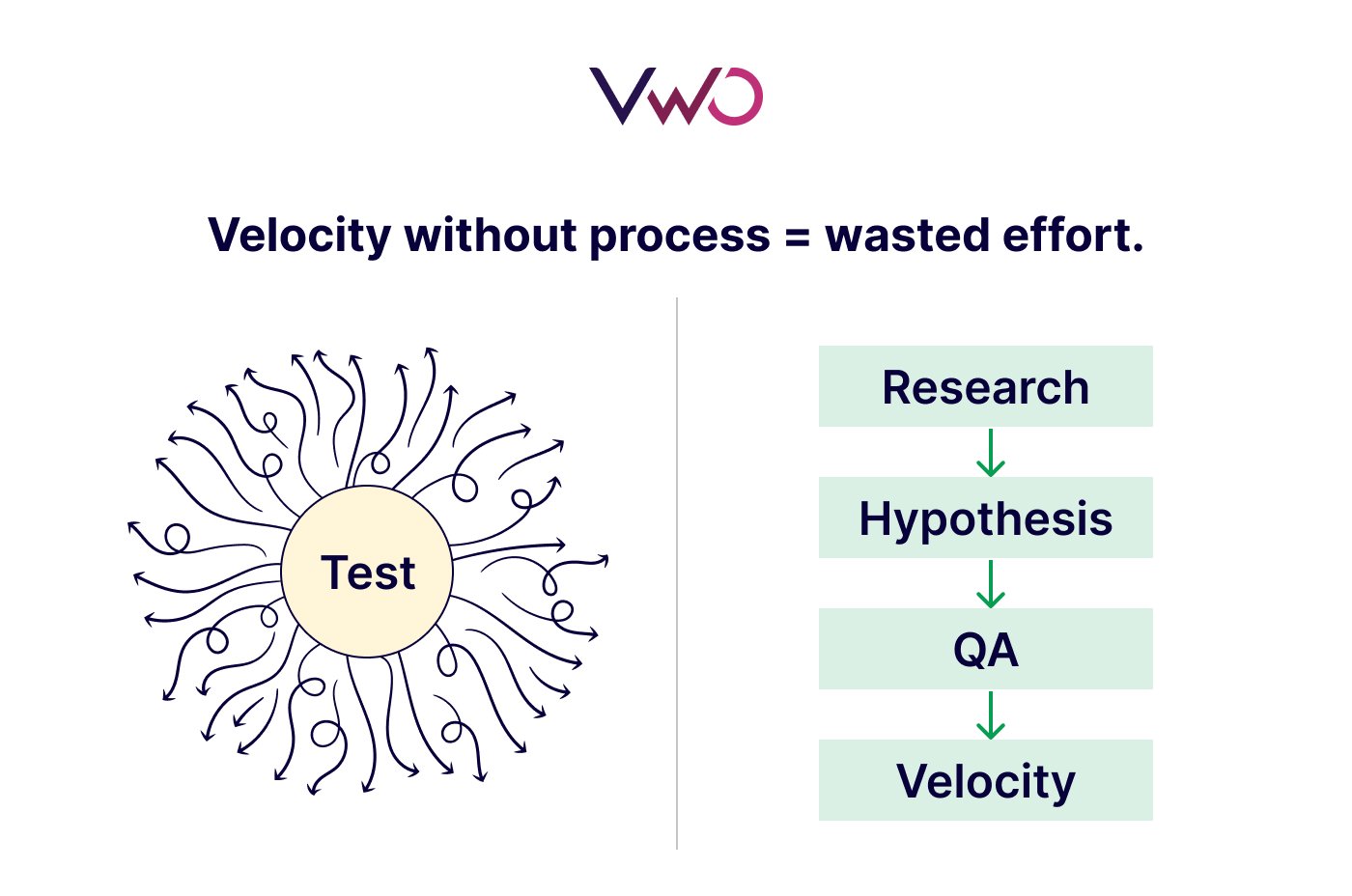

I do think the steps you take before launch largely determine whether a test succeeds or fails. All of the steps are important, but the two most important are the hypothesis and QA.

Without a solid, measurable hypothesis pulled from research, you’re asking for your testing program to be derailed. I believe there is room for curiosity in testing and experimentation, but in my opinion the foundation of the testing program should be the linear path of research, observation, hypothesis, and solution.

QA is obvious: if you don’t do it right, you could have great tests that fail simply because they weren’t implemented properly.

When tests underperform after launch

I have definitely seen tests that boosted revenue metrics during experimentation but underperformed after going live. There are many possible reasons, but the most common are:

- The sample doesn’t match the population. For example, the test ran while a sale was on, or its traffic came mostly from a high-intent channel, so the uplift fades once the change meets your full audience.

- Measurement gaps or problems: client-side revenue tracking, attribution windows, refunds/returns, etc. Post-test analysis definitely helps catch these, and I always go by the principle: if you’re unsure, test it again.

With VWO, slice and dice your test results—even when they lose. Segment analysis breaks down whether control or a variation worked better for each audience group. Combine this with session recordings and heatmaps to see where friction occurred and why users struggled. These insights guide corrective steps for your next iteration.

Tests impacting AOV and LTV

Here are four examples of tests that improved key business metrics across different clients:

- Targeted upsell for high-intent existing customers: We offered a package priced at ~5× average LTV, and it drove a +46% revenue lift for that cohort. It was the right message at the right moment.

- Labeling “Most Popular” in the nav: Adding this label to a higher-AOV bundle lifted RPV 17% by steering clicks toward a more profitable product.

- Checkout upsell (digital products): We added a SKU-matched add-on bump for popular products at checkout. It was a one-checkbox offer at 25% off, shown after payment details and before submit. Over 14 days and 24k sessions, AOV rose 15%, with no significant change in conversion. Offer take rate was ~26% on carts, at 96% significance.

- Logged-in cross-sell (education): The logged-in course library/dashboard was one of the most viewed areas. We tested a dynamic “Next step” cross-sell on the library and post-lesson pages, with a light incentive. This lifted RPV 30% for logged-in users over 4 weeks and improved 90-day LTV. Lesson completion was slightly down, but it was a cut we were willing to take.
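The checkout bump result follows from a simple identity: revenue per visitor is conversion rate times AOV, so when conversion holds flat, an AOV lift passes straight through to RPV. A back-of-the-envelope sketch for estimating a bump’s effect before testing it; the base AOV, add-on list price, and take rate are hypothetical, and only the 25% discount comes from the example above:

```python
def expected_aov_with_bump(base_aov: float, addon_list_price: float,
                           discount: float, take_rate: float) -> float:
    """Expected AOV if a share `take_rate` of orders adds the discounted add-on.

    Assumes the bump doesn't change anything else in the cart.
    """
    addon_price = addon_list_price * (1 - discount)
    return base_aov + take_rate * addon_price


def rpv(conversion_rate: float, aov: float) -> float:
    # Revenue per visitor = (orders / visitors) * (revenue / orders).
    return conversion_rate * aov


def rpv_lift(conversion_lift: float, aov_lift: float) -> float:
    # Relative lifts multiply because RPV is the product of the two.
    return (1 + conversion_lift) * (1 + aov_lift) - 1


# Hypothetical inputs for illustration.
base_aov = 200.0
new_aov = expected_aov_with_bump(base_aov, addon_list_price=80.0, discount=0.25, take_rate=0.25)
aov_lift = new_aov / base_aov - 1
print(f"AOV {base_aov:.0f} -> {new_aov:.0f} ({aov_lift:+.1%})")
print(f"RPV lift with flat conversion: {rpv_lift(0.0, aov_lift):+.1%}")
```

The same identity shows the trade-off to watch: a bump that adds a few percent to AOV but costs a similar share of conversions nets out close to zero, so conversion needs tracking alongside AOV.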

Why I think these worked: In the checkout example, the voice of the customer showed that when people found the add-ons organically (templates, formula cheatsheets), they loved them and thought they were great value. However, the add-ons often got lost in the main navigation. Mapping a single relevant bump offer to the cart item, priced below the main product, captured extra value without adding friction.

In the learning area, prompts met students at high-intent moments and nudged them to the next logical rung in the product ladder, which lifted immediate RPV and downstream LTV.

Documenting learnings

I track everything. Page, test category, hypothesis, result, iteration, and links to evidence (screens, heatmaps, interviews) all go into Airtable. Each entry includes a one-line learning: “When we did X for Y, Z happened—likely because ___.”

That way insights are searchable and reusable. Down the line, we can look back and see the levers that really drove uplift, sharpen the focus of the testing program, and ensure we have iterated enough. It also helps massively with onboarding new team members and showing others in the company how the program works.
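The log structure described above maps naturally onto a small record type. A minimal sketch of one entry plus the one-line learning template, with hypothetical field names and example values; it models the idea only and does not use the Airtable API:

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TestLogEntry:
    page: str
    category: str
    hypothesis: str
    result: str          # e.g. "win", "loss", "inconclusive"
    iteration: int
    change: str          # X: what we did
    audience: str        # Y: who it was for
    outcome: str         # Z: what happened
    likely_reason: str
    evidence: list[str] = field(default_factory=list)  # links to screens, heatmaps, interviews

    def learning(self) -> str:
        # One-line learning: "When we did X for Y, Z happened, likely because ___."
        return (f"When we did {self.change} for {self.audience}, "
                f"{self.outcome}, likely because {self.likely_reason}.")


def search(log: list[TestLogEntry], category: Optional[str] = None,
           result: Optional[str] = None) -> list[TestLogEntry]:
    """Filter entries by category and/or result."""
    return [e for e in log
            if (category is None or e.category == category)
            and (result is None or e.result == result)]


def wins_by_category(log: list[TestLogEntry]) -> Counter:
    # Which levers have produced wins so far?
    return Counter(e.category for e in log if e.result == "win")


# Hypothetical entries for illustration.
log = [
    TestLogEntry("Checkout", "friction", "Fewer steps reduce drop-off", "win", 2,
                 "a one-step checkout", "mobile visitors", "completion rate rose",
                 "there were fewer chances to back out"),
    TestLogEntry("Homepage", "messaging", "Benefit-led copy lifts sign-ups", "inconclusive", 1,
                 "a benefit-led headline", "returning visitors", "nothing measurable changed",
                 "they already knew the offer"),
    TestLogEntry("Pricing", "social proof", "Testimonials near the price ease doubt", "win", 1,
                 "testimonials next to the price", "first-time visitors", "add-to-cart rose",
                 "doubts were answered at the decision point"),
]

print(log[0].learning())
print(wins_by_category(log))
```

Keeping the learning as a generated sentence, rather than free text, is one way to make entries consistent enough to search and compare at a quarterly review.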

You’ll always invest more in ads than in CRO. That said, with rising ad costs and growing investment in creative production, margins are being eroded. The ad agency will say budgets need to increase; the creative agency will say you need more creativity. They may both be right. But the website is often the last place companies look, and I think that does them a disservice.

Why velocity matters

Testing velocity matters a lot if the experimentation program is going to drive real business outcomes, because wins compound. If you’re only running a few tests here and there, you might be better off focusing elsewhere.

However, I think you have to be careful setting targets and KPIs around test velocity. If you’re just running tests to hit a target, without a basis, you’ll waste effort. My advice is: move quickly, but follow the process. Don’t skimp on details, and start with thorough research. That way you can test fast and still maintain a good win rate.
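The compounding argument, and the warning about velocity targets, can be made concrete with a simplified model. This sketch assumes each shipped win multiplies the baseline independently and that losing or inconclusive tests aren’t shipped; real lifts overlap and decay, so treat the output as illustrative only. The test counts, win rates, and average lift are hypothetical:

```python
def compounded_lift(lifts: list[float]) -> float:
    """Cumulative relative lift from shipping several wins in sequence."""
    total = 1.0
    for lift in lifts:
        total *= 1 + lift
    return total - 1


def expected_annual_lift(tests_per_year: int, win_rate: float, avg_win_lift: float) -> float:
    """Simplified model: expected wins compound at the average winning lift.

    Fractional expected wins are allowed as a rough approximation.
    """
    expected_wins = tests_per_year * win_rate
    return (1 + avg_win_lift) ** expected_wins - 1


# Three 5% wins compound to about 15.8%, not 15%.
print(f"{compounded_lift([0.05, 0.05, 0.05]):.1%}")

# Doubling velocity only pays off if the win rate holds.
for tests, win_rate in [(12, 0.25), (24, 0.25), (24, 0.125)]:
    print(tests, win_rate, f"{expected_annual_lift(tests, win_rate, 0.05):.1%}")
```

In this model, 24 tests at half the win rate land exactly where 12 tests did, which mirrors the point about velocity targets: speed only compounds when the research behind each test keeps the win rate up.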

Also, schedule quarterly experimentation program reviews. Zoom out, look at everything you’ve run, and make sure tests align with company goals.

AI in CRO

I currently use AI in many of my processes, most notably ideation, data synthesis, and sanity checking. I don’t use it for copy.

I do think AI is having a significant impact in digital education, particularly when it can be trained on one’s own expertise and content. It’s like having 24/7 tutor support, which has traditionally been a constraint.

Some people say AI agents will take over everything and you won’t even need to navigate a website. That may be true, but I’m bearish on that claim.

In markets where the product isn’t a commodity, people will still crave choice, genuine experiences with a brand, and trust, which will matter even more.

As the cost of producing content approaches zero, the companies that survive will be those that can still identify real behavioral insights from increasingly noisy data.

Conclusion

What Jono’s experience makes clear is that CRO isn’t one-size-fits-all. In online learning, even small bits of friction can derail a sign-up, while in travel, intent runs higher but success depends on trust and inspiration. Yet across these differences, the core principles remain constant: research-driven hypotheses, strong processes, and rigorous QA are what ensure experiments deliver meaningful results, no matter the context.

With VWO, you can design experiments that work with user behavior, not against it, and turn small wins into lasting growth. Book your personalized demo today to see how VWO can fit your business needs and help you achieve your goals.