In CRO Perspectives by VWO, we aim to build a growing collection of ideas, lessons, and lived experiences from the experimentation community—not just the polished outcomes, but the twists, blockers, and breakthroughs that shape how testing works inside real teams.

This 17th post features a practitioner who doesn’t just optimize experiences. She shares where she faltered, what those moments revealed, and how she helps make CRO a continuous growth function. She speaks clearly about which KPIs matter, why marketing shouldn’t operate in silos, and what beliefs have actually driven results for her clients. Her perspective is refreshingly candid, grounded in experience rather than industry trends.

Without further ado, let’s dive into the insights shared by Jin Ma, Director of Experience at Dentsu Singapore.

Leader: Jin Ma

Role: Director of Experience, Media, dentsu, Singapore

Location: Singapore

Why should you read this interview?

Jin Ma brings a sharp, cross-functional perspective to experimentation—rooted in hands-on experience across SEO, paid media, CRO, and marketplace optimization on platforms like Shopee and Lazada.

As Director of Experience at Dentsu Singapore, she’s led the development of new service lines, driven business growth through integrated strategies, and helped brands scale performance across owned and third-party channels. Her progression from Senior Manager to Director reflects her ability to innovate, lead, and deliver results in a fast-moving digital landscape.

In this interview, Jin discusses how a form test gone wrong uncovered valuable insights about user trust, how qualitative data like heatmaps and session recordings shape her testing roadmap, and why she sees AI as a creative partner in scaling ideas. She also explains why Customer Lifetime Value is the KPI that connects teams across channels, and how she encourages clients to move beyond “safe” ideas to unlock greater impact.

Whether you’re building an experimentation program or trying to align CRO with broader business goals, Jin’s perspective offers practical guidance grounded in real-world experience.

When a failed test reveals unexpected insights

One test that didn’t go as planned but ended up being incredibly insightful was when we simplified a lead generation form for a B2B client.

Our hypothesis was simple: fewer fields = less friction = more submissions.

We removed optional fields like ‘Industry’ and ‘Budget Range’ to streamline the experience. But instead of improving, the conversion rate dropped.

When we looked at session recordings and ran a quick feedback survey, we realized those fields weren’t friction points. They were confidence builders. By indicating their industry or budget, users felt they were more likely to receive a personalized, relevant response from the brand. These inputs helped build trust by reinforcing that their enquiry would be taken seriously and routed appropriately. Removing those fields made the form feel generic, as if their request might disappear into a black hole.

That experience taught me a key lesson: not all friction is bad. Some elements we assume are blockers are actually signals of relevance or trust. Since then, I’ve become more intentional about evaluating form fields for their psychological utility, not just usability. Every field must be assessed through the lens of trust and user motivation, not just convenience.
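Before reading meaning into a drop like this one, it helps to confirm that the difference between control and variation is larger than sample noise. The snippet below is a minimal sketch of a two-proportion z-test using only the Python standard library. It is not the method used in the test described here; the function name and the visitor and submission counts are made up for illustration.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_a / n_a: conversions and visitors for the control.
    conv_b / n_b: conversions and visitors for the variation.
    Returns (z, p_value); a negative z means the variation converted worse.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or 100%")
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: full form (control) vs. shortened form (variation).
z, p = two_proportion_z_test(conv_a=200, n_a=4000, conv_b=150, n_b=4000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these illustrative numbers the variation converts worse and the p-value falls below 0.05, so the drop would be treated as real and worth investigating through recordings and surveys, as described above.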

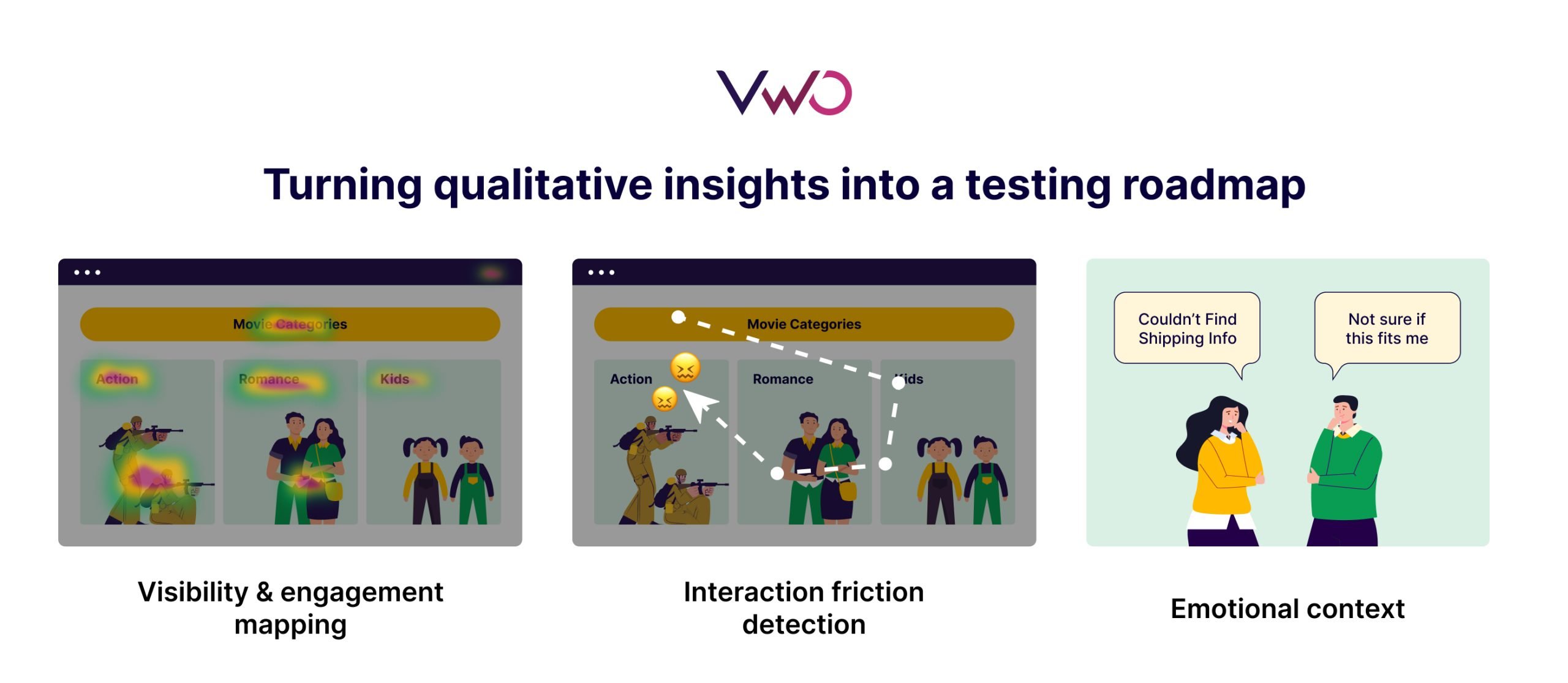

Turning qualitative insights into a testing roadmap

Qualitative insights are foundational to my experimentation roadmap. They reveal the “why” behind the numbers, allowing me to create informed, user-centric hypotheses instead of relying on guesswork or best practices alone. I typically structure my process into three key layers:

Visibility & engagement mapping

Heatmaps help visualize how users engage with page elements across devices. For example, if a CTA is placed above the fold but consistently ignored, it signals a potential issue with visual hierarchy or copy clarity. I’ve seen cases where users fixate on banner images or decorative elements instead of functional content, prompting us to test layout changes that improved CTRs.

Interaction friction detection

Session recordings reveal micro-frustrations that heatmaps alone can’t show. Hovering without clicking, rage clicks, or repeated back-and-forth behavior are all signs of friction. For instance, in one case, users kept clicking on product thumbnails expecting a zoom function. That insight led to the introduction of a zoom feature and image carousel, which significantly increased product engagement.

Emotional context

I often look into exit surveys, chat logs, and post-purchase forms to understand user sentiment. Phrases like “couldn’t find shipping info” or “not sure if this fits me” have directly informed tests around content visibility, icon labelling, and copy tweaks. These seemingly small details often lead to meaningful uplift.

I maintain a qualitative insights repository, tagged by user segment, device type, and funnel stage. This database serves not just CRO, but also product and marketing teams looking to align messaging with user expectations. By grounding our roadmap in real user behavior and sentiment, we ensure that our experiments solve actual pain points, not just hypothetical ones.
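A tagged repository like the one described above can be as simple as a list of records with a lookup by tag. The following is a minimal sketch, not the actual tooling behind the interview: the `Insight` class, the `find_insights` helper, and the specific tag values attached to each note are assumptions for illustration. Only the notes themselves echo examples mentioned in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Insight:
    """One qualitative observation with the three tags named in the text."""
    note: str
    source: str        # where it was observed (illustrative values)
    segment: str       # user segment tag
    device: str        # device type tag
    funnel_stage: str  # funnel stage tag


def find_insights(repo, **tags):
    """Return insights matching every tag given, e.g. device="mobile"."""
    return [i for i in repo
            if all(getattr(i, key) == value for key, value in tags.items())]


# Hypothetical entries; the tag assignments are invented for the example.
repo = [
    Insight("Clicked product thumbnails expecting zoom", "session recording",
            "new visitor", "mobile", "product page"),
    Insight("Couldn't find shipping info", "exit survey",
            "returning visitor", "desktop", "checkout"),
    Insight("Not sure if this fits me", "chat log",
            "new visitor", "mobile", "product page"),
]

mobile_product_page = find_insights(repo, device="mobile",
                                    funnel_stage="product page")
for insight in mobile_product_page:
    print(f"[{insight.source}] {insight.note}")
```

Keeping the tags as plain fields means a product or marketing team can query the same records by whichever dimension matters to them, without a separate copy per team.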

Using AI to scale and enrich experimentation

AI has become an essential part of my CRO workflow. It not only improves efficiency but also enhances creativity and makes experimentation more scalable. One of the most valuable ways I use AI is to generate and diversify test ideas. Rather than relying solely on team brainstorming or playbooks, I use AI to explore new headlines, UX copy variations, and value proposition reframes.

These are often based on behavioral personas or insights from past tests. For example, when a test variant performs well, I input that version into an AI tool and ask it to suggest multiple alternatives. Some build on the same persuasive strategy, while others take a completely different approach. This allows us to test a broader range of hypotheses in a shorter time frame. What used to take weeks to develop manually can now be prepared in days, enabling a higher volume of quality tests.

AI has not replaced strategic thinking. Instead, it acts like a creative partner, helping us move faster and think wider. The result is more agile, insight-driven optimization that remains deeply connected to real user behavior.

Why CRO needs to be an ongoing growth function

Many brands, especially across APAC, still view CRO as a reactive fix rather than a continuous growth driver. It is often brought in when performance drops or before a revamp, with the focus placed on solving a few obvious issues. Once the biggest problems are addressed, testing stops. This short-term mindset significantly limits growth potential.

One common reason is the lack of clear ownership. CRO sits at the intersection of multiple teams, including UX, marketing, product, and web. Without a dedicated owner internally, it tends to fall through the cracks and is not sustained as a long-term initiative.

Another misconception is the belief that once top-performing pages are optimized, there is nothing left to test. In reality, experimentation becomes even more valuable over time, especially when insights are fed back into other functions such as paid media, SEO, and product development.

Conversion optimization is not a one-time project. It is an ongoing process of refining how your brand meets changing user expectations. Products evolve, competitors adapt, and customer behavior shifts. Assuming CRO is complete after a few wins is like thinking product development ends at launch. The most successful brands know that growth comes from continuous iteration.

Challenging safe ideas and building trust with bold strategies

When clients suggest safe ideas, I avoid dismissing them outright. Instead, I reframe the conversation around opportunity cost. I ask, “If we use our limited testing resources on this idea, what higher-impact opportunities might we be delaying or missing?” This helps shift the focus from what feels comfortable to what could deliver more meaningful outcomes.

I also propose a dual testing approach where it makes sense. If a client feels strongly about a safer variation, such as a minor copy tweak or visual adjustment, I suggest testing it alongside a bolder hypothesis. This allows us to honour their input while still exploring ideas with greater potential.

Another effective technique is grounding the conversation in user behavior. Rather than debating the merit of an idea in theory, I share how users are actually interacting with the experience. For example, if users are dropping off before reaching the test area, we may need to shift focus to earlier-stage friction.

I do not believe there is such a thing as a 100% right or wrong idea in CRO. It is always a matter of how much weight we assign to each hypothesis and how we prioritize and balance experimentation within the broader roadmap.

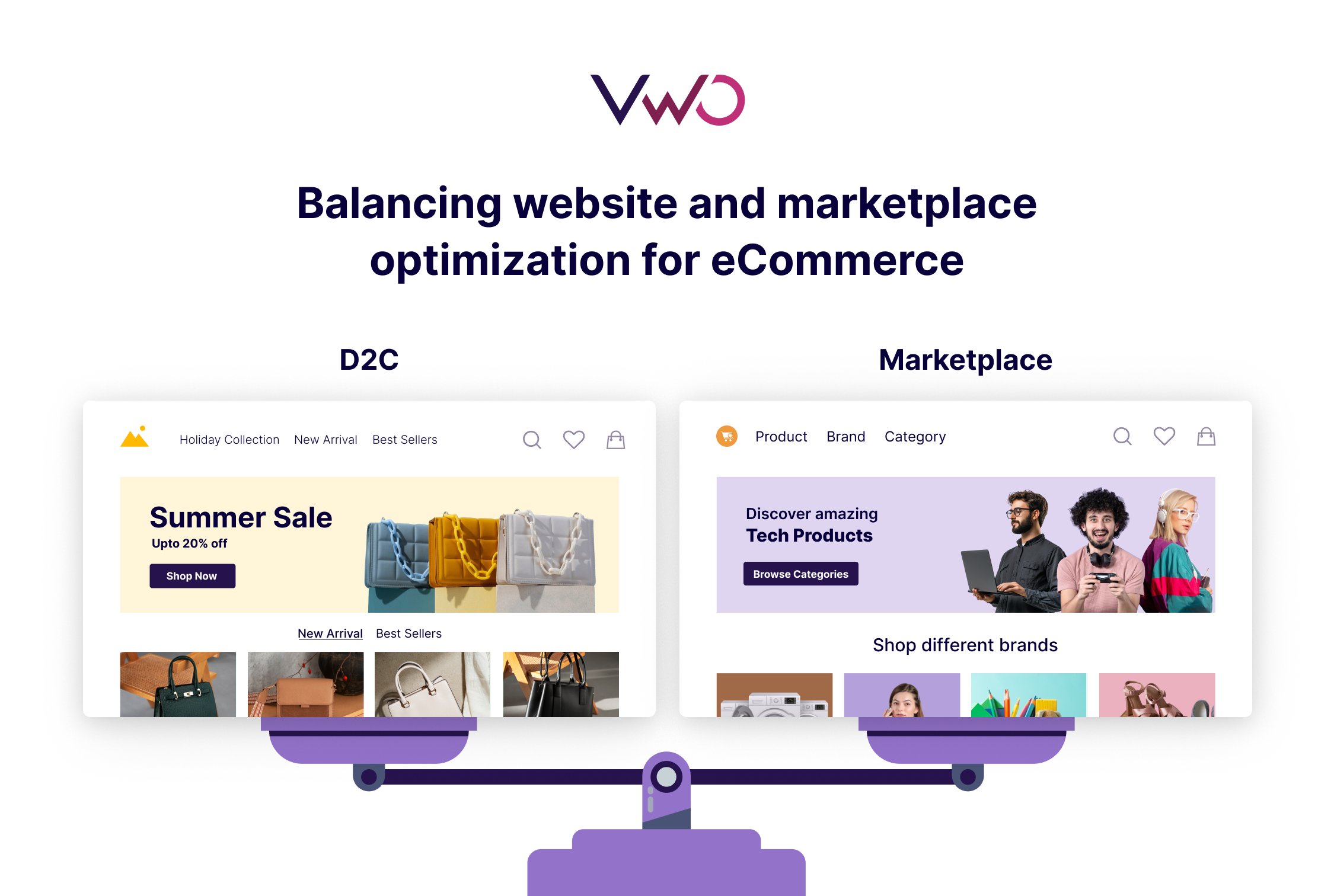

Balancing website and marketplace optimization for eCommerce

Website and marketplace optimization require different strategies, but they should inform each other. Websites offer greater control and creative flexibility. You can experiment more freely with layout, storytelling, and messaging. In contrast, marketplaces come with stricter formatting guidelines, where success often depends on visibility, pricing, and concise content.

That said, marketplaces are not limited to static optimization. We run A/B tests on product images, headlines, and promotional messages directly within platforms like Lazada and Shopee. These tests often uncover valuable insights about what drives clicks and conversions. For example, a benefit-focused product title that outperformed a technical one on Shopee was later adapted into the hero message on the brand’s homepage, resulting in improved on-site engagement.

When deciding where to focus, I encourage teams to align with both business priorities and audience behavior. If the goal is brand building or lifetime value, the website may take precedence. If immediate sales volume or new customer acquisition is key, marketplaces can lead.

The best-performing brands treat both touchpoints as connected ecosystems. When insights are shared across teams, messaging becomes sharper, content becomes more consistent, and the entire funnel performs more effectively.

Unifying fragmented marketing efforts

Integration remains a challenge because many organizations are still built around channel-specific silos. SEO, paid media, CRO, and commerce teams often work with separate KPIs, tools, and timelines. Even if everyone agrees that integration is important, they often lack the structure or processes to make it happen. As a result, teams optimize their individual touchpoints without a clear view of the full customer journey.

We have seen that one of the most effective ways to drive alignment is by shifting the focus from team-based KPIs to shared business metrics. Setting a consolidated KPI, whether it is revenue per visitor, cost per acquisition, or customer lifetime value, creates a unified definition of success. Without this, collaboration remains vague and integrated actions struggle to gain momentum.

We also recommend that our clients build a habit of knowledge sharing across teams. Test results from CRO, SEO insights, and marketplace data should be shared regularly. Even if the information seems irrelevant at first, it might provide a crucial piece of the puzzle for another team.

Marketing integration does not need to begin with complex workflows. It starts with clear goals, open communication, and a willingness to connect the dots across teams.

Once, I worked with a furniture brand that sold through both its eCommerce site and marketplaces. Each channel was being optimized in silos, but once we consolidated insights from SEO, paid media, CRO, and marketplace data, we uncovered a broader opportunity to improve the entire customer journey.

From SEO, we identified a growing trend in non-branded, need-based searches like “space-saving dining table” or “comfortable desk chair for long hours.” These reflected specific lifestyle needs rather than general product categories. At the same time, paid media campaigns were bringing in solid traffic, but conversions were falling short in terms of return on ad spend.

CRO insights from session recordings showed users were highly engaged with product visuals and specifications but often missed messaging around practical benefits—like ease of assembly, fit for small spaces, or comfort for long use. Meanwhile, marketplace data revealed that listings with solution-focused descriptions and lifestyle imagery saw higher click-through and add-to-cart rates.

Using these cross-channel insights, we aligned messaging across platforms. Product pages were updated to highlight real-world benefits, ad creatives were refreshed to match search intent, and marketplace listings were optimized for clarity and usability. The result? Stronger conversion rates, better engagement, and more consistent performance across all channels.

CRO myth that holds brands back

A common myth is that what worked for one brand will work for another. Clients often ask us to apply the same tactics they see on competitor websites. While it is natural to look to others for inspiration, copying a test or UX element without understanding the context can lead to misleading results.

Every website has different user behavior, value propositions, and conversion barriers. What we can do, however, is use competitor insights as a starting point to build hypotheses. They can inform what to test, but not what to assume.

Our approach is always to validate ideas through user data and structured experimentation. Winning ideas come from understanding your own users, not replicating someone else’s playbook. The brands that grow through CRO are the ones that stay curious, test with intent, and learn from their own audience.

CRO is not about blindly adopting what others are doing. Just because something worked for a competitor does not mean it will work for your audience, product, or brand experience.

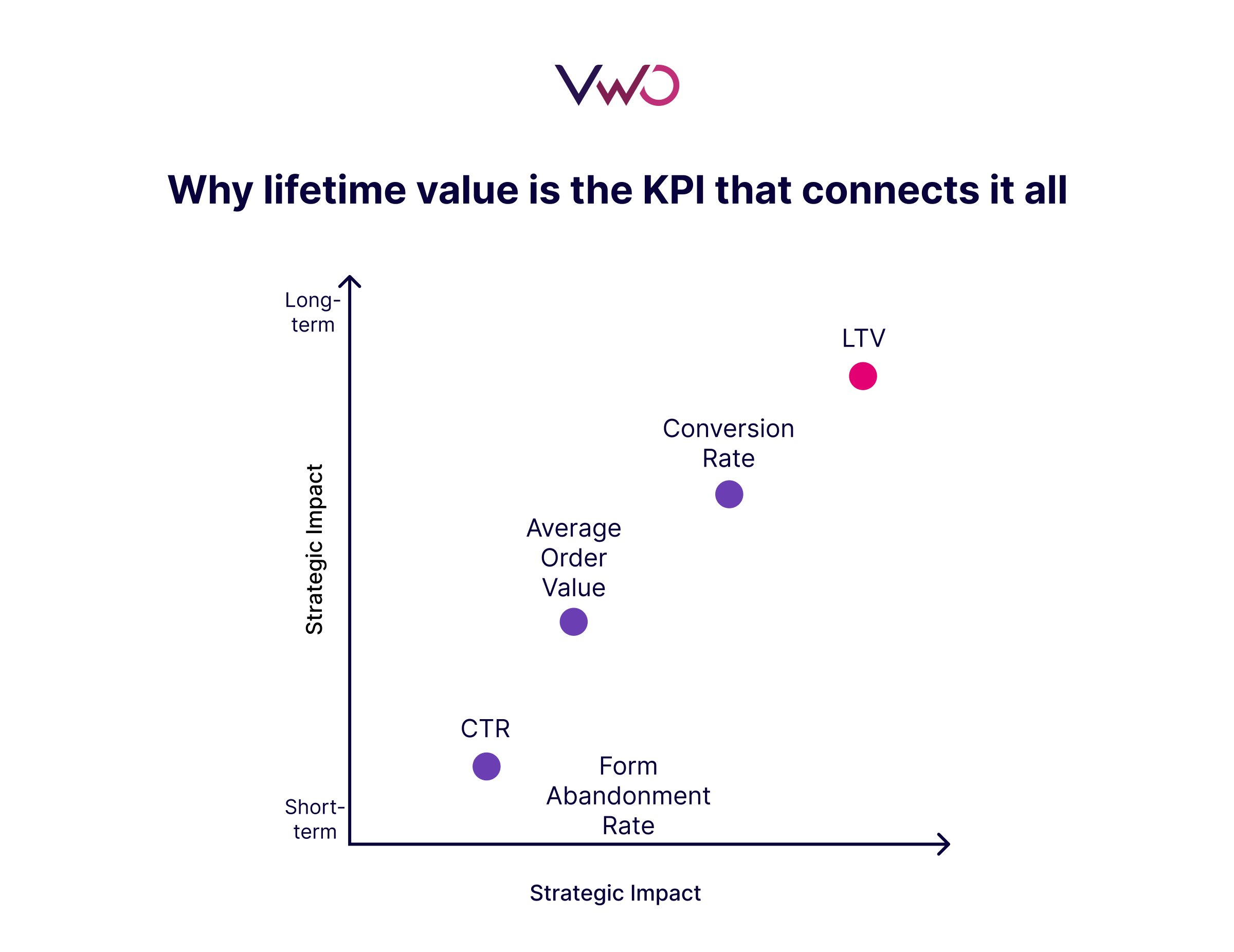

Why lifetime value is the KPI that connects it all

If I had to choose one KPI, it would be Customer Lifetime Value. LTV reflects the total value a customer brings over the course of their relationship with a brand. It helps marketers look beyond short-term wins and focus on the long-term quality of customers acquired. In a landscape where acquisition costs continue to rise, measuring LTV allows brands to prioritize sustainable growth rather than chasing conversions at any cost.

LTV is also a powerful way to unify marketing efforts across channels. It reveals, for example, that users acquired through paid media might convert quickly but churn faster, while those coming from organic search might deliver more consistent value over time.
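To make the channel comparison concrete, here is a minimal sketch that computes a simple historic LTV (average total revenue per customer) grouped by acquisition channel. It assumes each order record carries the channel that first acquired the customer; the `ltv_by_channel` function and the order values are made up for illustration and are not data from the interview.

```python
from collections import defaultdict


def ltv_by_channel(orders):
    """Average total revenue per customer, grouped by acquisition channel.

    `orders` is an iterable of (customer_id, acquisition_channel, order_value).
    This is a historic average, not a predictive LTV model.
    """
    revenue_per_customer = defaultdict(float)
    channel_of = {}
    for customer_id, channel, value in orders:
        revenue_per_customer[customer_id] += value
        channel_of.setdefault(customer_id, channel)

    totals = defaultdict(float)
    counts = defaultdict(int)
    for customer_id, revenue in revenue_per_customer.items():
        channel = channel_of[customer_id]
        totals[channel] += revenue
        counts[channel] += 1
    return {channel: totals[channel] / counts[channel] for channel in totals}


# Hypothetical orders: two one-time paid customers, one repeat organic customer.
orders = [
    ("c1", "paid media", 80.0),
    ("c2", "paid media", 60.0),
    ("c3", "organic search", 50.0),
    ("c3", "organic search", 55.0),
    ("c3", "organic search", 45.0),
]
result = ltv_by_channel(orders)
print(result)  # {'paid media': 70.0, 'organic search': 150.0}
```

In this toy data the paid customers have larger first orders, yet the organic customer is worth more once repeat purchases are counted, which is the kind of pattern a first-order conversion metric would hide.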

However, to fully leverage LTV across owned, paid, and marketplace channels, the right attribution model is essential. First-click and last-click models often distort the true contribution of supporting channels. A data-driven or multi-touch attribution model helps surface how different touchpoints contribute to customer acquisition and retention, making LTV more accurate and actionable.
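The distortion is easy to see on a single customer path. The sketch below splits one conversion's value across touchpoints under first-click, last-click, and a linear multi-touch rule. Linear is only the simplest multi-touch scheme, and data-driven models are fitted from observed journeys and are not shown here. The `attribute` function, the path, and the value are hypothetical.

```python
from collections import defaultdict


def attribute(path, value, model="linear"):
    """Split `value` across the channels in `path` under a simple rule.

    path:  ordered list of channel names the customer touched.
    model: "first_click", "last_click", or "linear" (equal share per touch).
    """
    if not path:
        raise ValueError("path must contain at least one touchpoint")
    credit = defaultdict(float)
    if model == "first_click":
        credit[path[0]] += value
    elif model == "last_click":
        credit[path[-1]] += value
    elif model == "linear":
        share = value / len(path)
        for channel in path:
            credit[channel] += share
    else:
        raise ValueError(f"unknown model: {model}")
    return dict(credit)


# Hypothetical journey ending in a purchase worth 120.
path = ["organic search", "paid media", "marketplace", "paid media"]
first = attribute(path, 120.0, "first_click")   # {'organic search': 120.0}
last = attribute(path, 120.0, "last_click")     # {'paid media': 120.0}
linear = attribute(path, 120.0, "linear")
# linear: {'organic search': 30.0, 'paid media': 60.0, 'marketplace': 30.0}
```

Under either single-touch rule the marketplace visit receives no credit at all, while the linear split shows it as one of several contributing touchpoints.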

When brands adopt LTV with proper attribution, they gain clarity on which experiences drive not just conversions, but long-term value. This enables smarter budget allocation, refined targeting, and more meaningful experimentation across the entire user journey.

Test ideas that directly impact LTV

When considering lifetime value, I look beyond the initial conversion and focus on the entire customer experience.

Here are three areas where we’ve seen testing drive strong results in retention, repeat purchases, and long-term engagement:

Segment-based homepage personalization

Tailoring the homepage experience based on user segments is one of the most effective ways to drive long-term value. We test variations in messaging depending on whether the visitor is new, returning, or a former purchaser. For first-time users, the focus may be on brand reassurance or product credibility. For returning customers, we emphasize loyalty perks, previously viewed products, or exclusive restock alerts. These subtle shifts help make the experience feel more relevant, increasing the likelihood of repeat engagement and deeper brand interaction over time.

Personalized thank you pages

After a user completes a purchase, we frequently test personalized “thank you” pages that go beyond confirmation. These variations may include product care tips, referral incentives, or suggested next steps. This reinforces the value of the purchase and keeps the customer engaged even after the transaction.

Post-purchase upsell offers

We also experiment with bundled post-purchase offers using phrasing like “shop the full set” or “complete your collection.” These bundles can increase average order value and encourage customers to return for complementary items—a simple yet effective way to drive repeat purchases and enhance perceived value.

Leverage AI-suggested metrics in VWO to automatically track key campaign events like clicks, form submissions, and revenue conversions. These smart metrics, powered by AI, ensure consistent and insightful tracking across multiple campaigns.

What drives Jin’s approach to experimentation

One belief that shapes how I approach marketing is that there are no permanent answers, only better questions and better validations. In many organizations, there is pressure to present strategies as absolute solutions. But in reality, the strongest strategies come from teams that are willing to test, learn, and adapt quickly based on evidence rather than assumptions.

I also believe that many of today’s so-called “best practices” were once uncertain or even unpopular ideas. What feels like common sense now likely started as a hypothesis that someone had the courage to test. Early results may not show dramatic uplift, but they lay the groundwork for future decisions. Small learnings accumulate into patterns, and those patterns eventually define what becomes the new standard.

With AI evolving rapidly, user behavior constantly shifting, and newer generations bringing different digital habits and cultural contexts, we can no longer rely on fixed norms. What worked last year may already be outdated. That is why I believe every idea should be tested and every assumption validated. The brands that stay curious and committed to continuous learning are the ones that stay relevant.

Conclusion

We hope this conversation helped answer some of the questions you’ve had about building a sustainable experimentation program—whether it’s how to handle failed tests, how to align teams across channels, or how to use LTV as a guiding metric. Jin Ma’s experience offers a grounded, practical view into what it really takes to scale CRO with impact.

If you’re looking to apply these ideas in your own organization, VWO gives you the tools to turn insight into action—from behavior analysis to A/B testing and continuous growth tracking. Start your experimentation journey with VWO.