The World Has Moved On From Plain Vanilla A/B Testing. Have You?

Ten years ago, when our CEO Paras wrote about A/B testing on Smashing Magazine, the article was a hit! A/B testing was still a lesser-known term back then. Today, it is something data-driven marketers swear by. In one of our user surveys, a respondent even called it “the bread and butter of his job”.

But do we do it right? Are we chasing the right metrics? Are our hypotheses strong enough?

And, importantly, are we still running plain vanilla A/B tests? Tests such as changing button colors or tweaking some copy and waiting to see what happens. Worst of all, testing a change only because another website increased its conversions with it, on the assumption that it should work for us as well.


No wonder many of our A/B tests fail to deliver the results we want.

This is partly because the changes we test are not persuasive enough, and partly because we still don’t play it like pros. To help you get better at A/B testing and run tests that affect the bottom line of your business, not just your website, here are 6 tips:

1) Have a well-thought-out hypothesis to test

Begin by looking at data from your analytics tool. Find out where most people are dropping off. Also look at the pages where people spend the most time yet don’t complete your goals. These pages could be your starting points. For even more insight, you could run usability tests: ask real people to complete some tasks on your website and watch where they get stuck.

Once you have identified the pain points your visitors face, form hypotheses about changes that could solve them.

You can read this excellent post on how to form a winning hypothesis for A/B testing.

2) Consider segmenting your traffic before testing

One common practice among marketers is to run tests on all website traffic. For certain tests this may be necessary, but most of the time it just sets your tests up for failure. Keep in mind that different people coming to your website have different motives and will therefore behave differently.

For example, people coming from search ads are very different from those coming from banner ads. While the former were actively searching for something, the latter found you when they were in the middle of another activity (like reading their favorite blog).

To strengthen the point further, let’s say you run an eCommerce store and are about to test shiny new trust seals above the “Buy Now” button. Your loyal customers will probably be indifferent to this change; what you really need to know is whether adding the trust signals improves the rate at which new visitors convert into customers. Running such a test on everyone who comes to the website will muddy the numbers and won’t give you accurate business results.

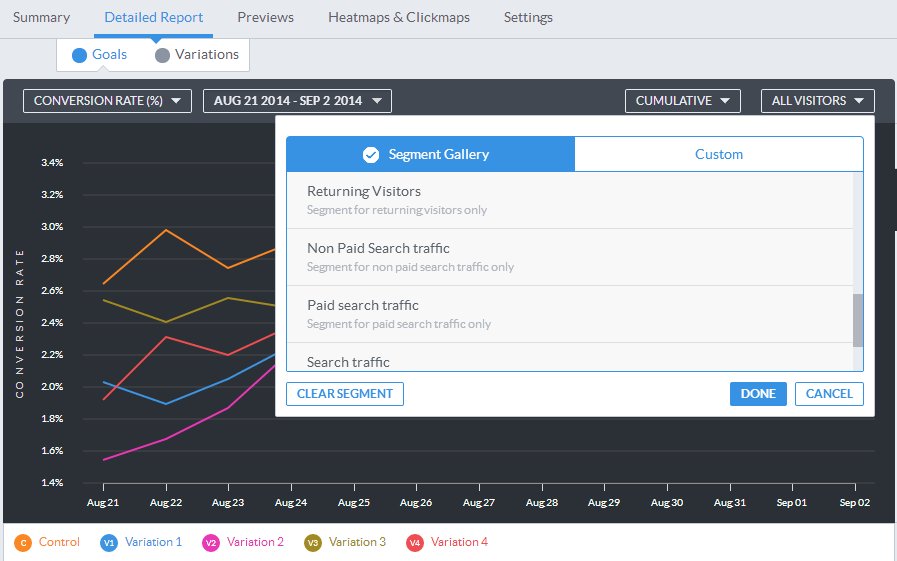

Hence, it’s smart to segment your traffic before setting up A/B tests. With VWO Testing, you can easily segment traffic based on 15+ options like location, referring URL, type of traffic and so on.

Don’t worry if you think segmenting traffic before running a test would leave you with fewer visitors and therefore a longer wait for results. With VWO Testing, enterprise customers can also analyze test results after completion by various segments, such as new visitors, returning visitors, and mobile or desktop visitors, among others.
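To make the trust-seal example concrete, here is a minimal Python sketch with entirely made-up numbers. The segment names, the `trust_seals` variation label and both helper functions are hypothetical illustrations, not part of VWO. It computes conversion rates per segment and for all traffic combined, showing how a clear lift among new visitors shrinks once indifferent returning visitors are mixed in.

```python
from collections import defaultdict


def conversion_rates(visits):
    """Return {(segment, variation): rate}, plus ("all", variation) totals.

    `visits` is an iterable of (segment, variation, converted) tuples.
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [conversions, visitors]
    for segment, variation, converted in visits:
        # Count each visit once for its own segment and once for "all".
        for key in ((segment, variation), ("all", variation)):
            counts[key][0] += int(converted)
            counts[key][1] += 1
    return {key: conv / total for key, (conv, total) in counts.items()}


def make_visits(segment, variation, conversions, visitors):
    """Hypothetical helper that generates illustrative per-visitor records."""
    return [(segment, variation, i < conversions) for i in range(visitors)]


# Made-up data: trust seals help new visitors; returning visitors are indifferent.
visits = (
    make_visits("new", "control", 100, 5000)
    + make_visits("new", "trust_seals", 150, 5000)
    + make_visits("returning", "control", 400, 5000)
    + make_visits("returning", "trust_seals", 400, 5000)
)

for key, rate in sorted(conversion_rates(visits).items()):
    print(key, f"{rate:.1%}")
```

In this invented data, the variation lifts new-visitor conversions from 2.0% to 3.0% (a 50% relative lift), but the blended rate only moves from 5.0% to 5.5% (a 10% lift), because returning visitors dilute the effect.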

3) Test one “business” element at a time

It’s generally considered a best practice to test one website element at a time to know the exact effect of the change.

I would like to present a different perspective here. Most businesses don’t have the time to change one element, wait for the results and only then test the next change. This approach can take forever and puts constant pressure on your development team to code one small change after another.

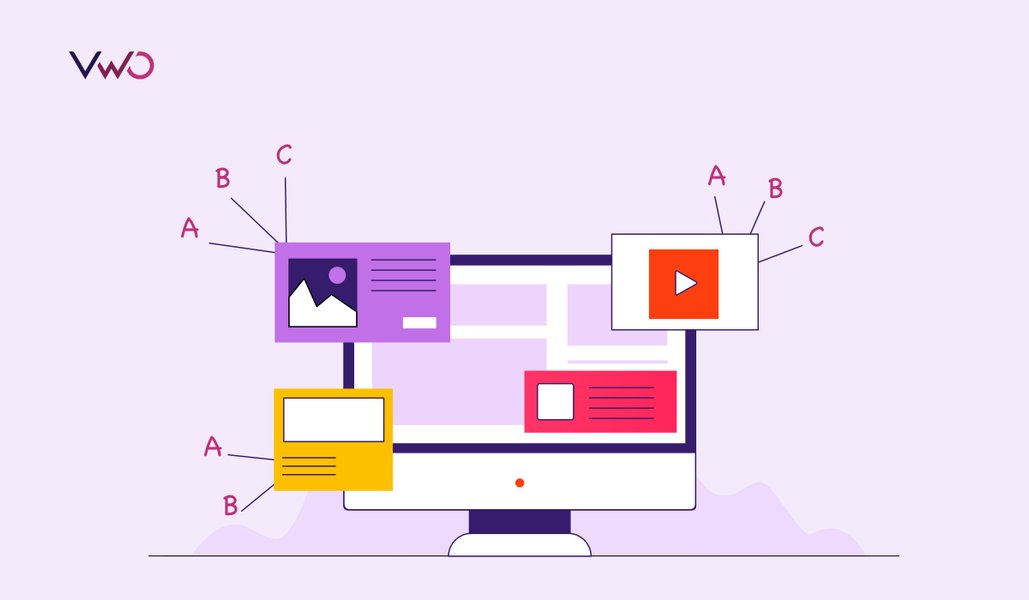

Instead, focus on changing one “business” element at a time.

Suppose you survey visitors who abandon the checkout page of your eCommerce website, asking, “What’s stopping you from making this purchase?” Going through the responses, you find that new visitors are not completing the purchase because they don’t trust the site. It makes complete sense to test a customer testimonial, trust seals, and a number such as “5896 orders completed this month” together. These are three website element changes but only one business element change, so the test tells you the effect of one factor (trust, in this example) on your goals.

4) When choosing test goals or metrics to track, focus on those that move the needle

Often we chase goals that don’t really affect our business, or we track two or more goals at once. It’s quite likely that the variation will beat the control on one goal and lose on another. Such tests end up inconclusive and misleading.

The goals you track should reflect what matters most to your business. If you are an eCommerce store, I am assuming that’s a purchase. Similarly, for a SaaS business, tracking engagement (say, moving from the homepage to the pricing page) is a good goal, but measuring the actual number of sign-ups is better.

Since you are spending time, money and effort, why not measure what really matters?

Of course, measuring many smaller goals (micro-conversions) doesn’t hurt. But if these don’t lead to changes in your business graph, you are wasting your time. It all boils down, again, to the need for stronger hypotheses and smarter goals to track.

The key is this: if you choose smarter metrics that really affect your business, you are more likely to test changes that move the needle.

5) Wait for your tests to achieve statistical significance

This is important. I will explain it with a very simple example. Let’s say you toss a coin 10 times and it lands heads 8 times. Can you confidently say it’s loaded? The answer is no.

Let me scale the numbers up a bit: suppose I toss it 100 times and heads shows up 70 times. Now? I am getting suspicious, because a fair coin produces a result this lopsided far less than 1% of the time. Still, I would like more tosses before I call it.

Let’s say 10,000 tosses and 7,800 heads. Now? Yes, I can confidently say it’s loaded!

Notice that the share of heads was between 70% and 80% in all three cases, yet my confidence grew only as the number of tosses grew. That is what makes statistics interesting.
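The coin intuition can be put into numbers with an exact binomial test. The Python sketch below (the function name is ours, for illustration) computes a two-sided p-value: how often a fair coin would land at least as far from 50/50 as the observed result.

```python
from math import comb


def fair_coin_p_value(heads: int, tosses: int) -> float:
    """Two-sided exact binomial p-value for the hypothesis 'the coin is fair'.

    This is the probability that a fair coin lands at least as far from
    50/50 as the observed result, counting both directions.
    """
    k = max(heads, tosses - heads)  # the larger of the heads and tails counts
    upper_tail = sum(comb(tosses, i) for i in range(k, tosses + 1))
    # Double the upper tail to cover the symmetric lower tail; cap at 1.0
    # because the centre is counted twice when k == tosses / 2.
    return min(1.0, 2 * upper_tail / 2**tosses)


for heads, tosses in [(8, 10), (70, 100), (7_800, 10_000)]:
    print(f"{heads}/{tosses} heads: p = {fair_coin_p_value(heads, tosses):.3g}")
```

For 8 heads in 10 tosses, p is about 0.109: a fair coin does this roughly 11% of the time, so the result proves nothing. For 70 in 100, p is well below 0.001, and for 7,800 in 10,000 it is so small that it underflows to 0 in floating point.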

The more data points you have, the more trustworthy the conclusions you can draw from them. With only a handful of data points, even a striking result can easily turn out to be a fluke.

The same is true for your A/B testing results: they are more trustworthy when they rest on a good amount of data. The maths, though, is a bit more complex than in the example above. The good part is that you can leave it to your A/B testing tool. In VWO’s test reports, you will always see a value called Statistical Significance (or the chance to beat original). Let this value reach at least 95% before you trust the results. (Bonus learning for those who hate maths: 95% statistical significance means, roughly, that there is only a 5% probability that the increase in conversions you see in the test is down to chance.) Better still, pre-calculate the number of days you need to run the test using our simple A/B split testing duration calculator.

This way, you save yourself from making incorrect judgments by obsessively checking the data every day.


6) Analyze the bigger picture out of your test results

Often, we attribute the success of our tests too literally to the changes we make. For example, if removing form fields increased our conversions, we miss the bigger picture: people are not ready to hand over too much information, or one specific piece of information. Instead, we take away an incomplete learning, such as “fewer fields equal more submissions”.

What you should really evaluate here is why removing certain fields made the form easier to fill in. In a later test, you could even swap the current fields for the removed ones and measure the impact in a similar scenario to get a stronger learning.

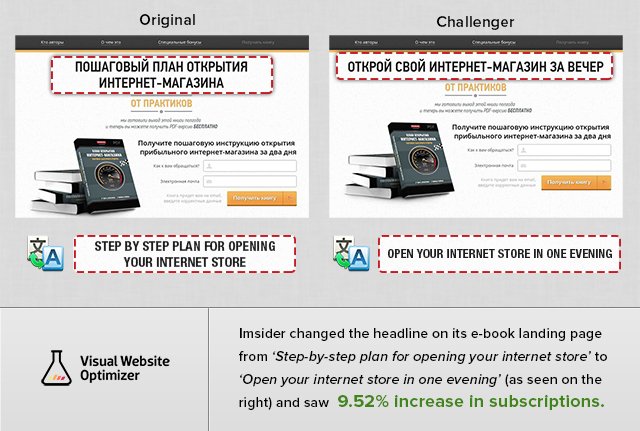

Hence, always try to analyze test results more holistically. If it was a headline change, compare the messages behind the control and the variation, not just the wording. One of our customers A/B tested the headlines “Step by step plan for opening your internet store” and “Open your internet store in one evening”. The second headline won and increased leads by 9.52%. The learning from this test is that visitors were more enticed by the idea that they could open an internet store so quickly, something they might previously have thought of as a lengthy process.

Understanding your test results also gives you direction for further tests. For example, suppose adding a testimonial to a SaaS website that reads “The product paid for itself in less than 3 months. I love it.” increased conversions. It is important to understand that this testimonial doesn’t just increase trust (as expected from a testimonial) but also addresses the cost of the product. The next test in such a scenario could be based on the hypothesis that clearly displaying the product’s ROI on the website would increase conversions.

The key here is to take a learning from every test you run, even the failed ones.

That’s it for now. Now that you know these 6 tips, my advice is simple: test often and test smarter!

Let me know if you liked this post or if there is something I missed. Feel free to reach out to me at marketing@vwo.com!