As 2026 begins, many businesses set experimentation as their top goal. The challenge is turning this resolution into actionable, sustained progress.

It’s easy to start strong, but running full-fledged optimization campaigns is anything but straightforward. The key to lasting success lies in perseverance. And when the inevitable roadblocks hit, you don’t have to figure it all out on your own. That’s where our CRO Perspectives series comes in.

We bring you insights from experts who have been in the trenches, sharing their stories, answering your toughest questions, and inspiring you to keep pushing forward.

In the twelfth installment of CRO Perspectives, we feature an exclusive interview with Phillip Wang, Head of eCommerce, APAC at Kaspersky, who shares his journey and valuable advice on how to approach experimentation effectively for lasting results.

Leader: Phillip Wang

Role: Head of eCommerce, APAC, Kaspersky

Location: China

Speaks about: Customer Experience • eCommerce • Digital Growth • Analytics • Strategy

Why should you read this interview?

Phillip Wang’s journey in the marketing industry spans over a decade, with extensive experience working at leading companies in China.

For the past four years, he has been with Kaspersky, a global leader in cybersecurity, where he progressed from Conversion Optimization Manager to his current role as Head of eCommerce, APAC. In this capacity, he drives digital transformation and growth strategy for the company across markets in Southeast Asia, Greater China, and Korea.

Drawing on his knowledge of CRO and eCommerce, Phillip walks through the key areas of CRO that will guide you toward effective optimization and better outcomes.

Discovering key insights from Phillip’s journey into CRO

My entry into CRO began with the optimization of affiliate landing pages and gradually expanded to include other key touchpoints in the customer experience. From email messaging to website flow to shipping product features, being the only person with “optimization” in their title in a newly funded tech start-up definitely offered me a range of responsibilities.

While nothing replaces getting your hands dirty to quickly build up a memory of what works and what doesn’t, immersing myself in the craft in other ways also helped immensely:

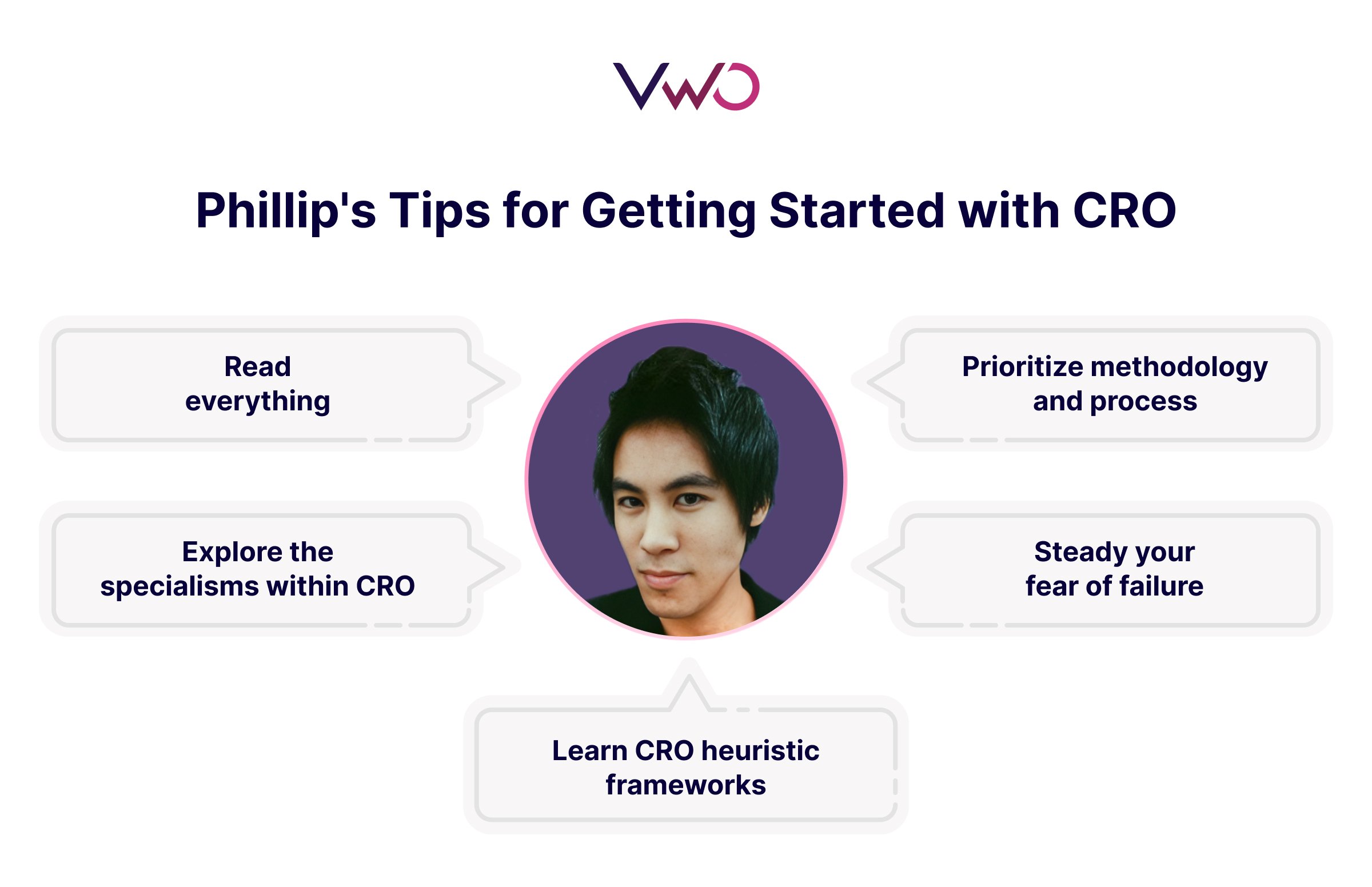

Read everything

CXL, Baymard, Making Websites Win—improve your understanding by devouring any relevant resource you can. You’ll be learning from experts who have already gone through what you’re trying to do now. Sometimes you’ll need a book, not just a 5-minute article, to uncover the main message with enough depth to truly understand it.

Explore the specialisms within CRO

UX, data analytics, digital marketing, consumer psychology—each deserves independent study, which can feel overwhelming. Instead, find the area that aligns with your natural aptitude and professional interest. For the most part, it’s enough to understand each domain’s role in the optimization of the customer experience and become adept at collaborating with subject-matter experts towards that end goal.

Learn CRO heuristic frameworks

Tools like LIFT, Lever, or the Conversion Sequence Heuristic are helpful to move from point A to B without getting stuck in analysis paralysis. They provide a fresh lens to assess problems quickly, but you still need the knowledge from other business areas (like the specialisms mentioned above) to use them effectively. Don’t do it alone—these frameworks work best as collaborative exercises.

Prioritize methodology and process

Without a deliberate approach to optimization, you’ll often feel like you’re swimming in the deep end. Reflect on the steps that make up your approach as well as what can be improved. Improving clarity around your system of operations will help in the long run, both to demystify the work involved in CRO for your team at large and to support you as you scale and seek stakeholder buy-in.

Steady your fear of failure

Fear inhibits change, which in CRO could mean staying within the comfort zone of button color or hero image testing to avoid tarnishing a “winning” streak.

It’s natural to feel like each experiment reflects on your abilities, but real learning and innovation come through experimentation—and failure is a vital part of that process.

Building an in-house experimentation team

An important consideration when establishing an in-house experimentation team is, of course, the structure of the team itself. It’s rare to find someone who has deep expertise across statistics, product design, web development… the list goes on, and it would also be unfair to expect all of this from one (super) human being.

However, finding someone with a strong growth mindset is much more attainable. CRO is a cross-disciplinary field, so it’s important to recruit people who understand this and are passionate about development. For your first hire, look for someone with experience using data to drive business impact and with a strong focus on the customer’s perspective—that’s a winner.

By now, we’ve all seen the data showing that companies with well-executed online experimentation programs outperform those without them. A key, often overlooked detail is the ‘well-executed’ part: simply launching experiments without impactful results or with measurement issues won’t get you ahead.

To position yourself for success, consider some of these questions:

● What profiles will we prioritize for hiring?

● Should the team be centralized or decentralized?

● How will this team complement adjacent functions?

● What’s our plan for cross-functional collaboration?

● How do we secure top management buy-in?

● What is our current/future MarTech stack?

● Is our measurement and tracking reliable?

● What internal training should we provide?

● How is experimentation viewed within the company?

Planning CRO tests that boost revenue and conversions

We should first note that the level of urgency to ‘move the needle’ will affect your execution. Are we operating under calmer BAU (Business As Usual) times, or are these all-hands-on-deck times?

Depending on the state of the business, your approach to optimization should adapt—not just in terms of the level of effort or risk tied to each approach, but also the communication required with stakeholders. Think of low contrast (“to learn”) versus high contrast (“to earn”) experimental design: these concepts aren’t mutually exclusive, but the complexity of a solution will impact everything from operations to stakeholder expectations. Ideally, a balance between the two helps create a more strategic and robust roadmap.

Another framework that complements roadmap planning is ‘Quality x Quantity.’ From a high-level perspective, the success of your experimentation roadmap depends on how well you can execute impactful tests (quality) and how many of them you can run (quantity). With your organization in mind, working backwards from both levers will help you determine the steps you need to follow (and continuously improve on) to maintain your critical outputs: customer-centered insights that fuel your experiments and intentional testing velocity.
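To make the two levers concrete, here is a rough sketch of how quality and quantity might combine in a roadmap estimate. It is a toy model, not a method described in the interview: the scenario names, win rates, and uplift figures are hypothetical, and it assumes each test's expected uplift compounds independently, which real programs rarely satisfy.

```python
from dataclasses import dataclass


@dataclass
class RoadmapScenario:
    name: str
    tests_per_year: int    # quantity: testing velocity (hypothetical)
    win_rate: float        # quality: share of tests that produce a winner (hypothetical)
    avg_win_uplift: float  # quality: average relative uplift of a winner (hypothetical)


def expected_winners(s: RoadmapScenario) -> float:
    """Expected number of winning tests in a year."""
    return s.tests_per_year * s.win_rate


def expected_annual_uplift(s: RoadmapScenario) -> float:
    """Compound the expected per-test uplift over every test run in a year.

    Assumes tests are independent and their effects multiply, which is a
    simplification made only for illustration.
    """
    per_test = s.win_rate * s.avg_win_uplift
    return (1 + per_test) ** s.tests_per_year - 1


scenarios = [
    RoadmapScenario("few, high-quality", tests_per_year=12, win_rate=0.30, avg_win_uplift=0.05),
    RoadmapScenario("many, low-quality", tests_per_year=40, win_rate=0.10, avg_win_uplift=0.02),
    RoadmapScenario("balanced", tests_per_year=24, win_rate=0.25, avg_win_uplift=0.04),
]

for s in scenarios:
    print(
        f"{s.name}: ~{expected_winners(s):.1f} winners, "
        f"~{expected_annual_uplift(s):.1%} expected uplift"
    )
```

With these made-up inputs, the balanced scenario comes out ahead of both extremes, which is the point of treating quality and quantity as levers to work backwards from rather than choosing one.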

To truly ‘move the needle,’ a strong focus on the roadmap is necessary, as it’s clear you’ll need a series of tests, not just one, to achieve it. Thinking about how to sequence testing to take advantage of internal resource capacity (research, design, development teams, etc.) while keeping the concepts mentioned above in mind will support this goal.

Build a robust pipeline of AI-generated optimization ideas with VWO Copilot. It generates tailored campaign ideas aligned with your optimization goals. You can add them directly to your hypothesis bank, ensuring a continuous pipeline of testing opportunities.

Building a collaborative vision for growth and experimentation

Recognizing that we don’t have all the answers is an important starting point. It’s crucial as we strive to apply the scientific method toward problem discovery, business growth, and digital transformation at large, while keeping ourselves grounded with this sometimes lofty mission.

Knowing that we can’t rely on individual experience alone to guide us with critical business decisions, and that we must go back to the basics by creating our own data, is a key part of this too.

The end result is hopefully a more nuanced view of experimentation as we carry out our day-to-day, helping us think beyond singular, isolated testing and instead become proponents of change and growth within the business.

Opening up this discussion with the team to collaboratively build out a shared vision also grounds our efforts in purpose and aligns everyone on the bigger picture.

Turning a challenging problem into a meaningful impact

A critical server problem meant we had to forgo an evolutionary design approach and instead replace a locally adapted website with a radically different, generic template—without testing.

Unsurprisingly, performance dropped, and pressure was high.

Not defaulting to a solution-first response but investing time and effort in diagnosis to get beyond surface-level assumptions is CRO 101, and this approach saved us time and effort, and protected our impact, in the long run. By first building a decent understanding of the problem, we were able to treat it effectively.

By consolidating user findings from multiple research methods—web analytics (“what and where is the problem?”), behavioral analytics and preference testing (“why is it a problem?”), as well as guidance from behavioral economics (choice architecture)—we could identify the root of the issue and take corrective action.

Spending time (and remaining calm) to define the scope of the issue was helpful, especially in the face of mounting pressure. This improved clarity and confidence in our decision-making, and made it easier to develop hypotheses that addressed the central problem and, thus, created meaningful impact.

Sometimes quick decisions and intuition are necessary, but a well-informed approach pays off when navigating complex situations.

Winning stakeholder buy-in for CRO

When engaging with stakeholders, are you communicating with the mindset that you are The Expert, or are you entering that conversation with an open mind? Do both parties fully understand each other’s positions, or is the conversation built on misaligned foundations?

I like the simple expression, ‘strong opinions, loosely held,’ as it underscores how we should do our due diligence but also welcome and respect counterarguments. This way of approaching stakeholder alignment helps set the tone for communicating with authority (based on substance) while still remaining approachable.

More specific to CRO, another principle I value is “show, don’t tell.” A 50% conversion rate uplift and a 20% RPV uplift both sound amazing and, without proper context, equally implausible. Rather than just reciting your winning numbers to an audience, walk them through it: show your stakeholders the insight that led to the win. This could be the new way you planned your roadmap, designed your experiment, collaborated across functions, triangulated your research, or something else.
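As a small illustration of why a headline uplift needs context, the sketch below computes the same +50% relative uplift for two tests and pairs it with a normal-approximation 95% confidence interval for the difference in conversion rate. The visitor and conversion counts are hypothetical, and the interval is a standard textbook approximation, not necessarily the statistics any particular testing platform uses.

```python
import math


def relative_uplift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant over the control, e.g. 0.5 for +50%."""
    return (variant_rate - control_rate) / control_rate


def diff_confidence_interval(conv_a, n_a, conv_b, n_b, z=1.96):
    """Normal-approximation CI for the absolute difference in rate (B - A).

    z=1.96 corresponds to a 95% interval.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical tests: identical headline uplift, very different evidence.
small = dict(conv_a=4, n_a=200, conv_b=6, n_b=200)
large = dict(conv_a=400, n_a=20_000, conv_b=600, n_b=20_000)

for name, t in (("small test", small), ("large test", large)):
    uplift = relative_uplift(t["conv_a"] / t["n_a"], t["conv_b"] / t["n_b"])
    low, high = diff_confidence_interval(**t)
    print(f"{name}: uplift={uplift:+.0%}, 95% CI for difference=({low:+.4f}, {high:+.4f})")
```

Both tests report the same uplift, but the small test's interval spans zero while the large test's does not. Showing stakeholders that kind of context alongside the number is one way to make a result plausible rather than just impressive.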

In my experience, this can carry far more weight in your professional relationships since you are now inviting stakeholders into the conversation and making CRO relevant to them, rather than just reading off a test results report.

Scaling experimentation efforts

Start small and prove your value. Value doesn’t need to equal money in the bank—at least not immediately. It could be introducing a new way of thinking, improving problem-solving approaches, or deepening customer understanding.

But always think: how can this be substantiated? How can I connect this back to top business priorities that my manager—and my manager’s manager—care about? This is especially critical when full buy-in is still lacking.

Even if the team is still getting familiar with CRO, there should always be a clear takeaway from the investment (time, effort, money…) you put into your program. Reflect on it and demonstrate your role: what else can be optimized, whether in program operations, hypothesis development, communication of learnings, or elsewhere?

Only through persistent focus on generating value (learnings, ROI, culture…) will you naturally grow towards building a stronger team and experimentation program.

Conclusion

We hope Phillip’s answers provided valuable takeaways and sparked new ideas for your CRO journey. A big thanks to him for offering thoughtful, in-depth insights on each topic. Now, it’s up to you to translate these insights into action.

One area worth exploring further is high vs. low contrast testing, a topic Phillip touched on during the conversation. Our follow-up piece breaks down how to select the right contrast level for different test types and offers real-world examples to guide your choices.

With focus, the right methods, and proven frameworks, progress is within reach. And if you’re looking for a platform to scale your efforts or kickstart your journey, our team is ready to support you—connect with us today.