Welcome to the tenth post in our “CRO Perspectives: Insights from Industry Thought Leaders” series. This time, Ian Fong, a leading name in Australia’s CRO industry, shares his expertise on various aspects of CRO.

Without further ado, let’s get started.

Leader: Ian Fong

Role: Managing Director, Experior

Location: Australia

Speaks about: Digital Strategy • Conversion Rate Optimization • User Experience • Experimentation • User Research

Why should you read this interview?

Ian brings over 15 years of experience in conversion optimization, helping businesses excel in the digital space. As Managing Director of Experior, he leads a team that consistently delivers gains in leads, conversions, revenue, and average order value.

Ian started his career as a digital marketer at an agency before moving in-house. In 2014, he co-founded Yoghurt Digital, a digital marketing agency, and grew it from a team of 3 to 35. Given his depth of experience, his insights will be valuable to anyone looking to improve their CRO strategy.

Ian’s journey with testing and key lessons learned

My very first experiment was as an eCommerce manager. I fell for all those shiny case studies you see online claiming that simply changing a button color will bring big results. Of course, my first test was inconclusive, and that led me down the path of learning how to do optimization properly.

Conversion Rate Optimization (CRO) is mostly perceived as a way to grow revenue, but we often overlook the fact that it also helps save costs, reduce waste, and guide business strategy. Experiments are a true measure of whether a business is “data-driven” or not.

Beyond just increasing conversion rates, I believe the true power of experiments lies in helping businesses make lower-risk decisions.

Testing alternatives for businesses

We often associate a “test” with randomized controlled trials (RCTs) or A/B tests. RCTs are the best form of evidence, for sure, but there are other ways to “test” if you don’t have enough resources.

Businesses that don’t have resources for RCTs can do a few things:

- Start with customer research like interviews or user tests

- Formulate ideas based on research insights

- Perform prototype testing on the new changes, or run a before/after analysis

I also think resourcing isn’t as big an issue as it seems, because businesses can run low-effort tests such as copy tests. Copy changes don’t need a fully fleshed-out design before they can be developed, so they are less resource-intensive. The next level up involves design and development work that requires proper planning, such as tests that change the layout or that introduce or change existing functionality.

Securing stakeholder buy-in for experiments

Start small and prioritize low-effort (low-resource) experiments to prove your case. Once you’ve got a few runs on the board, it’s easier to get buy-in for the bigger stuff.

Get stakeholders involved in ideation. There are an infinite number of ways to solve a problem, and getting their buy-in early will help your entire program. It’s always fun when their assumptions are challenged.

Adapting to changing customer behavior

Customer research needs to be performed on an ongoing basis to keep up with trends and shifts in buyer behavior. It’s not enough to do research once and then run tests; research must run in parallel. At Experior, we suggest 90-day sprints that prioritize research and then rapid testing. We frequently adjust our roadmap based on new insights, not just from tests but also from research.

We start every engagement with thorough customer research. We prioritize rapid research techniques that will allow us to get a test up and running within two weeks. Whilst the tests are running, we also focus on deep qualitative research and run the two tracks side by side.

New insights from either testing or research feed into an overall insights roadmap, which tells us the top priorities for research and testing. We run this for one sprint (in our case, 90 days) and end with a retro with the client to review what worked and what didn’t. This lets us plan the next 90-day sprint, and we repeat the cycle from there.

Within our sprints, we always involve our clients (if they want to be involved) in the ideation session. There are so many ways to design a treatment for a hypothesis, and involving clients early gives them more buy-in and ownership of the treatment and its outcomes. When ideas differ, we suggest an A/B/C test in which both the client’s preference and ours are tested with live traffic.
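An A/B/C test simply splits traffic across three arms instead of two. The sketch below shows one common way to do that split: hashing a visitor ID so each visitor gets a stable arm and traffic divides roughly evenly. It assumes a hash-based bucketing scheme; the function name `assign_variant`, the arm labels, and the experiment key are hypothetical and are not VWO’s API or Experior’s actual setup.

```python
import hashlib
from collections import Counter

# Hypothetical arm labels: the control plus the two competing treatments.
ARMS = ("control", "client_treatment", "agency_treatment")


def assign_variant(user_id: str, experiment: str, arms=ARMS) -> str:
    """Deterministically map a visitor to one arm of an A/B/C test.

    Hashing "experiment:user_id" means a returning visitor always sees the
    same arm, while different experiments bucket visitors independently.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(arms)  # map the hash onto one of the arms
    return arms[bucket]


if __name__ == "__main__":
    # Simulate 30,000 hypothetical visitors; each arm should get roughly a third.
    counts = Counter(assign_variant(f"user-{i}", "homepage-hero") for i in range(30000))
    print(counts)
```

Because each arm receives only about a third of the traffic, an A/B/C test generally needs to run longer than a simple A/B test to collect the same number of visitors per arm.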

The more mature an organization is, the more it thinks in terms of risk mitigation and overall product strategy. Less mature organizations will fixate on immediate wins. This isn’t necessarily a bad thing but there’s obvious room for growth.

How VWO enhances CRO efficiency

VWO is my first point of contact for companies that are new to testing. The platform has helped introduce a lot of organizations to the power of experimentation.

Drive faster, smarter data-driven decisions with VWO Copilot, streamlining your testing process. The AI-powered engine generates tailored testing ideas, automates variation creation, provides in-depth heatmap and recording analysis, and more.

Test program delivering outstanding results

My most recent project was for a financial services company that relied heavily on its website to generate leads and sales. They had redesigned the website because they assumed it was outdated, following all the typical best practices: lots of white space, succinct copy, and beautiful imagery. After launch, they were getting twice as many leads, but these were far less qualified than those from the previous site. I was asked to investigate and found 30-40 insights into what had happened.

We reverted the website to its previous version, ran a few tests based on those insights, and the final result was a 45% increase in qualified leads compared to the previous site.

Statistical significance is an important guardrail, but I would never recommend treating it as a strict threshold that must be hit. The business context, including its sales cycles, traffic, and current conversion rate, all plays a part. I am on the side of speed of learning, so it is sometimes acceptable to relax the significance threshold (that is, accept a lower confidence level) to make sure you learn fast.
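To make that trade-off concrete: a lower confidence level means fewer visitors per variant, so a test can reach a conclusion sooner. The sketch below shows the effect. It assumes the standard normal-approximation sample-size formula for comparing two conversion rates with a two-sided test. The function name, the 3% baseline, and the 10% relative lift are illustrative assumptions, not figures from Ian’s projects.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline: float, relative_lift: float,
                         confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate visitors needed per variant for a two-sided two-proportion z-test.

    baseline      -- current conversion rate (e.g. 0.03 for 3%)
    relative_lift -- smallest relative improvement worth detecting (0.10 = +10%)
    confidence    -- 1 - alpha; lowering it accepts more false positives
    power         -- probability of detecting the lift if it is real
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    for conf in (0.95, 0.90):
        print(conf, visitors_per_variant(0.03, 0.10, confidence=conf))
```

With these hypothetical inputs, the formula gives roughly 53,000 visitors per variant at 95% confidence versus roughly 42,000 at 90%, about a fifth fewer. The price is a higher chance of a false positive, which is the risk being traded for speed of learning.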

Top experimentation mistakes to avoid

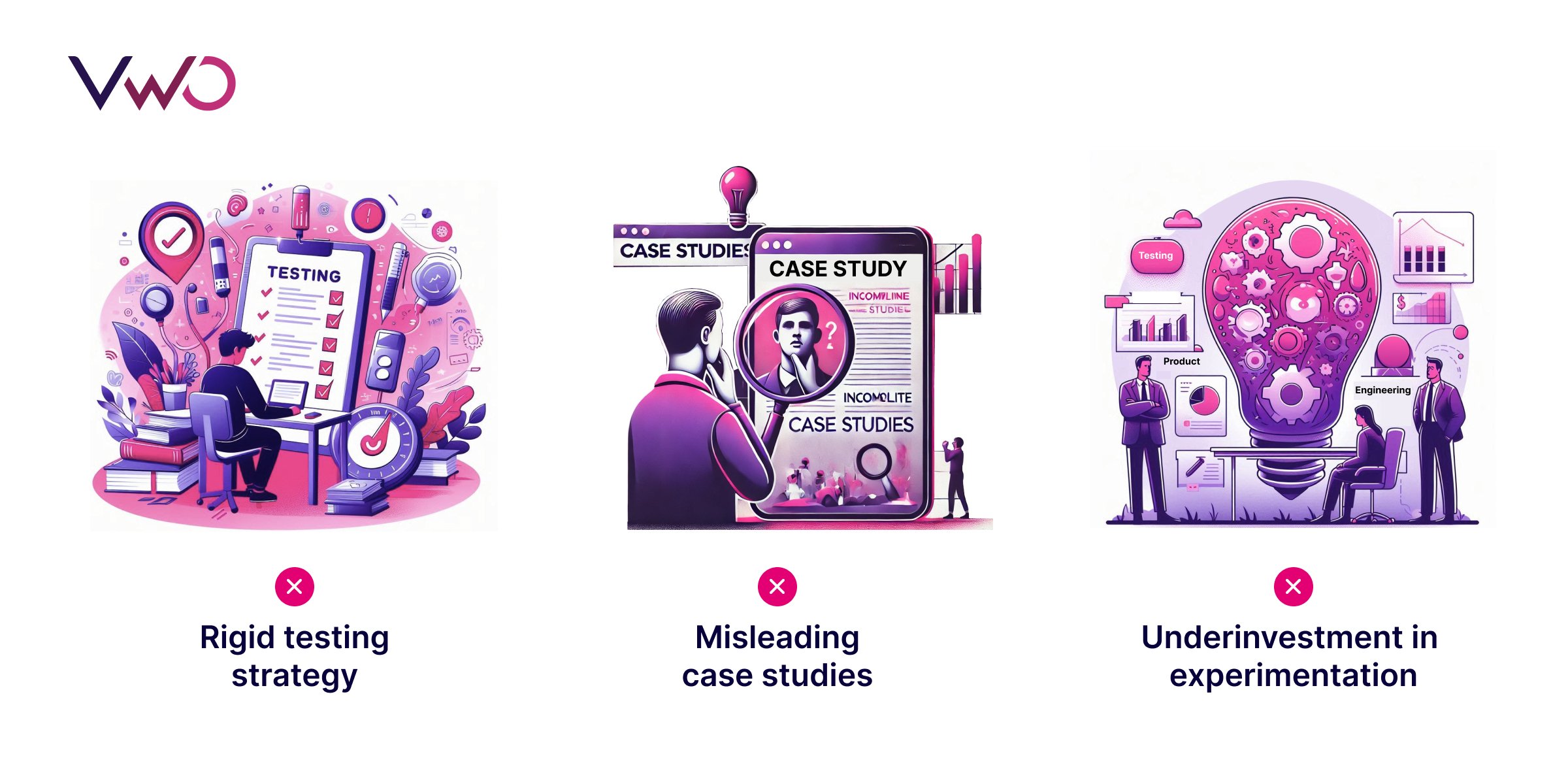

- Not having a testing strategy that allows for reprioritization when needed. I see a lot of testing programs taking a shotgun approach, with no bigger-picture learning.

- You see a lot of A/B test case studies online, particularly on LinkedIn, that showcase wins but never mention the research or insights behind them, and they always hide the stats. Companies then copy these tests hoping to see the same results, unaware that the hidden stats paint a different picture.

- Not committing resources to experimentation. Culturally, experimentation works best when it is driven top-down by executives. If the organization isn’t that mature yet, at least stop siloing it within marketing budgets. It is better placed under product or engineering teams, which can use an experimentation strategy to prioritize and validate their roadmap.

Conclusion

Did you enjoy this interview? Ian Fong shared valuable insights on a range of topics in the world of CRO. He covers even more in his full interview with us on CRO Wizards. Check it out below.

And if you’re looking to elevate your conversion optimization journey, VWO is the all-in-one platform you need to drive better results. Take a demo today and start optimizing smarter!