Why Guardrails Matter: Protecting Revenue, Trust, and Brand in Testing

Modern businesses move fast, and with that speed, the importance of guardrails in testing has never been higher.

Growth experiments today don’t just need to deliver results — they need to protect revenue, customer trust, and brand credibility while doing so.

This blog is for teams who want more than the technicalities of setting guardrails or a list of their generic benefits. It’s for those who want to see how guardrails play out in real use cases, across industries, markets, and circumstances, so they can apply them directly to their own experimentation practice.

We’ll take you beyond the ‘what’ of guardrails into the ‘how,’ with real use cases, risks, and lessons.

Domains of guardrails: revenue, experience, trust, and ethics

Early on, teams mostly treated guardrails as a financial safety check. Today, experimentation has matured to demand a broader lens. Guardrails now span multiple domains, each addressing different risks that can make or break long-term success.

Revenue guardrails: protecting profitability and scale

Revenue guardrails are critical checks that protect profitability and revenue quality beyond raw conversion. Common revenue guardrails businesses track include:

Gross margin

A spike in orders isn’t a win if costs rise faster. Track gross margin as (revenue − COGS) ÷ revenue to see whether each order stays profitable. If margin falls by 1–2 percentage points versus control, that “win” may actually be hurting unit economics.
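As a rough illustration, the margin check above can be sketched in a few lines of Python. The function names, the sample figures, and the 1-point default threshold are assumptions made for this example, not a prescribed implementation.

```python
def gross_margin(revenue: float, cogs: float) -> float:
    """Gross margin as a fraction of revenue: (revenue - COGS) / revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - cogs) / revenue


def margin_guardrail_breached(control: dict, variant: dict, max_drop_pp: float = 1.0) -> bool:
    """True when variant margin is at least max_drop_pp percentage points below control."""
    control_margin = gross_margin(control["revenue"], control["cogs"])
    variant_margin = gross_margin(variant["revenue"], variant["cogs"])
    drop_pp = (control_margin - variant_margin) * 100  # difference in percentage points
    return drop_pp >= max_drop_pp


# Illustrative numbers only: the variant sells more but at a thinner margin.
control = {"revenue": 100_000.0, "cogs": 60_000.0}  # 40.0% margin
variant = {"revenue": 120_000.0, "cogs": 75_000.0}  # 37.5% margin
print(margin_guardrail_breached(control, variant))  # True
```

Here the variant brings in more revenue, yet the guardrail fires because margin is 2.5 points lower than control.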

Refund requests and returns

Flash sales or steep discounts may spike order volume and make dashboards look great. But if customers feel misled by hidden conditions or disappointed with product quality, return rates climb and profits vanish. A return-rate guardrail shows whether today’s sales spike could become tomorrow’s margin loss.

Churn and cancellations

Increasing prices or making renewals stricter can lift revenue per customer for a while. But if loyal users feel pushed away, they cancel, and overall lifetime value drops. A churn guardrail highlights when short-term revenue gains are actually customer loss in disguise, protecting long-term business health.

Case in point: Oda, Norway’s leading online grocer, shifted to business guardrails for experiments, explicitly tracking revenue, profitability, and short-term retention alongside success metrics. They use a decision table to gate rollouts: if a variant hurts profitability, it won’t ship unless there’s a very strong positive effect on retention or revenue, in which case an accountable leader must approve. In a real test showing cart total in the header, revenue and profitability improved, so the feature rolled out—an example of “do no harm” validated by financial guardrails. (Source: Medium)
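A decision table like the one described can be written down as a small function. The sketch below is only one possible encoding: the `Effect` categories and outcome labels are hypothetical, and the branch for harm to revenue or retention is an assumption added for completeness, not something taken from Oda's published table.

```python
from enum import Enum


class Effect(Enum):
    NEGATIVE = "negative"
    NEUTRAL = "neutral"
    POSITIVE = "positive"
    STRONG_POSITIVE = "strong_positive"


def rollout_decision(profitability: Effect, revenue: Effect, retention: Effect) -> str:
    """Return 'ship', 'escalate', or 'do_not_ship' for a finished experiment."""
    if profitability is Effect.NEGATIVE:
        # Harm to profitability blocks the rollout unless revenue or retention
        # shows a very strong gain, and then an accountable leader decides.
        if Effect.STRONG_POSITIVE in (revenue, retention):
            return "escalate"
        return "do_not_ship"
    if Effect.NEGATIVE in (revenue, retention):
        # Assumption for this sketch: any other guardrail harm goes to a human.
        return "escalate"
    return "ship"


print(rollout_decision(Effect.POSITIVE, Effect.POSITIVE, Effect.NEUTRAL))         # ship
print(rollout_decision(Effect.NEGATIVE, Effect.NEUTRAL, Effect.STRONG_POSITIVE))  # escalate
print(rollout_decision(Effect.NEGATIVE, Effect.POSITIVE, Effect.NEUTRAL))         # do_not_ship
```

Writing the rules down this way forces the team to agree on them before the test runs, rather than negotiating after the results are in.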

Experience guardrails: preventing friction from driving users away

Conversions in one area may mask poor usability elsewhere. Guardrails ensure the user journey isn’t compromised.

Latency and performance metrics

Eye-catching visuals or interactive elements may improve engagement, but if they slow page load by even a second, users drop off before engaging. A performance guardrail ensures growth experiments don’t sacrifice speed for style.

Frustration clicks (“rage clicks”)

A new CTA design or navigation layout might drive more clicks, but rapid clicks may also mean users are stuck on broken links, unresponsive buttons, or misleading labels. A frustration guardrail surfaces this hidden friction before it undermines the experience.
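To make the idea concrete, here is a minimal sketch of how rage clicks might be flagged from raw click events. It assumes a working definition of three or more clicks on the same element within one second; both thresholds and the event shape are hypothetical, and analytics tools define rage clicks in their own ways.

```python
from collections import defaultdict


def sessions_with_rage_clicks(clicks, min_clicks=3, window_s=1.0):
    """Return the set of session IDs containing at least one rapid click burst.

    clicks: iterable of (session_id, element_id, timestamp_in_seconds) tuples.
    """
    by_target = defaultdict(list)
    for session_id, element_id, ts in clicks:
        by_target[(session_id, element_id)].append(ts)

    flagged = set()
    for (session_id, _element_id), stamps in by_target.items():
        stamps.sort()
        # Slide over each run of min_clicks consecutive clicks on one element.
        for i in range(len(stamps) - min_clicks + 1):
            if stamps[i + min_clicks - 1] - stamps[i] <= window_s:
                flagged.add(session_id)
                break
    return flagged


clicks = [
    ("s1", "buy", 10.0), ("s1", "buy", 10.3), ("s1", "buy", 10.6),  # rapid burst
    ("s2", "buy", 5.0), ("s2", "buy", 9.0), ("s2", "nav", 9.1),     # normal use
]
print(sessions_with_rage_clicks(clicks))  # {'s1'}
```

Comparing the share of flagged sessions between control and variant turns this into a guardrail metric.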

Abandonment in checkout or sign-up

Adding extra verification steps can improve security, but too much friction causes genuine customers to quit mid-flow. An abandonment guardrail balances protection with usability so conversions reflect real adoption.

Case in point: In 2021, Airbnb shared how they built an Experiment Guardrails system to ensure growth experiments don’t cause hidden harm. For instance, a test to remove “house rules” at checkout boosted bookings (the success metric) but lowered guest review scores. Since review ratings were tracked as a guardrail metric, the experiment was flagged and escalated instead of being rolled out blindly. Airbnb’s framework uses multiple guardrails, such as impact thresholds, statistical checks, and exposure limits, to make sure short-term revenue gains don’t erode long-term trust and experience. (Source: Medium)

Trust guardrails: ensuring fairness, transparency, and compliance

Even profitable experiments can backfire if they feel manipulative or non-compliant. Trust guardrails keep brands credible.

Pricing changes

A discount structure or bundling experiment may drive more immediate purchases, but if customers later feel locked in or overcharged, the result is refund requests and eroded trust. A pricing guardrail, such as monitoring refund rates or negative feedback, ensures that higher order volume reflects sustainable growth.

Consent and privacy signals

Nudging “accept all” can lift consent rates in the short term, but customers who feel pressured are more likely to opt out later, churn, or even escalate complaints. A privacy guardrail monitors opt-out rates and retention to ensure compliance and long-term trust are preserved.

Accessibility compliance

A polished new design may impress most users but unintentionally block those relying on screen readers or keyboard navigation, exposing both usability gaps and legal risks. An accessibility guardrail keeps inclusivity front and center so progress benefits all customers.

Case in point: In 2022, Zara began charging online customers in the UK a £1.95 return fee for mail returns, a policy later extended to other European markets such as Spain. While this move helped curb logistics costs, it triggered customer backlash over fairness and convenience. (Source: Reuters)

A pricing guardrail, such as monitoring sentiment and repeat purchase behavior during such tests, would spot trust erosion early, allowing teams to adjust before long-term loyalty is compromised.

Ethical guardrails: safeguarding fairness and safety

Ethical guardrails protect fairness and safety, ensuring brand identity and trust remain strong over time.

Fair lending and algorithmic transparency

In financial experiments, a credit model may lift approval rates or reduce defaults, but without fairness guardrails, it could systematically disadvantage certain groups. Guardrails here enforce bias testing across demographics before rollout.

High-risk AI usage

In healthcare pilots, a symptom checker feature can streamline patient intake by reducing wait times and guiding next steps. But it risks misclassifying conditions or overlooking red flags. Guardrails like urgent action tracking (checking if users follow “seek care” prompts), drop-off monitoring (spotting exits after risky outputs), and safety filters (blocking unsafe guidance) help protect patients while improving efficiency.

Health information accuracy

On health portals or wellness apps, A/B tests that promote trending articles or videos may lift clicks and watch time. But if those variants surface unverified remedies or misleading medical claims, the “growth” is harmful. Accuracy guardrails, such as requiring content from authoritative sources or monitoring fact-check flags, ensure experiments don’t optimize engagement at the expense of public well-being.

Case in point: In 2021, YouTube’s recommendation system came under scrutiny when the platform acknowledged it had surfaced misleading COVID-19 vaccine content. YouTube responded by expanding its medical misinformation policy and prioritizing authoritative health sources in recommendations. (Source: Reuters)

If accuracy guardrails had been part of the recommendation tests from the beginning, such as monitoring the prevalence of fact-checked or flagged content, misinformation could have been caught before reaching scale.

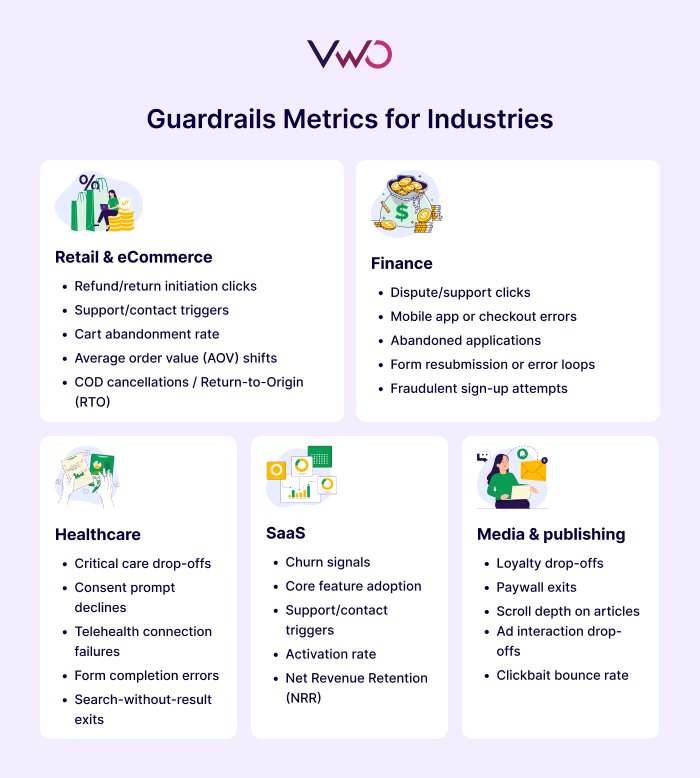

Industry-specific guardrails: Protecting growth across sectors

Guardrails must adapt to each industry’s realities. By tailoring guardrails to sector-specific risks, businesses ensure growth that lasts. Here’s how this plays out across industries.

Retail & eCommerce

Retailers and eCommerce brands often optimize for conversions, revenue per visitor, and average order value. But high checkout numbers don’t guarantee lasting profit. Here are the guardrails that surface these risks early.

Refund/return initiation clicks

Spikes here mean the variant may be creating post-purchase regret. Set an alert if this rate rises meaningfully vs. control for several days.
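A simple version of the "several days in a row" alert can be sketched as follows. The 10% relative-lift threshold and the three-day streak are assumptions chosen for illustration. This is a naive threshold rule rather than a statistical test, so it works best as an early-warning signal.

```python
def sustained_breach(control_rates, variant_rates, min_relative_lift=0.10, min_days=3):
    """True if the variant rate exceeds control by min_relative_lift
    on at least min_days consecutive days."""
    streak = 0
    for control_rate, variant_rate in zip(control_rates, variant_rates):
        if control_rate > 0 and (variant_rate - control_rate) / control_rate >= min_relative_lift:
            streak += 1
            if streak >= min_days:
                return True
        else:
            streak = 0  # a normal day resets the count
    return False


# Daily refund-initiation rates (illustrative numbers).
control_daily = [0.020, 0.021, 0.019, 0.020, 0.020]
variant_daily = [0.021, 0.025, 0.024, 0.026, 0.020]
print(sustained_breach(control_daily, variant_daily))  # True
```

Requiring a streak keeps a single noisy day from triggering the alert.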

Support/contact triggers

A jump in “Contact us,” chat, or help-center searches usually means the new version confuses people.

Cart abandonment rate

If more shoppers leave before paying, something in the offer or new flow (fees, forms, surprise steps) is turning them off. That’s a signal to rethink the change.

Average order value (AOV) shifts

Discounts or upsell changes may increase conversion but shrink basket size. Tracking AOV ensures short-term wins don’t erode revenue per customer.

COD cancellations / Return-to-Origin (RTO)

In markets like India, many cash-on-delivery orders never convert into cash because customers cancel or are unavailable at delivery. Monitoring RTO rates keeps “growth” grounded in actual fulfilled revenue.

Sunita Verma, an experimentation specialist with experience at H&M, FairPrice Group, and Zalora, spoke with us in a CRO Perspectives interview and shared an interesting perspective on setting guardrails in eCommerce.

To pick the right guardrail metrics, she suggests playing the devil’s advocate. Say the team tests a ‘Similar Product’ recommender on the product page to give users more options and increase the chance they find something they like.

The key is to ask how this change might backfire. A useful guardrail here could be the cart-out rate or the time between adding to cart and checking out.

This helps reveal if giving users more choice upfront triggers the paradox of choice—where too many options delay decisions or even cause abandonment.

SaaS

For SaaS companies, growth depends on recurring revenue and consistent usage. Experiments may lift trial-to-paid conversions or engagement, but without retention, those gains don’t last. Guardrails ensure experiments strengthen long-term relationships instead of chasing short-term spikes.

Churn signals

If more new users cancel early or click “downgrade” during onboarding, your sign-ups may not stick. That’s a warning that short-term wins from your latest test won’t last.

Core feature adoption

Once the variant is live, if engagement with key workflows (dashboard, reports, collaboration) drops compared with control, the change is driving clicks, not value.

Support/contact triggers

Post-launch, a spike in “Help,” chat, or FAQ searches relative to control signals confusion introduced by the variant.

Activation rate

Experiments may boost trial sign-ups, but if fewer users reach the “aha moment” (like creating their first project), growth won’t translate into long-term adoption.

Net Revenue Retention (NRR)

Higher trial-to-paid conversions mean little if downgrades or churn outweigh expansions. NRR as a guardrail ensures growth compounds instead of leaking.
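For reference, NRR is commonly computed for a cohort as starting recurring revenue plus expansion, minus contraction and churn, divided by starting recurring revenue. The sketch below uses that common definition with made-up figures. The function and parameter names are illustrative.

```python
def net_revenue_retention(starting_mrr, expansion, contraction, churned):
    """NRR for one cohort over one period, as a fraction (1.0 means 100%)."""
    if starting_mrr <= 0:
        raise ValueError("starting_mrr must be positive")
    return (starting_mrr + expansion - contraction - churned) / starting_mrr


# Illustrative cohorts: the variant converted more trials but retains less revenue.
control_nrr = net_revenue_retention(50_000, expansion=4_000, contraction=1_000, churned=2_000)
variant_nrr = net_revenue_retention(60_000, expansion=3_000, contraction=2_500, churned=6_500)
print(f"control: {control_nrr:.0%}, variant: {variant_nrr:.0%}")  # control: 102%, variant: 90%
```

In this example the variant starts from a larger paid base, but its NRR below 100% shows that revenue is leaking faster than it expands.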

Finance

Financial institutions must balance customer growth with strict risk and compliance obligations. Faster loan approvals or easier sign-ups look great as primary metrics, but they also open the door to fraud, unfair practices, or regulatory breaches if left unchecked. Guardrails here don’t just protect revenue; they protect licenses to operate.

Dispute/support clicks

Spikes in “Report a problem” or “Contact support” during payments expose early cracks in customer trust.

Mobile app or checkout errors

Errors or timeouts during transaction steps directly correlate with lost revenue and abandoned checkouts. Tracking these ensures smooth completion of high-value flows.

Abandoned applications

If users drop off before finishing a loan or account-opening form, the variant may be adding friction. Monitoring completion rates keeps experiments aligned with conversion and compliance goals.

Form resubmission or error loops

Repeated attempts to submit ID, income, or payment details show the new design may be confusing or broken. This guardrail highlights friction before it drives churn.

Fraudulent sign-up attempts

Easier sign-up flows may also attract fake accounts. Tracking flagged or duplicate accounts as events ensures conversion gains don’t invite fraud.

Healthcare

Healthcare experiments are high-stakes. Metrics like portal logins, appointment bookings, or telehealth sessions show adoption and engagement. But these successes mean little if they compromise accuracy, safety, or privacy.

Critical care drop-offs

Abandonment in urgent flows (e.g., booking, prescription refills, telehealth join) flags risks to safety that conversion metrics alone won’t catch.

Consent prompt declines

Patients who decline or abandon the flow when asked to share health data signal trust or compliance risks.

Telehealth connection failures

Patients repeatedly retrying or abandoning at “Join session” buttons expose reliability gaps that can directly block access to care.

Form completion errors

High error rates in medical intake or insurance forms indicate friction that can delay care and frustrate patients.

Search-without-result exits

If users search for doctors, clinics, or services but exit without selecting an option, the variant may be undermining discoverability of care.

Track user journeys with VWO Funnels to find the exact step where most people leave, whether it’s a button click, form page, or checkout stage. Then watch those moments with Session Recordings to see what users actually do on screen, like repeated clicks, long pauses, or sudden exits. This way, you not only know where people drop off but also what causes it.

Media & publishing

In the media, the tendency is to optimize for engagement, clicks, time on site, and subscriptions. But left unchecked, experiments can push low-quality or misleading content, trigger user frustration with ads, or erode trust in journalism.

Loyalty drop-offs

Declines in repeat visits, newsletter sign-ups, or “add to favorites” actions reveal that traffic growth isn’t translating into audience trust.

Paywall exits

Bounces at subscription or paywall prompts suggest that experiments are scaring readers off instead of converting them.

Scroll depth on articles

Low scroll activity shows readers are abandoning midway, signaling that layout or content experiments may hurt comprehension.

Ad interaction drop-offs

New ad placements may increase impressions, but if users bounce faster or scroll less, it signals monetization is undermining engagement.

Clickbait bounce rate

Headlines or promo ads that boost clicks but drive users to exit quickly show engagement isn’t meaningful. Tracking bounce rates from content teasers ensures growth isn’t built on misleading hooks.

Guardrails in the age of AI-driven testing

AI can now automate experimentation at scale, generating test ideas, designing variants, and rolling them out in minutes. This speed creates opportunity but also magnifies risk. A single flawed change can now affect thousands of users almost instantly.

Automation at scale

AI can generate far more variants without relying on the creative team’s design bandwidth. But without proper checks, flawed changes can slip through unnoticed. An AI-generated landing page might drop a required form field, hurting lead quality even as sign-ups rise. A checkout flow might overlook accessibility basics, unintentionally excluding users. Guardrails, like monitoring lead qualification or running automated accessibility scans, ensure that velocity never overrides quality.

Strategic alignment

AI optimizes for the metrics it’s trained on — often clicks or conversions. Left unchecked, this tilts experiments toward vanity lifts at the expense of user experience and trust. AI-generated copy might overuse urgency, boosting sign-ups but spiking cancellations later. A recommendation model might maximize click-throughs by pushing discounted add-ons, only to drive up refund requests. Guardrails tied to churn, refund rates, or complaint volume keep growth sustainable.

Adaptive guardrails

The future lies in guardrails that learn. Instead of fixed thresholds, they’ll adapt to context — watching churn and support tickets in SaaS, refund rates and fraud attempts in retail, or consent opt-outs in regulated markets. Over time, machine learning will surface which signals matter most — like spotting that one-click checkout boosts orders but correlates with higher chargebacks.

Guardrails have always mattered in experimentation because they keep wins honest and sustainable.

As AI enters the workflow, velocity, autonomy, and opacity rise, making mistakes more likely and harder to spot. In this context, guardrails are the right counterweight, providing always-on checks that protect unit economics, user trust, and compliance. The result is a natural evolution where CRO operates as risk management, so teams move fast without gambling with revenue or reputation.

Guardrails in practice: How VWO enhances experiment reporting

The challenge is not just knowing that guardrails matter, but making them practical to monitor and act on. VWO solves this with built-in Guardrails. See how:

Integrated visibility

VWO treats guardrails as secondary metrics automatically surfaced alongside primary success metrics in reports, ensuring teams spot potential harms just as easily as wins.

Real-time alerts & automatic safeguards

Guardrail metrics in VWO are continuously monitored, and breaches trigger immediate notifications. For high-stakes metrics, VWO can even disable the variant automatically, preventing unseen issues from scaling further.

Contextual, statistically sound insights

VWO’s enhanced Stats Engine supports tuning of statistical power, MDR, and false positive rates, so teams can track metrics with more nuance and understand both what changed and why. It also provides health checks, such as sample ratio mismatch and outlier warnings, to ensure reliability.
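For readers unfamiliar with sample ratio mismatch (SRM), the check asks whether traffic actually split the way the experiment intended. A generic way to test this for two groups is a chi-square goodness-of-fit test, sketched below with the standard library only. This is a textbook version, not a description of how VWO implements its health check, and the 0.001 alert threshold mentioned in the comments is a common convention assumed here.

```python
import math


def srm_p_value(control_n: int, variant_n: int, expected_control_share: float = 0.5) -> float:
    """P-value of a chi-square goodness-of-fit test on a two-way traffic split."""
    total = control_n + variant_n
    expected_control = total * expected_control_share
    expected_variant = total - expected_control
    chi2 = ((control_n - expected_control) ** 2 / expected_control
            + (variant_n - expected_variant) ** 2 / expected_variant)
    # With one degree of freedom, the chi-square tail probability is erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


balanced = srm_p_value(10_000, 10_000)  # a perfectly even split
skewed = srm_p_value(10_000, 10_500)    # 500 extra users in the variant
print(balanced)        # 1.0
print(skewed < 0.001)  # True: small enough to distrust the experiment's results
```

A very small p-value means the observed split is unlikely under the intended allocation, so the assignment or tracking should be investigated before reading any metric.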

AI-ready safety

As AI accelerates experimentation velocity, guardrails give teams the flexibility to define financial, experiential, or operational checks that run alongside success metrics. This ensures AI-generated changes don’t slip through unchecked, keeping growth fast but also responsible.

With VWO, guardrails aren’t an afterthought. They’re built into the experimentation workflow, giving teams:

✅ Faster decision-making (no waiting for post-test surprises)

✅ Fewer failed launches (risks flagged before they scale)

✅ Less hidden revenue leakage (profits protected while you grow)

If you’re ready to take the next step, book a personalized demo and see how VWO can keep your growth both fast and safe.