How to Build a Culture of Experimentation

Culture is critical to business success. It is one reason companies like Apple and Google are thriving, while companies like Blockbuster and JCPenney paid a heavy price for losing their focus on culture.

Every organization can be gauged on two primary factors: the industry it serves and the culture it follows. The former defines the formal setting in which an organization operates (the products or services it sells, the revenue it makes, and so on); the latter defines its fundamental thinking, the foundation on which the organization is built.


So, what type of cultural values should an organization have or invest in to be successful? Let’s find out!

What Type of Organizational Culture to Adopt?

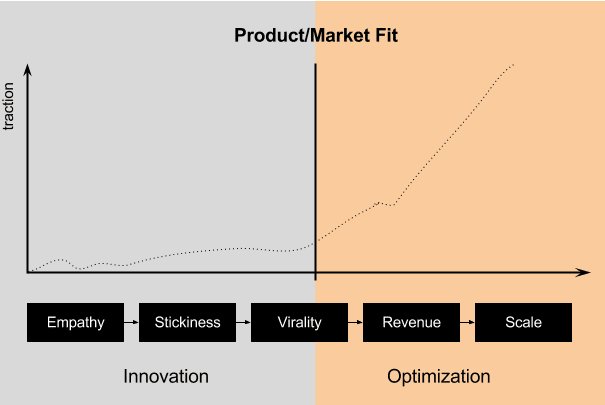

Company owners and decision-makers should invest heavily in a culture of experimentation and innovation throughout a business’s lifecycle. Experimentation and innovation, as they say, are the two hallmarks of entrepreneurship. In a company’s early stages, creative ideas and innovation alone can deliver promising results. Once you pass that stage and find product-market fit, the need for optimization arises. As a product moves toward maturity, heavy experimentation with attention-hungry new ideas can threaten a business’s sustainability and steady revenue flow.

Why Adopt a Culture of Experimentation?

Experimentation has long served as a critical tool for challenging status quo business models and driving radical change. For organizations, it means being able to take bold steps and decisions to improve the visitor experience while minimizing business risk. It also gives an organization endless opportunities to learn about its target audience through testing, analyze the outcomes, and apply the learnings across both organizational and visitor touchpoints.

But surviving in a highly dynamic business environment and maintaining a competitive edge in testing and experimentation demands a mature optimization model. Optimization, by definition, is an act, process, or methodology of making something (such as a design, system, or decision) as perfect, functional, or effective as possible.

For a mature, well-run organization, experimentation and optimization must be applied to each of its business processes to gain the most benefit. Whether it’s your team, your marketing and sales funnel, or your website, focusing your energy on these two factors is paramount if you want more bang for your buck.

For instance, by optimizing your website through experimentation, you can get more conversions from the same number of visitors. Likewise, by optimizing your team and instilling a culture of experimentation, you can significantly grow its productivity and output and, in turn, achieve your business goals.
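To make “more conversions from the same number of visitors” concrete, here is a minimal sketch of the arithmetic. The traffic and conversion-rate figures are hypothetical placeholders, not benchmarks.

```python
def conversions(visitors: int, conversion_rate: float) -> float:
    """Expected conversions for a given traffic volume and conversion rate."""
    return visitors * conversion_rate


def relative_uplift(baseline_rate: float, new_rate: float) -> float:
    """Relative improvement of new_rate over baseline_rate (0.25 means +25%)."""
    return (new_rate - baseline_rate) / baseline_rate


# Hypothetical numbers: same traffic, before and after optimization.
visitors = 10_000
baseline_rate = 0.020  # 2.0% of visitors convert today
improved_rate = 0.025  # 2.5% convert after a winning change

print(round(conversions(visitors, baseline_rate)))             # 200
print(round(conversions(visitors, improved_rate)))             # 250
print(round(relative_uplift(baseline_rate, improved_rate), 2)) # 0.25
```

Traffic stays constant; only the rate changes, which is why conversion optimization is often framed as getting more out of visitors you already pay to acquire.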

So, a culture of experimentation and optimization is geared not just toward incremental, consistent, low-risk improvements coordinated across a company’s platforms, but also toward meeting executive-level targets.

Now that we’ve established the importance of building a culture of experimentation and optimization, let’s look at how to incorporate the two within your organization.

How to Incorporate a Culture of Experimentation

Organizations of every size embrace the idea of experimentation today. Here’s a step-by-step guide to building a culture of experimentation.

Be Data-Driven: Before you begin optimizing your business processes through experimentation, it’s imperative to take stock of where you stand. Assess your current position and set benchmarks for improvement accordingly.

A good experimentation and optimization program is rooted in in-depth data and research on what you’re optimizing, how to run experiments, and what end goals you want to achieve. When your employees start challenging subjective opinions and validating their decisions with data and insight, your organization becomes more efficient.

Get Top Management Buy-In: Like a waterfall, company culture flows from the top down. To instill a healthy culture of experimentation and optimization, it’s imperative that top management buys in.

Once they’re on the same page, they’ll recognize the importance of experimentation and the need for optimization, and they’ll be far more likely to allocate sufficient budget for it. That, in turn, makes it easier for teams, especially marketers, to execute well-thought-out optimization strategies.

The best way to sell experimentation and optimization to top management is by highlighting the key benefits. Showing how the returns can outweigh the investment and how experimentation can improve the conversion rate will strengthen your pitch. Here are some key ways to influence top management:

- Highlight potential improvements.

- Present a competitive analysis.

- Showcase the ROI of experimentation.

If you’re looking to optimize your website in particular, read this post on getting top management buy-in for CRO.

Get the Team Involved: Even if top management allocates budget and resources for experimentation and optimization programs, success will only come if your employees understand the nitty-gritty and embrace experimentation.

Get everyone involved. Walk them through the process and educate them well enough that they understand the idea and contribute to it.

Invest in the Right Tools: To create a culture of experimentation, having the right tools by your side is paramount. They’ll not only help you channel your efforts but also simplify the entire process.

The type of tools you should invest in depends on what you’re optimizing. For instance, if you want to optimize your organization’s hiring process, you may want to invest in a good recruiting tool. If you want to optimize your website for more conversions, you should invest in a good, full-featured A/B testing tool.

Embrace the Power of “I Don’t Know”: With most marketing efforts, we expect a linear model. We expect that for X effort or money we put into something, we should receive Y as the output (where Y > X).

Experimentation, as a process, is a bit different. It may be more valuable to think of experimentation as building a portfolio of investments, as opposed to a machine with a predictable output (like how you’d view SEO or PPC).

By a widely cited estimate, only about one in eight A/B tests produces a significant win. You’re not going to be right every time. Your variations (optimized ideas) will not always outperform the control (the original). But don’t let a string of failed experiments stop you. Draw learnings and start again. That’s what a culture of experimentation is all about.

Make experimentation a habit rather than a side hobby!
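Because a variation won’t always beat the control, teams need a consistent way to tell a real difference from noise. Below is a minimal sketch of one standard approach, a two-sided two-proportion z-test on conversion counts. It is a generic textbook method, not necessarily what any particular A/B testing tool uses, and the visitor and conversion counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare variation B against control A.

    Returns (z, two-sided p-value) under a pooled-variance z-test.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both groups converted at 0% or 100%: no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 10,000 visitors per arm.
z, p = two_proportion_z_test(200, 10_000, 230, 10_000)
print(f"z={z:.2f}, p={p:.3f}")  # a 15% lift that is not yet significant
z, p = two_proportion_z_test(200, 10_000, 300, 10_000)
print(f"z={z:.2f}, p={p:.6f}")  # a clear win
```

The first case shows why “failed” tests are common: an apparent 15% lift on this traffic is still well within the range of chance, so the honest answer is “I don’t know yet.”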

Make It a Game: Humans like competition. Competition and other elements of gamification can increase engagement and spark genuine interest in experimentation.

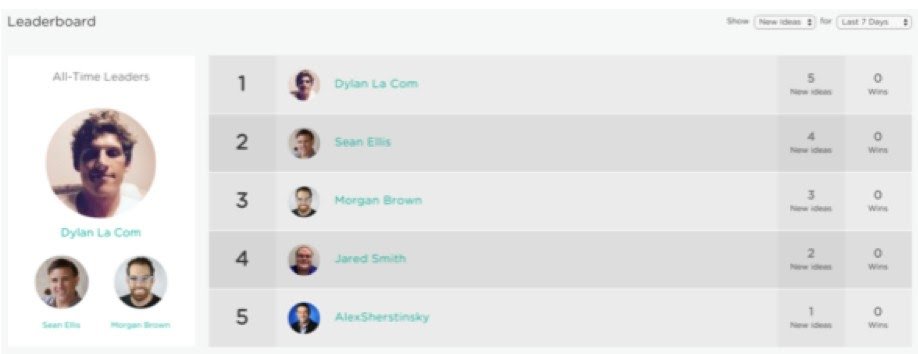

So, how can you gamify your process of experimentation? Some tools, such as GrowthHackers’ NorthStar, embed this competition right into the product with features like a leaderboard:

You can create leaderboards for ideas submitted, experiments run, or even each person’s win rate. However, as with any choice of metrics, be careful of unintended incentives.

For example, if you create a leaderboard for win rate, people may be discouraged from trying out crazy, creative ideas. It’s not a certainty, but keep an eye on your operational metrics and the behaviors they encourage.
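One way to act on that caution is to rank the board by experiments run and show win rate only as supporting context. The sketch below assumes a hypothetical, minimal record of each experiment (owner and whether it won); a real program would pull this from its testing tool or idea backlog.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Experiment:
    # Hypothetical minimal record; real tools track much more.
    owner: str
    won: bool


def leaderboard(experiments):
    """Return (owner, experiments_run, win_rate) rows, ranked by throughput."""
    stats = defaultdict(lambda: {"run": 0, "wins": 0})
    for exp in experiments:
        stats[exp.owner]["run"] += 1
        stats[exp.owner]["wins"] += int(exp.won)
    rows = [(owner, s["run"], s["wins"] / s["run"]) for owner, s in stats.items()]
    # Rank by experiments run (descending), then by name, so people who take
    # bold swings that lose are not pushed down the board.
    rows.sort(key=lambda row: (-row[1], row[0]))
    return rows


history = [
    Experiment("ana", True),
    Experiment("ana", False),
    Experiment("ana", False),
    Experiment("ben", True),
]
for owner, run, win_rate in leaderboard(history):
    print(f"{owner}: {run} run, {win_rate:.0%} won")
```

Here “ana” tops the board with three experiments despite a lower win rate than “ben,” which rewards throughput and learning rather than playing it safe.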


Adopt the Vernacular: Sometimes, a culture can be shifted by the subtle use of language. How does your company explain strategic decision-making? How do you talk about ideas? How do you propose new tactics? What words do you use?

If you’re like many other companies, you talk about what is “right” or “wrong,” what you’ve done in the past, or what you think will work. All of this, of course, nourishes the hungry, hungry HiPPO (the highest paid person’s opinion), who loves leaning on expert opinion.

What if, instead, you talked in terms of upside, risk mitigation, experiments, and cost versus opportunity?

Your team is likely to open up to such conversations and show more interest in experimentation. You still have to stay grounded in reality (don’t throw out wild test ideas and hope everyone jumps in), but adopt framing that works in your favor. Say something like, “What if we tried this? It’s something we could test with an A/B test,” rather than making blunt assertions.

For more insights on how to build a culture of continuous experimentation, dive into our latest episode with Kevin Anderson on the VWO Podcast.

Evangelize Your Wins: It’s important to stop and take the time to smell the roses. When you win, celebrate! When you suffer a string of failures, learn! Whatever the outcome, make sure others know about it.

It’s through this process of evangelization that you both cement the impact of your results in others’ minds and recruit others to become part of the culture of experimentation.

How do you evangelize your wins? Some smart ways are as follows:

- Have a company Wiki? Write about your experiments there!

- Send a weekly email, including a roundup of the experiments.

- Use social media to share your wins and learnings.

- Schedule a weekly experiment readout that anyone can attend.

- If possible, write external case studies on your blog. This isn’t always possible but can be a great way to recruit interesting candidates to your program.

Define Your Experiment Workflow/Protocol: If you want everyone to get involved with experimentation and optimization, make sure that everyone understands the rules. How does someone set up a test? Do they need to work with a centralized specialized team, or can they just run it themselves? Do they need to pull development resources? If so, from where?

These common questions can cause hesitation, especially among new employees, and that hesitation can slow experimentation throughput. That’s why it’s important to have someone, or a dedicated team, own the experimentation and optimization process and smooth things out.

If you don’t have a person or team in charge of the program, you can still prepare a detailed document or demo videos that walk through the entire process and remove friction. You can also create an “experimentation checklist” or add an FAQ section on your site that answers these common questions.

Embed Subtle Triggers in Your Organization: One of the most powerful forces in any organization is inertia. It’s far harder to get people to use a new system or program than to incorporate new elements into an existing process.

So, what systems can you use to inject triggers that inspire experimentation and optimization?

For one, if you use internal tools like Slack, Trello, or GrowthHackers NorthStar to share information about the experiments being run or the optimization work under way, set up notifications that appear whenever someone creates a test idea or launches a test.

Simply seeing these messages can nudge others to contribute. It also keeps the program visible across the organization.

Any triggers you can embed in your current ecosystem (ideally automated ones) can nudge people toward contributing more test ideas and running more experiments.
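As an example of an automated trigger, here is a minimal sketch that posts a message to a Slack channel through an incoming webhook, which accepts a JSON body with a `text` field. The webhook URL is a placeholder, and the idea that your testing tool or idea backlog calls `notify()` when a test is created or launched is an assumption about how you would wire it up.

```python
import json
import urllib.request

# Placeholder: replace with the incoming-webhook URL Slack generates for your channel.
WEBHOOK_URL = "https://hooks.slack.com/services/XXX/YYY/ZZZ"


def build_message(event: str, experiment: str, owner: str) -> dict:
    """Build a Slack message payload announcing an experiment event."""
    # Slack renders *text* as bold in message text.
    return {"text": f"{owner} {event} experiment *{experiment}*"}


def notify(payload: dict, url: str = WEBHOOK_URL) -> int:
    """POST the payload as JSON to the webhook and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


# Hypothetical hook point: call this when a test idea is created or launched.
message = build_message("launched", "Pricing page CTA", "Ana")
# notify(message)  # uncomment once WEBHOOK_URL points at a real webhook
```

Keeping message construction separate from sending makes the trigger easy to reuse for other events (idea submitted, test concluded) and easy to check without touching the network.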

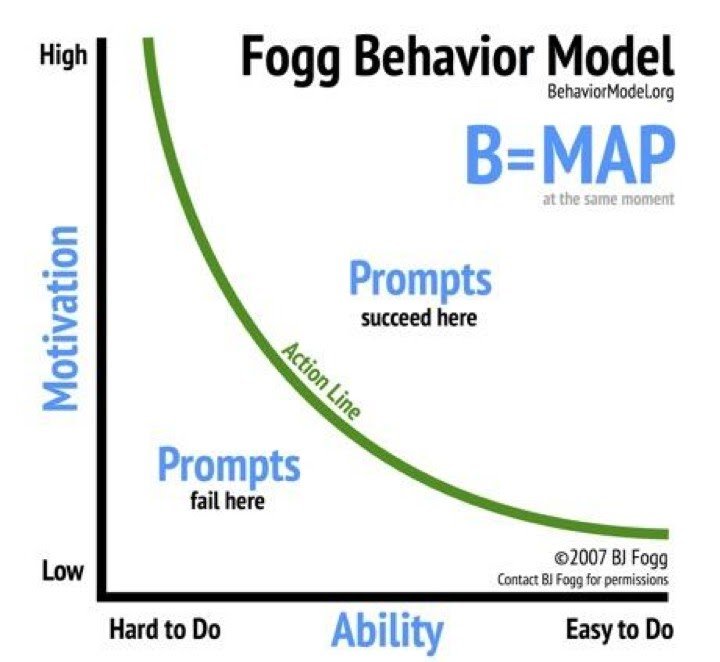

Remove Roadblocks: According to the Fogg Behavior Model, three components factor into someone taking action:

- Motivation

- Ability

- Prompts/Triggers

Ability, or the ease with which someone can accomplish something, is a lever we tend to forget about. Sure, you can wow stakeholders with potential uplifts and revenue projections. You can embed triggers in your organization through Slack notifications and weekly meetings to keep the idea fresh. But what about making it easier for everyone who wants to run a test?

That’s the approach Booking.com has taken. Some of their tips include:

- Establish safeguards.

- Make sure the data is trustworthy.

- Keep a knowledge base of test results.
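One common safeguard for trustworthy data is a sample ratio mismatch (SRM) check: if traffic was meant to split 50/50 but the observed counts drift too far apart, something in assignment or tracking is likely broken and the results shouldn’t be trusted. The sketch below is a generic chi-square version of that check, not Booking.com’s implementation; the visitor counts and the 0.001 alert threshold are assumptions (the threshold is a commonly used, conservative choice).

```python
import math


def srm_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for a two-arm traffic split."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # With one degree of freedom, the chi-square tail equals erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


SRM_THRESHOLD = 0.001  # assumed alert threshold


for a, b in [(10_050, 9_950), (10_000, 10_600)]:
    p = srm_p_value(a, b)
    status = "possible SRM, investigate before reading results" if p < SRM_THRESHOLD else "split looks fine"
    print(f"{a} vs {b}: p={p:.5f} -> {status}")
```

Running a check like this automatically on every test is one way to keep newcomers from drawing conclusions from a broken experiment.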

Watch the webinar to learn how to overcome bottlenecks in creating an experimentation culture.

Conclusion

To summarize, do everything you can to onboard new experimenters and reduce their chances of breaking experiments. Of course, everyone goes through a beginner phase with A/B testing and needs plenty of practice to perfect the art of experimentation. But don’t let newcomers take a back seat. The trick is not just to make experimentation less intimidating but also to make it less likely that newcomers will seriously break the site.

If you can do that, you’ll soon have an excited crowd eagerly waiting to run their own experiments and become an active part of your culture of experimentation and optimization.