We are back with the second edition of our ‘CRO Perspectives: Conversations with Industry Experts’ VWO Blog series. Through these interviews, we aim to showcase diverse perspectives, learnings, and experiences of CRO experts, fostering growth and learning within the CRO community as a whole.

Leader: Haley Carpenter

Role: Founder at Chirpy

Location: Austin, Texas, United States

Speaks about: Experimentation • User Research • Conversion Optimization • Project Management • Data Analysis

Why should you read this interview?

Haley Carpenter has collaborated with leading organizations in the industry, building her expertise as a strategist and consultant in CRO. With a wealth of experience across various roles, she founded her agency, Chirpy. Specializing in CRO, consulting, execution, and training, Haley focuses on user research and testing. Driven by tangible outcomes, she operates with maximum efficiency and effectiveness. She says that her work goes beyond “Conversion Rate Optimization” or “CRO”; it elevates entire business decision strategies, empowering teams to become confident decision-makers.

Building A/B testing skills and key lessons learned over time

This is a long-winded answer, but I promise it’ll come together. There are some critical components that have contributed to how I work now:

#1: Proper learning and experience

They are critical to success. Improper knowledge is frequently what leads to poor results. There’s so much misinformation in this industry that it’s extremely important to choose your teachers and mentors wisely. With that said, I have very intentionally curated my professional experiences to learn from some of the best. My approaches have been influenced by each experience I’ve had along the way.

#2: Time

Early in my career, I would always get so annoyed that I was checking all of the boxes of what a senior person did but not getting promoted faster (in retrospect, though, I did get promoted quickly). Simply checking boxes is not enough. Going through the ranks and grinding to try to be the best has been critical to where I’m at now.

#3: Broad exposure

I have intentionally worked at two small to mid-sized agencies and one huge SaaS company (where I still operated in an agency-type fashion). I maintained a broad list of clients in every role to ensure I’d continually have broad exposure to all kinds of industries, people, websites, etc. I never wanted to go in-house since that typically commits you to one company only. CRO is niche enough in my opinion. I’ve never thought it made sense to niche down more like being an eComm or SaaS specialist, for example.

#4: Confidence

All of the above components have contributed to my confidence. It seems like confidence in business is half the battle, especially when working with clients. I’ve worked hard to have true confidence that I can back up with my performance.

Now, based on all of that setup, my approach has shifted from just accepting and using what I was taught to being able to build upon that, develop my own opinions, and improve upon it. CRO has a lot of gray areas and there’s no rule book like in the legal field, for example. There’s a lot more room for opinions and different perspectives. Now, I’ve been able to see and understand the full spectrum of all CRO topics so that I can make informed decisions and recommendations to my clients confidently.

If anyone wants a more tactical list of some of my important learnings, here are a few:

- Running tests for full significance (superiority testing) isn’t the only type of testing. Run tests for risk mitigation only, too (non-inferiority testing).

- Clients often say they know what a lot of CRO stuff is – take that with a grain of salt though no matter their role.

- Aim for impact more than learning precision a majority of the time. By that, I mean lean toward testing multiple changes at once in a test rather than one change at a time. Related, getting big lifts is more difficult and uncommon than a lot of people think.

- Set expectations about everything with everyone as soon as possible almost all the time.

- Don’t be scared to say you don’t know something.

- Test reports shouldn’t be long and take hours to complete. Longer doesn’t equal better.

- Don’t base testing success on the actions closest to the changes you’re making (which are likely vanity metrics). Go for the $$ and don’t let anyone talk you out of that.

- Annualized ROI projections are B.S. Don’t let anyone tell you otherwise and don’t get talked into doing them anyway just because a CEO told you to do it.

- Everyone is human just like you, so don’t let role titles and company logos freak you out.

Crucial steps in experimentation planning

Love this! I actually created a resource for this since so many teams struggle with it. We’re offering it here for anyone who reads this. Pre-test work is one of the biggest challenges and areas of opportunity for nearly every client. It’s one of the things I’m most passionate about because it always makes such a difference in results.

Navigating test results and strategy adaptation

There are really three main outcomes from a test: win, loss, and inconclusive. Some practitioners include a “flat” option; however, that’s never clearly defined and varies from person to person. I rarely use it, and if I do, it’s a subtag of the primary three I mentioned first.

There’s more to a test than just that layer though. That layer (the outcome) needs to be paired with action. If nothing is done with the results, then what is the point of doing it at all? Therefore, there are three main actions that come from a test: implement, iterate, and abandon. I believe this came from some Microsoft content a few years ago. I would call this a sublayer underneath the outcome layer.

Another nuance: was it a superiority or non-inferiority test? To me, this layer is actually above the two aforementioned ones. In many cases, it makes sense to have a hierarchy of analysis and decision layers like this:

- Superiority, non-inferiority (decision layer, pre-launch)

- Win, loss, inconclusive (analysis layer, post-launch)

- Implement, iterate, abandon (decision layer, post-launch)

Some teams consider the second and third layers separately for superiority tests and non-inferiority tests, which can drastically affect overall program reporting (e.g., win rates, avg. impact per success, etc.). I personally prefer this approach where each bucket (superiority and non-inferiority) is distinct.
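As a rough illustration of how the two test types differ at analysis time, here is a minimal sketch using a one-sided z-test on conversion rates. The function name `z_test_diff`, the absolute `margin` parameter, and the sample numbers are all assumptions made for this example; they do not come from any particular testing tool, and a real program would follow whatever statistics its tool actually uses.

```python
from math import sqrt
from statistics import NormalDist


def z_test_diff(conv_a, n_a, conv_b, n_b, margin=0.0):
    """One-sided z-test that (rate_b - rate_a) is greater than -margin.

    margin = 0.0 -> superiority test: is B better than A?
    margin > 0   -> non-inferiority test: is B no worse than A by more
                    than `margin` (an absolute difference in rate)?
    Hypothetical helper for illustration only.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Unpooled standard error of the difference between two proportions
    se = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = (rate_b - rate_a + margin) / se
    p_value = 1 - NormalDist().cdf(z)
    return z, p_value


# Made-up data: control converts 500 of 10,000, variation 510 of 10,000
_, p_superiority = z_test_diff(500, 10_000, 510, 10_000)
_, p_non_inferiority = z_test_diff(500, 10_000, 510, 10_000, margin=0.005)

print(f"superiority p-value:     {p_superiority:.3f}")
print(f"non-inferiority p-value: {p_non_inferiority:.3f}")
```

With these made-up numbers, the same data is far from significant as a superiority test but clears a 0.05 threshold as a non-inferiority test with a half-percentage-point margin, which is why the test type has to be decided before launch and why the two buckets report so differently.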

As far as how to make a decision, there are nuances to consider on a case-by-case basis. This is a great example of where having a skilled CRO expert is important.

Regarding the analysis layer, that should be more straightforward (kind of). Typically, you’re aiming for 90-95% significance with superiority tests and directional results (that don’t have to reach full significance) in non-inferiority tests. A few major considerations that get missed a lot by teams:

- Are you doing fixed-horizon or sequential testing?

- Have you QA’d your analytics platform recently?

- Have you QA’d the data from any integrations recently?

- Have you checked for SRM?

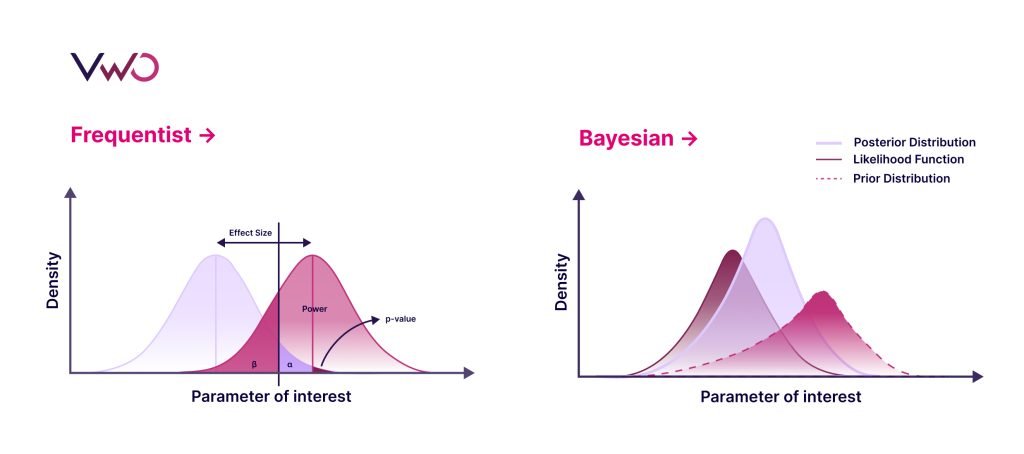

- Are you using Bayesian or Frequentist stats?

- Are you running one- or two-tailed tests?

Don’t get me wrong, there are others; however, this is a solid starting list to go through. Answers to these questions help to guide adaptations post-test – and pre-test as well.
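For the SRM (sample ratio mismatch) question in the list above, a check can be sketched as a chi-square goodness-of-fit test on visitor counts. This is a minimal two-variation example under stated assumptions: the function name `srm_p_value`, the 50/50 expected split, and the 0.001 alert threshold are illustrative choices, not a standard from any specific tool.

```python
from math import erfc, sqrt


def srm_p_value(visitors_a, visitors_b, expected_share_a=0.5):
    """Chi-square goodness-of-fit p-value for a two-variation split.

    Hypothetical helper: compares observed visitor counts with the
    split the test was configured to deliver.
    """
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # Survival function of the chi-square distribution with 1 degree
    # of freedom, written with the complementary error function
    return erfc(sqrt(chi2 / 2))


# Made-up counts for a test configured as a 50/50 split
p = srm_p_value(10_000, 10_600)
if p < 0.001:  # assumed alert threshold
    print(f"Possible SRM (p = {p:.6f}): check the setup before trusting results")
else:
    print(f"No SRM detected (p = {p:.3f})")
```

A very small p-value here says the traffic split itself looks broken, so the conversion results should not be read until the cause is found.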

Thoughts and insights on iterative testing

It’s a non-negotiable, must-do in testing programs. Most tests should be iterated upon. Main reason? There are ‘n’ ways to execute most tactics. Think about it…heroes, creative, journeys, forms, messaging, information architecture, etc. There’s not just one way to design a hero. There’s not just one way to execute a form. Run multiple iterations – and run multiple variations per test if you have enough data volume. This will increase speed tremendously. Even if you find winners, oftentimes it makes sense to iterate still and try to beat them.

Measuring CRO success and priority metrics

Usually, I aim to define success by using metrics closest to money like transactions, revenue during a test, leads, renewals, etc. These are always the ones I aim to prioritize as well. Practitioners still disagree amongst themselves on this topic, which is mind-boggling to me. Some still choose vanity metrics and choose “the action closest to the change.” I completely disagree with that most of the time as I think the correct choice to make is clear. There’s a whole bucket of excuses as to why people disagree, which I’m always happy to chat through and address anytime. I’m very passionate about the topic of choosing primary metrics vs. secondary metrics. Check out my video on this topic here.

Common experimentation mistakes to avoid

Here are some of the top ones from my perspective:

- Don’t get talked into using vanity metrics AKA high-funnel metrics as your primary success metrics – for individual tests or entire programs.

- You must know the difference between fixed-horizon testing and sequential testing, and you need to know enough about each to make sure you’re approaching and analyzing tests properly. It’s also important because if you don’t know about these, that means you don’t know about your testing tool either. This isn’t an optional topic area.

- Don’t freak out about whether you’re 100% on the side of Bayesian or Frequentist supporters. Know that it’s okay if you live in some gray areas. However, just make sure you have the proper knowledge to make educated choices. And again, know about this relative to your testing tool.

- Many people try to demonstrate their expertise by trying to sound technical and complicated. This is a huge mistake. In my opinion, expertise is demonstrated through the ability to simplify concepts and bring people along with you in a way that’s easy and engaging.

- Don’t assume people know about CRO things even if they say they do. Related, don’t overcomplicate things. Remember, most people aren’t CRO nerds at heart and haven’t studied it to the level of someone who specializes day-to-day in it.

- If you’re in a consultative/SME role, you must actually be consultative and authoritative. Too many people are overly timid and submissive out of fear. I would say that’s one of the most common reasons at least.

Experience using VWO for testing

I’ve had great experiences using VWO. The visual editor is easy to use, and VWO’s support team is always fantastic!

Key steps for building a culture of experimentation

This needs a short-term, mid-term, and long-term plan. It’s usually a long play that takes a while to fully take hold.

Here’s a quick tactical list with a few ideas:

Newsletters:

Weekly, monthly, or quarterly CRO newsletters (or some combination of those) with pertinent testing and research updates circulate information quickly, easily, and frequently (if you do them weekly). Add your test idea submission form each time to keep it top-of-mind, too.

Structured meeting cadences:

Weekly syncs, end-of-month reviews, and quarterly business reviews are all important and have different purposes. Get these on the calendar with the appropriate team members. Weekly meetings are tactical while monthly and quarterly meetings are more high-level and strategic.

Playbooks:

Every program should have a living document that outlines everything about your CRO program from beginning to end.

Consistent documentation and reporting:

Please have reporting dashboards and documentation templates for testing and research. This is so easy to do, but it’s often skipped.

Test scorecards:

This is related to documentation, and it’s something I’ve heard a number of practitioners use now. Create a one-slide TLDR for every test that can easily be shared with the C-suite (or anyone in a company) in a slide or screenshot.

Chat channels:

This can be a fun way to get more buy-in. Have a CRO Slack channel, for example, where you make test announcements, share recent research learnings, etc.

Test idea submission forms:

This allows everyone to get involved in CRO in a really easy way and helps to shift people’s habits toward data-driven decision-making.

Envisioning the future of CRO and A/B testing

Two huge topics of convo in my circles recently relative to this: AI and the sunsetting of third-party cookies.

On the topic of AI, I’m not someone who thinks a majority of our job as a CRO practitioner will be taken by technology. I think we’ll likely just have new tools appear that we can use to supplement our work in different ways than we’ve had in the past. I think many things get overhyped. Even if AI were able to fully take over CRO roles, I think humans will always prefer to work with humans more than those overhyping things think.

On the topic of cookies, most people I’ve talked to so far seem to be in a similar position relative to their understanding of the situation and what they’re going to do about it, which is (a) we don’t understand it well and (b) we aren’t 100% sure what we’re going to do about it. There have been one or two anomalies to this, but even then, those companies are still figuring some things out. This seems to be something that we’ll help each other figure out and understand together as the situation evolves in 2024 and beyond.

Relative to other evolutionary topics and my predictions, I’ll just say that the industry moves much slower than most say or think in my opinion. There are always tons of lofty projections in people’s answers to this type of question each year, but the reality is that things almost always move much more slowly and change much less year-over-year than predicted.