SEO A/B Testing: Boost Organic Traffic with Data-Driven Tests

A growth lead at a large eCommerce brand once changed a single word in their product titles.

Just one!!

Nothing fancy. Nothing technical.

All they did was add a stronger intent signal, shifting from “Running Shoes – BrandName” to “Buy Lightweight Running Shoes | BrandName” across 120 product pages.

Four weeks later, those product pages were pulling two to three times as many organic clicks as before.

It wasn’t luck; it was an SEO A/B test.

And it proved something every SEO knows deep down: even tiny, intent-driven changes can move the needle, but only if you test them systematically.

This blog walks you through exactly how SEO A/B testing works so you can uncover similar wins, minus the guesswork.

What is SEO A/B testing?

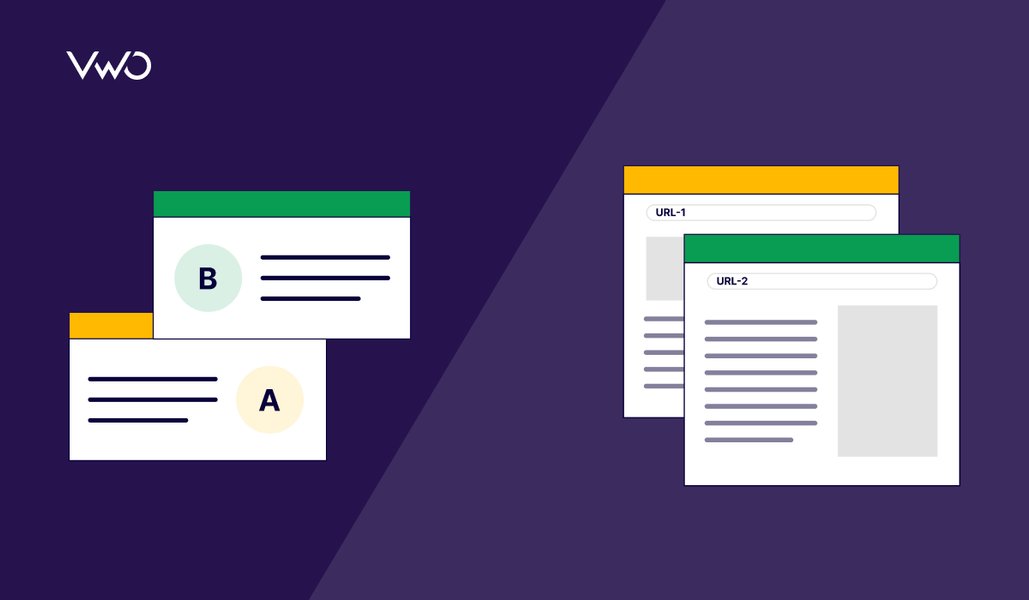

SEO A/B testing, or SEO split testing, is a page-level experimentation method used to measure how on-page changes influence rankings, impressions, and organic traffic. Instead of assuming what Google might reward, you apply a specific SEO change to a subset of pages and compare their performance against pages that remain unchanged.

Unlike CRO A/B tests, where users see different page versions, SEO A/B testing ensures Google bots consistently see one version per page. This allows search engines to crawl, index, and evaluate the impact of your changes accurately.

A simple example:

Imagine you manage 200 product pages that all follow the same template. The current title tag format is:

“Running Shoes – BrandName”

You believe adding intent-driven modifiers (like “Buy,” “Shop,” or “Lightweight”) might help rankings and CTR. So you:

- Keep 100 pages unchanged → Control

- Update 100 pages to: “Buy Lightweight Running Shoes | BrandName” → Variant

Over the next few weeks, Google bots will crawl both sets. If the variant pages begin to show higher impressions, better average positions, or increased organic clicks, you get concrete proof from real data that this title format is stronger for SEO.

This approach offers a low-risk way to validate SEO ideas on a small group of pages before rolling changes out site-wide.

Benefits of SEO A/B testing

SEO A/B testing helps you understand what truly works for your site by validating changes with real performance data. Here’s how this approach strengthens your search strategy:

Make confident, data-backed SEO decisions

SEO A/B testing replaces guesswork with evidence. Instead of relying on assumptions or generic best practices, you learn how Google interprets and evaluates your on-page changes, using real data, not intuition.

This helps you build a more reliable, predictable search engine optimization strategy grounded in measurable signals like relevance, crawlability, indexing behavior, CTR, and ranking stability.

Identify winning changes

By testing controlled variations of elements such as title tags, meta descriptions, internal links, or content structure, SEO A/B testing shows you exactly which updates drive meaningful improvements in impressions, clicks, and rankings.

Instead of guessing, you get statistical clarity on which version performs better, helping you scale changes that are proven to work.

Reduce the risk of making harmful site-wide changes

Large SEO updates can carry significant risk if applied blindly. With A/B testing, you experiment on a small, controlled set of URLs before making global changes.

If performance drops, you stop the test and avoid rolling out a change that could have negatively affected your entire site.

Strengthen user experience

Many SEO refinements, like clearer headings, improved content layouts, or faster load times, also make pages easier and more engaging for users.

Testing lets you measure engagement signals such as:

- Higher Click-Through Rate (CTR): A winning title tag or meta description means more users choose your link from the search results.

- Lower Bounce Rate: An optimized page keeps visitors from immediately leaving.

- Longer Time on Page/Dwell Time: Users spend more time consuming your content.

Better user signals often reinforce ranking improvements, creating a virtuous cycle.

Increase ROI

SEO resources are limited. Testing ensures your team focuses only on initiatives that produce measurable gains. Instead of spreading time across dozens of unproven ideas, you invest in the ones that deliver clear performance improvements, leading to stronger efficiency and higher return on effort.

Understand your audience more deeply

SEO A/B testing also reveals how different audience segments respond to specific page elements. When a variation drives consistently higher CTR or engagement, it gives you actionable insight into user preferences that can guide content, UX, and marketing decisions.

Gain a competitive advantage

Because the tests reflect how your specific templates and audience behave, you uncover insights your competitors can’t replicate from generic SEO guides. Consistent testing helps you adapt faster and win more consistently in competitive SERPs.

Website suitability for SEO A/B testing

SEO A/B tests are most effective when your website has stable traffic and well-structured, scalable page templates. Not every website can run reliable tests, and forcing experiments on unsuitable pages often leads to inconclusive results. Below are the key factors that determine whether your site is a good fit:

You have multiple pages following the same template

SEO A/B testing works best when you can divide a large set of similar, uniform pages into a control group (A) and a variant group (B). For the test to be reliable, these pages should share comparable traffic levels, ranking patterns, link profiles, and content structure.

When both groups start from the same baseline, the only meaningful difference in performance comes from the specific change you’re testing, making your results clean and trustworthy. Here is how common website types compare:

| Website Type | Suitability | Reason |
| --- | --- | --- |
| eCommerce Sites | Perfect | You can test a change (e.g., adding “Free Shipping” to the product title) across hundreds of product pages in the same category. |
| Publisher/News Sites | Excellent | You can test a content change (e.g., article formatting) across a category of blog posts. |
| Local Business Sites | Good | If you have many location pages (e.g., “Service in City A,” “Service in City B,” etc.) that use the same template. |
| Small Sites (or single pages) | Not suitable for traditional SEO A/B testing | Testing just two pages (like your Home Page vs. another page) will give you unreliable results because their starting authority, content, and search intent are too different. |

You receive sufficient organic traffic

Low-traffic pages make it difficult for Google Search Console to produce statistically reliable results, which can weaken the impact of your SEO efforts. To detect uplift in impressions, rankings, or clicks, the test pages need consistent search visibility.
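A quick way to act on this is to screen pages before they enter a test. The sketch below is only an illustration: it assumes you have exported per-page impressions into a dictionary, and both the function name and the `MIN_IMPRESSIONS` threshold are hypothetical, not a rule from Google or any tool.

```python
# Hypothetical threshold: choose a value that suits your own traffic levels.
MIN_IMPRESSIONS = 500


def eligible_pages(impressions_by_url: dict[str, int],
                   min_impressions: int = MIN_IMPRESSIONS) -> list[str]:
    """Return the URLs with enough search visibility to include in a test."""
    return sorted(
        url for url, impressions in impressions_by_url.items()
        if impressions >= min_impressions
    )


# Made-up numbers, for illustration only.
sample = {"/shoes/a": 1200, "/shoes/b": 90, "/shoes/c": 640}
print(eligible_pages(sample))  # ['/shoes/a', '/shoes/c']
```

Pages that fall below the cutoff can stay on the site untouched; they are simply left out of both groups.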

Your rankings are relatively stable

If your pages are experiencing major fluctuations due to seasonality, algorithm updates, or indexing issues, it becomes harder to isolate whether a performance change came from the test or external factors.

The site is technically sound

Technical stability is essential for SEO A/B testing. If Google cannot crawl or evaluate your pages consistently, the test results will be unreliable. Before you launch any test, make sure the following conditions are in place:

- Canonical tags are correct: so Google doesn’t treat variant pages as duplicates.

- Your platform supports selective, page-level changes: allowing Googlebot to consistently crawl the intended version.

- Users and Googlebot see the same content: avoiding cloaking and ensuring the test remains compliant.
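The canonical check in the list above can be partly automated. Here is a minimal sketch using only Python's standard library. It assumes each test page should declare exactly one canonical tag that points at its own URL, which is a common setup for page-split tests but may not match yours. The class and function names are illustrative.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def has_self_canonical(html: str, page_url: str) -> bool:
    """True when the page declares exactly one canonical, pointing at itself."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals == [page_url]
```

The comparison is an exact string match, so differences such as a trailing slash would be reported as a mismatch. You may want to normalize URLs first.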

You can make controlled changes

Your CMS or tech stack should allow selective updates to a subset of URLs. If your templates can’t be edited in isolation, controlled testing becomes difficult.

How does SEO A/B testing work?

SEO A/B testing works by using a controlled, page-based experiment to measure how a single change impacts a large group of pages, allowing you to isolate the effect of that change on organic search performance.

It follows a step-by-step process that is fundamentally different from traditional Conversion Rate Optimization (CRO) A/B testing. Here is the step-by-step breakdown:

Step 1: Define the hypothesis

Before making any change, you form a clear, testable prediction based on data or observation.

- Example Hypothesis: “Changing the product title tag on eCommerce category pages to include the brand name will increase the organic Click-Through Rate (CTR) by 10%.”

- Key Metric: You define the specific metric you want to measure (e.g., organic clicks, impressions, CTR, or average position).

Step 2: Define control & variant groups (The Split)

This is the most critical difference from CRO testing. You split the pages, not the users.

- You take a large group of similar, templated pages (e.g., all your blog posts, or all your product pages).

- You randomly divide them into two balanced groups:

  - Control Group (A): Pages that remain completely unchanged. They provide the baseline performance.

  - Variant Group (B): Pages that receive the specific change defined in the hypothesis (e.g., the new title tag).
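One possible way to get a split that is both random and balanced is to pair pages with similar traffic and flip a coin within each pair. The sketch below assumes you have baseline clicks per URL; the function name, the pairing approach, and the seed are illustrative choices, not a prescribed method.

```python
import random


def split_pages(baseline_clicks: dict[str, int],
                seed: int = 42) -> tuple[list[str], list[str]]:
    """Split URLs into balanced control and variant groups.

    Pages are ranked by baseline clicks and taken two at a time. Within each
    pair, a random shuffle decides which page is control and which is
    variant, so both groups end up with a similar traffic profile.
    """
    rng = random.Random(seed)  # a fixed seed keeps the split reproducible
    ranked = sorted(baseline_clicks, key=lambda url: (-baseline_clicks[url], url))
    control, variant = [], []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)
        control.append(pair[0])
        variant.append(pair[1])
    # With an odd number of pages, the lowest-traffic page is left out.
    return control, variant
```

Keeping the seed fixed means you can regenerate the same groups later when you analyze the results.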

Step 3: Implement the change

Apply the update only to the Variant Group (B) while leaving the Control Group untouched. This is usually done at the server or template level to ensure that users and Googlebot see an identical version of each Variant page, preventing cloaking and preserving the integrity of the test.
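To make the "same version for users and Googlebot" idea concrete, here is a rough sketch of a template helper. It assumes the variant pages are listed in a set of URL paths; the paths, the function name, and the title formats are hypothetical and simply mirror the running-shoes example from earlier.

```python
# Hypothetical paths for the pages assigned to the Variant Group.
VARIANT_URLS = {"/shoes/trail-runner", "/shoes/road-racer"}


def title_for(path: str, product_name: str, brand: str) -> str:
    """Build the title tag from the URL's test group only.

    The request's user agent is deliberately not an input, so visitors and
    Googlebot always receive the same title for a given URL.
    """
    if path in VARIANT_URLS:
        return f"Buy {product_name} | {brand}"
    return f"{product_name} – {brand}"


print(title_for("/shoes/trail-runner", "Lightweight Running Shoes", "BrandName"))
# Buy Lightweight Running Shoes | BrandName
```

Because the only thing that decides the output is the URL, there is no code path that could show a crawler something different from what a visitor sees.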

Step 4: Run the Test

Let the test run long enough for Google to crawl and evaluate both groups, typically 2 to 6 weeks, depending on traffic. During this period, the Control and Variant pages continue to compete in search results under normal conditions, allowing you to observe how Google responds to the change over time.

Step 5: Measure and analyze results

Once the test period ends, compare the performance of the Control and Variant groups using data from tools like Google Search Console and Google Analytics. Look for meaningful differences in impressions, clicks, CTR, or average position.

Because the Control Group experiences the same external conditions (seasonality, algorithm updates, competitor shifts), any statistically significant uplift in the Variant Group can be attributed to the change you introduced, not outside noise.
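The logic of comparing against a control can be sketched as a simple difference-in-differences style calculation: measure how much each group changed from the pre-test period to the test period, then subtract the control's change from the variant's. The function names and click totals below are made up for illustration, and the sketch does not test for statistical significance, which you would still need to check separately.

```python
def relative_change(before: float, after: float) -> float:
    """Fractional change from the pre-test period to the test period."""
    return (after - before) / before


def estimated_uplift(control_before: float, control_after: float,
                     variant_before: float, variant_after: float) -> float:
    """Variant change minus control change."""
    return (relative_change(variant_before, variant_after)
            - relative_change(control_before, control_after))


# Made-up click totals, for illustration only.
uplift = estimated_uplift(control_before=10_000, control_after=10_500,
                          variant_before=10_000, variant_after=11_800)
print(f"{uplift:.1%}")  # 13.0%
```

In this example the variant grew 18% while the control grew 5%, so roughly 13 percentage points of the growth would be credited to the change rather than to outside conditions.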

Step 6: Rollout, Rollback, or Iterate

Based on the analysis, you decide on the next step:

| Outcome | Decision | Action |
| --- | --- | --- |
| Variant Wins | The change improved organic performance. | Rollout: Apply the winning change to all similar pages across the entire website. |
| Control Wins | The change had a negative impact. | Rollback: Immediately revert the Variant pages to the Control version. |
| Inconclusive | The result was not statistically significant. | Iterate: Rerun the test for a longer period, or refine the hypothesis and try a bolder change. |

This rigorous process ensures that every large-scale optimization deployed to the website is backed by clear, measurable evidence of its positive impact on organic search performance.
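If you want to encode the decision table in a script or dashboard, it could look something like this. The function name is hypothetical, and the `significant` flag is assumed to come from whatever statistical check your team or tool already applies.

```python
def next_step(uplift: float, significant: bool) -> str:
    """Map a test result to the rollout, rollback, or iterate decision."""
    if not significant or uplift == 0:
        return "iterate"  # inconclusive: run longer or try a bolder change
    return "rollout" if uplift > 0 else "rollback"


print(next_step(0.13, significant=True))    # rollout
print(next_step(-0.05, significant=True))   # rollback
print(next_step(0.13, significant=False))   # iterate
```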

Not sure where to start? Here’s a curated list of SEO A/B testing tools that can help you set up tests the right way.

Best practices for SEO A/B testing

Running SEO A/B tests requires careful execution to ensure your results are accurate, compliant, and meaningful. These best practices will help you maintain test integrity and avoid common pitfalls.

- Avoid cloaking: The fundamental rule is that Googlebot and human users must see the same content for a given URL, whether it is the Control or the Variant. Never show the search engine crawler one version and the human user a different one.

- Server-side testing: Prioritize server-side implementation. This serves the different HTML versions directly, which is the safest and most reliable way to ensure Googlebot consistently sees the intended test group version without relying on client-side loading delays.

- Test one variable at a time: SEO pages have many interconnected elements. Testing multiple changes together (e.g., updating title tags and headers) makes it impossible to isolate what caused the outcome. Keep each test focused on a single element.

SEO A/B testing examples

SEO A/B testing delivers the best results when you experiment with elements that directly influence how Google interprets your content or how users engage with search results. Here are practical examples of experiments teams commonly run, along with what each test aims to uncover.

Title tag optimization

What you test:

Different formats, keyword placements, or phrasing across similar pages to see which title communicates relevance more effectively.

Goal:

Increase CTR and strengthen alignment with primary and secondary search intent.

Example:

Testing “Buy Lightweight Running Shoes | BrandName” against “Running Shoes – BrandName” to see which variant attracts more clicks from search results.

Meta description rewrites

What you test:

Benefit-driven messaging, clearer value propositions, or richer snippets that highlight key differentiators.

Goal:

Improve organic CTR by making the SERP listing more compelling and user-focused.

Example:

Comparing a generic description “Shop our latest styles” with a more benefit-led version that highlights free shipping, sizing options, or unique product features: “Lightweight running shoes with free shipping and extended sizes.”

Want to go deeper into crafting high-impact copy variations for tests? Watch VWO’s webinar “The Science of Persuasive Messaging Using AI & A/B Testing”, where Brian Massey shares how to design message experiments that resonate with different audience segments.

Header (H1/H2) restructuring

What you test:

Refining the header hierarchy or adjusting H2/H3 formats to make the content clearer and more aligned with user intent.

Goal:

Enhance topical relevance and help Google interpret the structure and purpose of the page more accurately.

Example:

Switching from a generic, feature-led H2 “Product Features” to a more intent-aligned H2 that answers what users search for: “How to Choose the Right Running Shoe.”

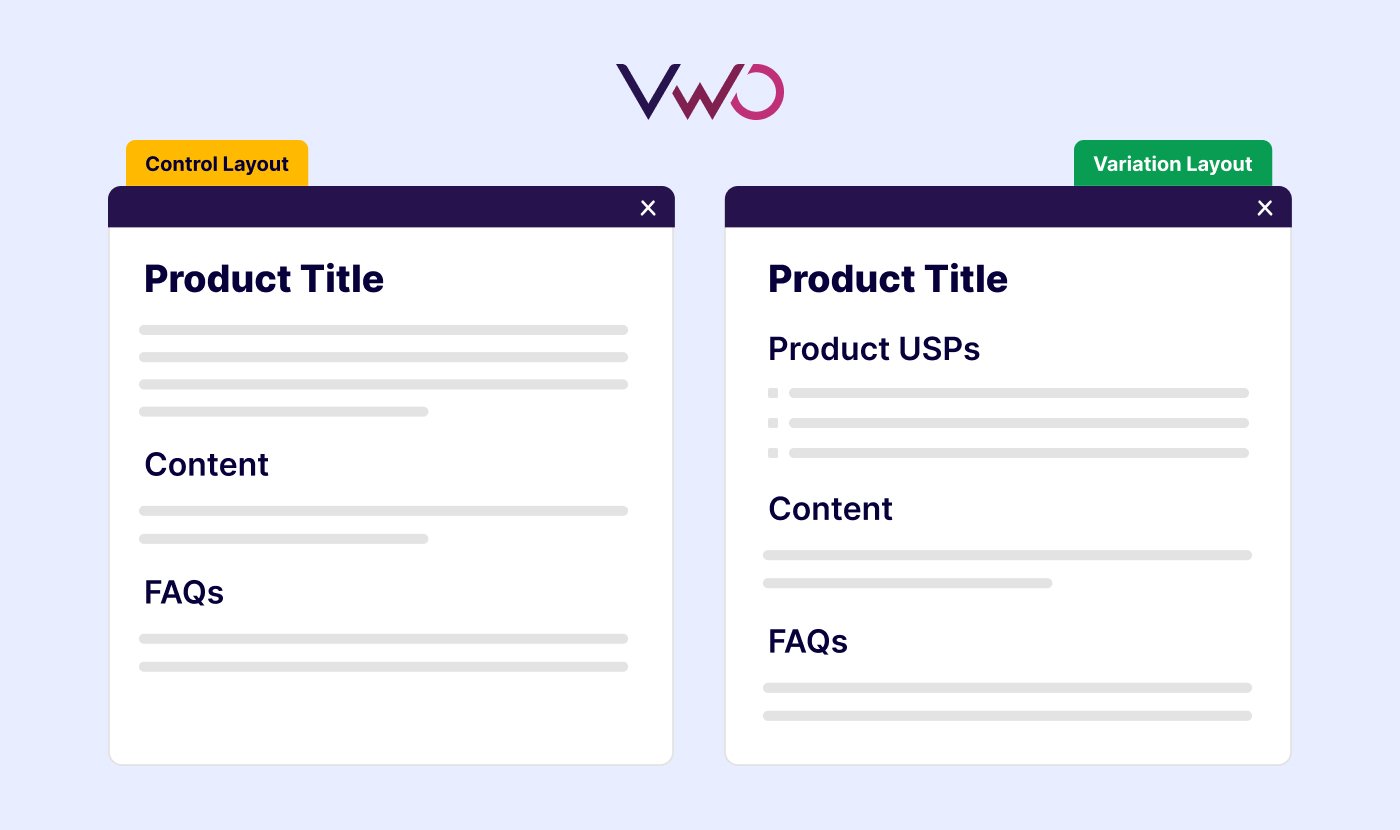

Content depth or placement adjustments

What you test:

Reordering core elements or expanding topic coverage with additional supporting sections.

Goal:

Strengthen topical authority, improve engagement signals, and increase ranking stability.

Example:

Comparing the current layout, where FAQs or product USPs sit lower on the page, with a variation that brings them higher or includes missing subtopics that more directly address user intent.

Internal linking updates

What you test:

Anchor text variations, link quantity, or the strategic placement of contextual links within templates.

Goal:

Improve crawl flow, distribute link equity more effectively, and reinforce relevance for key landing pages.

Example:

Comparing the current internal linking setup with a variation that adds contextual links from product pages to related category hubs using descriptive, keyword-aligned anchor text: “lightweight running shoes” → category page.

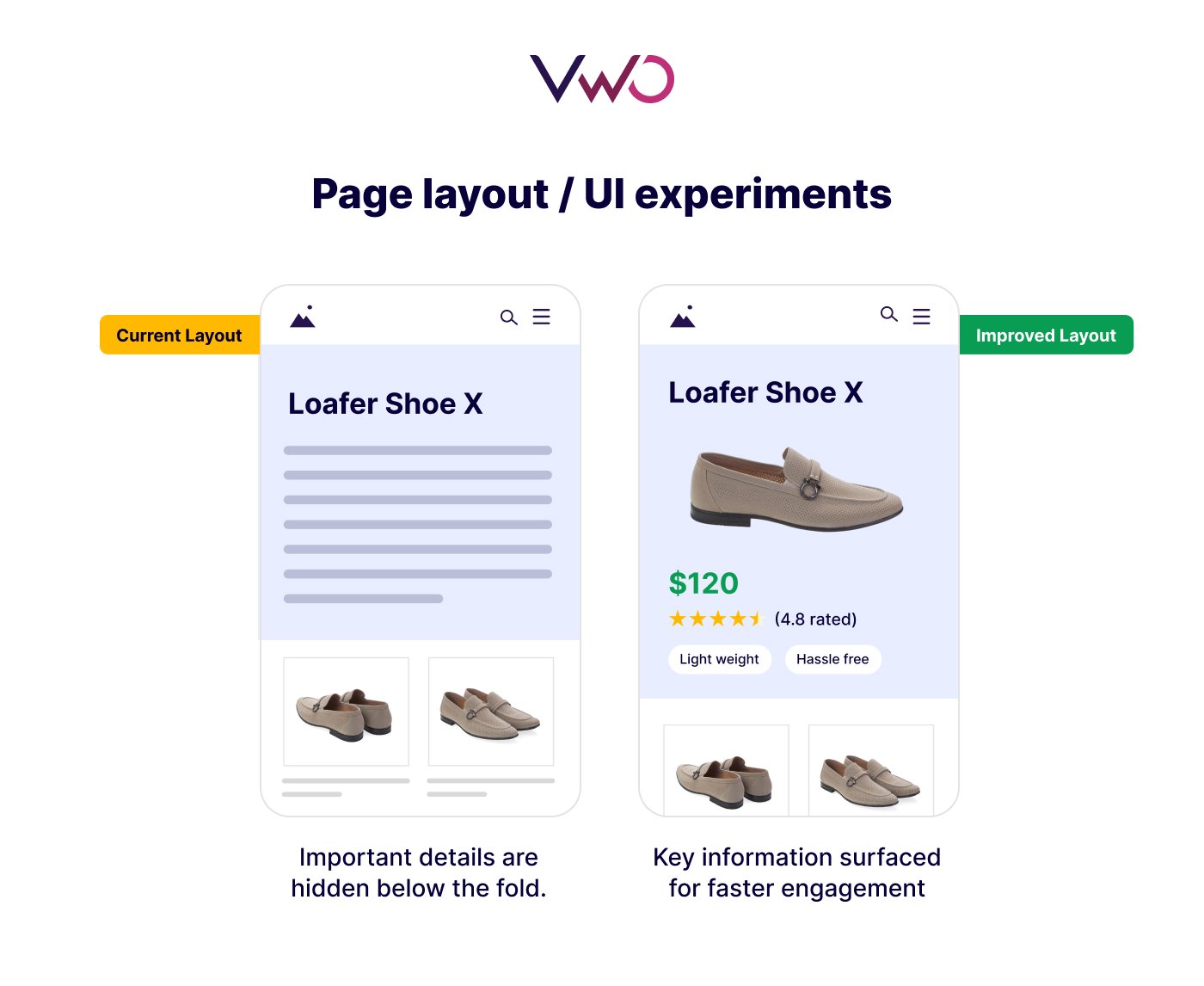

Page layout / UI experiments

What you test:

Adjustments to layout elements that influence readability, clarity, or the prominence of key information.

Goal:

Boost engagement metrics, such as time on page or scroll depth, which often correlate with stronger ranking signals.

Example:

Placing price, ratings, or core benefits above the fold on category pages to improve visibility and engagement (e.g., displaying the product price and star rating immediately below the product title instead of placing them midway down the page).

Navigation or category structure changes

What you test:

Simplifying menu labels, reorganizing hierarchy, or refining category names to improve discoverability.

Goal:

Enhance crawlability, improve user navigation, and help search engines understand site architecture more clearly.

Example:

Comparing intent-focused category names, such as “Trail Running Gear” instead of “Outdoors” in your ecommerce menu, to determine whether clearer naming helps both users and search engines understand your site structure.

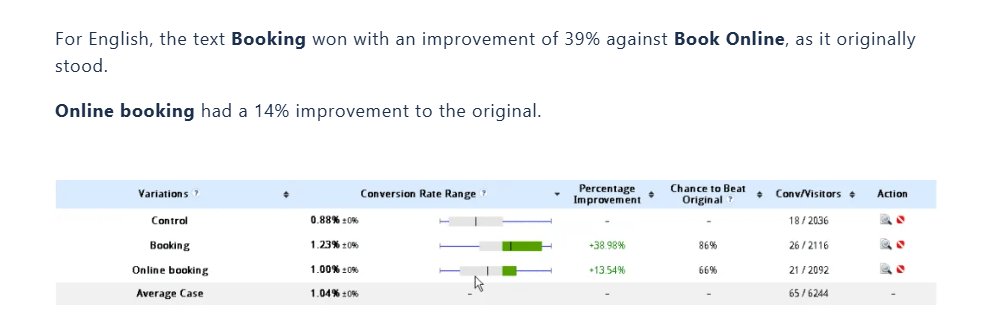

VisitNorway ran A/B tests on its top-menu navigation. For example, they tested different wording for the menu item “Booking” in multiple languages. In English, the variant “Booking” beat “Book Online” with a 39% uplift, while in the Norwegian version, “Order” improved clicks by an impressive 114%.

Beyond the engagement lift, the real insight is how small wording changes sharpen user intent. When navigation labels match the terms people actually look for, they not only improve clicks but also strengthen SEO by clarifying internal linking and helping search engines understand site structure.

In short, VisitNorway’s test shows how UI copy tweaks can double as SEO gains, making navigation clearer for users and more meaningful for search engines.

Page speed and core web vitals optimizations

What you test:

Performance improvements across mobile and desktop, focusing on load speed and visual stability.

Goal:

Evaluate how improvements in Core Web Vitals impact rankings, bounce rates, and user satisfaction.

Example:

Evaluating whether performance enhancements, such as compressing large images, activating lazy loading, or removing blocking JavaScript, lead to faster mobile load times and better ranking signals.

Image optimization tests

What you test:

Enhanced image metadata, such as alt text, file names, captions, or structured data for visual assets.

Goal:

Improve image search visibility and strengthen overall page relevance by providing more accurate contextual signals.

Example:

Updating a generic file name like “IMG_1234.jpg” to a descriptive version, such as “lightweight-running-shoes-blue.jpg”, and enhancing the alt text (“blue lightweight running shoes with cushioned sole”) to better match the product.

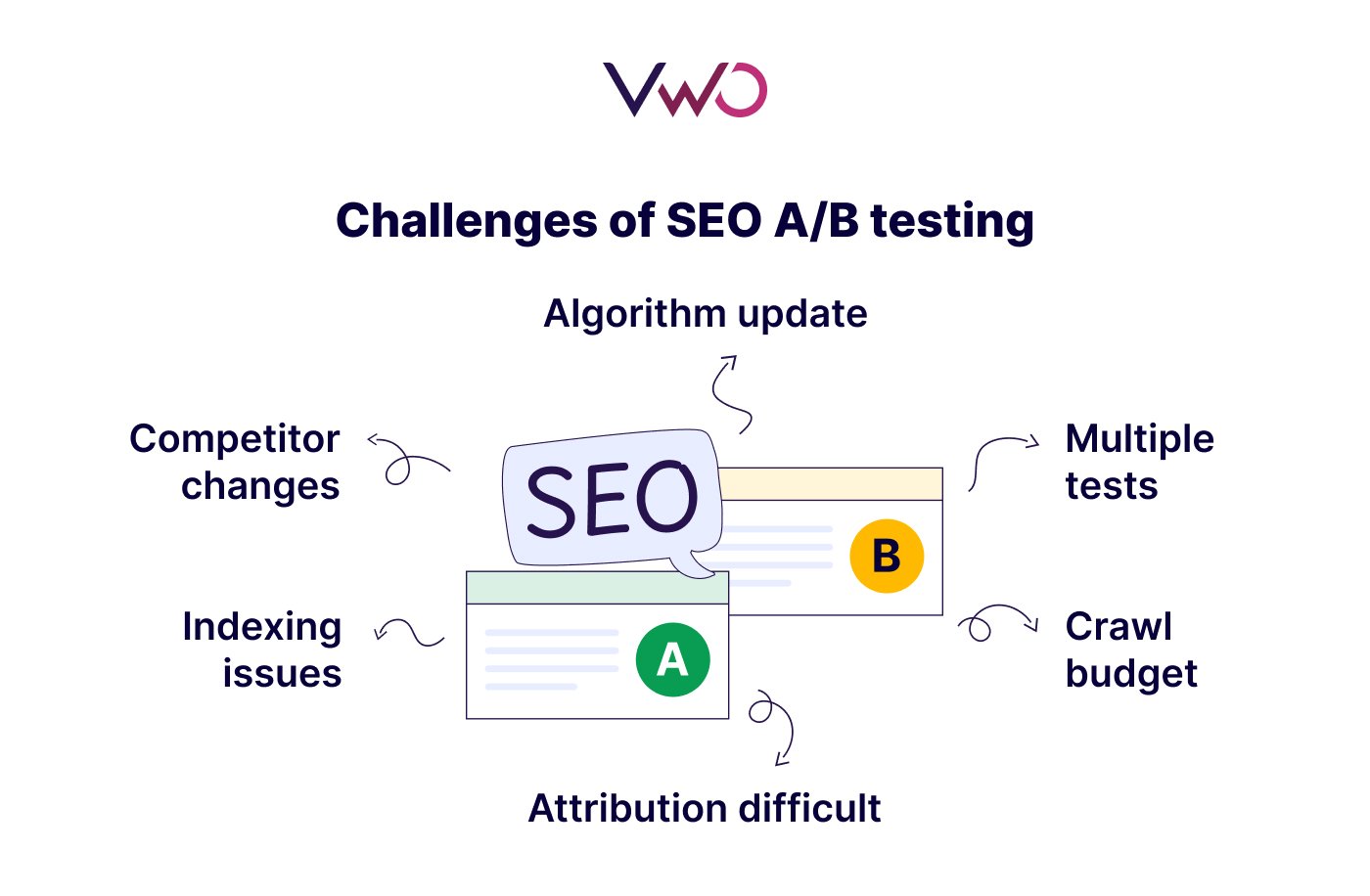
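Renaming files by hand across hundreds of product images is tedious, so a small helper can do it for you. This is a minimal sketch with a hypothetical function name; it assumes a short text description of each image is available.

```python
import re


def descriptive_filename(description: str, extension: str = "jpg") -> str:
    """Turn a short description into a lowercase, hyphenated file name."""
    slug = re.sub(r"[^a-z0-9]+", "-", description.lower()).strip("-")
    return f"{slug}.{extension}"


print(descriptive_filename("Lightweight Running Shoes Blue"))
# lightweight-running-shoes-blue.jpg
```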

Challenges of SEO A/B testing

SEO A/B testing delivers meaningful insights, but it comes with its own set of complexities. Because experiments depend on how search engines crawl, index, and interpret changes, even small missteps can influence rankings or distort results. Here are the key challenges to be aware of:

Slow, unpredictable testing cycles

Unlike CRO tests, where user data accumulates quickly, SEO results depend on how often Google crawls your pages. Variant pages may be crawled at different times, and performance can take weeks to stabilize, making tests naturally slow and sensitive to timing.

Technical pitfalls that affect crawlability and indexing

SEO tests rely on consistent, crawlable page versions. Even minor implementation issues can mislead search engine bots, causing them to evaluate your pages inaccurately. Any of the below can weaken indexing signals or distort test outcomes.

- Accidental cloaking from inconsistent server-side setups

- Duplicate content caused by missing or incorrect canonical tags

- Page speed drops from added scripts or template changes

- Broken internal linking if navigation or link modules shift during testing

Difficulty isolating the true cause of performance changes

Even with strong control groups, SEO experiments are vulnerable to external noise, such as:

- Google algorithm updates

- Competitor content changes

- Seasonal search demand shifts

- Paid campaigns influencing branded queries

Disentangling these factors from the test’s true impact requires careful analysis and multi-metric evaluation.

Limited sample sizes on small or non-templated sites

SEO A/B testing relies on large sets of similar pages. Websites with limited pages, inconsistent layouts, or low search traffic usually lack the volume needed to produce statistically significant outcomes, often resulting in inconclusive or misleading results.

Content consistency risks

Experiments that modify copy, headers, or content hierarchy can unintentionally affect keyword relevance or topical clarity. A well-intentioned variant may improve UX but dilute relevance signals, causing search engine rankings to fluctuate for targeted queries.

Overlapping changes disrupt test clarity

When too many experiments or updates happen at the same time, they create “noise” that reduces Google’s ability to accurately read and classify your site. This leads to three main issues:

- Crawl budget strain: Google has a limited time to check your pages. Extra versions or unnecessary duplicates make it spend that time on the wrong pages, slowing down how quickly your test pages get evaluated.

- Indexation inconsistency: If Google sees different versions of the same page, it may get confused about which one should rank, causing unstable or shifting results.

- Attribution difficulty: When lots of changes, algorithm updates, or competitor moves happen at once, it becomes tough to tell whether a performance change happened because of your A/B test or for some other reason.
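A simple guard against overlapping experiments is to check whether any URL belongs to more than one running test. The sketch below assumes each test is stored as a name mapped to its set of URLs; the structure and names are illustrative.

```python
from itertools import combinations


def overlapping_urls(tests: dict[str, set[str]]) -> dict[tuple[str, str], set[str]]:
    """Return the URLs shared by any two tests, keyed by the pair of test names."""
    overlaps = {}
    for name_a, name_b in combinations(sorted(tests), 2):
        shared = tests[name_a] & tests[name_b]
        if shared:
            overlaps[(name_a, name_b)] = shared
    return overlaps


# Hypothetical running tests.
running = {
    "title-test": {"/a", "/b"},
    "h2-test": {"/b", "/c"},
    "links-test": {"/d"},
}
print(overlapping_urls(running))  # {('h2-test', 'title-test'): {'/b'}}
```

Any URL that shows up here is receiving two changes at once, so its results cannot be cleanly attributed to either test.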

Beyond SEO: A/B Testing for CRO

SEO A/B testing helps you understand what drives better visibility: stronger rankings, improved crawlability, and more qualified traffic. But once visitors land on your website, a different question becomes far more important:

Are they engaging, exploring, and converting?

CRO testing evaluates how real users respond to different versions of content, layouts, CTAs, and experiences, so you can systematically improve engagement and drive measurable business outcomes.

VWO offers you a unified experimentation platform to understand user behavior, test hypotheses, and optimize experience across your entire website. It blends behavioral analytics, A/B testing, AI assistance, and segmentation, making it easier for teams to uncover what drives conversions and why.

VWO Insights validates the quality of your SEO traffic by showing how visitors behave on your site, through heatmaps, session recordings, form analytics, and on-page surveys, and whether they move closer to conversion.

Meanwhile, with VWO Testing, you can do the following:

- A/B & multivariate testing: Experiment with different page elements, layouts, and CTAs to identify which combinations deliver the greatest improvement in conversions and user engagement.

- Split URL testing: Compare two different versions of a page hosted on separate URLs.

- Server-side or code-based testing (via code editor): Use code-based setups to deliver the variation HTML directly, ensuring Googlebot sees the correct version consistently without relying on client-side rendering.

- Custom URL grouping/targeting: Target experiments across sets of similar pages (e.g., all PLPs, blog categories, regional pages). SEO tests often run on page groups, not single pages, and grouping maintains consistent signals and improves the significance of results.

- Custom URL targeting: Run experiments on pages that don’t have consistent URL patterns, making it perfect for SPAs and websites with dynamic or uniquely generated URLs.

- Use pre- and post-test segmentation for deeper insights: Define key audience segments (by device, visitor type, or behavior) before launching your test, so the right users enter each variation. After the test ends, compare outcomes across those same segments to see which groups reacted differently. This approach reveals patterns that aggregate results often hide.

Use VWO Copilot to automate the intensive tasks behind experimentation. It crafts hypotheses, builds experiments, segments audiences, and interprets results, freeing you up to concentrate on the bigger goal: growing conversions quickly and confidently.

Could this work for your team? Request a demo and watch it in action before you take the plunge.

FAQs

What is SEO A/B testing?

SEO A/B testing is the process of modifying elements such as titles, meta descriptions, headers, or on-page content across a set of pages to see whether those changes improve organic visibility, click-through rates, and search traffic.

How does A/B testing affect SEO?

Properly run SEO tests help improve rankings and traffic without harming indexation. But poorly implemented tests, such as running variants too long or showing conflicting content, can confuse search engines and dilute ranking signals.

Does Google allow A/B testing?

Yes. Google runs thousands of A/B tests internally across products. It also supports A/B testing for websites (for CRO), and importantly, Google allows SEO A/B testing as long as it avoids cloaking and follows search engine guidelines like using consistent user-facing content.

What is the difference between SEO A/B testing and CRO A/B testing?

SEO A/B testing measures how changes to page content or structure impact organic traffic and rankings, while CRO A/B testing measures how design, copy, or UI changes influence on-site behavior and conversions. SEO tests rely on server-side changes and must prevent duplicate indexing; CRO tests generally run on the client-side and focus on user experience.