Step Into Your Users’ Shoes: How Session Recordings Decode A/B Test Results

Your A/B test results are in.

Variation A shows a slightly higher bounce rate.

Variation B sees more clicks on a secondary element, but there’s no meaningful lift in final conversions.

You’re staring at dashboards and scanning charts, but the ‘why’ is still a blur. The behaviors are measured, but the user’s motivations remain hidden.

It’s a familiar disconnect for anyone deep in A/B testing.

Quantitative data tells you what happened on a macro level, but it often leaves you guessing about the individual experiences that shaped those outcomes.

Without clarity into what users truly experienced during the test, teams risk misreading results and making misguided decisions.

This gap is widespread. In fact, a Forrester study found that 6 in 10 businesses struggle to analyze timely data and uncover the insights needed to understand customer behavior. Even with structured experiments like A/B tests, many teams lack the behavioral context to interpret outcomes confidently.

The solution lies in complementing A/B testing with qualitative insights, such as session recordings, that reveal the human story behind the data.

Session recordings: Your window into the real user experience

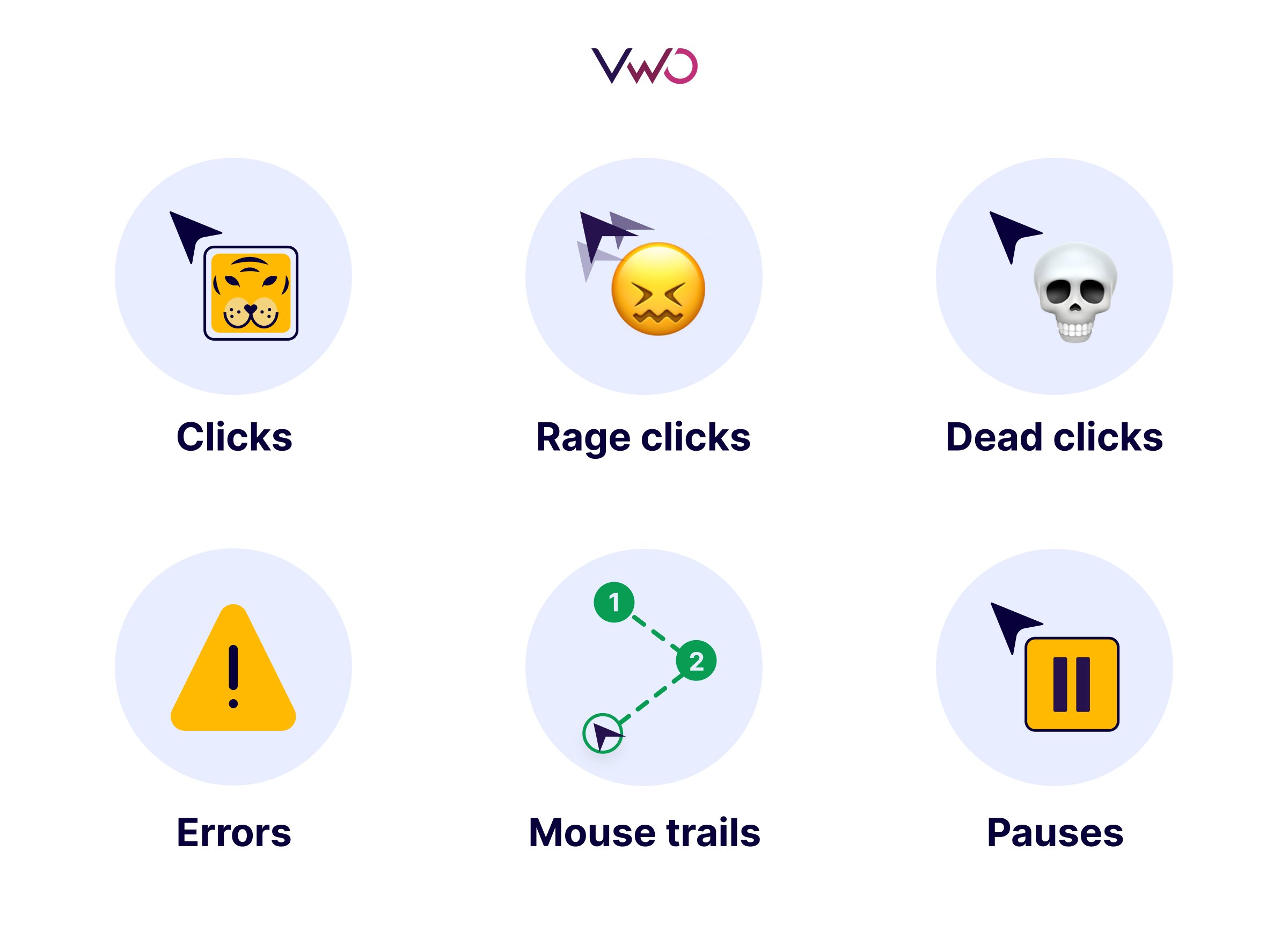

Session recordings, also called session replays, are video-like recordings of real user interactions. They capture every mouse movement, scroll, click, form fill, and page transition, along with deeper behavioral signals like rage clicks, dead clicks, scroll depth, mouse trails, and even user pauses, all exactly as they happened.

This unfiltered view lets you infer what users were thinking and feeling from what they actually did. It’s the closest you can get to sitting beside a user as they explore your test variations, stumbling, succeeding, or silently dropping off. These first-person insights bring empathy back into experimentation.

Decoding the ‘Why’: What session recordings reveal about your A/B tests

1. Pinpoint usability issues and friction points

Session recordings surface subtle signs of user struggle that often go unnoticed in aggregate data. These micro-moments of friction, if ignored, can silently erode conversions.

A/B test insight:

You run an A/B test to improve your checkout experience. Variation B introduces a cleaner and more modern layout. Soon after, the numbers show a dip in conversions. On the surface, nothing looks broken. The layout seems fine.

Session recordings reveal the true story. A user is placing a grocery order on their phone. Everything goes smoothly until they reach the checkout. They need to schedule a same-day delivery. They tap the calendar field to pick a time slot, but nothing happens. They try again. Still no response. A few more taps, a few seconds of hesitation, and then they give up. The order is abandoned.

What seemed like a minor interface glitch turned out to be a major conversion blocker. Without session recordings, this moment of friction would remain hidden beneath the numbers.

What session recordings show:

- Users rage-clicking an unresponsive element in rapid succession

- Cursors hovering over form fields for extended periods, signaling confusion or hesitation

- Repeatedly scrolling up and down in search of submit buttons or key navigation links

- Users opening new browser tabs mid-process to find alternative solutions

- Abrupt exits right after encountering error messages or dead ends
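
To make the first signal in this list concrete, here is a minimal Python sketch of how rage clicks could be flagged from a session's click events. The `Click` structure, the element identifiers, and the thresholds (three clicks within one second) are assumptions chosen for illustration, not the definition any particular recording tool uses.

```python
from dataclasses import dataclass


@dataclass
class Click:
    timestamp_ms: int  # time of the click within the session
    target: str        # hypothetical identifier of the clicked element


def find_rage_clicks(clicks, min_clicks=3, window_ms=1000):
    """Return elements that received at least `min_clicks` clicks within `window_ms`."""
    by_target = {}
    for click in sorted(clicks, key=lambda c: c.timestamp_ms):
        by_target.setdefault(click.target, []).append(click.timestamp_ms)

    flagged = set()
    for target, times in by_target.items():
        # Slide a window of `min_clicks` consecutive clicks over the sorted timestamps.
        for i in range(len(times) - min_clicks + 1):
            if times[i + min_clicks - 1] - times[i] <= window_ms:
                flagged.add(target)
                break
    return flagged


# Illustrative session: three fast taps on a date field, then one tap elsewhere.
session = [
    Click(0, "#delivery-date"),
    Click(300, "#delivery-date"),
    Click(550, "#delivery-date"),
    Click(4000, "#place-order"),
]
print(find_rage_clicks(session))
```

In the grocery checkout example above, the unresponsive calendar field would be the element flagged, which tells you which recordings to watch first.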

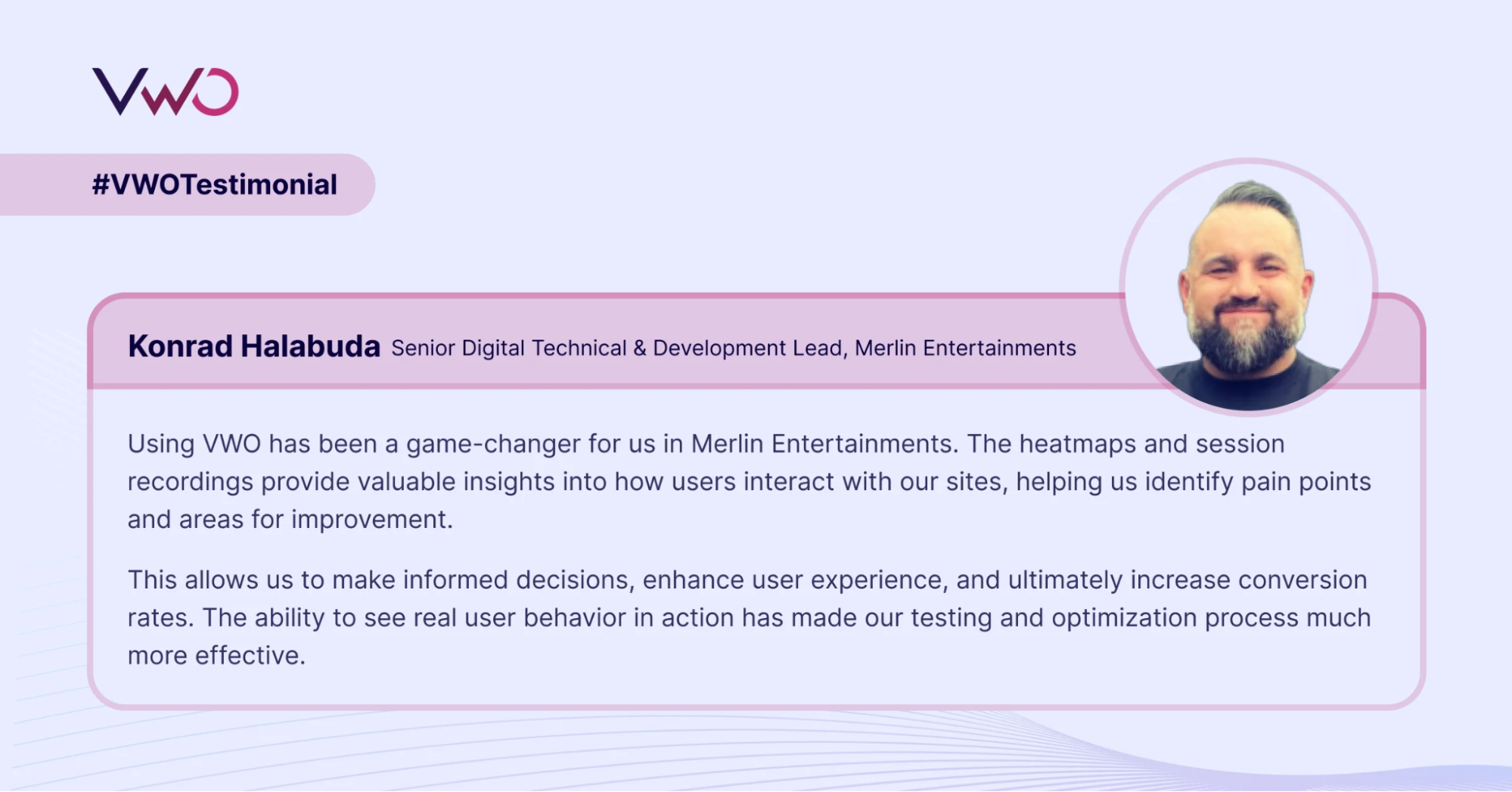

2. Discover what’s actually working and why

Session recordings don’t just highlight friction. They also reveal moments of ease, clarity, and delight. These positive behaviors are just as important to reinforce and replicate.

A/B test insight:

A B2B SaaS company runs an A/B test on its pricing page, and Variation A is winning on conversion rate. Session recordings show users confidently engaging with the new “Why Choose Us” section, navigating pricing tiers without hesitation, and completing the signup flow faster than before.

This qualitative evidence confirms not just that the design worked, but why it worked, making it easier to replicate that success across other high-intent pages.

Positive signals to watch for:

- Users completing primary actions quickly after the page loads

- Direct navigation to key elements without scanning or hesitation

- Seamless progression through multi-step processes without backtracking

- Users engaging with secondary features after completing primary goals

- Smooth scrolling patterns that follow the intended visual flow

3. Understand different user segments

Not every user interacts with your test variation in the same way. Session recordings help you observe behavioral differences across devices, user types, traffic sources, and geographies, giving you a more nuanced understanding of test outcomes.

A/B test insight:

Desktop users are progressing easily through your new variation, but mobile users are abandoning it entirely due to layout issues. This discovery opens the door to segment-specific rollouts or targeted optimization insights that the raw test data couldn’t surface on its own.

Critical segments to examine:

- Mobile users struggling with spacing, touch targets, or dropdowns

- New users hesitating at jargon-heavy copy or unexpected flows

- Desktop users gliding through multi-step processes

- Returning users skipping over repeated content or taking shortcuts

- Logged-in users using navigation differently from guests
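
Before opening recordings for a segment, it helps to know which segment to look at. The sketch below breaks conversion rate down by variation and device in plain Python. The row layout and the sample numbers are hypothetical and exist only to show the shape of the calculation.

```python
from collections import defaultdict

# Hypothetical per-session rows: (variation, device, converted)
sessions = [
    ("B", "desktop", True),
    ("B", "desktop", True),
    ("B", "desktop", False),
    ("B", "mobile", False),
    ("B", "mobile", False),
    ("B", "mobile", False),
    ("A", "desktop", True),
    ("A", "mobile", True),
    ("A", "mobile", False),
]


def conversion_by_segment(rows):
    """Return {(variation, device): conversion rate} for the given session rows."""
    totals = defaultdict(lambda: [0, 0])  # key -> [conversions, sessions]
    for variation, device, converted in rows:
        totals[(variation, device)][0] += int(converted)
        totals[(variation, device)][1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}


rates = conversion_by_segment(sessions)
for (variation, device), rate in sorted(rates.items()):
    print(f"{variation} {device}: {rate:.0%}")
```

A segment that lags far behind the others, like mobile on Variation B in this made-up data, is the one whose recordings are worth watching first.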

4. Get early insights before statistical significance

You don’t have to wait weeks to uncover insights. Session recordings give you an immediate window into user behavior, often surfacing critical issues or standout moments within the first few hours or days of a test launch.

A/B test insight:

Your test has just gone live. Variation A isn’t performing as expected, but it’s too early to draw statistical conclusions. Meanwhile, session recordings show users missing the call-to-action because it’s now positioned below the fold. This insight lets you fix a major issue before it affects your entire test population.

Early signals to watch for:

- Users overlooking a new CTA or not scrolling far enough to see it

- Confusion caused by layout shifts or redesign elements

- Users attempting to interact with non-clickable elements

- Unintended navigation paths that break user flow

- Users bookmarking pages, sharing content, or returning multiple times
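
For a sense of what “too early to draw statistical conclusions” looks like in numbers, here is a sketch of a two-proportion z-test, one common frequentist check. It is an illustration under assumed sample counts, not a description of how any particular testing tool computes significance.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both groups converted at 0% or 100%: no measurable difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical early counts: 12 of 200 visitors converted on A, 18 of 200 on B.
z, p_value = two_proportion_z_test(12, 200, 18, 200)
print(f"z = {z:.2f}, p = {p_value:.2f}")
if p_value >= 0.05:
    print("Not significant yet: use recordings to look for issues in the meantime.")
```

With samples this small, the gap between 6% and 9% is not distinguishable from noise at the conventional 0.05 threshold, which is exactly the window in which recordings are most useful.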

5. Understand the cross-session journey within a test

User decisions, especially in complex funnels, don’t always happen in one session. With session recordings, you can trace how a user interacts with your test variation over time, across multiple visits and touchpoints.

A/B test insight:

A user encounters a glitch on Variation A and leaves. Based on that session alone, you’d assume the variation failed. But recordings show the user returning the next day, skipping past the glitch, and converting. That second visit changes the narrative and highlights the importance of looking at the full user journey, not just isolated sessions.

What session recordings reveal:

- Users abandoning a variation mid-flow, then returning hours or days later

- Sessions that begin with exploration and end in conversion

- Multiple interactions with the same feature across visits

- The influence of earlier sessions on final conversion behavior
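
The difference between judging sessions and judging users can be shown with a short Python sketch. The session log below is hypothetical, covers a single variation, and assumes each visitor can be tied to a stable `user_id` across visits.

```python
from collections import defaultdict

# Hypothetical session log for one variation: (user_id, session_start, converted)
sessions = [
    ("u1", "2024-05-01T10:00", False),  # hits the glitch and leaves
    ("u1", "2024-05-02T09:30", True),   # returns the next day and converts
    ("u2", "2024-05-01T11:15", False),
]


def summarize_journeys(rows):
    """Compare session-level and user-level conversion for the same data."""
    journeys = defaultdict(list)
    # ISO-8601 timestamps sort chronologically as plain strings.
    for user_id, _started_at, converted in sorted(rows, key=lambda r: r[1]):
        journeys[user_id].append(converted)

    session_rate = sum(r[2] for r in rows) / len(rows)
    user_rate = sum(any(j) for j in journeys.values()) / len(journeys)
    # Users whose first session failed but whose latest session converted.
    came_back = [u for u, j in journeys.items() if len(j) > 1 and not j[0] and j[-1]]
    return session_rate, user_rate, came_back


session_rate, user_rate, came_back = summarize_journeys(sessions)
print(f"session-level: {session_rate:.0%}, user-level: {user_rate:.0%}")
print("returned and converted:", came_back)
```

The users in the last list are the ones whose recordings tell the full story: watch their sessions in order rather than in isolation.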

VWO Copilot surfaces critical user insights in minutes, saving you hours of manual review. It identifies key behaviors, navigation flows, and interaction patterns across individual or grouped sessions, then converts those insights into testable hypotheses so you can move quickly from discovery to experimentation.

The missing piece in your A/B testing

You have the metrics and the winning variations. But if your test data is leaving you with more questions than answers, you’re missing a crucial layer of insight. Session recordings help you:

- Empathize deeply with users by witnessing their behavior, not just measuring it.

- Validate winning variations by understanding the behaviors behind the numbers.

- Build better hypotheses by grounding them in real user behavior.

With VWO, you’re not just testing. You’re running a connected optimization program. Session recordings are built right into your A/B test reports, so you can watch user journeys segmented by variation, device, or outcome and clearly see what made a difference.

Start your free trial with VWO and bring your A/B tests to life with session recordings!
