Our CRO Perspectives series captures lessons from practitioners and industry leaders who are reshaping experimentation in real time.

In this 20th post, you’ll hear from a practitioner who identifies not just as a CRO strategist but as an AI-powered one, bringing a distinct lens to the conversation.

Leader: Anwar Aly

Role: AI-powered CRO Strategist / Founder at Zaher.AI

Location: Egypt

Why should you read this interview?

Anwar Aly is a seasoned CRO strategist with experience spanning both agency work at Invesp and entrepreneurship with his startup Zaher.AI.

At Invesp, he worked as a Senior CRO Strategist (Part-time), where he applied behavioral psychology, data-driven audits, and structured testing to improve conversion funnels for eCommerce brands.

His role involved diagnosing funnel leaks, running A/B tests, and guiding clients toward building long-term optimization maturity.

Earlier, as a Conversion Optimization Trainer, he led a one-month program to train CRO interns on fundamentals, AI advancements in experimentation, and Invesp’s structured optimization process.

Building on this foundation, Anwar founded Zaher.AI in 2025 and now serves as its Founder & CEO. Zaher.AI pioneers Generative Engine Optimization (GEO), helping brands, especially across the Arabic web, stay visible on AI-driven platforms like ChatGPT, Gemini, Claude, and more.

What being an AI-powered CRO strategist means in practice

Being an AI-powered CRO strategist means integrating AI not just as a tool, but as a collaborator in every stage of the optimization lifecycle — from research and audit to ideation, testing, and continuous learning.

In practice, this means:

Using AI to amplify capability

I build dedicated AI agents (e.g., custom GPTs) per client that ingest their site data, customer personas, test history, and analytics—allowing me to audit like a senior data analyst and strategize like a full-stack optimization team.

Accelerating execution

By leveraging AI for structured data parsing, heuristic audits, and even messaging variant generation, I compress workflows from hours to minutes.

Improving quality through feedback loops

I don’t delegate blindly to AI. I guide it with context, prompt frameworks, and examples. Then I use it as a reviewer to catch gaps, biases, or missed opportunities.

For example, in every client engagement, I create a dedicated project-trained GPT that understands their brand voice, customer behavior, and past experiments. It becomes their 24/7 CRO assistant, guided by my frameworks and logic, which unlocks insights at scale and enables better collaboration between stakeholders.

Traditional CRO relied on manual depth — slow, meticulous analysis by senior practitioners. AI-CRO, when done right, enables scalable depth, providing mid-level strategists with senior-level reach. It’s no longer about being a technical expert on every platform. It’s about having the right decision mindset and building the right AI workflows to support that mindset.

How CRO is evolving into a risk-mitigation strategy

AI is pushing CRO beyond surface-level quick wins into the realm of strategic risk mitigation, and that shift is already happening in my work.

Quick wins (like tweaking button colors or headlines) are now commoditized. Anyone can ask ChatGPT for best practices on improving cart conversion. The real differentiator now lies in how deep you go and how dynamically you adapt based on actual behavior and prior test learnings.

Here’s what it looks like in practice:

- I build custom AI assistants for each client — trained on their past A/B test logs, personas, analytics segments, and even internal goals. These assistants evolve into knowledge bases that respond in real-time to client prompts, such as:

“What learnings from our last 6 landing page experiments can I apply to this new Facebook campaign?”

- That turns experimentation into a living, contextual asset rather than a disconnected series of one-off tests.

It also influenced how I built CROVA, an AI-powered CRO virtual agent. What started as a custom GPT for internal use has become a product others now use to scale their experimentation thinking without needing deep CRO expertise. By embedding test reasoning, historical learnings, and hypothesis mapping, CROVA helps teams make better decisions before they even launch tests, effectively de-risking major product or UX changes.

How to protect test quality while accelerating with AI

Faster doesn’t mean sloppier if you approach AI as a reviewer, not just a creator. Here’s my approach to preserving quality while speeding up execution:

Step 1: Expert first, AI second

I start by doing a full analysis myself — analytics, heuristics, JTBD, etc. Then I feed those findings into AI (like ChatGPT or CROVA) to get feedback, challenge my assumptions, or reframe them using clearer hypothesis logic.

Step 2: Guardrail prompts

I provide structured prompt templates that enforce hypothesis frameworks, sample size logic, and instrumentation cues. For example, I might prompt:

“Based on the uplift we’re targeting and historical CVR variance, is the current sample size sufficient to reach 80% power?”
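To make the arithmetic behind a prompt like this concrete, here is a minimal Python sketch of a sample size check. It assumes a two-sided two-proportion z-test, a 5% significance level, and equal traffic per variation; the function names `required_sample_per_arm` and `is_sample_sufficient` are illustrative and not part of any tool mentioned in this interview.

```python
from math import ceil, sqrt
from statistics import NormalDist


def required_sample_per_arm(baseline_cvr, relative_uplift, alpha=0.05, power=0.80):
    """Visitors needed per variation for a two-sided two-proportion z-test."""
    p1 = baseline_cvr
    p2 = baseline_cvr * (1 + relative_uplift)  # target conversion rate
    p_bar = (p1 + p2) / 2                      # pooled rate under the null
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def is_sample_sufficient(visitors_per_arm, baseline_cvr, relative_uplift, **kwargs):
    """True if the available traffic per variation meets the requirement."""
    return visitors_per_arm >= required_sample_per_arm(
        baseline_cvr, relative_uplift, **kwargs
    )


# Hypothetical inputs: 3% baseline CVR, 10% relative uplift target.
needed = required_sample_per_arm(0.03, 0.10)
print(f"Visitors needed per variation: {needed}")
print("20,000 per arm is enough:", is_sample_sufficient(20_000, 0.03, 0.10))
```

Running the numbers independently like this gives you a reference point for judging whether the AI's reasoning about power holds up.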

Step 3: AI as validator, not executor

I always ask AI to explain its reasoning: why it suggested a specific test setup or sample size. This prevents blind execution, reduces hallucinations, and improves learning for both me and the client team.

Step 4: Instrumentation automation

I use automation tools like Make.com to fetch test logs, analyze variance over time, and check instrumentation tags before any launch, ensuring data integrity is maintained.

Step 5: Transparency and labeling

I never ship an AI-generated test plan without validating every element. Reports clearly distinguish:

- Insights directly backed by user data

- AI-extrapolated insights

- Confidence tiers for each conclusion

AI doesn’t replace critical thinking — it amplifies disciplined workflows. The mistake is using AI to skip steps; I use it to strengthen each step.

Scaling qualitative insights without losing authenticity

AI is absolutely reshaping how we leverage user behavior data, but not in the “replace real users with synthetic ones” way many imagine. Instead, I see it as a force multiplier that amplifies real qualitative data rather than substituting for it.

Here’s how I apply it:

- When analyzing JTBD interviews or open-ended survey responses, I use AI to extract patterns from the actual transcripts, but only after tightly controlling the process.

- I never bulk-dump all transcripts and ask for “themes.” That’s where hallucination creeps in. Instead, I walk the AI through a step-by-step review and enforce one rule: each insight must cite the exact quote or timestamp it was derived from. I then verify each finding manually before considering it valid.
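A simple automated pre-check can support the citation rule before the manual pass. The sketch below assumes each AI-generated insight is a dictionary with hypothetical `claim` and `quote` keys, and it flags any insight whose quote cannot be found in the transcript. It only handles quotes, not timestamps, and it is a filter ahead of human verification, not a substitute for it.

```python
import re


def _normalize(text):
    """Lowercase and collapse whitespace so line wraps don't cause false misses."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unsupported_insights(insights, transcript):
    """Return insights whose cited quote does not appear in the transcript."""
    haystack = _normalize(transcript)
    return [
        item
        for item in insights
        if not item.get("quote") or _normalize(item["quote"]) not in haystack
    ]


# Hypothetical transcript and AI output, for illustration only.
transcript = "I almost gave up at checkout.\nThe shipping cost showed up too late."
insights = [
    {"claim": "Late shipping costs cause friction",
     "quote": "shipping cost showed up too late"},
    {"claim": "Users want a loyalty program",
     "quote": "I would love loyalty points"},
]

for item in unsupported_insights(insights, transcript):
    print("No supporting quote found for:", item["claim"])
```

Anything flagged here is either a paraphrase or an invention, and in both cases it should not reach a report labeled as real user input.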

A real use case:

In one project, I was analyzing 7 long-form interviews. Rather than running a traditional affinity-mapping exercise, I fed the AI one transcript at a time, tagged user jobs, struggles, and desired outcomes, and then mapped them back to funnel friction points. This allowed me to go from raw data to actionable hypotheses in less than a day, without compromising the integrity of the insight.

Don’t trust AI to “fill the gaps.” That’s where bias creeps in. Always segregate insight tiers: what’s real user input vs. what’s AI inference or extrapolation. I flag those clearly in my client reports to maintain transparency and trust. AI can scale qualitative synthesis, but only when anchored in data integrity and human validation.

Use VWO Copilot for behavioral analysis to automatically scan heatmaps, session recordings, and surveys for recurring friction points and behavioral patterns. You can manually validate these insights and add them to your testing pipeline, allowing you to focus on strategic decisions and experimentation priorities rather than repetitive analysis.

CRO adoption challenges in Arab markets

Across years of auditing both global and Arab eCommerce brands, I’ve seen a few systemic gaps emerge consistently, especially in MENA:

Blind imitation of global giants

Many regional brands try to copy design patterns from global players like Zara or ASOS, thinking “if it works for them, it’ll work for us.”

But those brands have brand equity and user trust. Local brands, by contrast, need to earn that trust first, which requires totally different UX flows, persuasion techniques, and social proof strategies.

Underestimation of digital psychology, especially in Arabic

Despite Arabic accounting for over 50% of searches in MENA, the psychological and persuasive frameworks used are often English-first, then directly translated. That kills performance. Language structure, word order, and emotional triggers differ in Arabic — meaning true persuasion requires native-first copy, not translation.

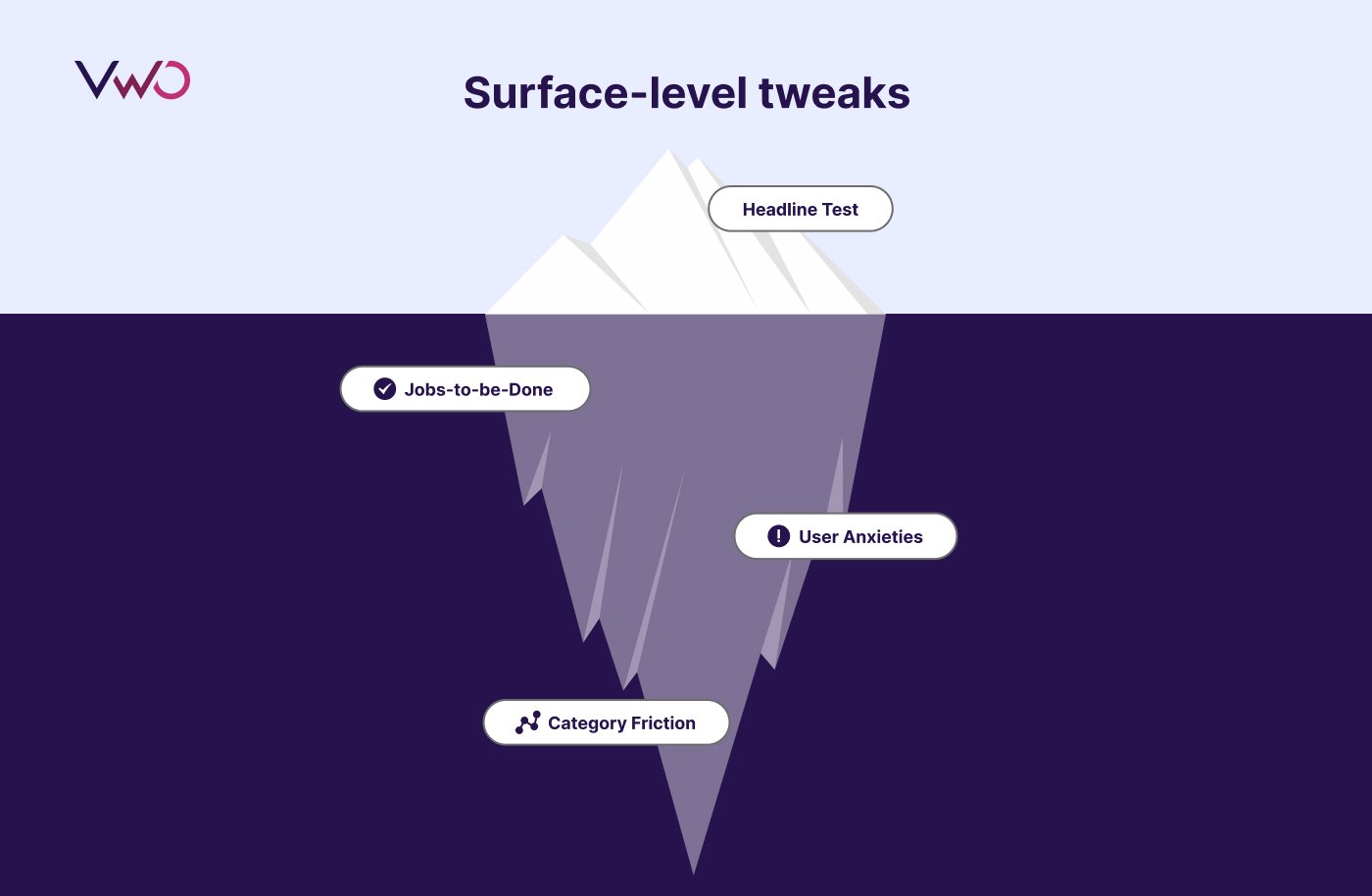

Tactical execution without strategic structure

Brands often test surface-level elements, like hero headlines, without understanding the underlying jobs-to-be-done, user anxieties, or category-specific friction. Without that strategic framing, they misinterpret results and abandon tests that had potential.

What’s needed is not just more experiments but a shift in mindset: from copying tactics to understanding why those tactics work and how local context affects them.

Further, most Arab brands are still in the awareness-building stage of CRO. That’s precisely why I launched one of the region’s first Arabic CRO courses: to demystify the practice and show that it’s not just for global tech companies.

What I’ve seen is this:

- Once the fundamentals click — data literacy, psychology, funnel mechanics — teams get excited.

- But when AI is introduced too early, it becomes a distraction or, worse, a crutch.

So I teach it in layers:

- The course runs for 8 weeks, and we only introduce AI in the final modules, once students can do everything manually. That way, AI becomes an accelerator, not a shortcut.

- We teach them how to build their own CRO assistants, trained on their own work, that can help them strategize faster, but only after they understand what makes a good strategy in the first place.

The key is positioning AI as augmentation, not replacement. It gives them speed, structure, and insights, but only if they approach it with the right mindset.

CRO in the region is just getting started, but the hunger is there. When you combine foundational education with powerful tooling, the results are transformational.

What AI can never replace in CRO

There are layers of human understanding that AI just can’t replicate — at least not yet.

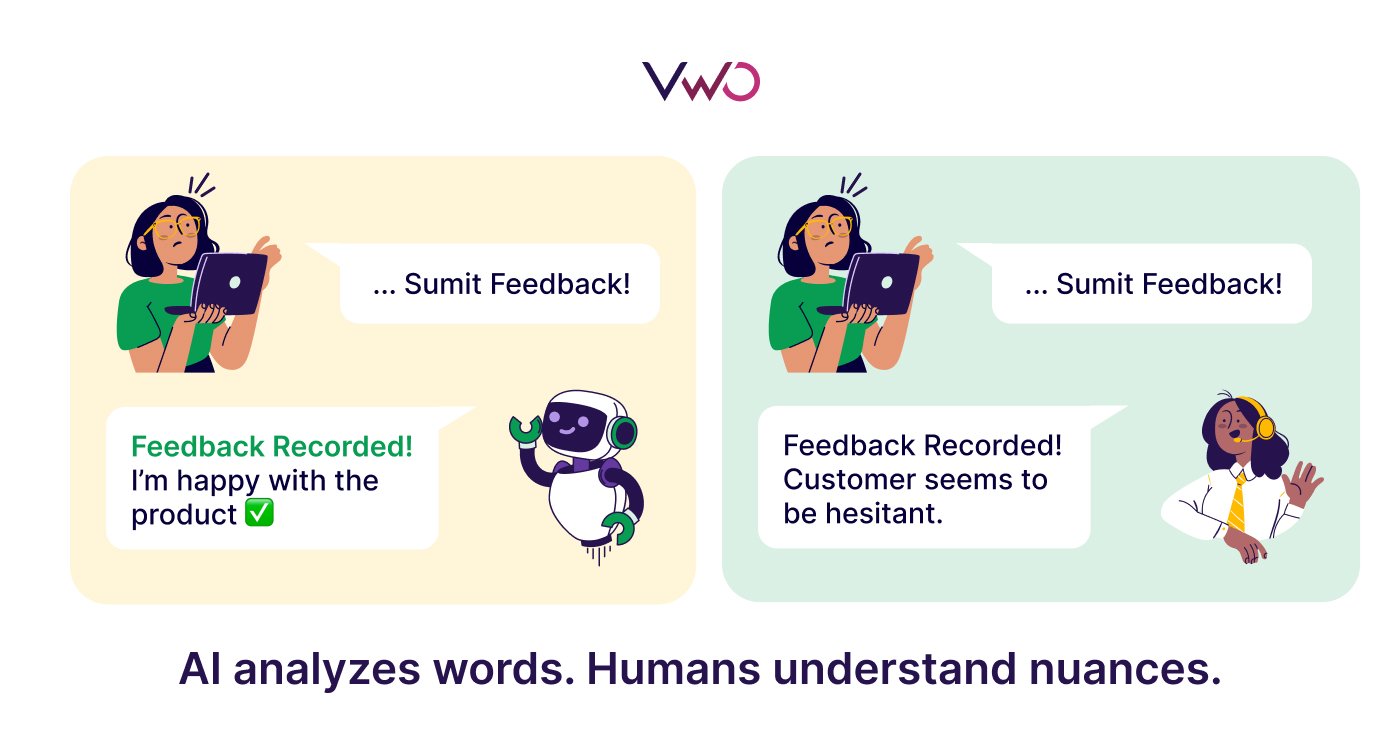

For example, during jobs-to-be-done interviews, I can often pick up on non-verbal cues: when a user pauses before answering, shifts tone when describing a problem, or even changes their posture when talking about a pain point. AI can analyze words, but it can’t feel tension or excitement between the lines.

Empathy, emotional intelligence, cultural nuance — those are still very human territories.

I once ran a JTBD analysis where the AI flagged a user’s comment as a key moment. They had said they were ‘happy with the product.’ On paper, yes, it looked like a win. But I was in the interview. I could tell, from tone and body language, that the statement was superficial.

What really mattered was something the user said later, with more energy and detail, about the product’s flexibility. AI completely missed it.

AI can see the surface, but humans can read the weight of meaning.

The value testing platforms should offer

The shift must be from tools to decision-making workflows. What matters most now is making platforms strategically smart, not just operationally fast. Platforms should help teams run not just more tests, but the right tests, based on past learnings and behavior signals.

What can be prioritized:

Autonomous test synthesizer

A system that reads past experiments and suggests new hypotheses — not randomly, but based on what’s actually worked in similar contexts.

Real-time personalization engine

Move beyond static A/B tests to AI-driven on-site personalization that adapts page structure and content based on real-time behavior signals.

AI assistants for users

More brands should embed GPT-powered chat on-site to guide, recommend, and remove friction across the funnel. These are not just support bots; they’re conversion assistants.

Custom GPT companion

This is something I already implement for clients — a GPT trained on their test logs, personas, brand rules, and content. It helps them brainstorm ideas, summarize past learnings, and prepare new landing pages — instantly. Experimentation platforms should integrate this concept natively.

Here’s how I use AI today:

- Variation prioritization: I feed previous A/B test logs into my custom GPT (CROVA). It ranks variation ideas based on past success patterns, page type, and audience behavior, making prioritization faster and more evidence-based.

- Sequential learning: Instead of storing results in static docs, I train my GPTs to interpret them. They can tell me which persuasion principles worked across multiple tests, and help shape the next test strategy accordingly.

- Guardrails: While I don’t use automated guardrail alerts yet, I optimize the system prompts in my custom GPTs to reduce hallucination, force citation from source data, and label confidence levels (e.g., “based on test X with n=20K, CVR improved by Y%”).
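The idea of evidence-based prioritization can also be illustrated without an LLM. The sketch below is not how CROVA works; it is a simplified stand-in that assumes a test log where each entry records a hypothetical `principle`, `page_type`, and `won` flag, then ranks new ideas by the smoothed win rate of similar past tests.

```python
from collections import defaultdict


def win_rates(test_log):
    """Smoothed win rate per (principle, page_type), using add-one smoothing."""
    counts = defaultdict(lambda: [0, 0])  # key -> [wins, total]
    for test in test_log:
        key = (test["principle"], test["page_type"])
        counts[key][0] += int(test["won"])
        counts[key][1] += 1
    return {key: (wins + 1) / (total + 2) for key, (wins, total) in counts.items()}


def rank_ideas(ideas, test_log, prior=0.5):
    """Sort ideas by past win rate; combinations never tested get the prior."""
    rates = win_rates(test_log)
    return sorted(
        ideas,
        key=lambda idea: rates.get((idea["principle"], idea["page_type"]), prior),
        reverse=True,
    )


# Hypothetical log and backlog, for illustration only.
log = [
    {"principle": "social_proof", "page_type": "product", "won": True},
    {"principle": "social_proof", "page_type": "product", "won": True},
    {"principle": "urgency", "page_type": "product", "won": False},
    {"principle": "urgency", "page_type": "product", "won": False},
]
backlog = [
    {"name": "Countdown timer", "principle": "urgency", "page_type": "product"},
    {"name": "Low-stock badge", "principle": "scarcity", "page_type": "product"},
    {"name": "Review carousel", "principle": "social_proof", "page_type": "product"},
]

for idea in rank_ideas(backlog, log):
    print(idea["name"])
```

The smoothing keeps a single lucky win from dominating the ranking, which matters when the test history is short.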

AI can also detect micro-trends that we often miss in test analysis:

- Segment-level anomalies

- Interaction effects

- Behavioral clusters tied to outcomes

It can also answer the “So what?” questions for stakeholders. For example:

“Why did this variant win?”

“Which segment should we retarget based on this result?”

AI becomes the real-time analysis partner that helps CROs focus on impact, not just data navigation.

Future of AI in optimization

We’re on the edge of two major frontiers:

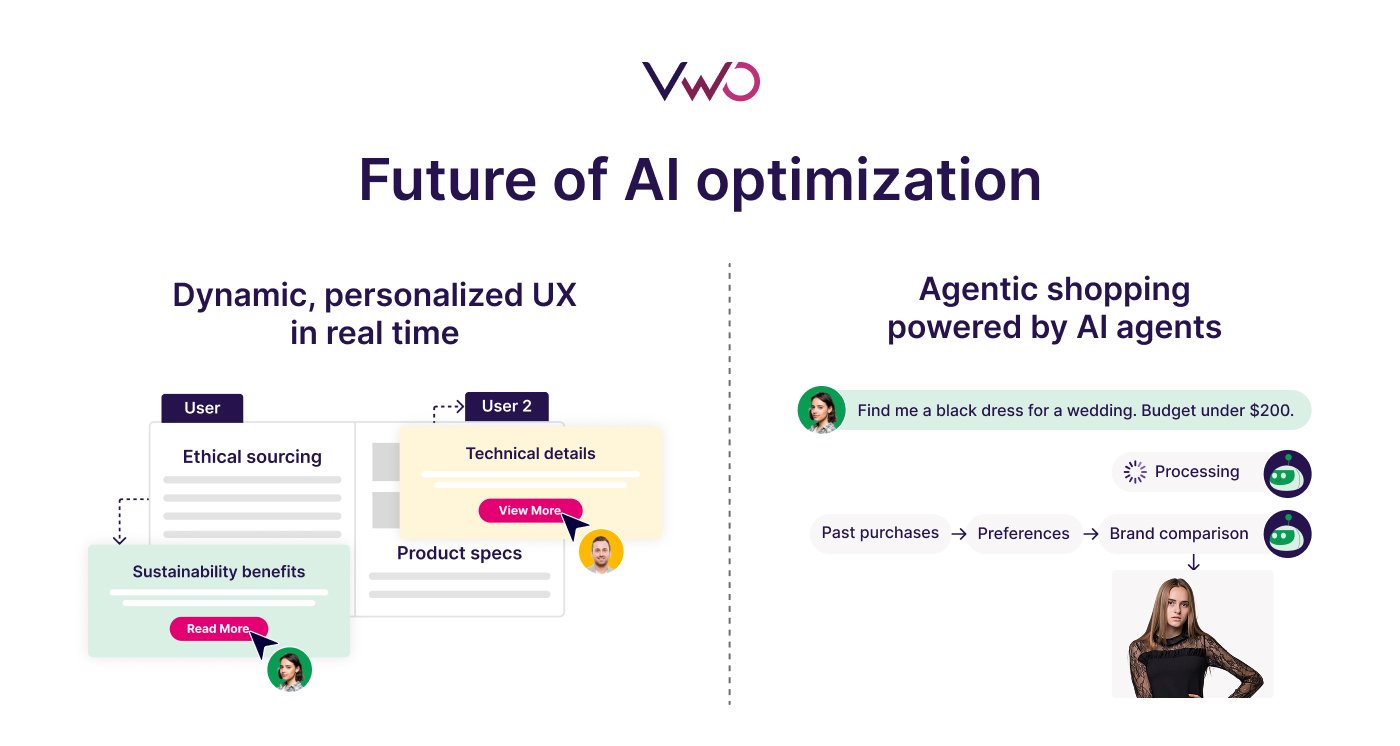

Dynamic, personalized UX in real time

Imagine a website that doesn’t just A/B test variants for groups, but adapts its layout and messaging per user in real time.

If a user dwells on ethical sourcing content, the site reconfigures to highlight sustainability benefits. If another user skims product specs, the site prioritizes technical details upfront.

That’s not science fiction — it’s agent-driven UX personalization, and it’s coming fast.

Agentic shopping

Soon, users won’t browse manually. They’ll just tell their assistant:

“Find me a black dress for this Friday’s wedding. Budget under $200.”

And their AI agent will:

- Pull from past purchase data

- Know their size and style

- Compare across their preferred brands

- Order automatically

This means we’ll need to optimize websites for AI agents, not just humans. Structured data, clarity, and crawlability will directly impact conversion for agents.

The first proof point?

It’s already happening. My new startup, Zaher.AI, focuses on Generative Engine Optimization (GEO) — optimizing brand visibility in AI tools like ChatGPT, Gemini, and Perplexity.

We’re seeing clients already getting traffic and conversions from LLM-generated recommendations, which is proof that AI is reshaping the discovery layer. And as AI agents take over more of the buyer journey, optimization will shift from users to protocols.

CRO is evolving into Agent Experience Optimization (AXO) — and I’m excited to be part of shaping that future.

Where AXO fits in the CRO plan:

- Top-of-Funnel: Ensure your brand is represented correctly and compellingly in generative responses from AI agents.

- Mid-Funnel: As AI agents “navigate” pages to fetch answers or perform tasks, your site must be structured for comprehension, not just design.

- Post-Click: Move beyond personalization for users. AXO anticipates how agents interpret user signals and adapts experiences accordingly.

Practical steps to start AXO:

- Run a GEO audit: Check not only visibility in LLMs but also message continuity between AI intros and landing page delivery (ad-to-LP consistency).

- Structure for machines: Use clear H1s, schema, and tagged content to improve machine interpretation.

- Design for adaptability: Create modular layouts that AI agents can restructure dynamically.

- Test agent flows: Ask ChatGPT to find a product or answer on your site. Fix where it fails.

- Monitor agent traffic: Tools like Zaher.AI and Semrush help measure agent-originated traffic — treat this as a new acquisition channel.
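As one concrete example of "structure for machines," the sketch below generates schema.org `Product` markup as JSON-LD, a common way to expose structured product data to crawlers and agents. The helper names and sample values are hypothetical, and a production version would also need to escape any `</script>` sequence inside the data before embedding it in a page.

```python
import json


def product_jsonld(name, description, price, currency, url, in_stock=True):
    """Build a schema.org Product object as a JSON-LD string."""
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "url": url,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
    }
    # ensure_ascii=False keeps Arabic (and other non-ASCII) text readable.
    return json.dumps(data, indent=2, ensure_ascii=False)


def as_script_tag(jsonld):
    """Wrap JSON-LD in the script tag used to embed it in an HTML page."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


# Hypothetical product, for illustration only.
markup = product_jsonld(
    name="فستان أسود",
    description="Black evening dress",
    price=149,
    currency="USD",
    url="https://example.com/products/black-dress",
)
print(as_script_tag(markup))
```

Once markup like this is live, the "test agent flows" step gives a quick way to check whether an assistant actually picks up the price and availability.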

Agent Experience Optimization isn’t a distant prospect; it’s already happening. The sooner brands align with agent behavior, the stronger their discoverability and conversions will be.

Conclusion

I hope experts eager to leverage AI in optimization found useful starting points here, along with the caveats that should never be ignored.

To turn these ideas into action, you need a platform that brings speed, structure, and intelligence to your workflow. With capabilities like AI-driven insights, automated variation creation, and personalized experiment ideas, VWO helps teams run smarter tests, faster. Book your personalized demo today.