A/B Testing vs Split Testing: When & How to Use Each

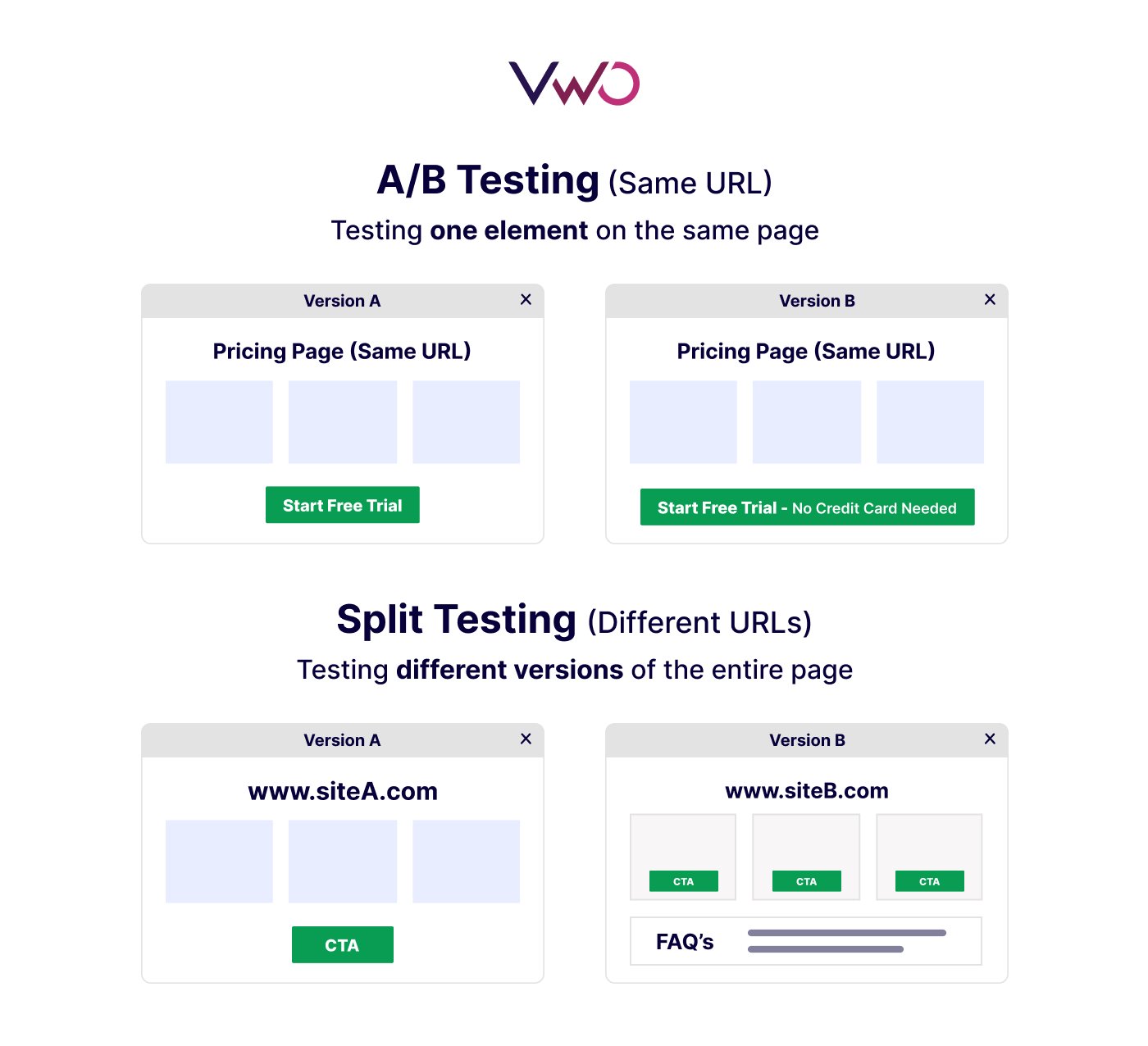

The main difference between A/B testing and split testing lies in their scope. A/B testing compares variations of the same page, while split testing evaluates entirely different pages hosted on separate URLs.

Now imagine a product manager planning to improve a pricing page. The team has ideas ranging from rewriting a few CTAs to restructuring the entire page layout. The question isn’t whether to test, but which testing method makes sense for the change being considered.

This is where many teams hesitate. Without a clear mental model, it’s easy to default to familiar approaches, over-test small changes, or avoid larger experiments altogether. The uncertainty isn’t about capability; it’s about understanding the scope of the change you’re trying to validate.

To make that choice clearer, let’s walk through how these two methods differ in practice, and when each one fits best in real-world experimentation.

What is the difference between A/B testing and split testing?

Although A/B testing and split testing are often grouped under the same general concept (showing different versions to different groups of visitors and comparing the results), the two methods work quite differently.

A/B testing keeps users on the same page and URL and compares a variation (version B) against the original control (version A). The experiment typically focuses on individual design elements, such as headlines, CTAs, layout tweaks, or copy. Because everything happens within the same environment, this method is ideal for quick iterations, smaller UI decisions, and controlled experiments that produce reliable data and reach statistical significance with fewer visitors.
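
To make the same-URL mechanics concrete, here is a minimal Python sketch of one common way to assign visitors, assuming deterministic hash-based bucketing. The function names, the experiment ID, and the CTA copy are hypothetical, and this is not a description of how VWO or any particular platform implements assignment.

```python
import hashlib

# Hypothetical CTA copy for each arm; both arms render on the same URL.
CTA_TEXT = {
    "A": "Start Free Trial",
    "B": "Start Free Trial, No Credit Card Needed",
}


def assign_variant(user_id: str, experiment_id: str, split_b: float = 0.5) -> str:
    """Deterministically bucket a visitor into arm "A" or "B".

    Hashing experiment_id + user_id keeps a returning visitor in the same
    arm on every page load without storing any server-side state.
    """
    digest = hashlib.sha256(f"{experiment_id}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    position = int(digest[:8], 16) / 2**32
    return "B" if position < split_b else "A"


def render_cta(user_id: str) -> str:
    # Same page, same URL: only the CTA string differs between arms.
    return CTA_TEXT[assign_variant(user_id, "pricing-cta-test")]
```

Hashing instead of picking randomly on each request means a visitor who reloads the page keeps seeing the same version, which keeps the comparison clean.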

Split testing, on the other hand, compares different pages hosted on separate URLs. Each version can change multiple elements, use a different layout, or introduce an entirely new user flow. Instead of rendering a variation on the same page, the platform sends different groups of users to different URLs. This approach is ideal when teams want to evaluate a redesigned template, a new onboarding flow, or alternative page structures across the entire user journey.
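
Split URL routing can be sketched the same way. This minimal Python example assumes a server-side redirect and two hypothetical URLs on example.com; the function names are illustrative, and real platforms may route visitors on the client or at the edge instead.

```python
import hashlib
from urllib.parse import urlsplit, urlunsplit

# Hypothetical URLs: the control and the redesigned page live at separate paths.
SPLIT_URLS = {
    "A": "https://example.com/pricing",
    "B": "https://example.com/pricing-new",
}


def bucket(user_id: str, experiment_id: str) -> str:
    """Deterministic 50/50 assignment that stays stable across visits."""
    digest = hashlib.sha256(f"{experiment_id}:{user_id}".encode("utf-8")).digest()
    # The first byte is an int in 0..255; the lower half goes to B.
    return "B" if digest[0] < 128 else "A"


def split_url_target(user_id: str, requested_url: str) -> str:
    """Return the URL this visitor should be redirected to.

    The query string of the original request (for example, UTM campaign
    parameters) is carried over so attribution survives the redirect.
    """
    arm = bucket(user_id, "pricing-redesign")
    target = urlsplit(SPLIT_URLS[arm])
    query = urlsplit(requested_url).query
    return urlunsplit((target.scheme, target.netloc, target.path, query, target.fragment))
```

Carrying the query string over is a deliberate choice: if campaign parameters are dropped during the redirect, visitors sent to the variation URL may show up as direct traffic in analytics.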

A concise example to see both in action

Let’s say your team wants to improve conversions on a pricing page.

- A/B testing approach (same URL, one variation):

Visitors land on the same pricing page, but version B updates only the CTA, changing “Start Free Trial” to “Start Free Trial, No Credit Card Needed.” Everything else on the page remains unchanged. This helps determine whether a single change to a high-intent call to action increases conversions.

- Split testing approach (different URLs, broader differences):

Traffic is split between two versions of the pricing page. One uses the existing layout; the other introduces a new tier structure, reworked feature groups, and a short FAQ. Here, you’re not testing one variation; you’re testing different versions of the entire page to obtain meaningful data about a redesigned experience.

Both methods aim to improve UX and conversions, but they answer different questions. A/B testing tells you whether one variation of the same page performs better, while split testing helps determine whether a new design direction is worth adopting.

Now that we’ve clarified how the two testing methods differ, let’s identify when each method is best suited for your experimentation strategy.

When to use A/B testing

A/B testing is the right method when you want to optimize specific parts of your digital experience, such as emails, landing pages, ads, web pages, or app flows, without changing the entire structure.

Focus & purpose

A/B testing is designed to measure the impact of one intentional change at a time within an existing experience. It keeps the page structure, traffic source, and user context constant, so the effect of a single element can be isolated with minimal noise.

This makes it suitable for decisions where clarity, speed, and low complexity matter more than exploring multiple interacting changes.

Advantages

- Requires less traffic and reaches statistical significance faster than broader testing methods.

- Easy to set up because both versions run on the same URL with only one variation.

- Accessible to teams at any experimentation maturity level due to low setup and operational complexity.
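
How much traffic a test needs depends mostly on the baseline conversion rate and the smallest lift worth detecting. The sketch below uses the standard normal-approximation sample-size formula for comparing two proportions; the 5% significance level and 80% power defaults are common conventions rather than VWO settings, and VWO’s own statistics engine may calculate this differently.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(baseline: float, relative_lift: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH arm of a two-arm test (two-sided z-test).

    baseline: control conversion rate, e.g. 0.05 for 5%.
    relative_lift: smallest relative lift worth detecting, e.g. 0.20 for +20%.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)
```

With a 5% baseline and a +20% minimum lift, this works out to roughly 8,000 visitors per arm; lowering the target lift to +10% roughly quadruples that, because the required sample grows with the inverse square of the difference being detected.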

Disadvantages

- Only evaluates one variation at a time, limiting the scope of insights.

- Not ideal for evaluating new layouts, templates, or structural changes.

- Can slow progress if large sites rely solely on micro-tests.

Example

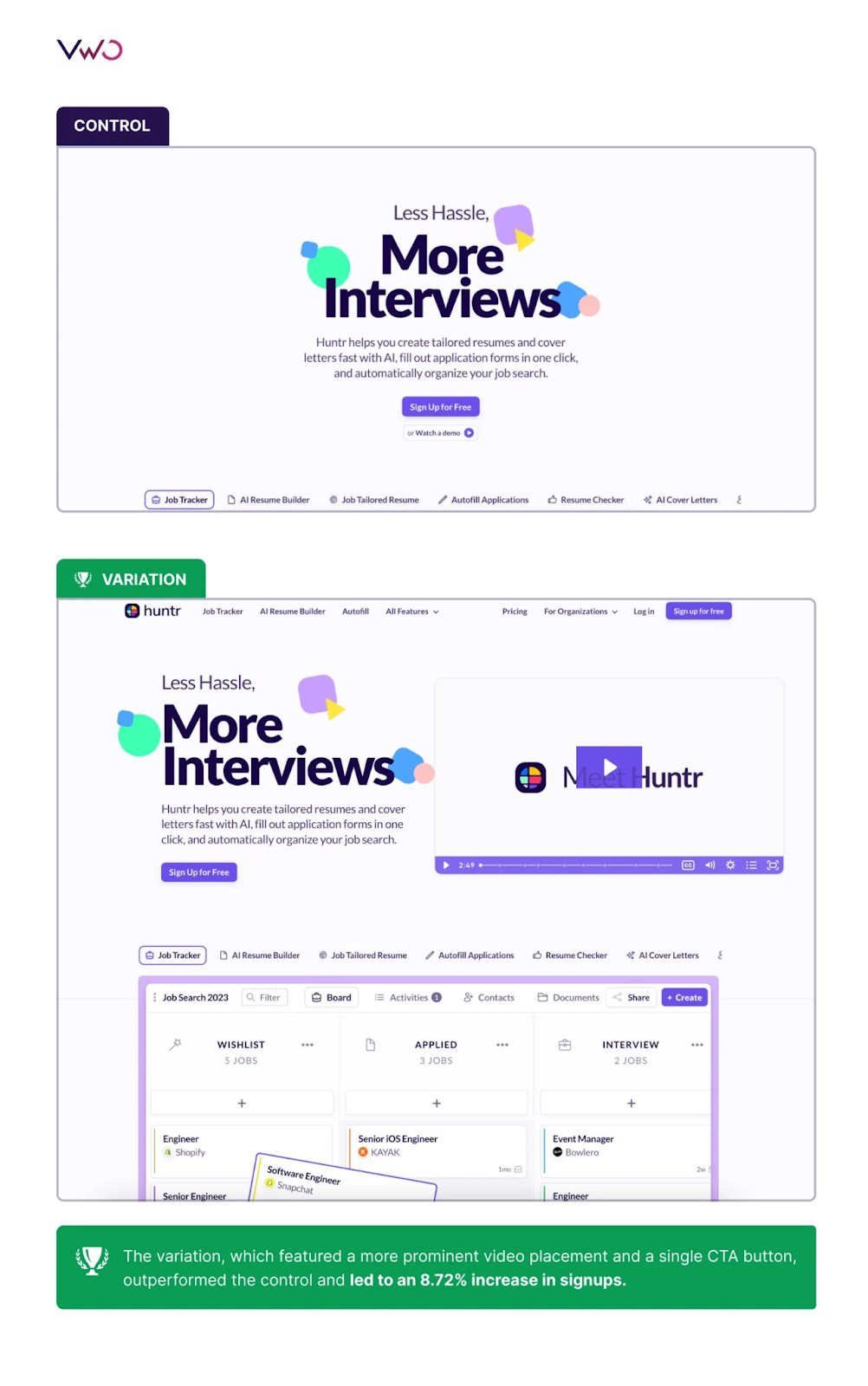

Huntr’s homepage A/B test with VWO

A great example of effective A/B testing comes from Huntr, a fast-growing job search platform. The team suspected that having two CTAs (“Sign up” and “Watch the demo”) on their homepage created friction. To validate this, they ran an A/B test on the same URL, comparing their control version with a simplified variation.

- Version A (control): Two existing CTAs.

- Version B (variation): One primary sign-up CTA and a clearly visible demo video placed beside it.

This single, focused change delivered an 8.72% increase in sign-ups, showing that clarifying the user path and giving visitors easy access to helpful content can significantly improve conversions without redesigning the entire page. Read the full story here.
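
An increase like 8.72% is a relative lift: the change in conversion rate divided by the control’s rate. Whether a lift is real or noise is usually checked with a significance test. This sketch uses a pooled two-proportion z-test as one common choice; the visitor and sign-up counts are made up for illustration and are not Huntr’s data.

```python
from math import sqrt
from statistics import NormalDist


def relative_lift(rate_control: float, rate_variant: float) -> float:
    """Relative change of the variant over the control (0.0872 means +8.72%)."""
    return (rate_variant - rate_control) / rate_control


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical counts for illustration only (not Huntr's data).
visitors_a, signups_a = 20_000, 1_000   # control: 5.00% sign-up rate
visitors_b, signups_b = 20_000, 1_087   # variation: 5.435% sign-up rate
lift = relative_lift(signups_a / visitors_a, signups_b / visitors_b)
p_value = two_proportion_p_value(signups_a, visitors_a, signups_b, visitors_b)
print(f"lift={lift:.2%}, p={p_value:.3f}")
```

With these made-up counts, a lift of about this size lands near the conventional 0.05 threshold, a reminder that the volume of traffic matters as much as the size of the lift.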

Download our A/B testing template to plan your experiments, define hypotheses, and track results with confidence.

When to use split testing

Split testing works well when you need to assess large, end-to-end changes. By routing visitors to different URLs, it allows teams to compare fundamentally different page structures, layouts, or flows rather than isolated elements.

Focus & purpose

Split testing focuses on evaluating how a completely reimagined experience performs, from layout and structure to flow and content hierarchy. Its purpose is to understand which direction delivers stronger user engagement, smoother UX, and better business outcomes, enabling teams to make data-driven decisions even when multiple components shift together.

Advantages

- Evaluates entire experiences where multiple changes are intentionally bundled.

- Compares performance across separate URLs, making it suitable for redesigns or migrations.

- Enables validation of major changes before committing them site-wide.

Disadvantages

- Requires more traffic because visitors are split across multiple versions/URLs.

- More effort to set up separate URLs or environments.

- Harder for data teams to pinpoint which specific element improved performance.
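
The traffic cost of adding versions is simple arithmetic: every arm needs its own full sample. A minimal sketch, assuming a hypothetical per-arm requirement (for example, from a sample-size calculation) and steady daily traffic:

```python
from math import ceil


def days_to_complete(visitors_per_arm: int, arms: int, daily_visitors: int,
                     traffic_share: float = 1.0) -> int:
    """Days until every arm reaches its required sample size.

    traffic_share is the fraction of daily visitors enrolled in the test
    (1.0 means all of them). Each extra arm adds another full per-arm
    sample to the total that must be collected.
    """
    enrolled_per_day = daily_visitors * traffic_share
    return ceil(visitors_per_arm * arms / enrolled_per_day)
```

For example, at 1,000 enrolled visitors a day, a hypothetical requirement of 8,000 visitors per arm takes 16 days with two versions and 24 days with three.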

Example

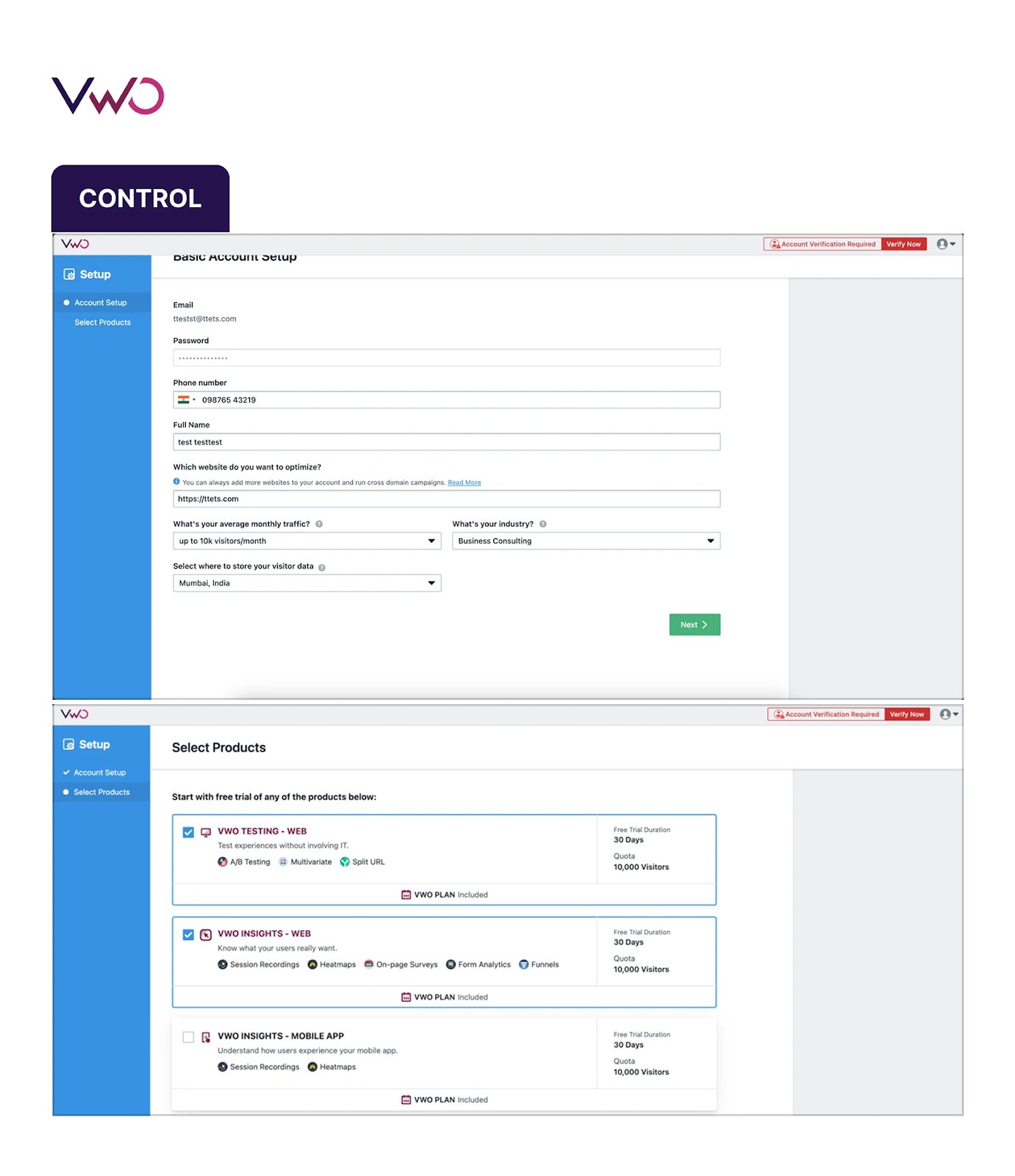

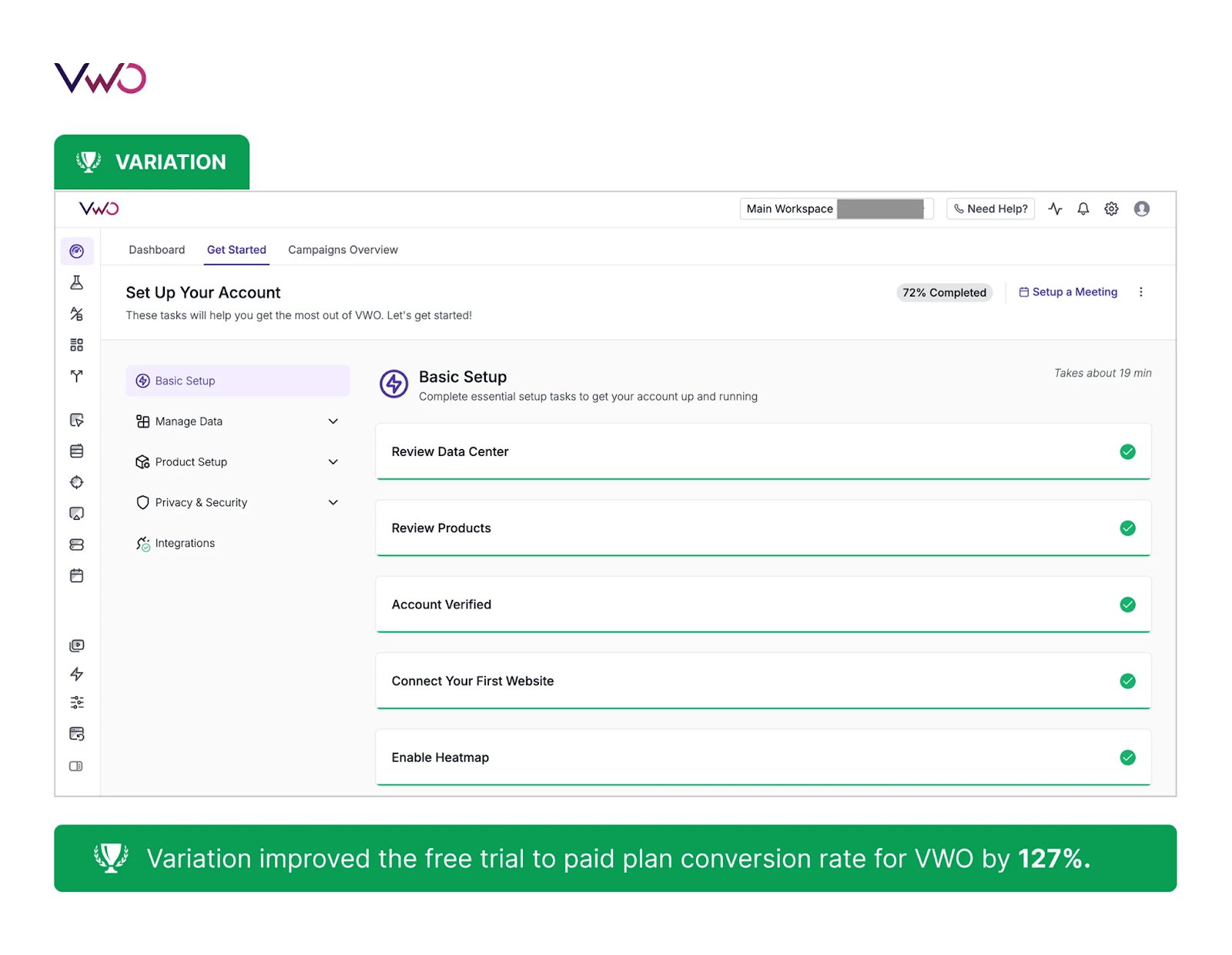

During a recent onboarding revamp, the VWO team needed to evaluate how a completely new user journey would perform compared to the existing one. Because the changes involved multiple elements and a redesigned flow, they used split testing, directing users to two different onboarding experiences hosted on separate URLs.

- Version A (control): The original onboarding flow with scattered setup steps and a traditional free-trial form.

- Version B (variation): A new centralized “Get Started” experience featuring a structured checklist, clearer activation steps, and improved feature discovery.

The redesigned experience produced a 127% improvement in free-trial-to-paid conversions, indicating that a clearer, more structured onboarding journey helped users progress with greater confidence. Split testing gave the team the confidence they needed to adopt the new flow across the product.
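
A 127% improvement is a relative lift, so it describes how far the variation’s rate rose above the control’s, not the resulting conversion rate itself. Using a hypothetical 4% baseline purely for illustration (the actual rates are not stated here), with p_A as the control rate and p_B as the variation rate:

```latex
\text{relative lift} = \frac{p_B - p_A}{p_A} = 1.27
\quad\Longrightarrow\quad
p_B = (1 + 1.27)\,p_A = 2.27 \times 4\% = 9.08\%
```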

Explore this guide to split testing, featuring strategies, examples, and tips for running effective experiments.

Integrating A/B and split testing into your strategy for maximum impact

High-performing experimentation programs don’t rely on a single testing method. A/B testing and split testing are applied based on the scope and impact of the change being evaluated, not as alternatives or progression steps.

If you start with split testing

Use split testing when you are exploring new layouts, workflows, or structural changes and need to determine whether a different experience is worth adopting. Once a winning direction is identified, A/B testing can then be used to refine that experience by optimizing individual elements such as messaging, CTAs, visual hierarchy, and form interactions.

If you start with A/B testing

Use A/B testing when the existing experience is broadly sound and the goal is incremental improvement. By testing isolated changes, teams can extract performance gains without introducing major disruption. Insights from these tests may later inform larger structural changes, which can then be validated through split testing if needed.

VWO Testing supports this workflow by allowing teams to run split URL tests, A/B tests, multivariate tests, and multipage testing within a single experimentation environment. Teams can route incoming traffic to different versions, track test results, and evaluate conversion rates without switching tools or fragmenting analytics data. This allows teams to run different types of experiments as needed, based on their priorities, timelines, and level of change.

Watch the webinar to learn how to strengthen your A/B testing approach with proven methods for hypothesis design, prioritization, and result analysis.

Use behavioral insights to guide experiments

Equally important is understanding why one version performs better than the other. VWO Insights provides behavioral context through heatmaps, session recordings, form analytics, funnels, and on-page surveys, helping teams see how users engage with pages and flows.

Behavioral insights help teams decide not just what to test, but how to test it. By revealing whether friction is limited to specific elements or rooted in the overall experience, VWO Insights guides teams toward the appropriate testing approach.

Use VWO Copilot to interpret large heatmap datasets and summarize hundreds of session recordings into clear behavioral patterns. By reducing manual review, teams save time and can plan a more focused, evidence-led testing strategy.

Frequently asked questions (FAQs)

What is an A/B test used for?

An A/B test is used to compare two or more variations of the same page or element to determine which one performs better against a defined goal, such as higher conversions, clicks, or engagement. It helps teams make data-backed decisions by isolating the impact of a single change.

What is the objective of A/B testing?

The objective of A/B testing is to incrementally improve performance by identifying which version of an element, such as a headline, CTA, or layout, drives better results. It focuses on refining experiences using reliable data rather than assumptions.

When should you avoid A/B testing?

Avoid A/B testing when you’re evaluating large structural changes, full redesigns, or entirely new user flows. It’s also unsuitable when traffic is too low to reach statistical significance, or when many elements change at once and interact, which makes it hard to attribute the result to any single change.

What is the purpose of split testing?

The purpose of split testing is to compare fundamentally different versions of a page or experience hosted on separate URLs. It helps teams validate major design, layout, or workflow decisions and understand which overall direction performs better across the entire user journey.