Welcome to the 16th post of CRO Perspectives by VWO!

Each post brings a new voice from the experimentation world—people who are shaping how teams build, test, and scale better digital experiences. These conversations go beyond tactics. They uncover how real experts make experimentation work in the messiness of real organizations, across markets, cultures, and mindsets.

This post features a specialist whose testing lens is just as much about people as it is about platforms. Let’s dive in.

Leader: Sunita Verma

Role: Experimentation Specialist

Location: Singapore

Speaks about: Growth experimentation • Product testing • Cross-market insights • Personalization • Empathy-driven CRO

Why should you read the interview?

With a track record of driving experimentation at major commerce companies like H&M, FairPrice Group, and Zalora, Sunita Verma brings a rare blend of analytical rigor and human-centered thinking. Her work spans product growth, regional testing, and cross-functional collaboration across Asia. She’s helped teams shift mindsets, build experimentation culture from the ground up, and embed empathy into every stage of testing.

If you’re looking to scale CRO across diverse markets or wondering how to build stakeholder trust in a data-driven world, this interview is for you.

Disclaimer: All of the content below reflects Sunita’s personal thoughts, opinions, and beliefs, and does not necessarily reflect those of any companies she is or has been associated with.

What do shoppers value today?

As per Maslow’s hierarchy of needs, people will only start considering higher forms of thinking and needs once more basic needs have been met. We see young shoppers today are increasingly looking for responsible companies instead of ones that are able to give them the best value-for –money. This contrasts with trends we see in Gen X and Y shoppers who tend to rank price, value-for-money as top considerations for purchase.

This behavior presumably emerges as a result of the rapid rise in standard of living of the last few decades in several parts of Asia. For organizations, it becomes important to assess when the market’s population will start seeking higher-level needs—aligned with the market’s development—while planning to capture a new generation of consumers.

The higher levels of thinking and consideration in the younger generation is impressive. However, the rate at which this is becoming more widespread differs significantly from one Asian country to another (seemingly related to the standard of living within the country).

How to prioritize which ideas to test

I typically try to evaluate an idea based on the below factors:

Confidence:

How did this idea come about, and what sort of data or knowledge is backing it?

To maximize the existing data and knowledge that we have (without testing) to understand how confident we can be in the idea.

We’re looking to prevent the testing of ideas that are solely based on gut-feel (across my career so far, this request is usually one that is cascaded down from a HiPPO).

Impact and reach:

Will this idea make a big difference to user behavior or perception?

Once we know that the idea in itself comes from sound-reasoning, we’ll need to understand its chance in making enough of a difference to users such that the test has a good chance of being statistically significant. This is where the testing team would need to do some homework to understand the traffic, conversion, potential impact, and thus the minimum detectable effect (MDE). Stakeholders are not brought into this technical process but conversations with stakeholders will allow the team to understand the possible impact the idea might bring about.

However, if the test idea does not have a good statistical chance, our next step would be to understand if there are reasonable ways to change the test design (e.g. bolder variations, longer test durations, location of test, markets tested)

As you can see, this is a very RICE approach to prioritization and is a fairly popular tool in several prioritization frameworks across organizations.

It is important to engage stakeholders from the start. They need to feel responsible for the purpose of the test, as it should align with their goals and support their objectives. This has to be established right from the start to garner greater trust.

All the technical bits and calculations in the process do not need to be fully walked through to the stakeholders involved but a high-level overview is important for them to understand our outlook on a certain test. This reduces the need to get into lengthy explanations of the different parameters in testing and instead streamlines the conversation to the broad important concept to the layman and thus why we’re suggesting some changes to ideas or suggesting for some ideas to be reviewed/considered for another time.

Making sense of unexpected experiment outcomes

First off, be excited and curious about WHY. As excited as when you would see a positive result in a test. To me, it is critical to ensure you’re in that mindset to make sure you’re not bringing any biases when looking further into the results. If you feel nervous or anxious due to the results, then there are bigger issues than the results—there will need to be a mindset correction in the organization of what testing and learning are about.

Now, when we’re in the right mindset, try to dive further into the results to understand what is causing the results. This is highly dependent on what the test was about. Are different segments of users performing differently? Are there specific steps in the journey where users have fumbled?

Once all of the insights have been gathered to paint a fuller picture of why our customers are reacting in this way. It is time to share the results with the key stakeholders. I usually like to set the tone by making sure stakeholders understand that this has been a very valuable test as it has learnings that went against our judgments that could turn into actionable insights.

It is important to ensure that stakeholders view the results in a productive way instead of being discouraged. From there, it would be easier to discuss how we can utilize these learnings to potentially tweak the idea or drop the idea completely or even retarget the test to specific groups of users.

Designing experiments for local market behaviors

We would not have any tailoring or personalizations until we build a knowledge base of what works and what does not. While there are several beliefs and stereotypes about each market and how their population behaves—this might vary significantly from brand to brand within the same market.

As an example, in certain markets like Vietnam, it is no secret that it is relatively more deal-driven than the other Asia markets listed, and we start to understand how ideas tested start showing different results for this market compared to others. This gives us the ability to log this knowledge down in the testing library so that we can better design future tests.

A unique experience from a competitor in the market does not mean that we should hop on to a similar strategy because it aligns with their (not our) shoppers’ preferences. We’ll have to remind ourselves that our shoppers are unique and while we can borrow the thoughts of general behaviors and preferences of the market, this will need to be tested before it is acted upon. Hence, this might take several rounds of testing.

Which metrics matter most in experiments

Customer metrics are extremely important—from the bottom of the funnel (e.g., NPS) to top of funnel (brand awareness, brand consideration). These metrics should not be success metrics for experiments, as they are long-term metrics.

However, it is important that organizations pay heavy attention to them (possibly even more than profit) as the customer sentiment will reveal where course correction needs to happen for the business to continue thriving in the next few years.

I’ve not particularly found a specific metric that is a holy grail for experiments—the metrics highly depend on the test idea and parameters. What I do find to be of utmost importance, though, are guardrail metrics. Even just going through the process of thinking of the appropriate guardrail metrics for a test is a great exercise in and of itself to ensure we’re not trying to generate rosy test results but that we’re trying to understand the user behavior.

To pick the right guardrail metrics, it’s important to play the devil’s advocate.

For example, say the team has a great idea: testing a ‘Similar Product’ recommender on the product page to give users more options. The goal is to increase the likelihood that users come across something they’d like.

Now, it becomes our role to think about how this change might create a negative impact.

A potential guardrail for this test could be the cart-out rate or the number of days between adding to cart and checking out.

This helps us understand if giving users more choice upfront—via the similar product recommender—introduces the paradox of choice. In other words, whether too many options cause delayed decisions or, in some cases, complete abandonment.

Keeping testing rigor in a fast-paced culture

The core experimentation team will typically have a checklist of items that needs to be ticked in order for us to green-light the design of a test, and another checklist for test analysis. However, that’s the easy bit—there are many checklists out there shared by several CRO professionals that are very valid and comprehensive.

To ensure that the team is able to follow the checklists, it is vital that the experimentation team is treated as a ‘center of excellence’ in end-to-end testing (from design to analysis). Laying the groundwork for setting up the above environment is something that usually starts even before the organization’s first CRO team is hired.

We do typically manage expectations from the get-go with business stakeholders that the analysis period for a test will be 2 weeks, which gives sufficient time for the team to ensure that all checks and deeper dive analysis are completed without the interference of business stakeholders peeking at the results in the process. In this way, we’re able to then prepare a more nuanced results slide that allows us to ensure that the narrative of the final learnings shared closely aligns with our findings.

Learning is a key thing that I try to keep in mind when framing results (especially for tests with exciting results). It is important that the results sharing session re-centers stakeholders on the purpose of the test, what we have learned about our users, and how we can expand on this learning rather than being overly focused on a single metric’s percentage increase.

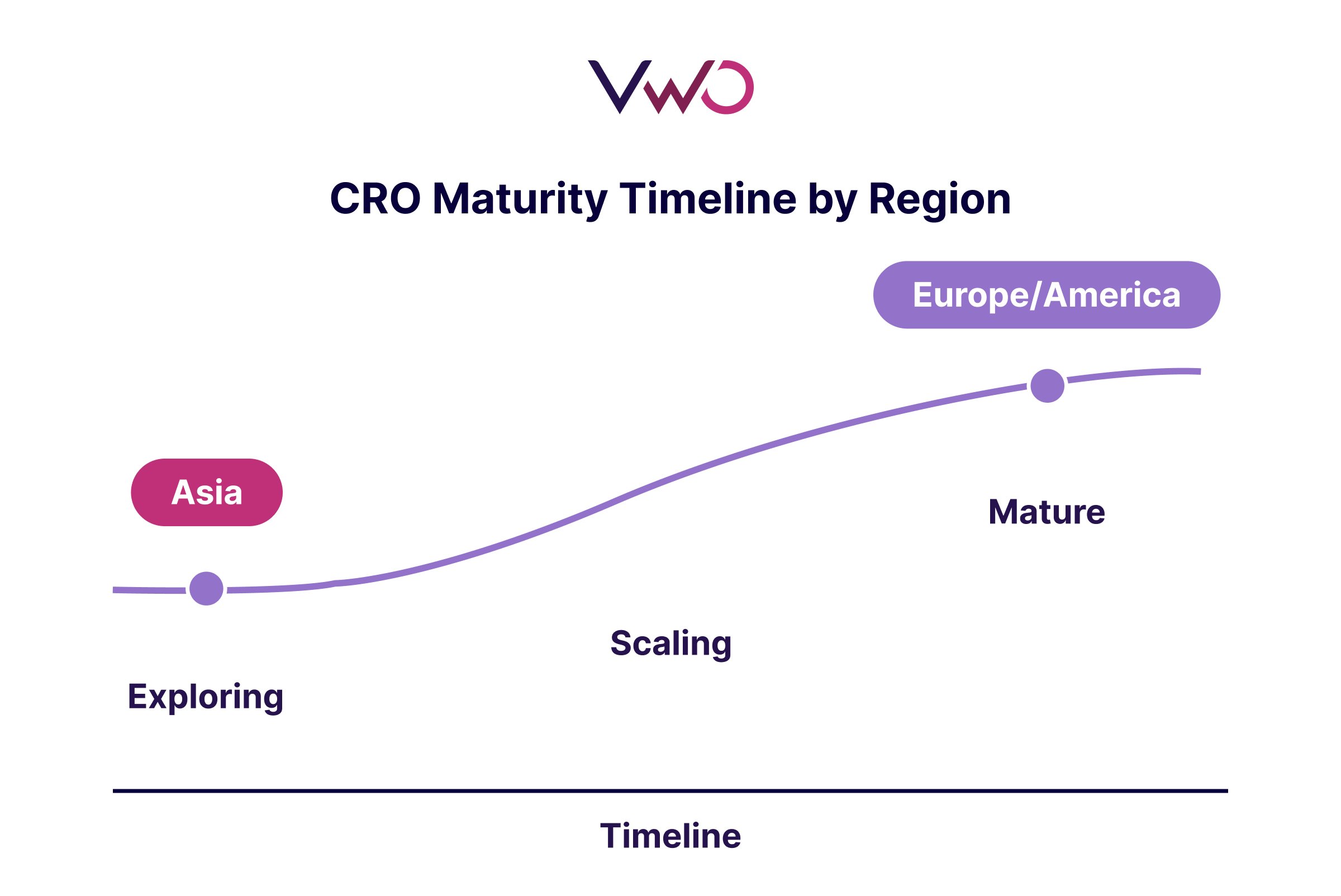

How CRO culture varies across Asia

It is definitely a much newer, up-and-coming area in Asia while other markets like Europe are much more mature in the concept. The receptiveness to it is really fully dependent on how the leaders in the organization view it and also what the employees are used to.

I am lucky enough that most folks I have come across are extremely receptive to it as there is already a top-down organizational communication in a continual, consistent manner that this will be the way forward.

However, there are definitely groups of people where much more work is needed on gaining receptiveness—and so far this has been with those who are used to functioning in the same way for the last decade or two.

This is very understandable as well and I can only imagine the fear for their roles when something like this is introduced. Hence, working with empathy again becomes key. This is not to say that these groups of people only exist in certain markets. They exist in every market as it is not market-dependent but rather organization and department-dependent.

Where AI can help CRO teams most

- Hypothesis generation: Potentially to understand user behavior faster than we can from existing datasets and to suggest ideas to try out (and even suggesting specific segments to focus on).

- Metric selection: It could also help teams with sometimes tricky decisions such as primary, secondary, guardrail metric by suggesting best options based on the test idea.

- Pre-test statistical checks: Suggestions of test duration, confidence, and power based on the test parameters and purpose.

- Variant creation: Automatic creation of variants based on optimizations that the AI has learned might potentially improve results (e.g., copy/assets). I believe I’ve already seen some players doing this (e.g., Pinterest Experimentation).

- Personalization: Automating the creation of different algorithms and the testing of them to continually find improvements to the logic via consistent tweaks.

Set a specific goal for any web page, and VWO Copilot will generate relevant testing ideas tailored to it. Then, seamlessly move forward with variation designs—created automatically with just a few prompts, without relying on design bandwidth.

Sunita’s bold beliefs behind big wins

I have alluded to this in my presentation at the APAC Experimentation Summit last year—empathy and relationship-building is a huge determinant of success in this role. Experimentation in general is treated as a cold topic with the main bulk of it being focused on calculations and designing scientific approaches—at least that was my own mindset when I first ventured into it.

However, over the years, I’ve come to realize that the main portion of my time is spent on people and building trust. Breaking down complex concepts to simpler ones; building relationships and trust that works to ease the acceptance of what I have to say; encouraging teams to think further outside the box without coming off as pushy; being understanding of the limitations that teams are working with and encouraging positive thinking of finding workarounds; recognizing the good work that others are putting in and ideating to help them grow toward their goal—the list goes on.

I thought this podcast episode was pretty good! It essentially highlights how CROs need to have the skills of salespeople. That said, the podcast mainly discusses the concept—actual implementation, of course, is something that’s honed through on-the-ground experience.

Building trust and relationships with the people involved is key to forming strong hypotheses and acting on test learnings, because without their cooperation, even the best testing process is pretty much useless.

Wrapping up

Experimentation isn’t just about metrics. It’s about learning and enabling teams to act on those learnings. As Sunita reminds us, empathy and testing rigour can go hand in hand.

If you’re experimenting across markets or trying to build buy-in from cross-functional teams, there’s a lot to take away from this post. And if you’re ready to get more out of your experiments, talk to VWO today.