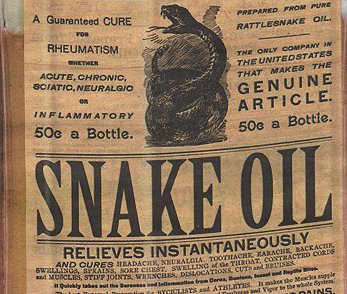

A/B testing is not snake oil

Recently on Hacker News, someone commented that A/B testing has become like snake oil, promising to be a panacea for increasing conversion rates. The comment troubled me, not because the commenter was right (he wasn't!), but because it reflects how some people view A/B testing. Let me state this plainly:

A/B testing does NOT guarantee an increase in sales and conversions

In fact, we recently featured a guest post by Noah of Appsumo, who revealed that only 1 out of every 8 split tests they run produces actionable results. Yep, read that again: only 1 out of 8 split tests works. Snake oil promises to cure every disease; A/B testing makes no such promise.


I think some people view split testing with suspicion because we publish a lot of A/B testing success stories. With a user base this large (6000+ at the time of writing), we see many of our customers getting good results all the time, and when they want to share those results with the world, we publish them on our blog.

So, when one week we publish a success story titled 3 dead-simple Changes increased sales by 15% and the next week another titled How WriteWork.com increased sales by 50%, some people naturally assume that A/B testing with VWO will guarantee sales growth. It won't. VWO is just an A/B testing tool (albeit a very good one!), and the real work is always done by the craftsman, not the tool they use.

Survivorship Bias: it helps to know a thing or two about it

Most success stories or case studies you read about on the Internet (whether about A/B testing, getting rich or losing weight) suffer from a bias known as survivorship bias. In the context of A/B testing, what it means is simple:

If you run 100 different A/B tests and only 1 of them produces good results, you will publish only that one good result, not the 99 unsuccessful ones.
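To make the survivorship effect concrete, here is a minimal Python sketch that simulates 100 hypothetical A/B tests in which the variation is, by construction, no better than the control. Both arms convert at an assumed 5% with an assumed 2,000 visitors each. A standard pooled two-proportion z-test at 95% confidence still flags a few tests as "winners" purely by chance; if only those were written up, readers would see nothing but success stories. The conversion rate, traffic, threshold, and function names are all illustrative assumptions, not VWO's statistics engine.

```python
import math
import random


def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation in either arm, so no evidence of a difference
    z = (conv_b / n_b - conv_a / n_a) / se
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_published_winners(num_tests=100, visitors_per_arm=2000,
                               true_rate=0.05, alpha=0.05, seed=42):
    """Run hypothetical tests where A and B share the SAME true conversion
    rate, and return only those that would look like wins (B ahead, p < alpha)."""
    rng = random.Random(seed)
    winners = []
    for test_id in range(num_tests):
        # Each visitor converts independently with probability true_rate.
        conv_a = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
        conv_b = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
        p = z_test_p_value(conv_a, visitors_per_arm, conv_b, visitors_per_arm)
        if conv_b > conv_a and p < alpha:
            lift = (conv_b - conv_a) / conv_a if conv_a else float("inf")
            winners.append((test_id, lift, p))
    return winners


if __name__ == "__main__":
    published = simulate_published_winners()
    print(f"{len(published)} of 100 tests look like wins, "
          "even though no variation was truly better.")
    for test_id, lift, p in published:
        print(f"  test {test_id}: +{lift:.0%} lift, p = {p:.3f}")
```

With a two-sided 5% threshold, roughly 2 or 3 of every 100 such no-difference tests will show B "winning" by chance. Only those would make it onto a blog, while the rest quietly disappear.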

Obviously, 99 case studies about A/B tests that produced no results are unexciting and would bore our blog readers. So this post is a short reminder of all the tests that never become case studies because they didn't produce results.


Why publish A/B testing case studies at all

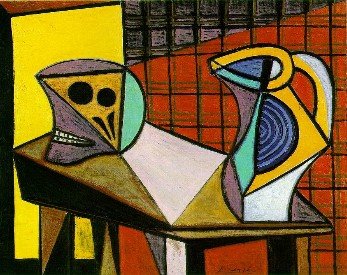

We publish A/B testing case studies to show what can be achieved with A/B testing. It is like holding an exhibition of Picasso's paintings: it inspires people to appreciate the work, and perhaps to pick up a brush themselves, hoping to reach the level Picasso reached. To reiterate, just as picking up a brush and canvas does not guarantee good art, doing A/B testing does not guarantee an increase in sales.

In fact, with A/B tests specifically, replicating someone else's result almost never works. So, if you read a case study showing how a video increased conversions by 46% and then add a video to your site hoping for the same magical increase, it may or may not happen. What worked for them may not work for you! So why publish case studies at all? Here's why:

A/B testing case studies give ideas on what you can test on your website

Yep, that’s all there is to A/B testing case studies.

And I hope I have convinced you that A/B testing is not snake oil!