The Fidelity of Experimentation

Modern A/B testers, specifically those who work on web-based products, are surprisingly privileged in one regard that often escapes their awareness. Contrast their work with that of an experimenter designing the outer frame of a car to maximize its top speed. The designer would start by proposing some designs on paper. Then she would load the exact design into a computer simulator that would estimate the top speed the design can achieve. She would try to make the simulator as close as possible to the real world, but it would still miss many finer nuances. She would iterate over multiple designs and optimize the top speed in the simulator before moving ahead.

Finally, when she is fairly confident, she would have a prototype built and probably field-test the design. Unexpected things might happen in the field test: maybe the ride is too noisy, or the suspension is too wobbly at higher speeds. All of these would need to be corrected by returning to the simulator and iterating again. Only after multiple iterations between the simulator and the field would the car go into production and be tested in the real world.

The modern A/B tester in that analogy is like someone who thinks of a change to the car design, rolls out the new design directly to a group of buyers, and waits to see what happens. Such a privilege of experimenting directly with the real world has always been rare in the history of experimentation. The technical term for this privilege is fidelity.

In the context of scientific research, fidelity is defined as the extent to which an experiment mimics the real-world scenario in which its results will eventually be applied. Experiments that are executed in simple and abstract environments distinct from the real world are low-fidelity. Experiments that are run in conditions closely resembling the real world are high-fidelity. In this blog post, I will describe the tradeoffs of fidelity and present a few pointers on what modern experimenters can take away from the concept.

Tradeoffs in Fidelity

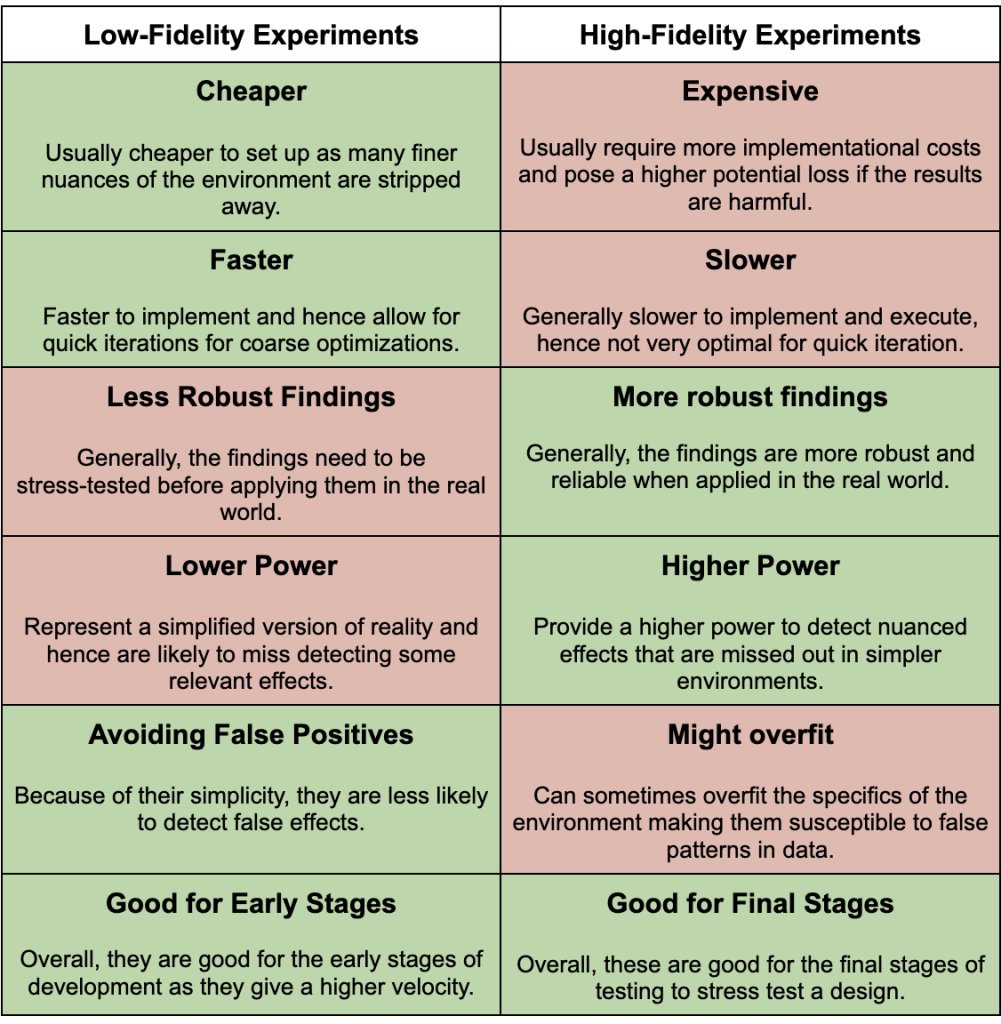

At first glance, it seems that a higher-fidelity design would always be better, since the results of a high-fidelity experiment are more likely to be accurate. However, there is a tradeoff between fidelity and cost, and ideally, a mix of low-fidelity and high-fidelity experiments should be run for an optimal experimentation journey. The following table shows the tradeoffs between a low-fidelity and a high-fidelity experiment.

Implications of Fidelity to Modern A/B Testing

Modern A/B testers have the unspoken privilege of being able to run experiments at close to 100% fidelity, because the costs of high fidelity in the online domain are negligible. Even so, there are many ideas that modern experimenters can learn from the concept of fidelity. Some of them are as follows:

- Always gauge the fidelity of your experiments: It is always wise to judge how the experimental environment will differ from the one in deployment. Suppose you are running an experiment on an apparel website over 2 months in summer. By the time the change is deployed, the season might have changed and the experimental results might no longer apply. Or maybe your experimentation software adds too much memory overhead, which increases the page load latency for your visitors. Consider all such factors and how they might make the results differ from the real world.

- Build simpler versions of features at a higher velocity: It is often not useful to develop an overly optimized version of a feature to begin with. Think about faster ways to iterate and do not worry about building things from the bottom up. It is always better to make small and quick changes from the top down and find areas where efforts pay off more.

- Run targeted experiments to achieve higher fidelity: Sometimes you might be including visitors in a campaign who never end up seeing the change that you have made. For instance, if you have made a change at the bottom of a page, then including all the visitors to the page adds noise to the result. Think carefully about who will actually be exposed to the change you have made, and for the highest fidelity, include only those visitors in your test.

- Run painted door experiments and smoke tests: In the early stages of feature development, it is often valuable to test a feature without developing it at all. Suppose you have five feature ideas in mind for providing value to the customer. You can place a button for each of these features in your product before building the features themselves. If a user clicks on a button, you can show a simple “feature under development” message. Based on how many visitors actually click on each button, you learn in advance which feature idea is the most valuable to work on. These experiments are called painted door experiments and are a great use of low-fidelity design.

- Run experiments on different levels of fidelity: To optimize between speed and accuracy, it is always better to maintain multiple levels of fidelity in your experimentation journey. When you are trying to find the needle in the haystack, prefer stripping down specifications and running an experiment at lower costs. When trying to stress test a design, try to bring it as close as possible to the real-world deployment environment.
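
The point about targeted experiments can be illustrated with a little arithmetic. The sketch below is only an illustration under assumed numbers: the visitor counts, the 30% exposure share, and the 5% and 6% conversion rates are all hypothetical, and the counts are expected values rather than simulated data. It compares a pooled two-proportion z-statistic computed over every visitor to the page with one computed only over visitors who reached the changed section.

```python
from math import sqrt


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-statistic for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / se


# Hypothetical inputs, chosen only for illustration.
VISITORS_PER_ARM = 10_000   # visitors landing on the page in each arm
EXPOSED_SHARE = 0.30        # share who scroll far enough to see the change
BASE_RATE = 0.05            # conversion rate without the change
LIFTED_RATE = 0.06          # conversion rate among visitors who saw the change

exposed = round(VISITORS_PER_ARM * EXPOSED_SHARE)
unexposed = VISITORS_PER_ARM - exposed

# Expected conversions (no randomness, to keep the arithmetic transparent).
control_exposed_conv = round(exposed * BASE_RATE)
variant_exposed_conv = round(exposed * LIFTED_RATE)
unexposed_conv = round(unexposed * BASE_RATE)  # same in both arms: they never saw the change

# Analysis 1: every visitor to the page is included.
z_all = two_proportion_z(
    control_exposed_conv + unexposed_conv, VISITORS_PER_ARM,
    variant_exposed_conv + unexposed_conv, VISITORS_PER_ARM,
)

# Analysis 2: only visitors who were actually exposed to the change.
z_exposed = two_proportion_z(
    control_exposed_conv, exposed,
    variant_exposed_conv, exposed,
)

print(f"z (all visitors):     {z_all:.2f}")
print(f"z (exposed visitors): {z_exposed:.2f}")
```

Under these assumptions, the exposed-only comparison yields the larger z-statistic even though it uses fewer visitors, because the unexposed visitors dilute the lift without contributing any signal. For the comparison to stay fair, exposure has to be defined the same way in both arms, for example by scroll depth rather than by anything the variant itself triggers.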

Conclusion

Early computers had a high integration between the hardware and the software. Replacing pieces of hardware was difficult, migrating code from one machine to the other was difficult, and making changes to code required one to disassemble the machine entirely. Somewhere along the line, engineers were able to separate the software and the hardware layer entirely. In effect, it meant that you could use the same machine and get it to do anything you want based on the dynamic instructions you wrote.

Today, this has led to web-based apps being increasingly flexible and robust. You can make changes (and revert them) quickly at negligible cost, and thousands of visitors across the globe can directly see the changes on their own devices. This is exactly why running high-fidelity experiments is so cheap in modern A/B testing.

No other domain has enjoyed the privilege of fidelity to this extent. Science has never been made available with such degrees of ease.