The Arbitrary, The Random and The Pseudorandom

Does generating randomness seem hard? You could simply teach a parrot to mindlessly recite a sequence of 0s and 1s. A layman would consider that sequence perfectly random, because it seems too unlikely that it follows any particular pattern. Statistically, however, the stream of numbers the parrot produces is not random at all; it is arbitrary.

From a statistician’s point of view, there is a huge difference between random and arbitrary. Randomness guarantees some properties that arbitrariness cannot. If you randomly generate 0s and 1s with equal probability 1000 times, randomness guarantees only a vanishingly thin chance of ending up with 400 zeros and 600 ones; in fact, there is roughly a 99% chance that the number of 1s will fall between 460 and 540. Can arbitrariness guarantee that? What if the parrot says more 1s just because they are easier to say?
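The figures above follow from the binomial distribution. This is a minimal sketch that computes them exactly with Python's `math.comb`, assuming each draw is an independent, fair 0 or 1; the helper names are ours.

```python
from math import comb

N = 1000  # number of fair 0/1 draws, as in the example above


def prob_ones_between(lo: int, hi: int, n: int = N) -> float:
    """Exact binomial probability that the number of 1s lies in [lo, hi]."""
    favourable = sum(comb(n, k) for k in range(lo, hi + 1))
    # Python's int / int is correctly rounded, even for these huge integers.
    return favourable / 2 ** n


def prob_at_least(k_min: int, n: int = N) -> float:
    """Probability of seeing k_min or more 1s in n fair draws."""
    return prob_ones_between(k_min, n, n)


print(f"P(460 <= ones <= 540) = {prob_ones_between(460, 540):.4f}")
print(f"P(ones >= 600)        = {prob_at_least(600):.2e}")
```

It should report roughly 0.99 for the 460 to 540 band, and a probability well below one in a hundred million for 600 or more 1s. A parrot offers no such guarantee.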

In common parlance, we often mix up two terms: arbitrary and random. Something arbitrary is generated by a process that is unknown, but not necessarily random. Something random is generated by a process whose statistical behaviour is understood, for example one in which every digit is equally likely to be 0 or 1, independently of the digits before it. In that sense, randomness is a stricter condition than arbitrariness.

Randomness plays a central role in experimentation, as it forms the basis of Randomized Controlled Trials. Most implementation challenges around randomness have now been solved, and you can generally rely on modern random number generators. However, as an experimenter, understanding the potential risks and how we got here helps in building a broader vision of experimental design. This blog post explains how we generate the randomness that is used in our experiments.

The Emergence of Synthetic Randomness

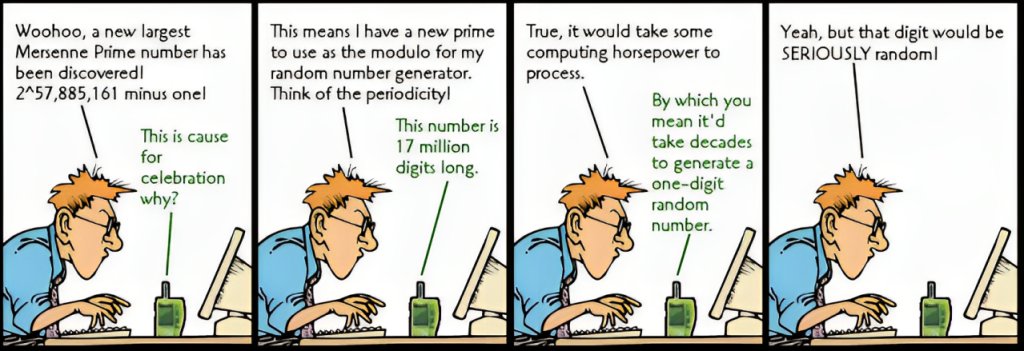

If you think about the nuanced difference between randomness and arbitrariness, you are forced to ask whether anything in our world is truly random. A coin toss has close to random properties, but if you could measure all the physical variables involved (the force of the flip, the size of the coin, and the distance to the ground), you would probably be able to predict the exact outcome of the toss. Hard determinists believe that, given enough computing power and information, nothing is truly random; processes that cannot be broken down beyond a certain point are simply treated as random for the sake of simplicity. A sequence of numbers that comes from a deterministic process but exhibits the statistical properties of randomness is called pseudo-random. Pseudo-randomness forms the basis of most computer-generated random numbers today.

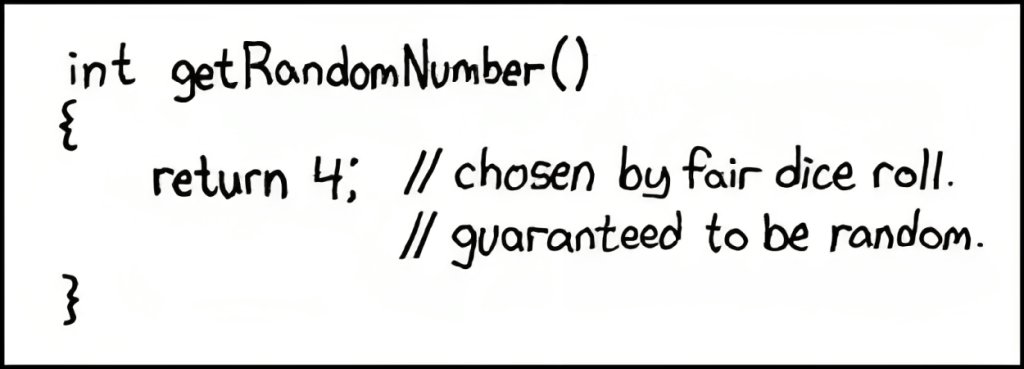

From a physicist’s perspective, quantum mechanics has shown that true randomness does exist in nature. From a computer scientist’s perspective, however, algorithms still cannot generate truly random numbers. When an algorithm has to generate a sequence of random numbers, it faces a conundrum: a fixed sequence of instructions starting from a given input always produces the same output. Hence, if the input to the algorithm can be found, anyone can regenerate the exact same sequence, and the sequence ceases to be random in any practical sense. This input is known as the seed of the random number generator.
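To make the seed's role concrete, here is a toy linear congruential generator (LCG), one of the simplest pseudo-random algorithms. It is an illustrative sketch, not what modern libraries use: the class and helper names are ours, and the constants a = 1664525, c = 1013904223, m = 2^32 are the widely quoted Numerical Recipes values.

```python
class LCG:
    """Toy linear congruential generator: state <- (A * state + C) mod M."""

    A = 1664525
    C = 1013904223
    M = 2 ** 32

    def __init__(self, seed: int) -> None:
        # The seed is the only input; it fully determines every later output.
        self.state = seed % self.M

    def next_bit(self) -> int:
        self.state = (self.A * self.state + self.C) % self.M
        # Return the top bit: the low-order bits of a power-of-two-modulus LCG are weak.
        return self.state >> 31


def bits(seed: int, n: int) -> list[int]:
    """First n bits produced from the given seed."""
    gen = LCG(seed)
    return [gen.next_bit() for _ in range(n)]


print(bits(42, 16))
print(bits(42, 16) == bits(42, 16))  # True: same seed, same sequence
```

Modern generators such as the Mersenne Twister use far better state transitions, but they share this property: whoever knows the seed can replay the whole sequence.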

You can try to think of various ingenious ways to generate a random seed, but you will soon realize that the problem cascades: generating a random seed itself requires a source of randomness. All synthetic randomness produced by modern algorithms needs a seed to start with, and that seed can at best be arbitrary. Computer scientists working on randomized algorithms are well aware of the seed and how it is used. Some programs derive it from the system time, some from network traffic parameters, and some from mouse movements or keystrokes entered by the user.
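As a rough illustration of where seeds come from in practice, the sketch below seeds Python's `random.Random` from the system clock and from `os.urandom`, the operating system's entropy source. It assumes a CPython environment; the variable names are ours, and network traffic or user input as seed sources are not shown.

```python
import os
import random
import time

# Seed source 1: the system clock (nanoseconds since the epoch).
seed_from_clock = time.time_ns()

# Seed source 2: the operating system's entropy source, which on many systems
# is fed by hard-to-predict hardware events such as interrupt timings.
seed_from_os = int.from_bytes(os.urandom(8), "big")

rng_clock = random.Random(seed_from_clock)
rng_os = random.Random(seed_from_os)

# With no argument, Python seeds from OS randomness when it is available
# and falls back to the system time otherwise.
rng_default = random.Random()

# Recording the seed lets you replay the exact same stream later.
first_draw = rng_clock.random()
replayed_draw = random.Random(seed_from_clock).random()
print(first_draw == replayed_draw)  # True
```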

The underlying algorithms do the heavy lifting of converting this arbitrary seed into reliable random numbers. For all practical purposes, you can comfortably use modern random number generators to get a stream of trustworthy pseudo-random numbers without having to worry about the seed.
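To see that the generator, not the seed, does the heavy lifting, this sketch feeds Python's built-in `random.Random` (a Mersenne Twister) five deliberately unremarkable consecutive seeds. It then measures the share of 1s in 10,000 bits from each seed. The function name is ours. The exact shares vary slightly by seed but should all sit close to 0.5.

```python
import random


def share_of_ones(seed: int, draws: int = 10_000) -> float:
    """Fraction of 1s among `draws` single random bits from a seeded generator."""
    rng = random.Random(seed)
    return sum(rng.getrandbits(1) for _ in range(draws)) / draws


# Consecutive small integers are about as "arbitrary" as seeds get.
for seed in range(5):
    print(seed, round(share_of_ones(seed), 3))
```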

Implications in Modern A/B Testing

Modern A/B testing engines use pseudo-random numbers to assign visitors to control or treatment (variation). Sound experiment design demands that certain properties of this randomized assignment procedure always hold; when these properties are violated, the reliability of the results is compromised. Fabijan et al. outline three such properties:

- The visitors should be equally likely to land in the control group or the variation group.

- A visitor, once assigned to a group, should be assigned to the same group if they revisit the experiment.

- Visitor allocations in different experiments should not be correlated with each other.

The first property follows from proper randomizer design, and the second can easily be handled by storing the assignment in a cookie on the visitor's machine. However, the third is sometimes violated in organizations that run a large number of experiments in parallel. If a list of seeds is used to generate these random numbers and two experiments end up with the same seed, then the user assignments for both experiments will be exactly the same (assuming the unique user ID is used to generate the assignment). It is therefore recommended to change the list of seeds regularly to avoid correlated assignment behaviour.
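The seed-collision problem can be reproduced with a small sketch. It assumes, hypothetically, that the engine derives each assignment by hashing an experiment seed together with the user ID. SHA-256 from `hashlib` stands in for whatever randomizer a real engine uses, and `assign`, the seed strings, and the user IDs are all made up for illustration.

```python
import hashlib


def assign(user_id: str, seed: str) -> str:
    """Hypothetical assignment rule: hash (seed, user_id) into one of two groups."""
    digest = hashlib.sha256(f"{seed}:{user_id}".encode("utf-8")).digest()
    # The lowest bit of the first digest byte acts as a fair coin per (seed, user) pair.
    return "treatment" if digest[0] & 1 else "control"


users = [f"user-{i}" for i in range(10_000)]


def treatment_share(seed: str) -> float:
    """Property 1: roughly half of all users should land in treatment."""
    return sum(assign(u, seed) == "treatment" for u in users) / len(users)


def agreement(seed_a: str, seed_b: str) -> float:
    """Share of users who receive the same group in two experiments."""
    return sum(assign(u, seed_a) == assign(u, seed_b) for u in users) / len(users)


# Property 2: the same user and seed always map to the same group.
print(assign("user-7", "exp-A") == assign("user-7", "exp-A"))  # True
print(round(treatment_share("exp-A"), 3))
# Property 3 violated: a reused seed copies the assignment exactly.
print(agreement("exp-A", "exp-A"))  # 1.0
# Distinct seeds: agreement near 0.5, i.e. no correlation for a 50/50 split.
print(round(agreement("exp-A", "exp-B"), 3))
```

Because the hash is deterministic, this scheme also keeps returning visitors in their group without relying on a cookie. The same determinism is why a reused seed silently duplicates an entire allocation.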

Conclusion

To a layman, the distinctions between the arbitrary, the random, and the pseudo-random are nuances that hardly matter in practice, but when they do matter, they matter a great deal. Randomization is the source of trust in an experiment, and hence understanding these nuances is crucial for the modern experimenter. Understanding the beautiful properties of randomness has also given rise to an interesting health check in modern A/B testing.

A Sample Ratio Mismatch (SRM) check tests whether visitors are being split into control and variation in line with the intended randomization procedure. If the observed split deviates from the expected one by more than chance would plausibly allow, the SRM indicates that something is wrong with the setup of the experiment. For instance, if you have 1000 visitors in your test and the randomizer allocates each visitor to control or variation with a 50% probability, a 400/600 split is highly unlikely. In such a scenario, the SRM alarm goes off, letting you know that the test results are not trustworthy.
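An SRM check is commonly implemented as a chi-square goodness-of-fit test of the observed counts against the configured split. The sketch below assumes a two-group test. The function names and the 0.001 alarm threshold are illustrative choices, not a standard. With one degree of freedom, the chi-square tail probability reduces to `math.erfc(sqrt(x / 2))`, so no statistics library is needed.

```python
import math

SRM_THRESHOLD = 0.001  # hypothetical alarm threshold on the p-value


def srm_p_value(control: int, treatment: int, expected_control_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for a two-group split."""
    if not 0 < expected_control_share < 1:
        raise ValueError("expected_control_share must be strictly between 0 and 1")
    total = control + treatment
    expected_control = total * expected_control_share
    expected_treatment = total - expected_control
    chi2 = ((control - expected_control) ** 2 / expected_control
            + (treatment - expected_treatment) ** 2 / expected_treatment)
    # For 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


def has_srm(control: int, treatment: int, expected_control_share: float = 0.5,
            threshold: float = SRM_THRESHOLD) -> bool:
    """True when the observed split is too unlikely under the intended allocation."""
    return srm_p_value(control, treatment, expected_control_share) < threshold


print(has_srm(400, 600))  # True: the 400/600 split from the example above
print(has_srm(510, 490))  # False: well within normal random variation
```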

Randomization is a double-edged sword. It confounds observers who try to study the patterns of nature. However, once understood, it can be used in ingenious ways to design intelligent solutions to complex practical problems.