Experimentation, Data Science, and Statistics at VWO

In February 2019, I joined VWO, an A/B testing platform, as a data scientist. Our A/B test reports were powered by Bayesian statistics. Bayesian statistics are, by design, much more computationally expensive because they involve integrals over random variables. The most efficient way to calculate these integrals was Monte Carlo simulation. A Monte Carlo simulation draws millions of synthetic samples from the underlying random variables and evaluates the mathematical expression for each sample. It then averages all the results to return an accurate estimate. At the time, we were drawing 7 million samples to ensure an accuracy of 0.001% in our statistics.
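
To make the Monte Carlo idea concrete, here is a minimal sketch of one such integral: the probability that variant B's conversion rate exceeds variant A's. It assumes a conversion-rate metric, uniform Beta(1, 1) priors and the hypothetical function name `prob_b_beats_a`. It illustrates the technique and is not VWO's actual engine.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, samples=100_000, seed=0):
    """Monte Carlo estimate of P(p_B > p_A).

    Assumes (hypothetically) Beta(1, 1) priors, so each posterior is
    Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(samples):
        # Draw one synthetic conversion rate per variant from its posterior.
        p_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        p_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        # Evaluate the expression (an indicator here) for this sample.
        wins += p_b > p_a
    # The average over all samples approximates the integral.
    return wins / samples
```

For example, `prob_b_beats_a(50, 1000, 65, 1000)` estimates the chance that B beats A when A converted 50 of 1,000 visitors and B converted 65 of 1,000. Increasing `samples` shrinks the Monte Carlo error at the cost of proportionally more computation.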

A coworker had recently made an astute observation that was almost too good to believe. Even with as few as 70,000 samples, the results stayed well within the desired range of error. But it was only an observation made over multiple simulations, so he was on the lookout for a proof. He wanted a proof that could convince our mentor, Paras (founder of VWO), that the heuristic would stand the test of time.

So one day, we sat late into the night trying to understand Hoeffding's inequality, the mathematical theorem that had been used to derive the 7 million figure. We broke down every term in the equation, how it related to VWO's system, and how it affected the sample size. Along the way, we made a surprising observation. Monte Carlo simulations did require 7 million samples, but only at the start of an experiment. As more and more visitors were collected, the Hoeffding bound could be adjusted, and accurate statistics could be obtained with exponentially fewer samples, going as low as 1,000. We wrote down a mathematical proof and scrutinized it for errors. We then ran many simulations to verify our findings computationally.
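
As a sketch of how a Hoeffding-style bound turns into a sample size, take the standard two-sided form for the average of n independent samples that each lie in an interval of width (b − a): P(|mean − μ| ≥ ε) ≤ 2·exp(−2nε²/(b − a)²). The exact ε, δ and adjustment behind the 7 million figure are not given here. The function name and parameter values below are therefore hypothetical placeholders.

```python
import math


def hoeffding_samples(eps, delta, value_range=1.0):
    """Smallest n with 2 * exp(-2 * n * eps**2 / value_range**2) <= delta.

    eps:         allowed absolute error of the Monte Carlo average
    delta:       allowed probability of exceeding that error
    value_range: width (b - a) of the interval each sample lies in
    """
    # Solve the Hoeffding bound for n and round up to a whole sample.
    return math.ceil(value_range**2 * math.log(2 / delta) / (2 * eps**2))
```

Because `value_range` enters squared and `eps` enters as 1/ε², the required n is very sensitive to both. Halving the range of the averaged quantity cuts n by a factor of four. This only illustrates that sensitivity; it does not claim which term the team adjusted.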

All in all, we made the core Bayesian statistical engine at VWO over 100 times faster in calculation time per hit. The idea was never on the roadmap, and there was no leadership push for the work. A simple idea, tested on a synthetic simulation, led us to exponentially improve a core part of our product. Two years later, a teammate of mine had a similar breakthrough. They reduced the whole algorithm to a single equation, which gave it another 100x speedup.
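
The equation my teammate derived is not reproduced here. For illustration, one well-known closed form replaces the sampling loop for the "B beats A" probability. It is exact when both conversion rates have Beta posteriors and B's first Beta parameter is an integer. The sketch below implements that standard formula with log-Beta terms for numerical stability; the function names are hypothetical.

```python
from math import exp, lgamma, log


def log_beta(a, b):
    """Logarithm of the Beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def prob_b_beats_a_exact(alpha_a, beta_a, alpha_b, beta_b):
    """Exact P(p_B > p_A) for p_A ~ Beta(alpha_a, beta_a), p_B ~ Beta(alpha_b, beta_b).

    alpha_b must be a positive integer, which holds when alpha = 1 + conversions.
    """
    total = 0.0
    for i in range(alpha_b):
        # Each term is B(alpha_a + i, beta_a + beta_b) /
        # ((beta_b + i) * B(1 + i, beta_b) * B(alpha_a, beta_a)), computed in log space.
        total += exp(
            log_beta(alpha_a + i, beta_a + beta_b)
            - log(beta_b + i)
            - log_beta(1 + i, beta_b)
            - log_beta(alpha_a, beta_a)
        )
    return total
```

With 50 of 1,000 conversions in A and 65 of 1,000 in B under uniform priors, the call would be `prob_b_beats_a_exact(51, 951, 66, 936)`. The loop runs `alpha_b` times, so its cost scales with the number of conversions rather than with a Monte Carlo sample count.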

That is just how the data science team works at VWO. We are a team of three people guided by some of the best mentors. We have learned a lot about experimentation and its underlying statistics. We have done so by tweaking and playing around with statistical equations, and by running complex multi-dimensional simulations to test our hypotheses. This post describes how we learned, what we learned about experimentation, the path we have taken in research, and the challenges we have faced.

Pushing the Statistical Envelope

Data science research has many faces. Much of the work in the field revolves around learning algorithms, deep learning approximations of the ground truth, and sophisticated modeling of real-world problems. At VWO, our journey and our curiosities demanded something different from us. Our learnings in experimentation have come from three core pillars of the data science team.

- The nuances of statistical depth: At VWO, we have rejoiced in statistical conversations, paradoxes of randomness, and the surprises of discovering novel experimentation errors. Armed with computing power, we could always demonstrate the efficacy of a heuristic or a learning approach empirically. However, we have always looked for mathematical proofs of our observations and dug deep into the nature of the random variables that define our problems. This has repeatedly led us to novel insights for molding statistical methods to our advantage. It has also helped us tell convincing stories about why our algorithms work (or fail).

- Large-Scale Simulations: Early in our work, we developed a habit of thinking about everything in terms of multi-dimensional matrices. All the code we wrote assumed that the underlying variables could be anything from a single number to a complex multi-dimensional matrix representing different scenarios. Our speedup since 2019 (almost 10,000x, as described in the introduction) amplified this habit. Together, they let us run vast simulations on our laptops and then summarize the results in multiple ways to understand the properties of experimentation statistics. In essence, we gained the power to synthetically simulate billions of A/B tests and see how our statistics behave in each case and how often they are wrong.

- Tailoring research to customer needs: For geeks like us, almost any problem in statistics or mathematics brings an insatiable gleam to our eyes. We owe it to our founder, Paras Chopra, for teaching us to think about which problems matter to the customer and which do not. We have extensively looked for metrics that our customers care about to guide our work. In our journey, we have found different kinds of accuracy errors that plague experimental statistics. We have actively tried to choose which ones matter to the customer and which do not. Every proposal we have made has first tried to answer what we are optimizing for and to justify the causal pathway that should lead to customer benefit.
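
The habit of writing code that works equally for a single number or a whole grid of scenarios maps naturally onto NumPy broadcasting. The sketch below uses a hypothetical function name and parameters. It simulates thousands of A/A tests for every combination of baseline conversion rate and visitor count in one vectorized pass. It then counts how often B is wrongly declared the winner. It assumes uniform Beta(1, 1) priors and keeps the code short by replacing the exact "chance to beat" with a normal approximation of the two posteriors. It is not VWO's engine.

```python
import numpy as np


def posterior_mean_var(conv, n):
    """Mean and variance of a Beta(1 + conv, 1 + n - conv) posterior (uniform prior assumed)."""
    a = 1.0 + conv
    b = 1.0 + n - conv
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1.0))
    return mean, var


def aa_false_positive_rate(base_rates, visitors, n_tests=10_000, threshold_z=1.6449, seed=0):
    """Share of simulated A/A tests where B is declared a winner.

    Returns an array of shape (len(base_rates), len(visitors)).
    """
    rng = np.random.default_rng(seed)
    p = np.asarray(base_rates, dtype=float)[:, None, None]   # axis 0: scenarios (R, 1, 1)
    n = np.asarray(visitors, dtype=np.int64)[None, :, None]  # axis 1: sample sizes (1, V, 1)
    shape = (p.shape[0], n.shape[1], n_tests)                # axis 2: repeated tests
    # Both arms share the same true rate, so every declared win is a false positive.
    conv_a = rng.binomial(n, p, size=shape)
    conv_b = rng.binomial(n, p, size=shape)
    mean_a, var_a = posterior_mean_var(conv_a, n)
    mean_b, var_b = posterior_mean_var(conv_b, n)
    # Normal approximation: P(p_B > p_A) > 0.95 is equivalent to z > ~1.645.
    z = (mean_b - mean_a) / np.sqrt(var_a + var_b)
    return (z > threshold_z).mean(axis=-1)
```

With a single look at the data, each cell should land roughly near 5%, since a one-sided 95% threshold is in effect a 5% test. Further scenario dimensions, such as uplifts or repeated peeks, can be added as extra axes in the same way.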

I consider the above the three core pillars of our research in statistics. In this blog, I intend to discuss a major part of our explorations in statistics. I will take the reader through our journey in detail, describing the simulations and our inferences from them.

Learning about Experimentation

While we in the data science team have not run experiments in the traditional A/B testing framework, we have regularly worked with the core principles of experimentation and developed a deep understanding of its philosophy. Below, I highlight our journey into learning the broader context of experimentation.

- Doing Experimentation: Almost every problem we have tackled started with studying the available literature and then forming hypotheses on how to solve it. We then designed experiments that could prove us wrong and reveal the true nature of the statistics. Next, we replaced our hypotheses with better ones and repeated the whole process. We have realized that this journey is an endless one and that we can only play a part in it. We believe this is the heart of experimentation in any domain of science. You can never be 100% correct, but you can devise experiments to find out where you are 100% wrong.

- Learning about Experimentation: We have also read about experimentation at length, from books like Experimentation Works by Stefan Thomke to The Book of Why by Judea Pearl. We also took Ronny Kohavi's class, Accelerating Innovation with A/B Testing, to learn from the experts. We have read through the experimentation posts on the Netflix Research blog and research papers on statistical significance and the history of experimentation. Overall, we have followed the principle that whatever we don't know has probably been probed by someone else around the globe. So we have always chosen to learn before reinventing the wheel.

- Writing about Experimentation: To consolidate our learnings and explain them to our peers, we have written regularly about experimentation. Our writing ranges from research findings and mathematical proofs to stories that explain an algorithm and educational pieces, and we have focused extensively on a culture of writing. Writing has allowed us to build intuition, come up with ideas, and develop a long-term, retrievable memory of all the research we have done. Today, we have a corpus of writing that we can tailor and share with the outside world in the form of a blog.

- Talking about Experimentation: We have also given talks on experimentation and led interesting discussions with fellow experimenters across the globe. Over time, we have refined our understanding of our audience. Through engaging conversations, we have also learned about the most pressing issues in experimentation today. Armed with these learnings, we now aim to keep talking about experimentation and to build a community of experimenters. This blog is an attempt to do just that.

With this blog, I aim to weave everything we have learned about experimentation into a cogent narrative that throws light on its most important concepts. In the next blog post, I will lay out how I plan to explore different topics within a coherent hierarchical structure.

Conclusion

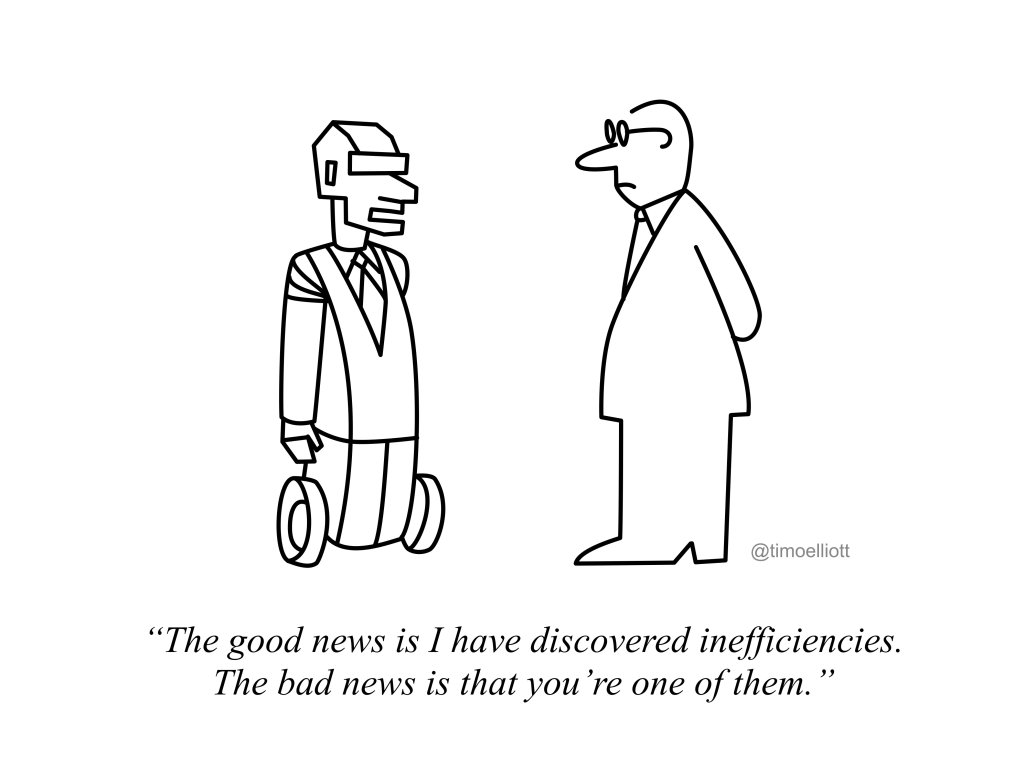

It was 2021, and we were trying to explain why our A/A tests showed a significant win rate. A teammate of mine had observed that false positive rates inflate irrespective of the uplift in the test, and he argued this point with me persistently. The observation so contradicted our entire understanding of the Bayesian engine that I was naive enough to argue back without any data. I was trying to propose a solution, but I kept feeling that I could not explain my idea. Throughout, he understood what I was saying. Yet he kept telling me that my solution did not solve the problem and merely circumvented it. The conversation ended with me frustrated, and probably him as well.

I sat down at my laptop and wrote a simulation to prove my point, which took no more than half an hour. When the results came in, it dawned on me how wrong I had been and what he had been trying to convey all along. My teammate was younger than me in age and experience. Even though it was hard, I made sure to tell him out loud that I was wrong. I still remember the episode two years later because it was a deeply humbling experience for me.

The biggest thing we have learned about statistics in the past three years is that statistics is more slippery than you can imagine. Statistics will cheat you where you least expect it. Statistics will not warn you before leading you down a path to ruin. Statistics will not even utter a word after you fall deep into a pit of misconception. It can be days, months, or even years before you realize that you were deceived. You need to learn to hold the beast by the neck and rely on first-principles truths to make sense of anything once randomness gets involved.

The only way to move forward in experimentation is to be aware that no matter how confident you feel, you can be wrong. You need to be constantly on the lookout for where you are wrong and then replace your beliefs with something better. That is the spirit of experimentation and the way to survive in an uncertain world.